Improve PHP Web App Performance Using Memcached

In the first part of this series, I stepped through how to create a Markdown-powered blog in PHP using the Slim Framework. In this, the second part in the series, you're going to learn how to use Memcached with PHP to improve the application's performance.

Let's begin!

Prerequisites

You need the following to follow this tutorial:

- Some familiarity with caching, Markdown, YAML, and the Twig templating engine.

- Some familiarity with the Standard PHP Library (SPL).

- PHP 7.4+ (ideally version 8) with the Memcached extension installed and enabled.

- Access to a Memcached server.

- Composer installed globally.

Why use caching?

While the initial version works perfectly well, its performance would peak reasonably quickly. On every request, the blog data is aggregated from a collection of Markdown files with YAML frontmatter in the application's filesystem, and the article data has to be parsed out before the blog data can be rendered.

To reduce complexity, the blog articles contain only a minimum amount of information, that being: the publish date, slug, synopsis, title, main image, categories, and the article's content.

This parsed data is then used to hydrate a series of entities (MarkdownBlog\Entity\BlogItem) that model a blog article. Following entity hydration, any unpublished articles (based on their publish date) are filtered out, with the remaining articles sorted in reverse publish date order.

While reading files from the application's filesystem isn't the most performant approach, it's also not, necessarily, a performance killer. The deployment server may be fitted out with properly optimised and tuned hardware, such as NVMe technology.

However, regardless of the hardware the host uses, and how well it's optimised, executing the full Read > Parse > Hydrate > Render pipeline on each request is inefficient and wasteful.

It's far more efficient to execute the full pipeline only once and store the aggregated data in a cache. Then, on every subsequent request, until the cache expires, the application only needs to read the aggregated data and render it. Doing so results in notably lower page load times, enabling the application to handle far more users, and reducing both hosting costs and its impact on the environment.
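To make the "run once, then read from the cache until it expires" idea concrete, here is a minimal, standalone sketch. It uses a plain PHP array instead of Memcached, and the names aggregateArticles and remember, the 60-second lifetime, and the timestamps are all hypothetical; none of them come from the application's code.

```php
<?php

declare(strict_types=1);

// Hypothetical stand-in for the expensive Read > Parse > Hydrate steps.
// $runs counts how many times the pipeline was actually executed.
function aggregateArticles(int &$runs): array
{
    $runs++;

    return ['first-post', 'second-post'];
}

// Return the cached value while it is still fresh; otherwise rebuild and store it.
// $cache is a plain array here, and $now is passed in so the expiry is easy to follow.
function remember(array &$cache, string $key, int $ttl, int $now, callable $build)
{
    if (isset($cache[$key]) && $cache[$key]['expires'] > $now) {
        return $cache[$key]['value'];
    }

    $value = $build();
    $cache[$key] = ['value' => $value, 'expires' => $now + $ttl];

    return $value;
}

$cache = [];
$runs = 0;
$build = function () use (&$runs): array {
    return aggregateArticles($runs);
};

$first  = remember($cache, 'articles', 60, 1000, $build); // cache miss: pipeline runs
$second = remember($cache, 'articles', 60, 1030, $build); // still fresh: served from cache
$third  = remember($cache, 'articles', 60, 1061, $build); // expired: pipeline runs again
```

In this sketch the pipeline runs on the first and third calls only; the second call is answered from the cache because the entry has not yet expired.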

The refactoring will be broken into three parts:

- Refactor the article data aggregation process to allow for easier caching integration.

- Add a Memcached service to the application's DI (Dependency Injection) container.

- Integrate the Memcached service into the article data aggregation process.

Clone the source code

To get started, clone the application's source code to your development machine, change into the cloned directory, and check out the 1.0.0 tag by running the following commands.

Install the required dependencies

Now, you need to add two additional dependencies, so the application can cache the aggregated blog article data in Memcached; these are:

- laminas-cache: This is a broad caching implementation which hands off specific cache implementation to an underlying adapter library.

- laminas-cache-storage-adapter-memcached: This handles the interaction with Memcached.

To install them, run the commands below, in the project's root directory.

Integrate caching

If you look at public/index.php, you'll see that the blog data is initially aggregated in a DI service named ContentAggregatorInterface::class.

Then, it's sorted and filtered in the default route's handler definition.

This approach works. However, it makes implementing caching problematic, because the sorting and filtering processes run on every request, removing most of the caching benefits.

Refactoring the application so that caching can be implemented efficiently requires changes in several places, but not all that many.

Refactor BlogItem to be serializable

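The first thing to do is to refactor MarkdownBlog\Entity\BlogItem so that it serialises cleanly. The reason is that its publishDate member variable is a DateTime object. PHP's native serialize() can handle DateTime, but some built-in PHP classes (Closure, for example) cannot be serialised at all, and storing the date as a plain string keeps the cached entity to simple scalar values that behave the same way regardless of which serialiser the cache adapter uses.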

So, the first things to do are to:

- Refactor publishDate to be a string.

- Refactor the getPublishDate method to return a DateTime object.

To do that, in src/Blog/Entity/BlogItem.php, change publishDate's type to be a string, as in the example below.

Then, refactor the populate method, removing the special initialisation case for publishDate, by replacing it with the code below.

Finally, update the getPublishDate method to match the code below, so that it returns a DateTime object initialised with publishDate.
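As a rough illustration of the three changes above, the sketch below uses a hypothetical, trimmed-down class named BlogItemSketch with only two properties. It is not the project's actual BlogItem, and its populate logic is an assumption; it only shows the shape of the idea: store the date as a string, and build the DateTime object on demand.

```php
<?php

declare(strict_types=1);

// Hypothetical, trimmed-down stand-in for MarkdownBlog\Entity\BlogItem.
final class BlogItemSketch
{
    // The publish date is stored as a plain string, e.g. "2021-06-01".
    private string $publishDate = '';
    private string $title = '';

    // Assumed populate logic: copy known keys onto matching properties,
    // with no special case for publishDate.
    public function populate(array $data): void
    {
        foreach ($data as $key => $value) {
            if (property_exists($this, $key)) {
                $this->$key = (string) $value;
            }
        }
    }

    // The DateTime object is created only when it is asked for.
    public function getPublishDate(): DateTime
    {
        return new DateTime($this->publishDate);
    }

    public function getTitle(): string
    {
        return $this->title;
    }
}

$item = new BlogItemSketch();
$item->populate(['publishDate' => '2021-06-01', 'title' => 'Hello, Memcached']);

// Round-trip the entity through PHP's native serialiser, as a cache would.
$copy = unserialize(serialize($item));
```

After the round trip, $copy is a separate BlogItemSketch instance whose getPublishDate() still returns a DateTime for the stored date.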

Refactor ContentAggregatorFilesystem

The next thing to do is to update src/Blog/ContentAggregator/ContentAggregatorFilesystem.php, to do two things:

- Move the item sorting to the getItems() method.

- Create a new method, named getPublishedItems(), that only retrieves published items.

First, update getItems() to match the version below.

Then, add the getPublishedItems() method below, after getItems().
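To illustrate the sorting and filtering described in this section, here is a minimal, standalone sketch built on two SPL classes, ArrayIterator and CallbackFilterIterator. The sample items are hypothetical and are plain arrays rather than BlogItem entities, so treat it as one possible approach, not as the project's actual getItems() and getPublishedItems() implementations.

```php
<?php

declare(strict_types=1);

// Hypothetical sample data: each item has a title and a publish date string.
$items = new ArrayIterator([
    ['title' => 'Old post',    'publishDate' => '2020-01-15'],
    ['title' => 'Future post', 'publishDate' => '2999-01-01'],
    ['title' => 'Recent post', 'publishDate' => '2021-06-01'],
]);

// Sort in reverse publish date order (newest first).
$items->uasort(
    fn (array $a, array $b): int =>
        new DateTime($b['publishDate']) <=> new DateTime($a['publishDate'])
);

// Keep only the items whose publish date is not in the future.
$now = new DateTime();
$published = new CallbackFilterIterator(
    $items,
    fn (array $item): bool => new DateTime($item['publishDate']) <= $now
);

// Collect the titles of the published items, in sorted order.
$titles = [];
foreach ($published as $item) {
    $titles[] = $item['title'];
}
```

Because the sorting happens once on the underlying iterator, the filter only has to decide, item by item, whether something is published yet.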

Then, add the following use statements to the top of the file.

Refactor the DI container

Now, it's time to refactor the DI container. To do that, in public/index.php, replace the ContentAggregatorInterface::class service with the following definition.

Then, add the following use statements to the top of the file.

The revised code starts off by retrieving the application's cache from a yet-to-be-defined service named cache. Then, it checks if the cache has an item named articles, which will contain the aggregated articles.

If so, it returns the cached item. If not, the full content aggregation pipeline is executed, the aggregated BlogItem entities are stored in the application's cache, and then they're returned.
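The check-then-populate flow described above can be sketched without Memcached at all. The example below uses a hypothetical in-memory class, ArrayCacheSketch, that only mimics the has, get, and set methods; loadArticles and the sample data are also assumptions made for illustration, not code from the application.

```php
<?php

declare(strict_types=1);

// Hypothetical in-memory cache that mimics has/get/set; it stands in for
// the real cache service purely for illustration.
final class ArrayCacheSketch
{
    private array $data = [];

    public function has(string $key): bool
    {
        return array_key_exists($key, $this->data);
    }

    public function get(string $key)
    {
        return $this->data[$key] ?? null;
    }

    public function set(string $key, $value): bool
    {
        $this->data[$key] = $value;

        return true;
    }
}

// Return the cached articles if present; otherwise aggregate, store, and return them.
function loadArticles(ArrayCacheSketch $cache, callable $aggregate): array
{
    if ($cache->has('articles')) {
        return $cache->get('articles');
    }

    $articles = $aggregate();
    $cache->set('articles', $articles);

    return $articles;
}

$cache = new ArrayCacheSketch();
$calls = 0;

// Hypothetical stand-in for the full content aggregation pipeline.
$aggregate = function () use (&$calls): array {
    $calls++;

    return ['first-post', 'second-post'];
};

$first  = loadArticles($cache, $aggregate); // aggregates and stores
$second = loadArticles($cache, $aggregate); // read straight from the cache
```

The aggregation callable runs only on the first call; the second call finds the articles item in the cache and returns it directly.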

Add a Memcached service to the application's DI container

Next, you need to add the cache service (cache) which the refactored content aggregation service (articles) depends upon. To do that, in public/index.php, add the code below after the articles service.

Then, replace <<MEMCACHED_SERVER_HOSTNAME>> with the hostname, or IP address, of your Memcached server.

After adding the new DI service, add the following use statements to the top of the file.

The code specifies the Memcached server to connect to, in $resourceManager. Then, it initialises a Memcached object, which handles interaction with the Memcached server, passing to it $resourceManager.

Following that, it uses that object to initialise and return a SimpleCacheDecorator object. This object isn't strictly necessary. However, it provides a simple (PSR-16) interface to the Memcached object, one you might be used to if you've implemented caching previously; i.e., methods such as get, set, has, and delete.

Refactor the default route's handler

The final thing to do, in public/index.php, is to update both route definitions. First, replace the default route with the following code.

The route retrieves the articles DI service, calls getPublishedItems() to retrieve the published items, and sets the retrieved items as the items template variable. This makes the route definition a lot more succinct, and doesn't mix several concerns together.

Next, replace the second route definition, with the following code.

This change is required so that it also references the articles DI service, instead of the ContentAggregatorInterface::class service that it replaced.

Test the changes

With all the changes made, start the application by running the following command in the project's root directory.

Then, in your browser of choice — before loading the application — open a new tab or window. Then, open the Developer Tools and open the Network tab.

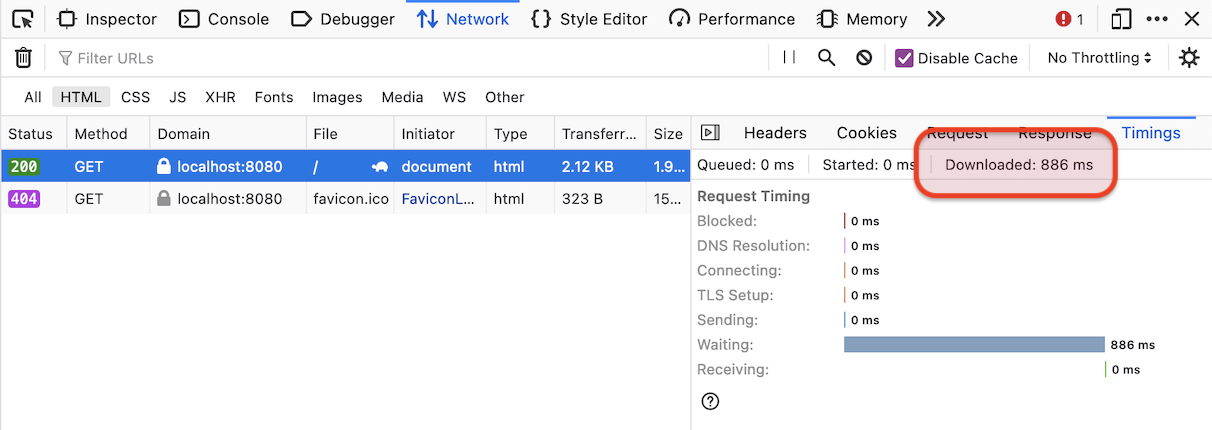

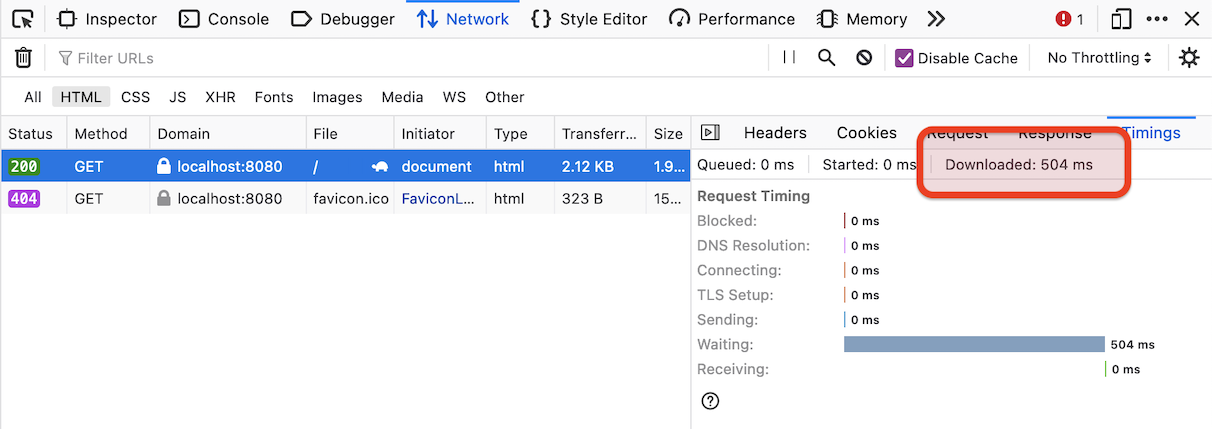

Now, open http://localhost:8080. After the page finishes loading, select the HTML request and, in the right-hand side pane, change to the Timings tab.

Take note of the "Downloaded" time. With this being the first request, no cached content was available, so the full content aggregation process would have been completed, and the result stored in Memcached.

Now, reload the page. The download time should be notably less than for the original request, because the content was retrieved from the cache, not aggregated.

By using caching, the second page load is about 75% faster than the first load, where the cache was not warmed up.
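For reference, here is how a figure like that can be worked out from two timings. The numbers in this sketch (400 ms and 100 ms) are hypothetical and chosen only to make the arithmetic easy to follow; they are not measurements from the application, and percentReduction is a helper name invented for the example.

```php
<?php

declare(strict_types=1);

// Percentage by which the second load time is lower than the first.
function percentReduction(float $firstMs, float $secondMs): float
{
    return ($firstMs - $secondMs) / $firstMs * 100;
}

// Hypothetical timings: an uncached first load and a cached second load.
$reduction = percentReduction(400.0, 100.0);
```

With these sample values, the cached load takes a quarter of the time of the uncached one, which is a 75% reduction in load time.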

That's how to improve PHP web app performance using Memcached

Sometimes, as in this application, despite the performance benefits that it brings, caching isn't immediately possible. However, allowing for your particular use-case, with a modest amount of refactoring, you can take advantage of it, and start handling significantly more visitors.

Keen to improve the performance of the application even further? Here are five excellent ways.

Matthew Setter is a PHP and Go editor in the Twilio Voices team, and a PHP and Go developer. He’s also the author of Mezzio Essentials and Deploy With Docker Compose. You can find him at msetter@twilio.com. He's also on LinkedIn and GitHub.
