What you can do with data observability

Monitor, analyze, and troubleshoot events at every stage of data delivery.

How it works

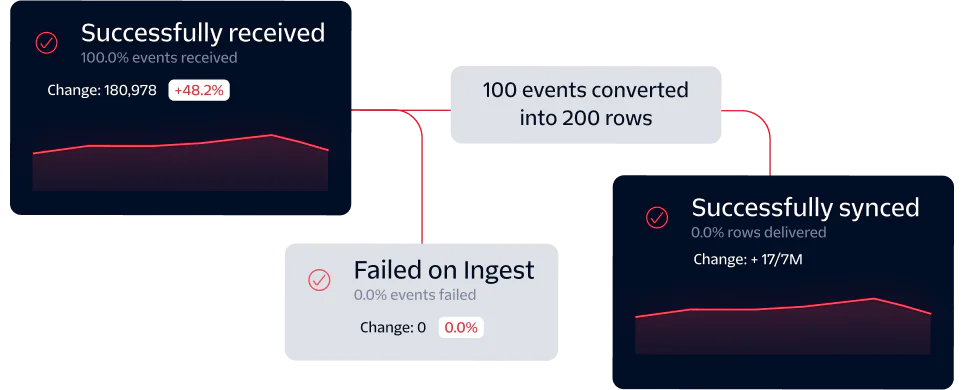

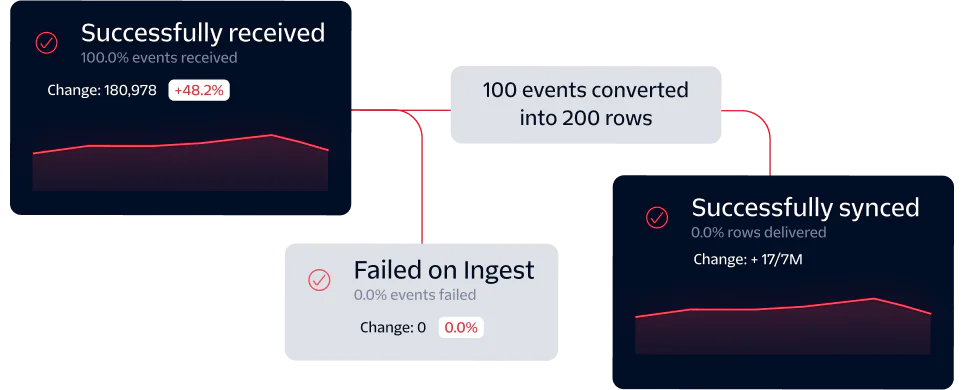

Step 1

Inspect what happens to events at every stage of delivery

Track every stage of event delivery, from the moment Segment ingests an event, through Source and Destination Filters, to whether the event was ultimately delivered successfully. Make sure data reaches downstream tools reliably so that every team can trust the data it activates.

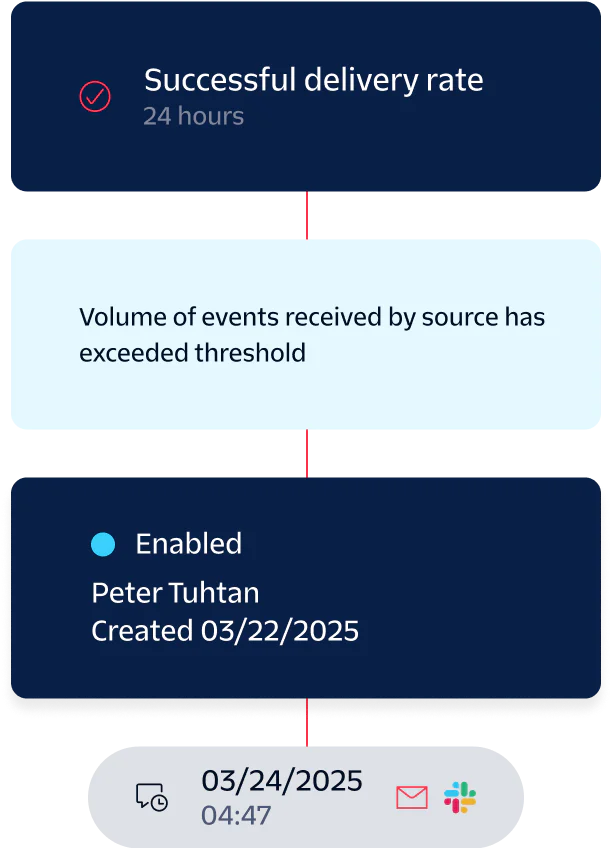

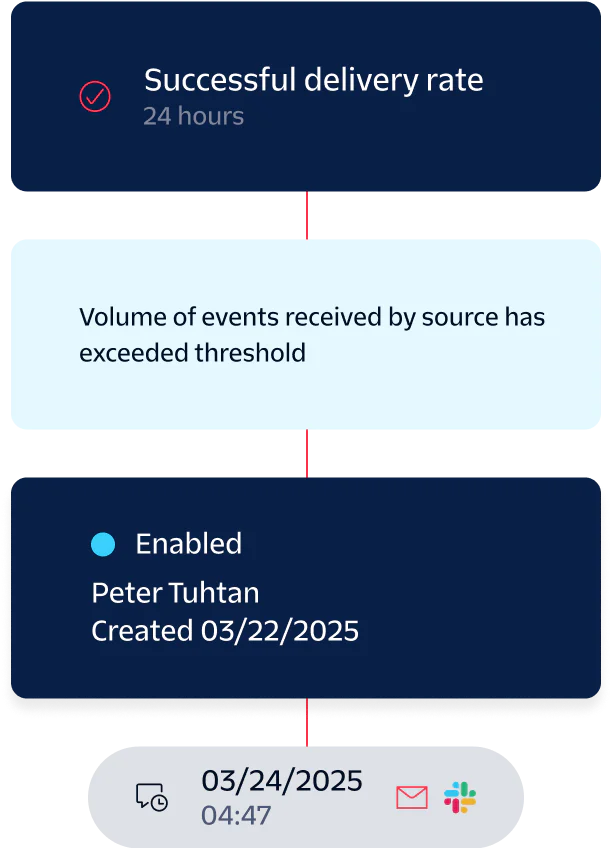

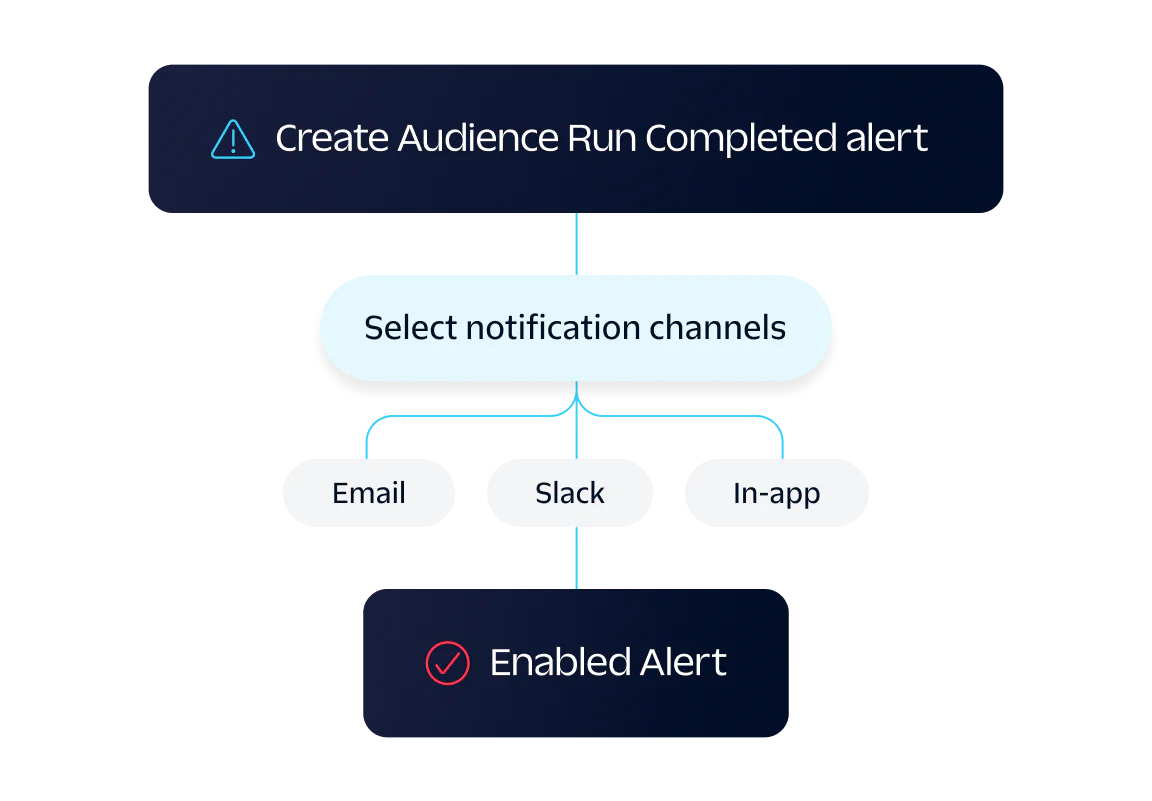

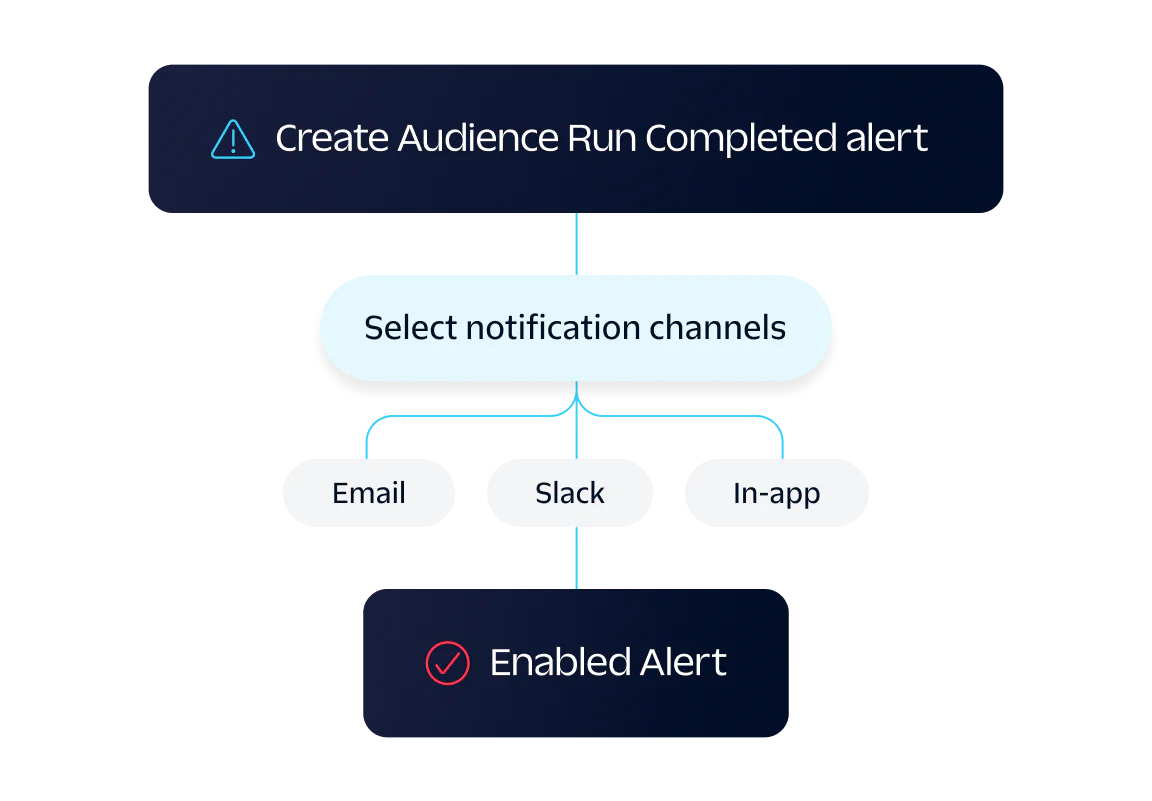

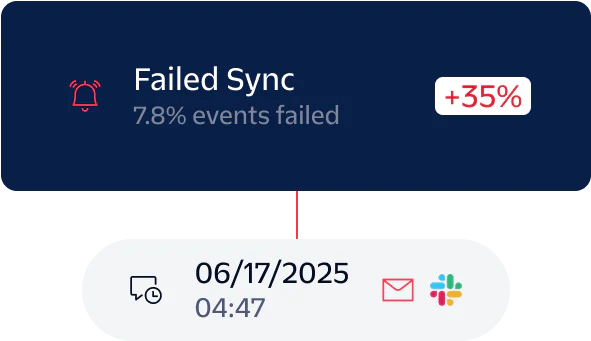

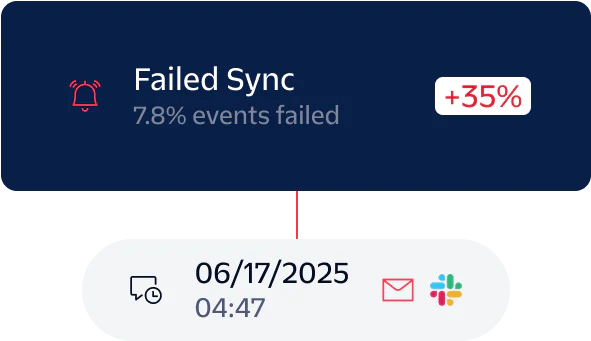

Step 2

Monitor the performance of the pipeline with dynamic alerts

Configure customizable alerts that notify the right people on their preferred notification channels before anomalies can degrade the performance of your data pipeline. You can also review every alert across your entire workspace in one central dashboard.

Step 3

Troubleshoot quickly with comprehensive event logs

Analyze event outcomes at a granular level with comprehensive logs that pinpoint exactly what happened to your events and why. No more spending hours trying to figure out why events were dropped or discarded.

Get started with Data Observability

Data Observability supports features across the entire Customer Data Platform, including all Destination types, Reverse ETL, and Twilio Engage. To learn which Observability capabilities are available for each feature, see our documentation.