Building a Chatbot with OpenAI's GPT-3 engine, Twilio SMS and Python

A GPT-3 chatbot is a software application that is able to conduct a conversation with a human user through written or spoken language. The level of “intelligence” among chatbots varies greatly. While some chatbots have a fairly basic understanding of language, others employ sophisticated artificial intelligence (AI) and machine learning (ML) algorithms to achieve an almost human conversational level.

In this tutorial I’m going to show you how easy it is to build a chatbot for Twilio SMS using the OpenAI platform and the Flask framework for Python.

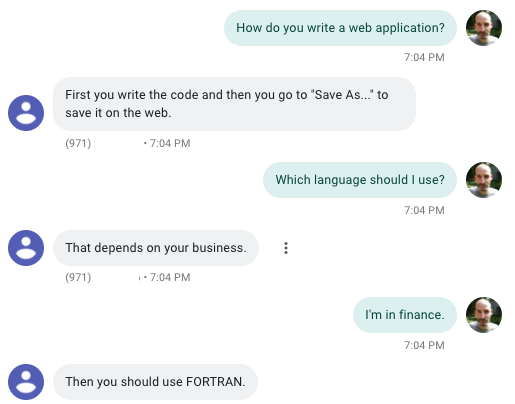

Want to have an idea of what the capabilities of GPT-3 are? Take a look at the following interaction I had with this bot using the project we are going to build in this tutorial:

Note that I have a similar tutorial to this one using the ChatGPT API.

Introduction to GPT-3

GPT-3 (Generative Pre-trained Transformer 3) is a highly advanced language model trained on a very large corpus of text. In spite of its internal complexity, it is surprisingly simple to operate: you feed it some text, and the model generates some more, following a similar style and structure.

What do you think of the first paragraph of this article? Would you believe me if I told you that I did not write it myself, and that it was fully generated by GPT-3? I fed the model the title and introduction of two other blog posts I have written in the past, and then I added the title of this article. Here is the complete input I provided:

Note how the first two blocks of text have “Title” and “Summary” lines, but the third entry has an empty summary. When the GPT-3 engine looks at this input it understands that it needs to complete the “Summary” line with text that is appropriate for the given title. In my opinion it did this quite well!

GPT-3 is non-deterministic, in the sense that given the same input, multiple runs of the engine will return different responses. I actually generated a few opening paragraphs with the above input and selected the one that I liked the best to start the article. Here are a couple more that deserve to be shown:

Hard to believe, right? As amazing as this seems, you have to be careful, because the engine does not have a full understanding of the text it generates and can provide misleading responses. Consider these other two opening paragraphs that it also produced from my input:

While the first is really good in terms of structure, it has a big factual error: Google did not develop this technology. The second example not only gets the GPT-3 acronym wrong, but is also equal parts ridiculous and funny!

Implementing a Chatbot with GPT-3

How can we make use of this cool technology? Building a chatbot, of course!

We can “prime” the engine with one or two example interactions between the user and the AI to set the tone of the bot. We then append the actual prompt from the user at the end, and let the engine generate the response.

Here is an example initial query that would work for a chatbot:

The exchange in the first two lines is made up. It serves the purpose of letting GPT-3 know that we want alternating lines that start with “Human:” and “AI:” and that the AI is going to answer questions. The third line is the actual first question from the user.

When I fed this input to the engine, I received the following text back:

So far so good. Now let’s say the user wants to ask another question. Here we have the option of incorporating this last interaction into the input, as this will help GPT-3 have a better context of the conversation. Here is what could be the input for a followup question:

You can probably see why expanding the input with the previous question and answer was a good idea. The user is now asking about “he”, assuming the chatbot will remember that the previous question was about Tom Hanks.

Luckily GPT-3 rises to the challenge. Here is the response:

Ready to build a chatbot based on this simple algorithm? Let’s do it.
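Before moving on, here is a minimal sketch of the prompt-building idea described in this section. The priming exchange, the sample questions and the build_prompt() name are all assumptions made up for illustration; only the alternating “Human:” and “AI:” layout comes from the description above.

```python
# Hypothetical priming exchange: the wording is an assumption for illustration.
PRIMER = (
    "Human: Hello, who are you?\n"
    "AI: I am an AI assistant. How can I help you today?\n"
)


def build_prompt(question, chat_log=PRIMER):
    """Return the chat log followed by the new question and an open "AI:" line."""
    return f"{chat_log}Human: {question}\nAI:"


# First question: only the primer comes before it.
first_prompt = build_prompt("Who played Forrest Gump in the movie?")

# Follow-up: the previous question and a stand-in answer are now part of the
# log, which is what gives the engine a chance to work out who "he" is.
log = PRIMER + "Human: Who played Forrest Gump in the movie?\nAI: Tom Hanks.\n"
second_prompt = build_prompt("How old is he?", log)
print(second_prompt)
```

Note that every prompt ends with a bare AI: line, so the most natural continuation for the engine is the bot's next answer.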

Tutorial requirements

To follow this tutorial you need the following items:

- Python 3.6 or newer. If your operating system does not provide a Python interpreter, you can go to python.org to download an installer.

- A smartphone that can send and receive SMS.

- A Twilio account. If you are new to Twilio click here to create a free account now and receive $10 credit when you upgrade to a paid account. You can review the features and limitations of a free Twilio account.

- An OpenAI API key. Request beta access here.

Create a Python virtual environment

Following Python best practices, as a first step in creating the chatbot we are going to create a separate directory for the project, and inside it we are going to create a virtual environment. Then we are going to install the Python packages that we need for our chatbot on it.

If you are using a Unix or Mac OS system, open a terminal and enter the following commands to do the tasks described above:

For those of you following the tutorial on Windows, enter the following commands in a command prompt window:

The last command uses pip, the Python package installer, to install the five packages that we are going to use in this project, which are:

- The OpenAI Python client library, to send requests to the OpenAI GPT-3 engine.

- The Twilio Python Helper library, to work with SMS messages.

- The Flask framework, to create the web application.

- The python-dotenv package, to read a configuration file.

- The pyngrok package, to temporarily make our web application available on the Internet.

Configuration

As mentioned above, this project requires an API key from OpenAI. At the time I’m writing this, the only way to obtain one is by being accepted into their private beta program. You can apply on their site.

The Python application will need to have access to this key, so we are going to create a .env file in which to put it. The application will then import it from this file as an environment variable.

Create a .env file in your project directory (note the leading dot) and enter a single line of text containing the following:

You will learn how to work with this file in the next section. If you plan on putting your project under source control, make sure this file is excluded, as you would not want to accidentally share your OpenAI key.

Sending GPT-3 requests from Python

In this section we are going to create the support code that will allow us to work with the OpenAI GPT-3 engine. The code will be stored in a file called chatbot.py. Below you can see the initialization section of this file:

The load_dotenv() function imports any variables stored in a .env file as environment variables. Note how we use the OPENAI_KEY variable in the following line to initialize OpenAI with the key. The completion variable holds the actual client to the engine. This is the object we will use to send queries.

I also added a start_chat_log variable, containing the two lines that prime the engine. Once the bot is up and running I encourage you to try different interactions in this variable to see how the bot changes its responses accordingly.
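If the key is missing from the environment, the first sign of trouble is usually a confusing authentication error later on. As a small optional sketch, a helper like the one below can fail early instead. It assumes load_dotenv() has already run, and the get_openai_key() name is my own invention for this example, not part of any library.

```python
import os


def get_openai_key(environ=None):
    """Return the OpenAI key from the environment, or fail with a clear error."""
    if environ is None:
        environ = os.environ
    # OPENAI_KEY is the variable name used in the .env file.
    key = environ.get("OPENAI_KEY", "").strip()
    if not key:
        raise RuntimeError("OPENAI_KEY is not set; check your .env file")
    return key
```

The optional environ argument is only there so the function can be tried with a plain dictionary, without touching the real environment.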

Let’s now write a function that makes a GPT-3 query. Add the following function at the bottom of chatbot.py:

The ask() function takes the question from the user as a first argument, followed by an optional chat log. If the chat log is not provided then the function uses start_chat_log instead.

The prompt variable is built to contain the chat log, followed by the question from the user, which is prefixed with Human: . After the user question we add a final line reading just AI:, which is what gives the GPT-3 engine the cue to generate a response to the user’s question.

The completion.create() function is where the request to the GPT-3 engine is actually made. This function takes a number of arguments, which are used to configure how the engine should complete the text. Here is a brief description of these arguments:

- prompt: the input text.

- engine: OpenAI has made four text completion engines available, named davinci, ada, babbage and curie. We are using davinci, which is the most capable of the four.

- stop: As I mentioned earlier, the GPT-3 engine does not really understand text, so when it completes text it needs to know when to stop. By giving a stop of Human: we are telling the engine to just generate text for the line that begins with AI:. Without a stop marker GPT-3 would continue generating text by writing more lines for both the user and the AI.

- temperature: a number between 0 and 1 that determines how many creative risks the engine takes when generating text.

- top_p: an alternative way to control the originality and creativity of the generated text.

- frequency_penalty: a number between 0 and 1. The higher this value, the harder the model tries not to repeat itself.

- presence_penalty: a number between 0 and 1. The higher this value, the harder the model tries to talk about new topics.

- max_tokens: maximum completion length.
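To make the list above more concrete, the sketch below collects these arguments into a dictionary. The numeric values are assumptions I picked for illustration and are worth experimenting with; the completion_options() name is hypothetical as well. No request is sent by this code.

```python
def completion_options(prompt):
    """Collect the arguments described above into one dictionary."""
    return {
        "prompt": prompt,          # chat log + user question + "AI:" line
        "engine": "davinci",       # the most capable of the four engines
        "stop": ["Human:"],        # stop before the engine writes the user's next line
        "temperature": 0.9,        # assumed value: fairly creative
        "top_p": 1,                # assumed value
        "frequency_penalty": 0,    # assumed value: no penalty for repetition
        "presence_penalty": 0.6,   # assumed value: nudge toward new topics
        "max_tokens": 150,         # assumed value: cap on completion length
    }


options = completion_options("Human: Hello\nAI:")
print(options["engine"], options["stop"])
```

A dictionary like this could then be expanded into keyword arguments when calling the function, as in completion.create(**options).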

These are not the only possible options, so I recommend you review the OpenAI reference documentation to learn about more ways to configure your request.

The response from the completion engine is an object that has a choices attribute, which is a list of completions. We haven’t requested multiple completions, so the list is going to have a single element. This element is a Python dictionary with a text key that contains the generated text. Our function takes this text, removes any leading or trailing whitespace and returns it back to the caller. As mentioned above, consult the API documentation for information on other data items included in the GPT-3 response.
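The extraction step can be tried in isolation with a stand-in object that has the shape just described. This is only a sketch: the fake response below is built by hand, not returned by the engine, and extract_answer() is a hypothetical name.

```python
from types import SimpleNamespace


def extract_answer(response):
    """Return the text of the first completion, without surrounding whitespace."""
    return response.choices[0]["text"].strip()


# Hand-made stand-in: an object with a choices list holding one dictionary.
fake_response = SimpleNamespace(choices=[{"text": " Tom Hanks.\n"}])
print(extract_answer(fake_response))  # prints: Tom Hanks.
```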

Let’s start a Python shell and play with the ask() function:

Pretty cool, right? What we are missing is the second part of our algorithm, in which we append a question and its response to the chat log, so that we can use it in the following question. We can implement a second function to update the chat log:

This function takes a question and an answer, and adds them at the bottom of the chat log. The updated chat log is returned.
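A minimal sketch of such a function is shown below, assuming the chat log is a plain string of alternating lines. The function name and the priming text in START_CHAT_LOG are assumptions for this example.

```python
# Hypothetical priming text, used when no chat log exists yet.
START_CHAT_LOG = (
    "Human: Hello, who are you?\n"
    "AI: I am an AI assistant. How can I help you today?\n"
)


def append_interaction_to_chat_log(question, answer, chat_log=None):
    """Return the chat log with one more question/answer pair at the bottom."""
    if chat_log is None:
        chat_log = START_CHAT_LOG
    return f"{chat_log}Human: {question}\nAI: {answer}\n"


log = append_interaction_to_chat_log("Who are you?", "I am a bot.")
log = append_interaction_to_chat_log("Are you sure?", "Quite sure.", log)
print(log)
```

Because the function returns a new string instead of modifying anything in place, the caller decides where the updated log is stored.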

Now we can have a conversation in which the context is preserved. Try the following in a new Python shell session:

These two functions are all we need to manage our chat. In the next sections we are going to integrate them with Twilio SMS messaging.

Configure Twilio Programmable Messaging

Next we are going to configure a Twilio SMS-enabled phone number for the chatbot to use. If you haven’t created a free Twilio account yet, create it now and log in.

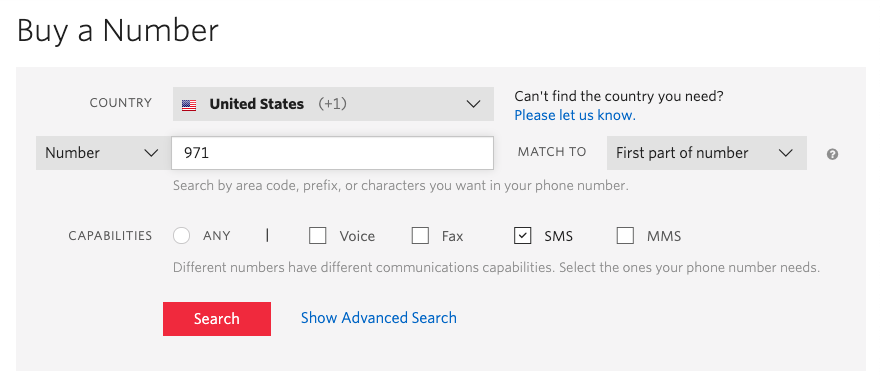

From the Twilio Console, select Phone Numbers, and then click on the red plus sign to buy a Twilio number. In the Buy a Number screen you can select your country and check SMS in the capabilities field. If you’d like to request a number from your region, you can enter your area code in the Number field.

Click the “Search” button to see what numbers are available, and then click “Buy” for the number that you like from the results. After you confirm your purchase, click the “Close” button. Note that if you are using a trial account, the funds for this purchase come from your trial credit.

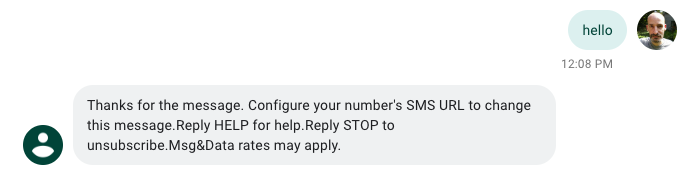

Congratulations, you now have your very own programmable phone number! Grab your smartphone and send a text message to your new number to make sure that it is working. You should receive a response instructing you to configure your number, which you will do next.

The Twilio SMS API uses a webhook to notify an application when there is an incoming message. The automated response that you received when you texted your Twilio number actually comes from a default webhook that is installed by Twilio on your number. The chatbot application will have a new webhook that is going to replace the default one.

With the Flask framework, it is extremely easy to define a webhook. Below is a skeleton application with a webhook definition. Copy the following code into a new file called app.py:

This code creates a Flask application that has an endpoint with the URL /bot. When Twilio is configured to know about this endpoint, it will send a request each time an SMS is received on the phone number you purchased earlier. The application can read the incoming message, do some processing, and generate a response, which is returned at the end of the function.
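As a side note that is separate from app.py, it can help to see what a reply to Twilio looks like on the wire. Twilio webhooks are commonly answered with a small XML document called TwiML, which the helper library normally builds for you. The sketch below builds one by hand with the standard library only; the sms_reply() name is hypothetical, and I am assuming the simplest single-message reply.

```python
from xml.sax.saxutils import escape


def sms_reply(text):
    """Build a TwiML document that asks Twilio to reply with the given text."""
    return (
        '<?xml version="1.0" encoding="UTF-8"?>'
        # escape() protects characters such as & and < inside the message.
        f"<Response><Message>{escape(text)}</Message></Response>"
    )


print(sms_reply("Hello from the bot & friends"))
```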

You can start the above application as follows:

The application is now running on your computer at the http://localhost:5000 URL, but it is not accessible from the Internet. The next step is to provision a temporary public URL that we can give to Twilio so that it can send requests. This work is done by the ngrok tool. Leave the application running and open a second terminal. Activate the virtual environment on this terminal as you have done on the first at the start of the tutorial and then enter the following command:

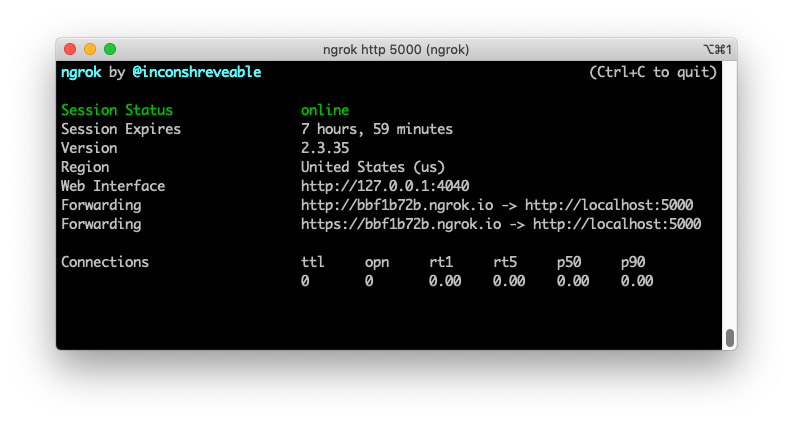

You should see a screen similar to this:

Note the lines beginning with “Forwarding”. These show the public URLs that ngrok uses to redirect requests into our service, both in http:// and https:// format. What we need to do now is tell Twilio to send incoming SMS notifications to this URL.

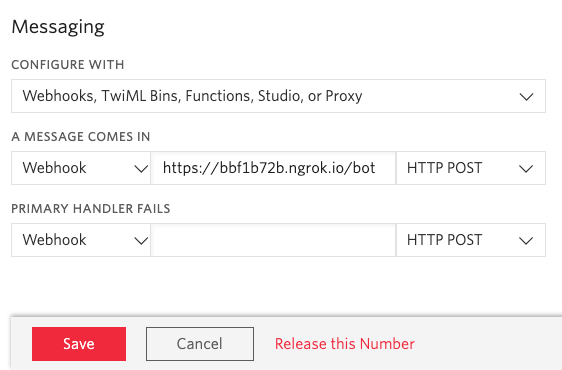

Go back to the Twilio Console, click on Phone Numbers, and then on the phone number that you purchased above. Scroll down to the “Messaging” section, copy the https:// URL from the ngrok output and then paste it on the “A message comes in” field. The endpoint that we created above is exposed under the /bot URL, so /bot needs to be appended at the end of the root ngrok URL. Make sure the request method is set to HTTP POST. Don’t forget to click the blue Save button at the bottom of the page to record these changes.

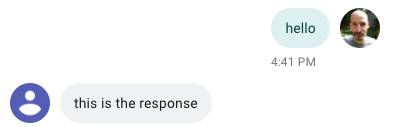

Send another SMS to your Twilio phone number and you will now get the response that comes from the Flask application:

Now we know how to run the GPT-3 engine, and how to receive and reply to SMS messages with Twilio. In the next section, we will integrate the two and have a complete chatbot project.

SMS chatbot with OpenAI and Twilio

Let’s expand app.py to send user messages to the ask() function that we created above, and to maintain the log of the chat. Here are the updated contents of app.py:

The most convenient place to store the chat log with a user is in the session variable from Flask, which uses an HTTP cookie for storage. Twilio works similarly to a web browser in this respect, and will accept, store (for up to four hours) and resend cookies during a conversation with a user. If there are two or more users chatting with the bot at the same time, each will have their own cookie.

Since session cookies are signed, the Flask application needs to have a secret key configured. For security, you should replace the placeholder value I used for the secret key with something unique.
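One simple way to come up with a unique value is to generate it randomly. The sketch below uses the secrets module from the Python standard library; the generate_secret_key() wrapper and the 32-byte length are my own choices for this example.

```python
import secrets


def generate_secret_key(num_bytes=32):
    """Return a random hexadecimal string suitable for use as a signing key."""
    return secrets.token_hex(num_bytes)


# Run this once and store the result, for example in the .env file.
print(generate_secret_key())
```

The key should be generated once and kept stable, because changing it invalidates the session cookies that were signed with the old value.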

The bot() endpoint begins by obtaining the user message as before, and the chat log from the session. The session object uses the familiar dictionary syntax, so session.get() will return a previously stored chat log if it exists or None if this user does not have a session. This is convenient because the functions in chatbot.py use the standard chat log if we pass None.

Calling the ask() function works exactly as before. We pass the user message and the chat log and we get back the completion from the GPT-3 engine, which is our answer.

The question and the answer are then appended to the chat log and the updated chat log is saved back to the user session so that in the next interaction with the user the complete chat history is used.

To end the function, the answer is returned as the response, and this will send it back to the user over SMS.
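The flow of the endpoint can also be exercised without Flask, Twilio or the engine. The sketch below is an approximation under several assumptions: a plain dictionary plays the role of the Flask session, and fake_ask() and fake_append() are hypothetical stand-ins for the two functions in chatbot.py.

```python
def handle_message(incoming_msg, session, ask, append_interaction):
    """Answer one message and store the updated chat log in the session."""
    chat_log = session.get("chat_log")  # None on the user's first message
    answer = ask(incoming_msg, chat_log)
    session["chat_log"] = append_interaction(incoming_msg, answer, chat_log)
    return answer


# Stand-ins so the sketch runs without the engine (both are hypothetical).
def fake_ask(question, chat_log=None):
    return f"You said: {question}"


def fake_append(question, answer, chat_log=None):
    return f"{chat_log or ''}Human: {question}\nAI: {answer}\n"


session = {}  # a plain dict playing the role of Flask's session
handle_message("Hi", session, fake_ask, fake_append)
handle_message("Bye", session, fake_ask, fake_append)
print(session["chat_log"])
```

After the two calls the session holds both exchanges, which is exactly the history that the next prompt would be built from.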

Ready to try your new bot? If you are still running your Flask application, stop it with Ctrl-C, and then run it again so that it incorporates the latest updates. If you are not running ngrok anymore, go to your second terminal and restart it, and then follow the instructions in the previous section to update your webhook URL in the Twilio Console, since ngrok creates a different URL every time it runs.

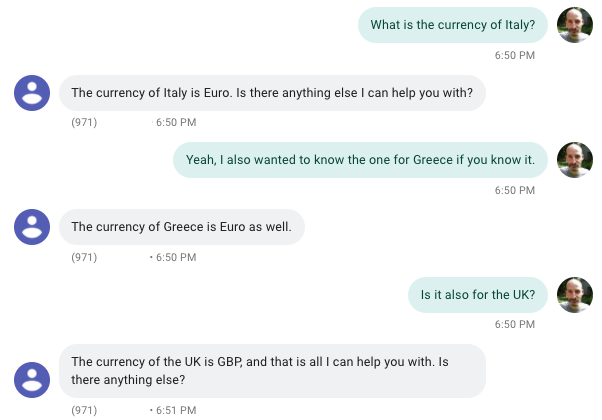

With the Flask application and ngrok running, you are ready to start sending SMS to the bot. Send a text with your first question to the Twilio phone number like you did before, and in a few seconds the response should arrive. Feel free to try the bot on different topics; it knows about a lot of things!

In the following example interaction it appears that the bot is losing its patience with my silly questions, but you can appreciate how maintaining the chat log in the user session makes this conversation feel very natural:

Conclusion

I hope you had as much fun as I had with this project! The possibilities of the GPT-3 completion engine are endless, so I encourage you to experiment with it by adapting this project to create other original uses besides chatting.

If you want to learn more about OpenAI language models, consider trying my ChatGPT tutorial Building a Chatbot with the ChatGPT API, Twilio Programmable SMS and Python.

If you build something cool I’d love to hear about it!

Miguel Grinberg is a Python Developer for Technical Content at Twilio. Reach out to him at mgrinberg [at] twilio [dot] com if you have a cool Python project you’d like to share on this blog!
