Build a Video Chat App with ASP.NET Core 5.0, Angular 11, and Twilio Programmable Video

Time to read: 15 minutes

Real-time user interaction is a great way to enhance the communication and collaboration capabilities of a web application. Video chat is an obvious choice for sales, customer support, and education sites, but is it practical to implement? If you’re developing with Angular on the frontend and ASP.NET Core for your server, Twilio Programmable Video enables you to efficiently add robust video chat to your application.

This post will show you how to create a running video chat application using the Twilio JavaScript SDK in your Angular single page application (SPA) and the Twilio SDK for C# and .NET in your ASP.NET Core server code. You’ll build the interactions required to create and join video chat rooms, and publish and subscribe to participant audio and video tracks.

To learn how to build the same app with previous language and framework versions, see these posts:

- Building a Video Chat App with ASP.NET Core 3.1, Angular 9, and Twilio

- Building a Twilio Programmable Video Chat App with Angular and ASP.NET Core 3.0

- Build a Video Chat App with ASP.NET Core 2.2, Angular, and Twilio

Prerequisites

You’ll need the following technologies and tools to build the video chat project described in this post:

- .NET 5 SDK (version 5.0.200+)

- npm and Node.js (version 12.4.0+)

- Angular CLI (version 11.2.3+)

- Visual Studio Code, Visual Studio 2019, or another IDE compatible with the above

- Git (if you’re going to clone the companion repository)

To get the most out of this post you should have knowledge of:

- Angular, including Observables and Promises

- ASP.NET Core, including dependency injection

- C# 9

- TypeScript

The source code for this project is available on GitHub. The code for previous versions of the app is available in branches, each versioned to correspond to the .NET version it represents.

Get started with Twilio Programmable Video

You’ll need a free Twilio trial account and a Twilio Programmable Video project to be able to build this project with the Twilio Video SDK. Getting set up will take just a few minutes.

Once you have a Twilio account, go to the Twilio Console and perform the following steps:

- On the Dashboard home, locate your Account SID and Auth Token and copy them to a safe place.

- Select the Programmable Video section of the Console.

- Under Tools > API Keys, create a new API key with a friendly name of your choosing and copy the SID and API Secret to a safe place.

The credentials you just acquired are user secrets, so it’s a good idea not to store them in the project source code. One way to keep them safe and make them accessible in your project configuration is to store them as environment variables on your development machine.

ASP.NET Core can access environment variables through the Microsoft.Extensions.Configuration package so they can be used as properties of an IConfiguration object in the Startup class. The following instructions show you how to do this on Windows.

Execute the following commands in a Windows command prompt, substituting your credentials for the placeholders. For other operating systems, use comparable commands to create the same environment variables.

If you prefer, or if your development environment requires it, you can place these values in the appsettings.development.json file as follows.

Note: Be careful not to expose this file in a source code repository or other easily accessible location.

Create the ASP.NET Core application

Create a new ASP.NET Core Web Application named “VideoChat” with .NET 5 and Angular templating using the Visual Studio 2019 user interface or the following dotnet command line:

This command will create a Visual Studio solution containing an ASP.NET Core project configured to use an Angular application, ClientApp, as the front end. The server-side code is written in C# and has two primary purposes: first, it serves the Angular web application, including its HTML layout, CSS, and JavaScript code; second, it acts as a Web API. The client-side application contains the logic for creating and joining video chat rooms, and it hosts the participant video streams for live video chats.

Add the Twilio SDK for C# and .NET

The ASP.NET Core server application will use the Twilio SDK for C# and .NET. Install it with the NuGet Package Manager, Package Manager Console, or the following dotnet command-line instruction:

The VideoChat.csproj file should include the package references in an <ItemGroup> node, as shown below, if the command completed successfully.

Note: The version numbers in your project may be higher.

Create the folder and file structure

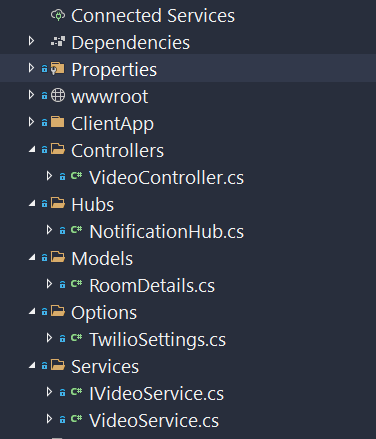

Create the following folders and files:

When you’re finished, your Solution Explorer folder and file structure should look like the following:

In the Controllers directory, rename WeatherForecastController.cs to VideoController.cs and update the class name to match the new file name.

Create services

The server-side code needs to do several key things. One of them is to provide a JSON Web Token (JWT) to the client so the client can connect to the Twilio Programmable Video API. Doing so requires the Twilio Account SID, API Key, and API Secret that you stored earlier as environment variables.

In ASP.NET Core, it is common to leverage a strongly typed C# class that will represent the various settings. To do that, add the following C# code to Options/TwilioSettings.cs, below the declarations:

These settings are configured in the Startup.ConfigureServices method, which maps the values from environment variables and the appsettings.json file to the IOptions<TwilioSettings> instances that are available for dependency injection. In this case, the environment variables are the only values needed for the TwilioSettings class.

Insert the following C# code in Models/RoomDetails.cs below the declarations:

The RoomDetails record is an object that represents a video chat room. It uses record types, a C# 9 language feature. Positional records give you immutable reference types and enable the “with” syntax for inline cloning.
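On the Angular side, the same idea can be approximated in TypeScript. The following is a minimal sketch rather than part of the project source: the property names `name`, `participantCount`, and `maxParticipants` are assumptions chosen for illustration, and object spread stands in for the C# “with” expression.

```typescript
// Hypothetical client-side counterpart of the C# RoomDetails record.
// The property names are assumptions made for this sketch.
interface RoomDetails {
  readonly name: string;
  readonly participantCount: number;
  readonly maxParticipants: number;
}

// Mimics the C# `with` expression: copy the original, then override
// only the supplied properties. The original object is left untouched.
function withChanges(room: RoomDetails, changes: Partial<RoomDetails>): RoomDetails {
  return { ...room, ...changes };
}

const lobby: RoomDetails = { name: "lobby", participantCount: 1, maxParticipants: 50 };
const busierLobby = withChanges(lobby, { participantCount: 2 });
```

Because every property is `readonly`, the compiler rejects in-place mutation, so a change always produces a new object, much as it does with a record.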

With dependency injection in mind, create an abstraction for the server-side video service as an interface. To do that, replace the contents of the Services/IVideoService.cs file with the following C# code:

This is a simplistic interface that exposes the ability to get the Twilio JWT when it is given an identity. It also provides the ability to get all the rooms.

To implement the IVideoService interface, replace the contents of Services/VideoService.cs with the following code:

The VideoService class constructor takes an IOptions<TwilioSettings> instance and initializes the TwilioClient, given the supplied API Key and corresponding API Secret. This is done statically and enables future use of various resource-based functions.

The GetTwilioJwt implementation issues a new Twilio.Jwt.AccessToken.Token, given the Account SID, API Key, API Secret, identity, and a new instance of HashSet<IGrant> with a single VideoGrant object. Before returning, it calls the .ToJwt function to convert the token instance into its string equivalent.

The GetAllRoomsAsync function returns a listing of RoomDetails objects. It starts by awaiting the RoomResource.ReadAsync function, which will yield a ResourceSet<RoomResource> once awaited.

From this listing of rooms the code projects a series of Task<RoomDetails> where it will ask for the corresponding ResourceSet<ParticipantResource> currently connected to the room specified with the room identifier, room.UniqueName.

You may notice some unfamiliar syntax in the GetAllRoomsAsync function if you’re not used to seeing code after the return statement. C# 8 includes a static local function feature that enables functions to be written within the scope of the method body (“locally”), even after the return statement. They are static to ensure that no variables are captured from the enclosing scope.

Note that for every room n that exists, GetRoomDetailsAsync is invoked to fetch the room’s connected participants. This can be a performance concern! Even though this is done asynchronously and in parallel, it should be considered a potential bottleneck and marked for refactoring. It isn't a concern in this demo project, as there are, at most, a few rooms.

Create the API controller

The video controller will provide two HTTP GET endpoints for the Angular client to use.

| Endpoint | Verb | Type | Description |
| --- | --- | --- | --- |
| api/video/token | GET | JSON | an object with a |
| api/video/rooms | GET | JSON | array of room details: |

Replace the contents of Controllers/VideoController.cs with the following C# code:

The controller is decorated with the ApiController attribute and a Route attribute containing the template "api/video". In the VideoController constructor IVideoService is injected and assigned to a readonly field instance.

Create the notification hub

The ASP.NET Core application wouldn't be complete without the use of SignalR, which is an open-source library that simplifies adding real-time web functionality to apps. Real-time web functionality enables server-side code to push content to clients instantly.

When a user creates a room in the application their client-side code will notify the server and, ultimately, other clients of the new room. This is done with a SignalR notification hub.

Replace the contents of Hubs/NotificationHub.cs with the following C# code:

The NotificationHub will asynchronously send a message to all other clients, notifying them when a room is added.
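The broadcast behavior can be modeled without SignalR. The sketch below is an in-memory stand-in, not the SignalR API: `InMemoryHub`, `connect`, and `notifyOthers` are hypothetical names that only illustrate the idea of sending to every client except the caller.

```typescript
// In-memory model of "notify all other clients". This is NOT the SignalR API;
// every name here is hypothetical and exists only for illustration.
type RoomsUpdatedHandler = (updated: boolean) => void;

class InMemoryHub {
  private handlers: { [connectionId: string]: RoomsUpdatedHandler } = {};

  // Registers a client under a connection id.
  connect(connectionId: string, handler: RoomsUpdatedHandler): void {
    this.handlers[connectionId] = handler;
  }

  // Invokes every registered handler except the caller's own.
  notifyOthers(callerId: string, updated: boolean): void {
    for (const id of Object.keys(this.handlers)) {
      const handler = this.handlers[id];
      if (id !== callerId && handler) {
        handler(updated);
      }
    }
  }
}

const hub = new InMemoryHub();
const received: string[] = [];
hub.connect("alice", () => received.push("alice"));
hub.connect("bob", () => received.push("bob"));
hub.connect("carol", () => received.push("carol"));

// Alice creates a room; only Bob and Carol are notified.
hub.notifyOthers("alice", true);
```

The caller is skipped because it already knows about the room it just created; only the other clients need to refresh their room lists.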

Update Program.cs

The default Program class from the template is a bit boring. Let’s spice it up with C# 9’s top-level statements.

Replace the source in the template Program.cs file with the following C#:

The preceding code is literally half the size of the original template as all of the boilerplate code is no longer needed. The application entry point is implicit, the args variable is populated, and you can await running of the WebHost that uses your Startup object.

Configure Startup.cs

A few things need to be added and changed in the Startup class. Add the following C# using directives to the top of Startup.cs:

In the ConfigureServices method, replace all the existing code with the following code:

This configures the application settings containing the Twilio API credentials, maps the abstraction of the video service to its corresponding implementation, assigns the root path for the SPA, and adds SignalR.

In the Configure method, replace the app.UseEndpoints call with the following lines:

This maps the notification endpoint to the implementation of the NotificationHub. Using this endpoint, the Angular SPA running in client browsers can send messages to all the other clients. SignalR provides the notification infrastructure for this process.

This concludes the server-side setup. Compile the project and ensure there are no errors.

Build the client-side Angular app

The ASP.NET Core templates are not updated regularly and Angular is constantly being updated. To build the client app with the newest Angular code, it’s best to start with a current Angular template.

Delete the ClientApp directory from the VideoChat project. Then, open a console window (either PowerShell or Windows Console) in the VideoChat project directory and execute the following Angular CLI command:

This command should create a new ClientApp folder in the VideoChat project along with the basic folder and file structure for an Angular application.

The Angular application has a number of dependencies, including the twilio-video and @microsoft/signalr packages. Its development dependencies include the @types/twilio-video type definitions.

Replace the contents of the package.json with the following JSON code:

With the updates to the package.json complete, execute the following npm command-line instruction in the ClientApp directory:

This command ensures that all required JavaScript dependencies are downloaded and installed.

Open ClientApp/src/index.html and notice the <app-root> element. This non-standard element is used by Angular to render the Angular application on the HTML page. The app-root element is the selector for the AppComponent component.

Add the following HTML markup to index.html, in the <head> element below the <link> element for the favicon:

This markup enables the application to use the free version of Font Awesome and the Bootswatch Darkly theme.

Continue to src/app/app.component.html and replace the contents with the following HTML markup:

From the command line in the ClientApp directory, execute the following Angular CLI commands to generate the components:

Then execute the following Angular CLI commands to generate the required services:

These commands add all the boilerplate code, enabling you to focus on the implementation of the app. They add new components and services and update app.module.ts by importing and declaring the components the commands create.

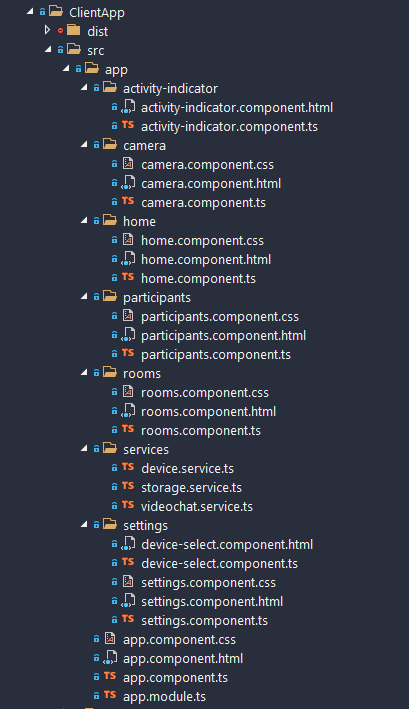

The folder and file structure should look like the following:

Update the Angular App module

The application relies on two additional modules, one to implement forms and the other to use HTTP.

Add the following two import statements to the top of ClientApp/src/app/app.module.ts:

Next, add these modules to the imports array of the @NgModule as follows:

Add JavaScript polyfills

A JavaScript project wouldn’t be complete without polyfills, right? Angular is no exception. Luckily, the Angular tooling provides a polyfill file.

Add the following JavaScript to the bottom of ClientApp/src/polyfills.ts:

Create Angular services

The StorageService class uses the browser’s localStorage object, which enables the client application to persist state. It can read and write to this localStorage object, and the values are persisted in the browser so the user’s camera preferences are remembered.
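A minimal sketch of such a service follows, assuming a key-value store with the same `getItem`/`setItem`/`removeItem` shape as the browser’s localStorage. `KeyValueStore`, `MemoryStore`, `PreferenceStore`, and the `videoInputId` key are hypothetical names; the in-memory store exists only so the example runs outside a browser.

```typescript
// The subset of the Web Storage API this sketch relies on.
// In a browser, `window.localStorage` satisfies this shape.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

// Hypothetical in-memory stand-in so the sketch runs outside a browser.
class MemoryStore implements KeyValueStore {
  private items: { [key: string]: string } = {};

  getItem(key: string): string | null {
    const value = Object.prototype.hasOwnProperty.call(this.items, key)
      ? this.items[key]
      : undefined;
    return value === undefined ? null : value;
  }

  setItem(key: string, value: string): void {
    this.items[key] = value;
  }

  removeItem(key: string): void {
    delete this.items[key];
  }
}

// Hypothetical wrapper that remembers user preferences such as the chosen camera.
class PreferenceStore {
  private store: KeyValueStore;

  constructor(store: KeyValueStore) {
    this.store = store;
  }

  get(key: string): string | null {
    return this.store.getItem(key);
  }

  set(key: string, value: string): void {
    this.store.setItem(key, value);
  }

  remove(key: string): void {
    this.store.removeItem(key);
  }
}

const preferences = new PreferenceStore(new MemoryStore());
preferences.set("videoInputId", "camera-2");
```

Passing the store in through the constructor keeps the wrapper testable; in the browser the same class could be constructed with `localStorage` itself.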

Replace the content of services/storage.service.ts with the following TypeScript code:

The DeviceService class will provide information about the media devices used in the application, including their availability and whether the user has granted the app permission to use them.

Replace the contents of services/device.service.ts with the following TypeScript code:

This service provides an observable media device collection to which concerned listeners can subscribe. When media device information changes, such as unplugging or plugging in a USB web camera, this service will notify all listeners. It also attempts to wait for the user to grant permissions to various media devices consumed by the twilio-video SDK.
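The notification pattern described above can be sketched without Angular or RxJS. In this illustrative example, `DeviceListSubject` and `DeviceInfo` are hypothetical names; `DeviceInfo` mirrors a few fields of the browser’s `MediaDeviceInfo`, and the device lists are passed in by hand rather than read from real hardware.

```typescript
// Mirrors the fields of the browser's MediaDeviceInfo that matter here.
interface DeviceInfo {
  deviceId: string;
  kind: "audioinput" | "audiooutput" | "videoinput";
  label: string;
}

type DeviceListener = (devices: DeviceInfo[]) => void;

// Hypothetical stand-in for an observable device collection: it remembers
// the latest list, replays it to new subscribers, and notifies on change.
class DeviceListSubject {
  private listeners: DeviceListener[] = [];
  private latest: DeviceInfo[] | null = null;

  // Returns a function that removes the listener again.
  subscribe(listener: DeviceListener): () => void {
    this.listeners.push(listener);
    if (this.latest !== null) {
      listener(this.latest);
    }
    return () => {
      this.listeners = this.listeners.filter(l => l !== listener);
    };
  }

  // In a browser this would be called from a `devicechange` handler
  // with the result of `navigator.mediaDevices.enumerateDevices()`.
  publish(devices: DeviceInfo[]): void {
    this.latest = devices;
    for (const listener of this.listeners.slice()) {
      listener(devices);
    }
  }
}

const subject = new DeviceListSubject();
const cameraCounts: number[] = [];
const unsubscribe = subject.subscribe(devices =>
  cameraCounts.push(devices.filter(d => d.kind === "videoinput").length)
);

// Simulate plugging in a USB camera, then stop listening.
subject.publish([{ deviceId: "a", kind: "videoinput", label: "Built-in camera" }]);
subject.publish([
  { deviceId: "a", kind: "videoinput", label: "Built-in camera" },
  { deviceId: "b", kind: "videoinput", label: "USB camera" },
]);
unsubscribe();
subject.publish([]); // no longer observed by the first listener
```

Replaying the latest list to late subscribers means a component that initializes after the first device scan still receives the current devices immediately.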

The VideoChatService is used to access the server-side ASP.NET Core Web API endpoints. It exposes the ability to get the list of rooms and the ability to create or join a named room.

Replace the contents of services/videochat.service.ts with the following TypeScript code:

Notice that the retrieval of the Twilio JWT is marked private. The getAuthToken method is only used within the VideoChatService class for the invocation of connect from the twilio-video module, which is done asynchronously in the joinOrCreateRoom method.

General concepts

Now that the core services are in place, how should they interact with one another and how should they behave? Users need to be able to create or join rooms. A room is a Twilio resource and can have one or more participants. A participant is also a Twilio resource. Participants, in turn, have track publications that provide access to their audio and video media tracks. Participants and rooms share cameras, which supply the audio and video tracks that are published. The app has Angular components for each of these.
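The relationships between these concepts can be sketched as plain TypeScript shapes. These are simplified stand-ins for illustration, not the twilio-video type definitions; the `track: null` convention for a publication that has not been subscribed to yet is an assumption of this sketch.

```typescript
// Simplified stand-ins for the concepts described above.
// These are illustrative shapes, not the twilio-video type definitions.
interface Track {
  kind: "audio" | "video";
  name: string;
}

interface TrackPublication {
  trackName: string;
  track: Track | null; // assumed to be null until the track is subscribed
}

interface Participant {
  identity: string;
  publications: TrackPublication[];
}

interface Room {
  name: string;
  participants: Participant[];
}

// Walks room -> participants -> publications -> tracks and returns
// the tracks of the requested kind that are currently available.
function availableTracks(room: Room, kind: Track["kind"]): Track[] {
  const result: Track[] = [];
  for (const participant of room.participants) {
    for (const publication of participant.publications) {
      if (publication.track !== null && publication.track.kind === kind) {
        result.push(publication.track);
      }
    }
  }
  return result;
}

const demoRoom: Room = {
  name: "standup",
  participants: [
    {
      identity: "alice",
      publications: [
        { trackName: "alice-mic", track: { kind: "audio", name: "alice-mic" } },
        { trackName: "alice-cam", track: { kind: "video", name: "alice-cam" } },
      ],
    },
    {
      identity: "bob",
      publications: [{ trackName: "bob-cam", track: null }], // published, not yet subscribed
    },
  ],
};

const videoTracks = availableTracks(demoRoom, "video");
```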

Implement the Camera component

In addition to providing audio and video tracks for room participants to share, the CameraComponent also displays a local camera preview by rendering locally created audio and video tracks to the DOM as the <app-camera> element. The Twilio Programmable Video JavaScript Platform SDK, imported from twilio-video, provides an easy-to-use API for creating and managing the local tracks.

Replace the contents of camera/camera.component.ts with the following TypeScript code:

Replace the contents of camera/camera.component.html with the following HTML markup:

In the TypeScript code above, the Angular @ViewChild decorator is used to get a reference to the #preview HTML element used in the view. With the reference to the element, the Twilio JavaScript SDK can create local video and audio tracks associated with the device.

Once the tracks are created, the code finds the video track and appends it to the #preview element. The result is a live video feed rendered on the HTML page.
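The find-and-append step can be sketched in isolation. The example below assumes minimal stand-in shapes (`LocalTrackLike`, `PreviewElement`, `PreviewContainer`, all hypothetical) so it runs without a browser or the SDK; in the real component, a twilio-video local video track and the #preview DOM element fill these roles.

```typescript
// Minimal shapes for this sketch. In the browser these roles are played by
// twilio-video local tracks and real DOM elements.
interface PreviewElement {
  tagName: string;
}

interface PreviewContainer {
  children: PreviewElement[];
}

interface LocalTrackLike {
  kind: "audio" | "video";
  attach(): PreviewElement; // stands in for a track producing its media element
}

// Finds the first video track and appends its media element to the container.
// Returns false when no video track exists (for example, camera access denied).
function showPreview(tracks: LocalTrackLike[], container: PreviewContainer): boolean {
  for (const track of tracks) {
    if (track.kind === "video") {
      container.children.push(track.attach());
      return true;
    }
  }
  return false;
}

const fakeTracks: LocalTrackLike[] = [
  { kind: "audio", attach: () => ({ tagName: "AUDIO" }) },
  { kind: "video", attach: () => ({ tagName: "VIDEO" }) },
];
const previewContainer: PreviewContainer = { children: [] };
const shown = showPreview(fakeTracks, previewContainer);
```

Only the video track is attached for the preview; the audio track is left unattached here so that a preview would not play the user’s own microphone back to them.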

Implement the Rooms component

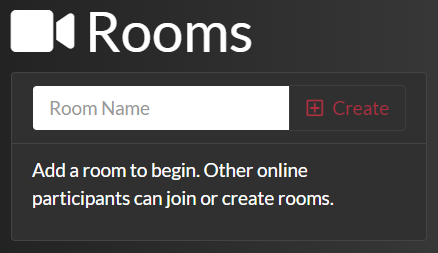

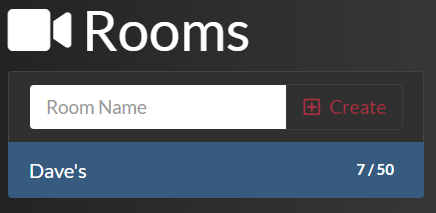

The RoomsComponent provides an interface for users to create rooms by entering a roomName through an <input type=’text’> element and a <button> element bound to the onTryAddRoom method of the class. The user interface looks like the following:

As users add rooms the list of existing rooms will appear below the room creation controls. The name of each existing room will appear along with the number of active participants and the room’s capacity, like the example shown below.

To implement the rooms user interface, replace the markup in rooms/rooms.component.html with the following HTML markup:

The RoomsComponent subscribes to the videoChatService.$roomsUpdated observable. Any time a room is created, RoomsComponent will signal its creation through the observable and the NotificationHub service will be listening.

Using SignalR, the NotificationHub echoes this message out to all the other connected clients. This mechanism enables the server-side code to provide real-time web functionality to client apps. In this application, the RoomsComponent will automatically update the list of available rooms.

To implement the RoomsComponent functionality replace the contents of rooms/rooms.component.ts with the following TypeScript code:

Under the hood, when a user selects a room to join or creates a room, they connect to that room via the twilio-video SDK.

The RoomsComponent expects a room name and an array of LocalTrack objects. These local tracks come from the local camera preview, which provides both an audio and a video track. The LocalTrack objects are published to rooms that a user joins so other participants can subscribe to and receive them.

Implement the Participants component

What good is a room without any participants? It's just an empty room—that's no fun!

But rooms do have something very cool: they extend EventEmitter. This means a room enables the registration of event listeners.

To implement the ParticipantsComponent, replace the contents of participants/participants.component.ts with the following TypeScript code:

Like a room, a participant also extends EventEmitter and offers its own set of valuable events. Between the room, participant, publication, and track, there is a complete set of events to handle when participants join or leave a room.

When they join, an event fires and provides publication details of their tracks so the application can render their audio and video to the user interface DOM of each client as the tracks become available.

To implement the user interface for the participants component, replace the contents of the participants/participants.component.html file with the following HTML markup:

Much like the CameraComponent, the audio and video elements associated with a participant are rendered into the #list element of the DOM. But instead of being local tracks, these are remote tracks published by remote participants.

Implement device settings management

There are a few components in play with the concept of settings. We’ll have a camera component beneath several DeviceSelectComponent objects.

Replace the contents of settings/settings.component.ts with the following TypeScript code:

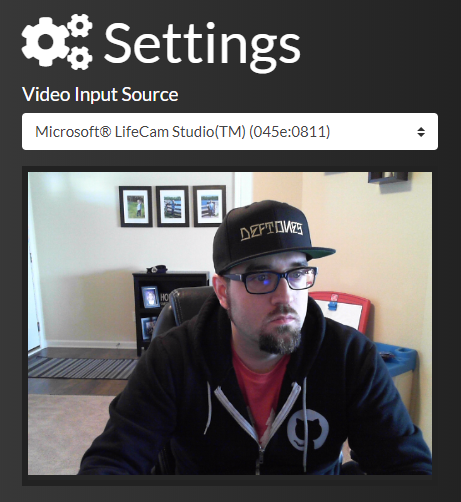

The SettingsComponent object gets all the available devices and binds them to the DeviceSelectComponent objects that it’s a parent of. As video input device selections change the local camera component preview is updated to reflect those changes. The deviceService.$devicesUpdated observable fires as system level device availability changes. The list of available devices updates accordingly.

To implement the user interface for settings, replace the contents of settings/settings.component.html with the following HTML markup:

If a media device option is not available to select, the DeviceSelectComponent object is not rendered. When an option is available, the user can configure their desired device.

As the user changes the selected device, the component emits an event to any active listeners, enabling them to take action on the currently selected device. The list of available devices is dynamically updated as devices are connected to, or removed from, the user’s computer. The user also sees a preview of the selected video device, as shown below:

To implement the settings user interface, replace the contents of settings/device-select.component.ts with the following TypeScript code:

Replace the contents of settings/device-select.component.html with the following HTML markup:

The DeviceSelectComponent object is intended to encapsulate the selection of devices. Rather than bloating the settings component with redundancy, there is a single component that is reused and parameterized with @Input and @Output decorators.
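The reuse pattern can be illustrated with a framework-free model. In the sketch below, `DeviceSelectModel` and its members are hypothetical: in Angular, the device list would arrive through an @Input property and the change notification would leave through an @Output event emitter.

```typescript
// Framework-free model of a reusable device selector. All names are hypothetical;
// in Angular, `devices` would be an @Input() and the listeners an @Output().
interface SelectableDevice {
  deviceId: string;
  label: string;
}

class DeviceSelectModel {
  selectedId: string | null = null;
  private listeners: Array<(deviceId: string) => void> = [];
  private devices: SelectableDevice[];

  constructor(devices: SelectableDevice[]) {
    this.devices = devices;
  }

  // Mirrors "not rendered when there is nothing to select".
  get isVisible(): boolean {
    return this.devices.length > 0;
  }

  // Registers a listener for selection changes.
  onChange(listener: (deviceId: string) => void): void {
    this.listeners.push(listener);
  }

  // Called when the user picks an option; ids that were not offered are ignored.
  select(deviceId: string): void {
    const offered = this.devices.filter(d => d.deviceId === deviceId).length > 0;
    if (!offered) {
      return;
    }
    this.selectedId = deviceId;
    for (const listener of this.listeners) {
      listener(deviceId);
    }
  }
}

const cameraSelect = new DeviceSelectModel([
  { deviceId: "cam-1", label: "Built-in camera" },
  { deviceId: "cam-2", label: "USB camera" },
]);
const chosen: string[] = [];
cameraSelect.onChange(id => chosen.push(id));
cameraSelect.select("cam-2");
cameraSelect.select("missing"); // ignored: not in the list
const emptySelect = new DeviceSelectModel([]);
```

One class parameterized by its device list can serve every device kind, which is the redundancy the single reusable component avoids.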

Implement the Home component

The HomeComponent acts as the orchestration piece between the various components and is responsible for the layout of the app. To implement the home component logic, replace the contents of home/home.component.ts with the following TypeScript code:

To implement the home user interface, replace the contents of home/home.component.html with the following HTML markup:

The home component provides the layout for the client user interface, so it needs some styling to arrange and format the UI elements. To do that, replace the contents of home/home.component.css with the following CSS code.

Understand video chat events

The Angular client app uses a number of resources in the Twilio Programmable Video SDK. The following table lists the events the app handles for those resources:

| Event Registration | Description |
| --- | --- |
| | Occurs when a user leaves the room |
| | Occurs when a new participant joins the room |
| | Occurs when a participant leaves the room |
| | Occurs when a track publication is published |
| | Occurs when a track publication is unpublished |
| | Occurs when a track is subscribed |
| | Occurs when a track is unsubscribed |
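Registrations for events like these might look like the following sketch. The event names are assumptions based on the twilio-video SDK’s room, participant, and publication events, listed in the same order as the descriptions above; `TinyEmitter` and `logEvents` are hypothetical helpers that stand in for the SDK objects so the example runs on its own.

```typescript
// Tiny event emitter so the sketch runs without the SDK. In the real app the
// room, participants, and publications from twilio-video play this role.
type PayloadListener = (payload: string) => void;

class TinyEmitter {
  private listeners: { [event: string]: PayloadListener[] } = {};

  on(event: string, listener: PayloadListener): void {
    const existing = this.listeners[event] || [];
    this.listeners[event] = existing.concat([listener]);
  }

  emit(event: string, payload: string): void {
    for (const listener of this.listeners[event] || []) {
      listener(payload);
    }
  }
}

// Assumed event names, grouped by the resource that raises them.
const roomEvents = ["disconnected", "participantConnected", "participantDisconnected"];
const participantEvents = ["trackPublished", "trackUnpublished"];
const publicationEvents = ["subscribed", "unsubscribed"];

// Records every event it sees as "<source>:<event>:<payload>".
function logEvents(source: string, emitter: TinyEmitter, events: string[], log: string[]): void {
  events.forEach(event => {
    emitter.on(event, payload => log.push(source + ":" + event + ":" + payload));
  });
}

const eventLog: string[] = [];
const room = new TinyEmitter();
const participant = new TinyEmitter();
const publication = new TinyEmitter();
logEvents("room", room, roomEvents, eventLog);
logEvents("participant", participant, participantEvents, eventLog);
logEvents("publication", publication, publicationEvents, eventLog);

// Simulate one participant joining, publishing a camera track, and leaving.
room.emit("participantConnected", "alice");
participant.emit("trackPublished", "camera");
publication.emit("subscribed", "camera");
room.emit("participantDisconnected", "alice");
```

The nesting matters in practice: participant listeners are typically registered inside the room’s join handler, and publication listeners inside the participant’s publish handler, so each new resource is wired up as it appears.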

Putting it all together

Phew, this was quite the project! Time to try it out. Run the application. If you’re running the application in Visual Studio 2019 using IIS Express, the user interface will appear at a randomly assigned port. If you run it another way, navigate to: https://localhost:5001.

After the application loads, your browser will prompt you to allow camera access. Grant the request.

If you have two video sources on your computer, open two different browsers (or an incognito window) and select different devices on each browser. Settings enable you to choose the preferred video input source.

In one browser, create a room and then join it in the other browser. When a room is created, the local preview is moved just under the settings. That way, the video streams of remote room participants who subsequently join will render in the larger viewing area.

If you don’t have two video sources on your computer, watch for a forthcoming post that will teach you how to deploy this application on Microsoft Azure. When you’ve deployed the app to the cloud you can have multiple users join video chat rooms.

Summary of building a video chat app with ASP.NET Core, Angular, and Twilio

This post showed you how to build a fully functioning video chat application with Angular, ASP.NET Core, SignalR, and Twilio Programmable Video. On the server, the Twilio .NET SDK issues JWTs for the client-side Angular code and retrieves room details, both of which are exposed through the ASP.NET Core Web API. The client-side Angular SPA integrates the Twilio JavaScript SDK.

Additional resources

A working example of the application is available on the author’s Azure domain: https://ievangelist-videochat.azurewebsites.net/. The companion repository on GitHub includes better styling, persistent selections, and other features you may want to include in your production app.

You can learn more about the technologies used in this post from the following sources:

- Angular Observables and Promises: Observables on angular.io, Understanding, creating and subscribing to observables in Angular and AngularJS Promises – The Definitive Guide on Medium

- C# local functions: Local functions (C# Programming Guide) on docs.microsoft.com

David Pine is a 2x Microsoft MVP, Google Developer Expert, Twilio Champion, and international speaker. David loves interacting with the developer community on Twitter @davidpine7. Be sure to check out his blog at https://davidpine.net.
