Transcribe and Email Your Voicemails with OpenAI, Node.js, Twilio, and SendGrid



It's always a shame when you miss a call. Luckily, Twilio Programmable Voice allows you to build your own voicemail to record calls and handle the recordings in code.

In this blog post, you'll learn how to build a simple voicemail, transcribe the recordings with OpenAI's speech-to-text service (built on their open-source Whisper model), and then email the results to yourself using SendGrid.

Why OpenAI?

We've seen ways to transcribe voicemails on this blog before, so why pick OpenAI to do it? The Whisper model has some advantages over Twilio's built-in transcription. It can work with files up to 25MB in size, which allows for recordings longer than the 120 seconds available with the built-in transcription, and it can transcribe in 57 different languages. It's also capable of translating from any of the supported languages into English and of returning more information about the text, including timestamps.

What you will need

To build the application you will need:

- A Twilio account (if you don't have one yet, sign up for a free Twilio account here)

- A Twilio phone number that can receive voice calls

- A free SendGrid account with a verified sender (either a Single Sender Verification or Domain Authentication) and an API key with permission to send email

- Node.js installed (I'll be using the latest LTS release as of writing, version 20.11.0)

- ngrok so that you can respond to webhooks in your local development environment (you'll need a free ngrok account)

- An OpenAI API key on a premium plan or with available credits

When you have all of that, you're ready to start building.

Let's get building

To receive an incoming call and respond with our voicemail service, you will need a web application that can respond to incoming webhooks. We're going to build that using Node.js and Express. We'll use the Twilio Node.js module to help build responses to these incoming requests, the OpenAI Node module to send the recording data to the OpenAI API, and the SendGrid mail module to send emails with the transcription.

We'll start by building the base of the Node.js application.

Starting the Node.js app

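Open up your terminal and create a new directory, then initialise a new Node.js application (for example, with npm init --yes).

Next, install the dependencies we're going to need from npm: express, twilio, openai and @sendgrid/mail.

Create the files we'll need for the application: .env, config.js and index.js.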

The .env file is where you will store your credentials that will be loaded into the environment. Gather your credentials for Twilio, SendGrid and OpenAI and open the .env file in your favourite editor. Fill in your credentials, one per line, in NAME=value form.

You will also need to add two email addresses to this file: one that is verified with SendGrid to send from, and one where you want to receive your voicemail transcription emails.

One last thing before we write some code. Open package.json in your editor. We're going to add a "start" command to the "scripts" that runs node --env-file=.env index.js.

This uses the .env file support built into Node.js (the --env-file flag, available from version 20.6.0) to load your credentials into the environment.

Also in package.json, add "type": "module" to the main object.

This will let us use ES modules by default in our code.

Organising the config

In your editor, open config.js. In this file we are going to arrange all of our config from the environment.

Start by destructuring all the variables that were set in the .env file earlier, as well as one extra for the port we'll start the application on, out of process.env.

Now you can apply default values to the config, like setting the port to "3000" if it is not present in the environment. You can also collect each of the variables in relevant objects. Export all the values like this:
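A minimal sketch of how that could look. The environment variable names here are assumptions, so swap in whichever names you used in your .env file. It's written as a function (buildConfig is a name made up for this example) so that it can be tried out without a real environment.

```javascript
// Sketch of the config step. The variable names are assumptions;
// use the names you chose in your own .env file.
function buildConfig(env = process.env) {
  const {
    TWILIO_ACCOUNT_SID,
    TWILIO_AUTH_TOKEN,
    SENDGRID_API_KEY,
    OPENAI_API_KEY,
    FROM_EMAIL,
    TO_EMAIL,
    PORT,
  } = env;

  return {
    // Fall back to "3000" when PORT is not present in the environment.
    port: PORT || "3000",
    // Group related values so each API client gets its own object.
    twilio: { accountSid: TWILIO_ACCOUNT_SID, authToken: TWILIO_AUTH_TOKEN },
    sendgrid: { apiKey: SENDGRID_API_KEY },
    openai: { apiKey: OPENAI_API_KEY },
    email: { from: FROM_EMAIL, to: TO_EMAIL },
  };
}
```

In config.js you could then export the result of calling buildConfig() so that index.js can import port, twilio, sendgrid, openai and email by name.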

Creating the server

The rest of the application will be written in index.js so open that in your editor.

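Start by importing all the external dependencies that you will need: Express, the Twilio module, the OpenAI client along with its toFile helper, and the SendGrid mail module.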

Import the config that you just organised.

Destructure the VoiceResponse from the Twilio.twiml object. You will use this to generate TwiML in response to incoming webhooks from Twilio. TwiML is a subset of XML that allows you to direct calls and messages through Twilio.

Initialise a new Express application and set it up so that it can parse URL-encoded request bodies. Twilio webhooks send their data to your server encoded as if it were submitted by an HTML form.
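To get a feel for that format, here is a small sketch that parses a form-encoded body using only what is built into Node.js. It is roughly what the Express body parser does for you before your handler runs. The values are made up, and parseFormBody is a hypothetical helper name; CallSid, From and RecordingUrl are parameter names that Twilio sends.

```javascript
// An example body in application/x-www-form-urlencoded format.
// The values are invented for illustration.
const rawBody =
  "CallSid=CA123&From=%2B15550100&RecordingUrl=https%3A%2F%2Fexample.com%2Frecordings%2FRE123";

// Turn the encoded string into a plain object of decoded key/value pairs.
function parseFormBody(body) {
  return Object.fromEntries(new URLSearchParams(body));
}

const parsed = parseFormBody(rawBody);
console.log(parsed.From); // "+15550100"
console.log(parsed.RecordingUrl);
```

In the Express app you won't need to do this by hand; the parsed values will be available on req.body.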

Set the app up to listen on the port provided.

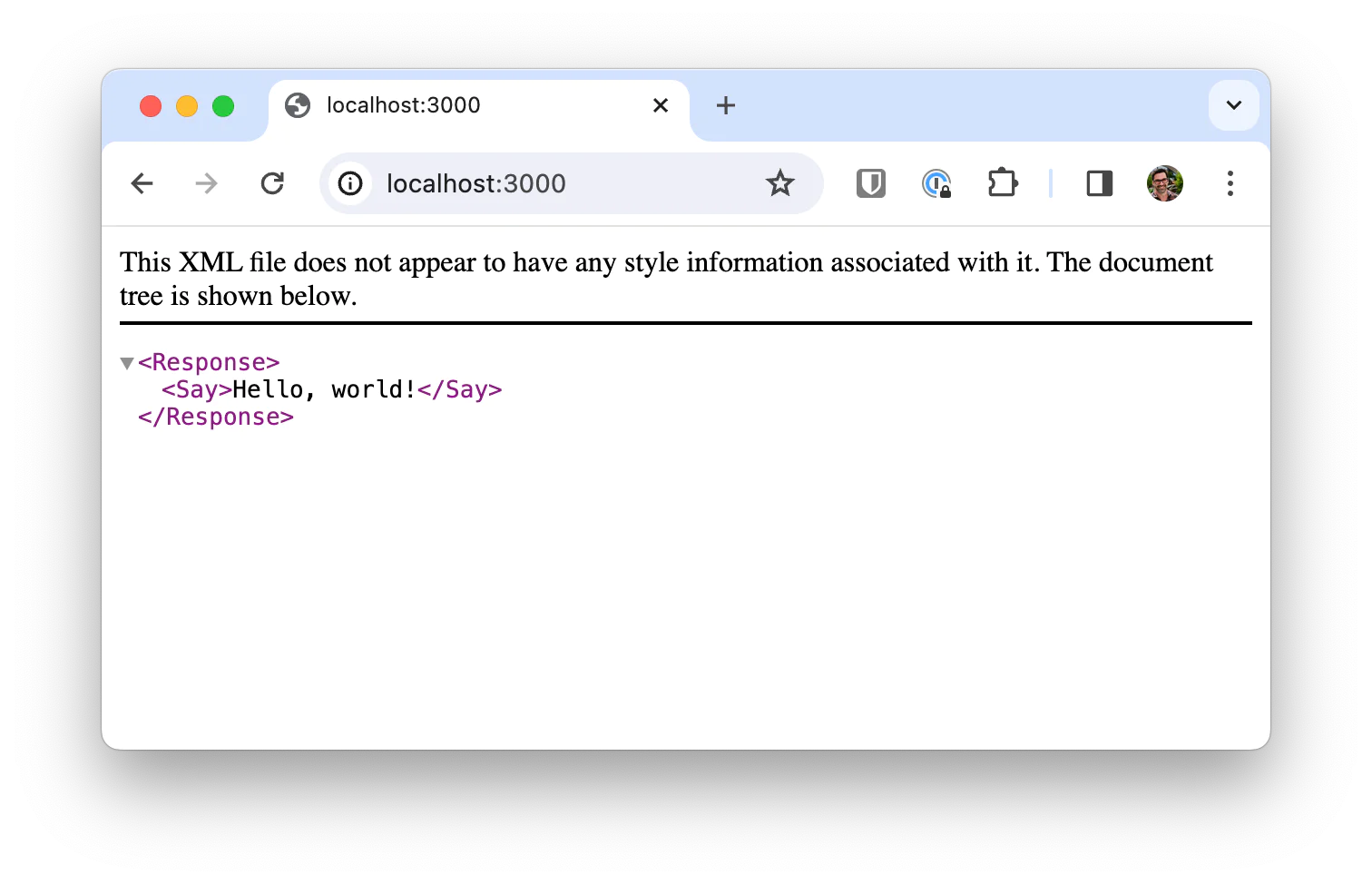

Now you have a server, but it doesn't respond to any incoming requests. Let's do a quick test to see it in action. Create an endpoint at the root of your application that responds to GET requests with an example TwiML response.
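Here is one way that endpoint could be sketched. To keep the example readable on its own, the handler receives VoiceResponse as an argument (it is expected to be the class from the Twilio module's twiml object); createHelloHandler is a name invented for this sketch.

```javascript
// `VoiceResponse` is expected to be twilio.twiml.VoiceResponse. It is passed in
// so that this handler can be read and tested without the rest of the app.
function createHelloHandler(VoiceResponse) {
  return (req, res) => {
    const twiml = new VoiceResponse();
    // <Say> reads the text out to the caller.
    twiml.say("Hello, world!");
    // TwiML is XML, so tell the client what is coming back.
    res.set("Content-Type", "text/xml");
    res.send(twiml.toString());
  };
}
```

You would register it with something like app.get("/", createHelloHandler(VoiceResponse)).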

Here we are constructing a VoiceResponse, using the <Say> element to say "Hello, world!", setting the content type to text/xml and responding with the generated TwiML. In your terminal, start the application with npm start.

Open your browser to http://localhost:3000. You should see the XML response in the browser.

That works, so now we can get on with building our voicemail.

Recording voicemails

You've seen how to return TwiML from your express server, but you haven't used it with Twilio yet. For this section you are going to create two endpoints that control the phone call and one that receives the recording. We'll use <Say> to read out messages to the caller and <Record> to capture the caller's message.

The <Record> TwiML needs some attributes to be set. The first is the action, which is a URL that Twilio will make a request to once the caller finishes making their recording. The second is the recordingStatusCallback, which is a URL that Twilio will make a request to once the recording is ready.

If the caller doesn't make any sound during the recording then the call will carry on to the following TwiML verb. For our call we'll say a message and then return them to the start with a <Redirect>.

When the caller has left a message the call will be redirected to the /calls/complete endpoint. This is a good time to confirm that the caller left a message and then hang up.
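The two call-control endpoints described above could be sketched like this. The spoken messages are placeholders, and createCallHandlers is a hypothetical name; as before, VoiceResponse is assumed to be the class from the Twilio module and is passed in so the sketch stands alone.

```javascript
// `VoiceResponse` is expected to be twilio.twiml.VoiceResponse.
function createCallHandlers(VoiceResponse) {
  const sendTwiml = (res, twiml) => {
    res.set("Content-Type", "text/xml");
    res.send(twiml.toString());
  };

  return {
    // For POST /calls: greet the caller and start recording.
    incoming(req, res) {
      const twiml = new VoiceResponse();
      twiml.say("Hello, please leave a message after the beep.");
      twiml.record({
        // Where the call goes once the caller finishes their recording.
        action: "/calls/complete",
        // Where Twilio reports that the recording is ready.
        recordingStatusCallback: "/recordings",
      });
      // Only reached if the caller made no sound: try again from the start.
      twiml.say("Sorry, I didn't catch that.");
      twiml.redirect("/calls");
      sendTwiml(res, twiml);
    },

    // For POST /calls/complete: confirm the message and end the call.
    complete(req, res) {
      const twiml = new VoiceResponse();
      twiml.say("Thank you for your message. Goodbye.");
      twiml.hangup();
      sendTwiml(res, twiml);
    },
  };
}
```

With an Express app you might wire these up as app.post("/calls", handlers.incoming) and app.post("/calls/complete", handlers.complete), where handlers is the object returned by createCallHandlers(VoiceResponse).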

For now, you also need a /recordings endpoint. Before you get the recording transcribed by OpenAI, you can just log out the URL of the recording to make sure everything is going well so far.
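A sketch of that temporary endpoint, assuming the parsed webhook body is available on req.body. RecordingUrl is the parameter Twilio sends with the recording's location; logRecording is just a name for this example.

```javascript
// Temporary handler for POST /recordings: log where the recording lives.
function logRecording(req, res) {
  console.log(req.body.RecordingUrl);
  // An empty 200 response is enough to acknowledge the callback.
  res.sendStatus(200);
}
```

You could register it with app.post("/recordings", logRecording).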

This is enough code to test this part. Next you need to set up ngrok to test with your local server.

Testing webhooks

To send webhooks to your server, Twilio needs a publicly available URL to connect to. ngrok can provide that for us. In a terminal window, start ngrok to point to the local port you're using for development, for example with ngrok http 3000.

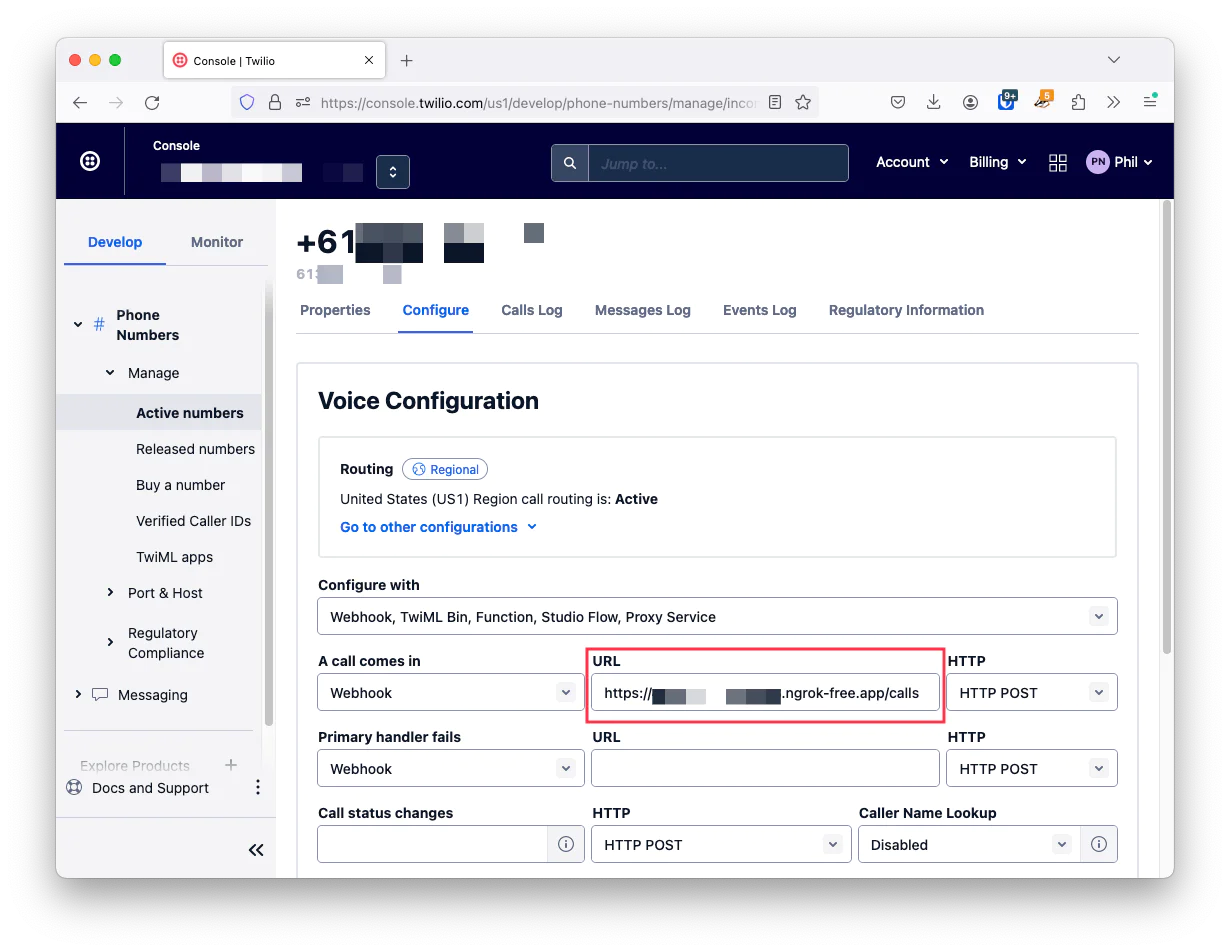

Once ngrok starts up you should see that it is forwarding from a random-looking URL to your localhost:3000. Copy that URL and head to your Twilio Console. Open up your Active Numbers and edit the voice-enabled number you want to use for this application.

Enter the ngrok URL under the voice configuration for when a call comes in. Make sure to add /calls to the end of the URL.

Save your phone number and give it a call. You should be greeted with the message you wrote and you can leave a message for yourself. Once you hang up, you will see the URL for the recording printed to your terminal. Open the URL in your browser and you will be able to listen back to your message.

Transcribing with OpenAI's Whisper API

The next task is to download the recording and then send it to OpenAI for transcription. To start this section, create clients for each of the APIs we are going to use. At the top of index.js, below where you import the config, create an OpenAI client, a Twilio client and a SendGrid client using their credentials from config.

To send the recording to OpenAI you first need to download the recording, then upload it to the OpenAI API endpoint. You can use fetch to download the recording and the toFile helper from the OpenAI library to transform it into a format that works with the API. You can then send it to the OpenAI transcriptions API using the "whisper-1" model. For simplicity later, write this all out as a separate asynchronous function that returns the text from the transcription response.
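A sketch of such a function follows. To keep it self-contained, the OpenAI client and the toFile helper are passed in as arguments rather than imported; in index.js you would use the ones you already set up. The file name recording.wav and the fetchImpl option are choices made for this example.

```javascript
// `openai` is expected to be an OpenAI client and `toFile` the helper exported
// by the openai package. `fetchImpl` defaults to Node's built-in fetch.
async function getTranscription(recordingUrl, { openai, toFile, fetchImpl = fetch }) {
  // Download the recording from Twilio.
  const response = await fetchImpl(recordingUrl);
  if (!response.ok) {
    throw new Error(`Could not download recording: ${response.status}`);
  }

  // Wrap the bytes in a file object the OpenAI API can accept.
  // Twilio serves recordings as WAV by default, hence the file name.
  const audio = Buffer.from(await response.arrayBuffer());
  const file = await toFile(audio, "recording.wav");

  // Send the file for transcription and return just the text.
  const transcription = await openai.audio.transcriptions.create({
    file,
    model: "whisper-1",
  });
  return transcription.text;
}
```

Keeping the download, upload and response handling in one function means the /recordings endpoint only needs to await a single call to get the text.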

Update your /recordings endpoint to use this function to get the transcribed text and print out to the console.

Restart your server and call your number again. This time when you leave a message you should see the transcription printed to the console.

Accuracy

In my testing I found the transcriptions to be quite accurate. If you don't find the same, there are ways to improve accuracy. This section in the OpenAI documentation on prompting the Whisper model has some ideas that can help with transcribing specific words or acronyms that you might be expecting, or with ensuring the model includes punctuation.
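As a small illustration, the transcriptions API accepts an optional prompt alongside the file and model. The helper below (buildTranscriptionParams is a made-up name) only builds the parameters; the example prompt text is an assumption and you would tailor it to the words you expect in your own voicemails.

```javascript
// Builds the parameters for a transcription request.
// `prompt` is optional text that hints at expected vocabulary and style.
function buildTranscriptionParams(file, prompt) {
  const params = { file, model: "whisper-1" };
  if (prompt) {
    params.prompt = prompt;
  }
  return params;
}
```

For example, passing a prompt such as "Twilio, SendGrid, TwiML. Hello, thanks for calling." suggests both the spelling of product names and a punctuated style, and the resulting object can be handed to the transcriptions create call.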

Sending the voicemail as an email

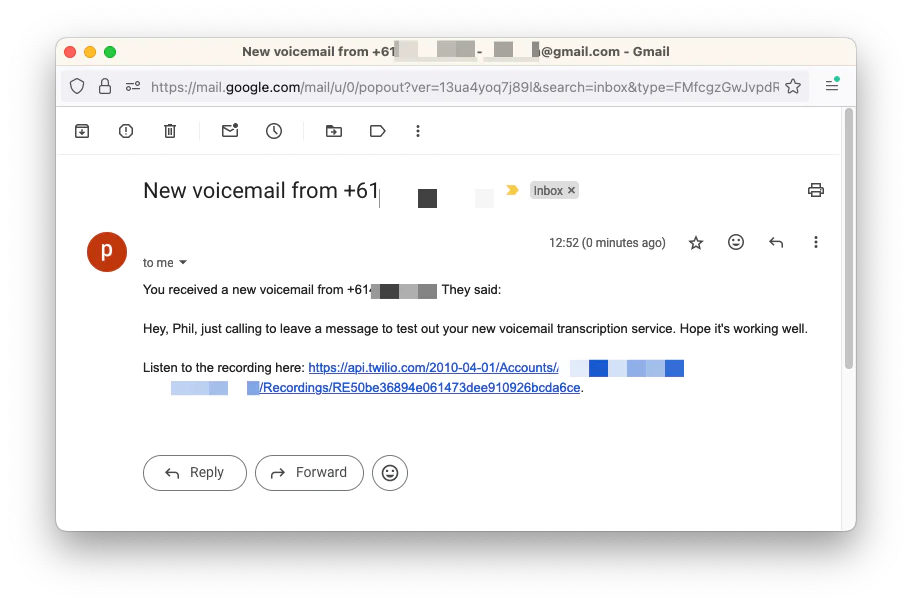

The final part of this application will send the transcript of the message as an email to your provided address. To do this we will gather the number that the call originally came from and the recording URL and send it all as an email using SendGrid.

To get the original number that called, you will need to make a request to the Twilio call resource. You can do this at the same time you are transcribing the recording. You should also catch any errors that happen as part of these API calls. Update your /recordings endpoint to the following:
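A sketch of what that could look like, under a few assumptions: twilioClient is a Twilio REST client, sendgrid is the SendGrid mail client with its API key already set, transcribe is the transcription function from earlier, and email holds the to and from addresses from your config. The subject and body wording, and the names buildEmail and createRecordingHandler, are placeholders for this example.

```javascript
// Build the message object that is passed to the SendGrid client.
function buildEmail({ to, from, callerNumber, recordingUrl, transcription }) {
  return {
    to,
    from,
    subject: `New voicemail from ${callerNumber}`,
    text: [
      `You received a voicemail from ${callerNumber}.`,
      `Recording: ${recordingUrl}`,
      `Transcription: ${transcription}`,
    ].join("\n\n"),
  };
}

// Dependencies are passed in so the handler can be read and tested on its own.
function createRecordingHandler({ twilioClient, sendgrid, transcribe, email }) {
  return async (req, res) => {
    const { RecordingUrl, CallSid } = req.body;
    try {
      // Transcribe the recording and look up the call at the same time.
      const [transcription, call] = await Promise.all([
        transcribe(RecordingUrl),
        twilioClient.calls(CallSid).fetch(),
      ]);
      await sendgrid.send(
        buildEmail({
          ...email,
          callerNumber: call.from,
          recordingUrl: RecordingUrl,
          transcription,
        })
      );
      res.sendStatus(200);
    } catch (error) {
      // Log the failure and let Twilio know something went wrong.
      console.error(error);
      res.sendStatus(500);
    }
  };
}
```

You would register the handler with something like app.post("/recordings", createRecordingHandler({ twilioClient, sendgrid, transcribe, email })).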

As you can see, you get the transcription and call details concurrently using Promise.all. Then with those details, construct the text that includes the number, the recording URL and the transcribed text. That is added to a message object, along with the to email, from email and subject of the email, all of which is passed to the SendGrid API client to send. If anything goes wrong, you catch the error and log it, returning a 500 response.

Restart the app one more time and leave yourself another message. This time you will receive an email with all the details about the call.

Lost in transcription

You've just built an application that takes a phone call, records a message, transcribes that message using OpenAI transcriptions and emails the results to you using SendGrid. While this is great for recorded messages, if you want to transcribe speech live on a call, check out this tutorial for transcribing phone calls with AssemblyAI.

Phil Nash is a developer advocate for DataStax and Google Developer Expert, based in Melbourne, Australia. You can get in touch with Phil on Twitter at @philnash and follow his writing on JavaScript and web development at https://philna.sh.
