AI Voice: Analyze your Pronunciation with Twilio Programmable Voice, OpenAI Realtime API, and Azure AI Speech


Introduction

For millions of language learners worldwide, few options exist that provide both real-time voice conversations and an analysis of pronunciation skills. By combining the capabilities of Twilio, Azure AI Services, and OpenAI’s powerful Realtime API, you can build an intelligent voice coach that listens, responds naturally, and gives feedback on pronunciation skills.

In this tutorial, you will learn how to build an app that:

- Uses Twilio Programmable Voice to facilitate real-time speech interactions.

- Streams user audio to the OpenAI Realtime API for natural, conversational AI responses.

- Evaluates pronunciation using the Pronunciation Assessment feature of Azure AI Services.

- Delivers personalized feedback with Twilio Programmable Messaging for WhatsApp.

Prerequisites

Before getting started, here’s a checklist of items required to follow this tutorial:

- Python 3.12.4 installed on your machine.

- A Twilio account with a voice-enabled phone number.

- An OpenAI account, API key, and access to the OpenAI Realtime API.

- An Azure account with an active subscription. If you don’t have one yet, you can create an account on the Azure website.

- A WhatsApp-enabled phone number.

- A code editor like VS Code and a terminal on your machine.

- An ngrok account and an authtoken to make your local app accessible online.

Building the app

Let’s take a quick look at how the app works:

- A user calls in and selects the language they’d like to practice.

- The server initiates a voice practice session between the caller and a Realtime model.

- With the help of Twilio Media Streams, the server sends the caller’s live audio to OpenAI and Azure simultaneously over a WebSocket connection.

- The Realtime model provides an audio response, while Azure AI Speech analyzes pronunciation in the background.

- At the end of the call, Twilio sends the analyzed results to the user via WhatsApp.

You’ll be using the FastAPI web framework to build your server. It is lightweight and has native support for asynchronous WebSocket endpoints, making it an ideal choice for our real-time streaming requirements.

You’ll begin by setting up your development environment and installing the necessary packages and libraries. Next, you’ll configure ngrok, write the server code, and finally test the app to ensure everything works as expected.

Let’s get started.

Set up the development environment

When working with Python in development, it’s good practice to use a virtual environment. Think of this as an isolated sandbox to help avoid conflicts between dependencies used across different projects.

Open a terminal and enter the following commands:

Store the environment variables

Throughout this project, you’ll be working with sensitive keys, and you shouldn’t place these directly in your code. Create a .env file to store them:

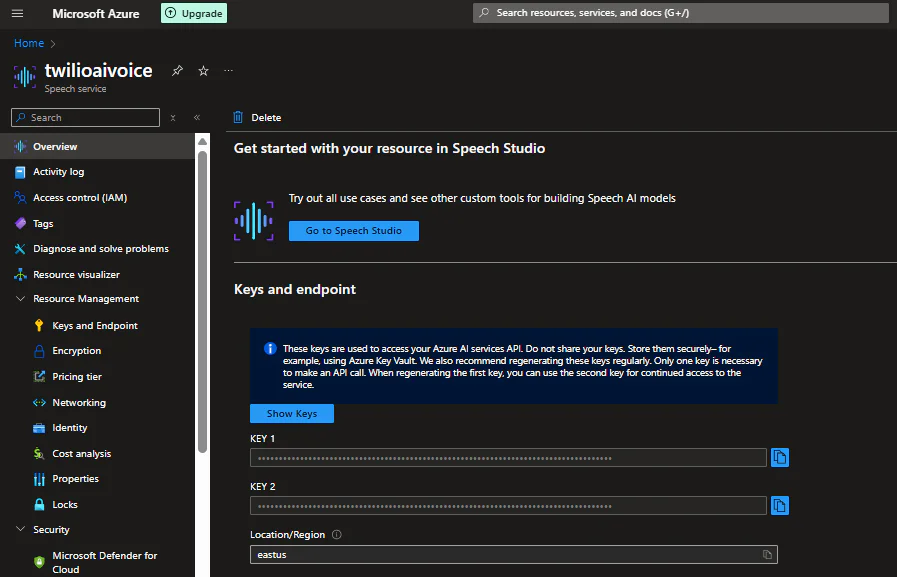

To get your Azure AI Services API key and service region details, you’ll first need to create a Resource group and a Speech service in the Azure portal.

- From the portal Home screen, search for Resource groups and click Create.

- Follow the on-screen instructions to create a group. A common naming convention for an app like the one we’re building would be spch-<app-name>-<environment>. For example: spch-aivoicebot-dev.

- Next, search for and create a Speech service.

- Once done, navigate to the Overview page where you’ll find your key and region:

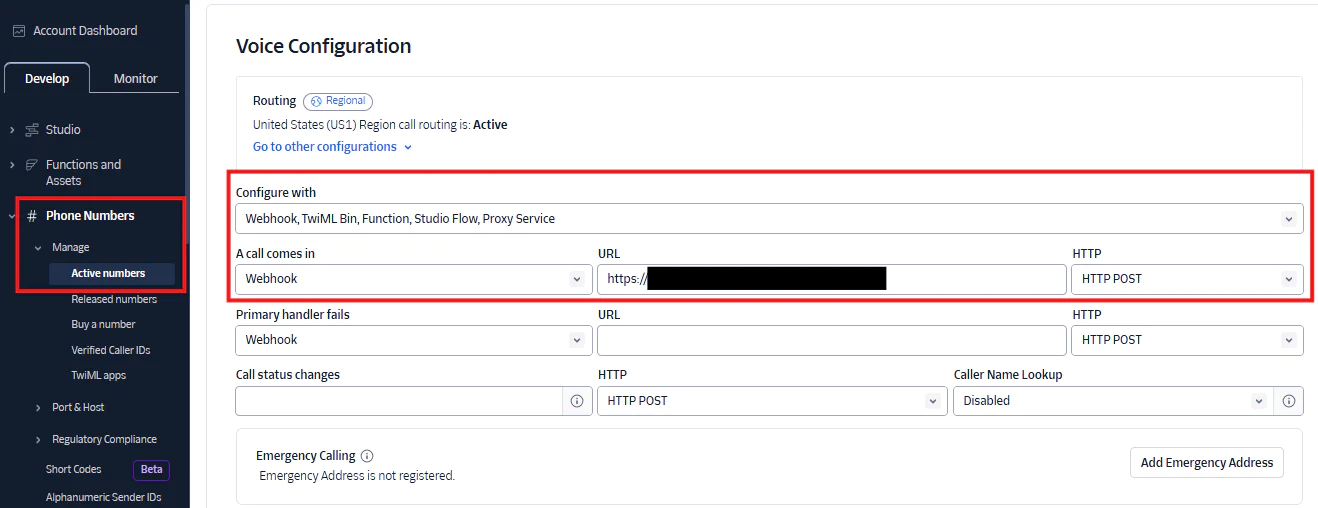

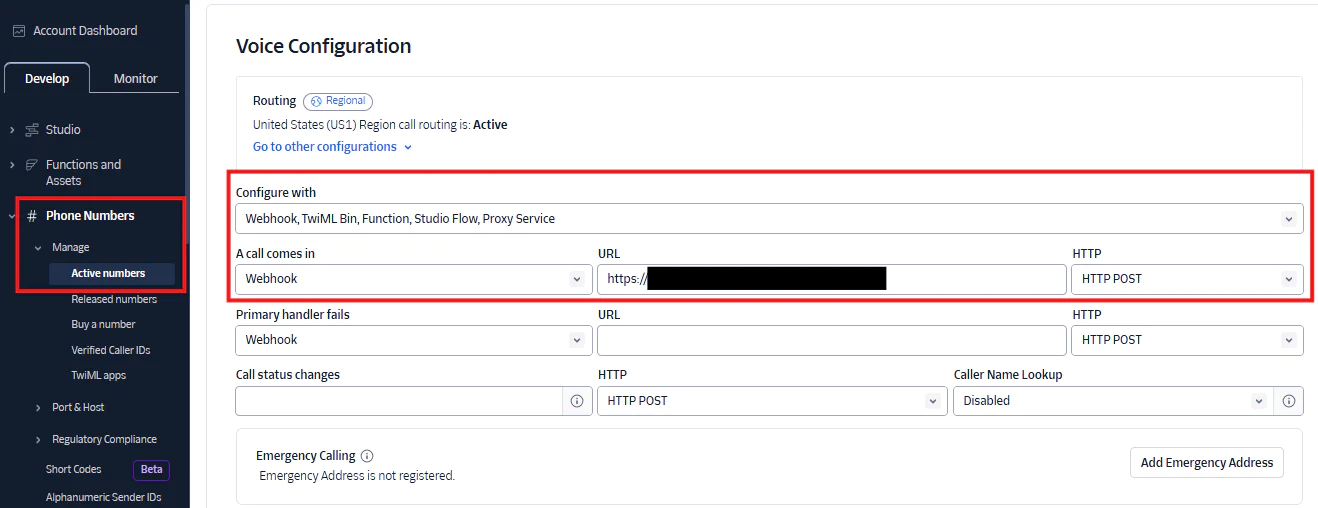

To get your Twilio Number SID, open the console and navigate to:

Phone Numbers > Manage > Active numbers

Select your phone number and click the Properties tab to locate the Number SID.

You can find your account SID and auth token on the homepage of the console.

For OpenAI, log in to your account and navigate to Settings > API keys.

Your ngrok authtoken is available on your dashboard.

Now, open the .env file in your code editor and add each key from its respective service:

Install packages and libraries

Open your terminal and install the required packages for this project:

Write the server code

With the Python environment ready, you can begin writing the server code. The first order of business will be to expose the local server to the internet. Twilio needs a way to send requests to the app, and this is where ngrok comes in.

Create the file to house the main server code:

Import required modules

Open the file in your code editor and import the required modules and .env keys:

Take note of the dictionary labeled language_mapping, as well as the TWILIO_SANDBOX_NUMBER and WHATSAPP_PHONE_NUMBER environment variables. You’ll use them later in the tutorial.
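The original import block isn’t reproduced here, so below is a rough, stdlib-only sketch of the two pieces worth remembering. The article loads its keys from .env (python-dotenv’s load_dotenv() is a common way to do that). This sketch assumes the values are already in the process environment and adds a hypothetical require_env helper that fails fast on a missing key. The digit keys, Azure locale codes, prompts, and lookup_language helper are illustrative assumptions, not the author’s actual values.

```python
import os


def require_env(name: str) -> str:
    """Hypothetical helper: fail fast instead of passing None to an API client."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value


# Illustrative shape only: keypad digit -> (Azure locale, spoken prompt).
# Every locale here must be supported by Azure Pronunciation Assessment.
language_mapping = {
    "1": ("en-US", "Great! Let's practice English. Say hello to get started."),
    "2": ("es-ES", "¡Genial! Vamos a practicar español. Di hola para empezar."),
    "3": ("fr-FR", "Très bien ! Pratiquons le français. Dites bonjour pour commencer."),
}


def lookup_language(digits: str) -> tuple[str, str]:
    """Return (locale, prompt) for the caller's keypress, defaulting to English."""
    return language_mapping.get(digits.strip(), language_mapping["1"])
```

With this approach, you would read the WhatsApp numbers once at startup, for example `TWILIO_SANDBOX_NUMBER = require_env("TWILIO_SANDBOX_NUMBER")`. A missing key then surfaces immediately instead of mid-call.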

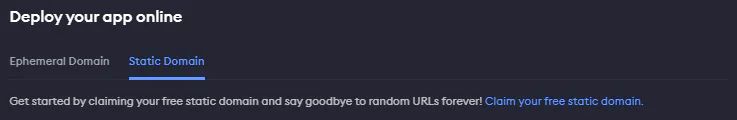

Set up an ngrok tunnel

ngrok’s default functionality is to generate a random URL every time you start an agent. This is not ideal, as you’d have to update your Twilio webhook URL manually each time that happens. Instead, with the help of ngrok’s free static domain and a little extra code, you’ll set up some logic to handle all that automatically.

Head to the ngrok website to claim your free domain:

Next, add the code below to your main.py while replacing your-ngrok-static-domain with your static domain:

The code above represents a Lifespan Event. This term refers to logic that runs once when the application starts up, and again when it shuts down.

Here’s how it works:

- The server authenticates with ngrok and starts a listener using the configuration options.

- This listener returns the URL, and the Twilio client configures a Twilio webhook for your phone number.

- When the app shuts down, it stops the ngrok agent while handling two likely exceptions to allow for a graceful shutdown.

The last bit of code defines the server’s entry point and sets up uvicorn to launch the FastAPI app.
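The lifespan code itself is missing from this copy. The sketch below shows its startup and shutdown flow using only the standard library. The ngrok and Twilio calls are replaced by hypothetical injected callables (start_tunnel, update_voice_url, stop_tunnel) so you can follow the control flow and test it without network access. It assumes the webhook points at the /gather route. The caught exception types are placeholders for whatever your ngrok SDK actually raises on shutdown.

```python
from contextlib import asynccontextmanager
from typing import Awaitable, Callable


def make_lifespan(
    start_tunnel: Callable[[], Awaitable[str]],
    update_voice_url: Callable[[str], None],
    stop_tunnel: Callable[[], Awaitable[None]],
    log: Callable[[str], None] = print,
):
    """Build a lifespan(app) context manager from injected startup/shutdown steps."""

    @asynccontextmanager
    async def lifespan(app):
        # Startup: open the tunnel, then point the Twilio number at /gather.
        public_url = await start_tunnel()
        voice_url = f"{public_url.rstrip('/')}/gather"
        update_voice_url(voice_url)
        log(f"Twilio voice URL updated: {voice_url}")
        try:
            yield  # the app serves requests while suspended here
        finally:
            # Shutdown: stop the tunnel without letting expected errors crash exit.
            # These exception types are placeholders for what your SDK raises.
            try:
                await stop_tunnel()
            except (ConnectionError, RuntimeError) as exc:
                log(f"Ignoring error during tunnel shutdown: {exc!r}")

    return lifespan
```

FastAPI accepts this kind of context manager through `FastAPI(lifespan=...)`. Injecting the steps keeps the startup order (tunnel first, webhook second) explicit and easy to test.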

Run the above code from the terminal with:

You should see the message “Twilio voice URL updated:” printed to the terminal, along with the listening URL. Confirm this by going to the voice configuration page on your Twilio console:

Once confirmed, stop the server for now with Ctrl + C and comment out the Twilio configuration part of the code. This ensures you don’t send a redundant update request each time you restart the app. However, if you’re not using a static domain, leave it in place so the URL updates dynamically.

You can learn more about Lifespan Events from the FastAPI docs.

Handle incoming calls with Twilio Programmable Voice

With all the preliminary setup out of the way, you’re ready to begin writing the core app logic.

The next order of business is to define the routes for your server. Add the following code to main.py, between the app initialization and the server entry point:

The /gather route greets the caller, collects their language selection, and passes it on to the /voice route. When the caller makes a choice, Twilio forwards it using the action="/voice" attribute on the Gather verb.
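To make the Gather flow concrete, here is roughly the TwiML that a /gather route like this returns. It is built with the standard library’s xml.etree.ElementTree instead of the Twilio helper library, so it runs anywhere. The greeting text, numDigits, method, and the fallback Redirect are our assumptions. The action="/voice" attribute is the part that hands the keypress to the /voice route.

```python
import xml.etree.ElementTree as ET


def gather_twiml(greeting: str) -> str:
    """Return TwiML that speaks a menu and posts one keypress to /voice."""
    response = ET.Element("Response")
    # Twilio posts the pressed key to the action URL as the "Digits" parameter.
    gather = ET.SubElement(
        response, "Gather", {"numDigits": "1", "action": "/voice", "method": "POST"}
    )
    ET.SubElement(gather, "Say").text = greeting
    # Reached only if the caller presses nothing: replay the menu.
    ET.SubElement(response, "Redirect", {"method": "POST"}).text = "/gather"
    return ET.tostring(response, encoding="unicode")
```

In FastAPI you would return the string with `Response(content=..., media_type="application/xml")` so that Twilio parses it as TwiML.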

Inside the /voice route, the app matches the caller’s input against the language_mapping dictionary to retrieve the corresponding language and prompt. Feel free to modify the options, but make sure you only choose languages that the Pronunciation Assessment feature supports.

The Say verb speaks the prompt to the caller, while connect.stream() opens a connection to the audio-stream WebSocket endpoint. The Stream noun also specifies a status_callback URL, which Twilio calls when the stream starts and ends. The route behind that URL sends the analyzed results to the caller via WhatsApp.
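Here is a stdlib sketch of the TwiML such a /voice route produces: a Say, then a Connect with a Stream pointing at the audio-stream WebSocket and carrying a statusCallback. The /stream-status path is hypothetical because the article doesn’t name that route. The language attribute on Say is our addition so the prompt is pronounced in the chosen language.

```python
import xml.etree.ElementTree as ET


def voice_twiml(
    prompt: str, host: str, language: str = "en-US", callback_path: str = "/stream-status"
) -> str:
    """Return TwiML that speaks a prompt, then opens a bidirectional Media Stream."""
    response = ET.Element("Response")
    ET.SubElement(response, "Say", {"language": language}).text = prompt
    connect = ET.SubElement(response, "Connect")
    ET.SubElement(
        connect,
        "Stream",
        {
            # Media Streams connect to a secure WebSocket (wss://) URL.
            "url": f"wss://{host}/audio-stream",
            # Twilio POSTs stream lifecycle events (e.g. stream-stopped) here.
            "statusCallback": f"https://{host}{callback_path}",
        },
    )
    return ET.tostring(response, encoding="unicode")
```

Because Connect keeps the call attached to the stream, nothing after it runs until the WebSocket closes. That is why the results are sent from the status callback and not from this route.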

Integrate the Realtime API and Azure AI Services

OpenAI Realtime API

OpenAI provides two main approaches for building AI assistants that respond with audio:

- Chained architecture: Converts audio to text, generates a text response with a Large Language Model (LLM), and synthesizes the response into audio.

- Speech-to-speech architecture: Directly processes audio input and output in real time, providing low-latency interactions and a more natural feel to the conversation.

While the chained architecture has its advantages, speech-to-speech is the better fit for our use case. The voice model responds in real time, so the conversation flows without the delay of converting between audio and text at every step.

Let’s write the code to set this up. Begin by creating utility functions and a class to handle various tasks at different points during the conversation.

Create a file named speech_utils.py:

Import the required modules for this file and load your environment variables:

Add these two helper functions, and I’ll explain what they do right after:

The send_to_openai function does exactly what its name says. It takes a WebSocket connection to the Realtime API (which we’ll set up later), along with a chunk of the caller’s audio encoded in base64 format, and sends this data to the Realtime model. The function is called repeatedly, once per audio chunk, as you’ll see when we set up the WebSocket connections. The type: input_audio_buffer.append field specifies the type of event we’re sending, which tells OpenAI what to do with it.
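As a minimal sketch of that event, assuming an aiohttp client WebSocket (whose send_str() coroutine sends a text frame), the function only needs to wrap the base64 chunk in an input_audio_buffer.append event. The TextWebSocket protocol and the input_audio_append_event helper are our own names, added so the logic can be tested without a live connection.

```python
import json
from typing import Protocol


class TextWebSocket(Protocol):
    """Anything with an async send_str(), e.g. aiohttp's ClientWebSocketResponse."""

    async def send_str(self, data: str) -> None: ...


def input_audio_append_event(audio_b64: str) -> str:
    """Serialize one input_audio_buffer.append event for the Realtime API."""
    # Forwarded untouched: still base64, still g711_ulaw as Twilio sent it.
    return json.dumps({"type": "input_audio_buffer.append", "audio": audio_b64})


async def send_to_openai(openai_ws: TextWebSocket, audio_b64: str) -> None:
    await openai_ws.send_str(input_audio_append_event(audio_b64))
```

No decoding happens here because the session is configured for g711_ulaw input, the same format Twilio Media Streams deliver.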

The update_default_session function reconfigures the default session with our specifications. It sets a system message with behavioral instructions for the Realtime model, then picks a voice at random from a list of supported ones. This way, you hear a different AI voice each time the app starts up, and you can then choose which one you prefer and stick with it. Finally, it crafts the event object with the fields we want to update:

- modalities: A list of options the model can respond with. Note that “text” is required.

- input_audio_format and output_audio_format: Specify an audio format of g711_ulaw, which both Twilio and the OpenAI Realtime API support.

- turn_detection: Enables server-side Voice Activity Detection (VAD). Setting this field to type: server_vad lets the model automatically detect when the caller starts and stops speaking, so it knows when to respond.

Azure AI Services

The next step is to define a class to configure Azure’s speech recognition and pronunciation assessment.

Add the following code to your speech_utils.py file:

The AzureSpeechRecognizer class bundles all the logic needed for speech recognition and assessment. Each instance, such as the speech_recognizer object you’ll create in main.py, starts with an empty list that will eventually hold the final results of the speech analysis.

The configure() method sets up speech recognition and pronunciation assessment. The send_to_azure() method is called once for each incoming audio chunk and writes that chunk to the configured input stream.
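Twilio delivers 8 kHz, 8-bit mu-law audio, which OpenAI accepts directly as g711_ulaw. The sketch below assumes you configure Azure’s push stream for 16-bit linear PCM instead; the article only says each service gets audio in a format it supports. One stdlib-only way to convert is the standard G.711 mu-law expansion. Python’s audioop module used to provide this, but it was removed in Python 3.13, so a small lookup table avoids that dependency.

```python
import base64
import struct


def ulaw_byte_to_pcm16(u: int) -> int:
    """Expand one G.711 mu-law byte to a signed 16-bit linear sample."""
    u = ~u & 0xFF  # mu-law bytes are stored bit-inverted
    sign = u & 0x80
    exponent = (u >> 4) & 0x07
    mantissa = u & 0x0F
    magnitude = (((mantissa << 3) + 0x84) << exponent) - 0x84  # 0x84 is the bias
    return -magnitude if sign else magnitude


# Precompute all 256 values once; decoding is then a table lookup per byte.
_ULAW_TABLE = [ulaw_byte_to_pcm16(i) for i in range(256)]


def twilio_payload_to_pcm16(payload_b64: str) -> bytes:
    """Decode a Media Streams base64 payload into little-endian 16-bit PCM."""
    ulaw = base64.b64decode(payload_b64)
    samples = [_ULAW_TABLE[b] for b in ulaw]
    return struct.pack(f"<{len(samples)}h", *samples)
```

In the Speech SDK, this would pair with a push stream created with an AudioStreamFormat of 8000 samples per second, 16 bits per sample, and one channel. Each decoded chunk would then go to the stream’s write() method. Confirm the exact setup against the SDK reference.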

Meanwhile, start_recognition() and stop_recognition() run asynchronously to initiate and halt the continuous recognition process. This happens in the background so other tasks can run in parallel. Lastly, we define callback methods for specific events fired by the speech recognizer.

And that wraps up the logic for speech_utils.py.

Bringing it all together

It’s time to set up the WebSocket endpoint to receive and process audio from Twilio Media Streams. For that, we go back to the route setup in the main.py file.

First, update the import statements and instantiate a speech_recognizer object:

Now, add this block of code right below the /voice route:

This endpoint establishes two WebSocket connections: one with FastAPI’s WebSocket API (twilio_ws) and another with the aiohttp library (openai_ws). aiohttp integrates well with asyncio, allowing your application to keep everything non-blocking during a concurrent stream.

Upon successfully connecting to the Realtime API, the endpoint defines two coroutines:

- receive_twilio_stream: Listens for incoming messages from Twilio and acts based on the message type:

  - connected: Starts the Azure speech recognition process.

  - start: Captures and sets the stream_sid, which we’ll use to send the AI response back to the caller.

  - media: Sends the audio to OpenAI and Azure in the formats each one supports.

  - stop: Logs a message to the console when the stream ends.

- send_ai_response: Also listens and acts on specific events, this time from OpenAI:

  - error: Logs errors to the console so they’re easier to debug.

  - input_audio_buffer.speech_started: Manages interruptions by sending a clear message to the Twilio stream. The Realtime server sends this event when it detects that the caller has started speaking.

  - response.audio.delta: Processes the audio chunks and forwards them to Twilio for playback. It also sends a mark message that signals when each chunk of audio is complete. The Realtime server sends this event for each chunk of the model’s audio response.
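The routing logic of both coroutines can be sketched as pure functions, which makes it testable without live sockets. The message shapes follow Twilio Media Streams (start.streamSid, media.payload) and the Realtime API’s beta event names used in this tutorial. The (destination, payload) action tuples and the "responsePart" mark name are hypothetical conventions of this sketch. In the real coroutines, each action would become a send on the corresponding WebSocket or an Azure call.

```python
import json


def handle_twilio_message(raw: str, state: dict) -> list[tuple[str, str]]:
    """Route one Media Streams message; return (destination, payload) actions."""
    msg = json.loads(raw)
    event = msg.get("event")
    actions: list[tuple[str, str]] = []
    if event == "connected":
        actions.append(("azure", "start_recognition"))
    elif event == "start":
        state["stream_sid"] = msg["start"]["streamSid"]  # needed to address replies
    elif event == "media":
        payload = msg["media"]["payload"]  # base64 mu-law, 8 kHz mono
        actions.append(("openai", payload))  # forwarded as g711_ulaw
        actions.append(("azure", payload))  # decode to PCM first if Azure expects it
    elif event == "stop":
        print("Twilio stream stopped")
    return actions


def handle_openai_event(raw: str, stream_sid: str) -> list[str]:
    """Turn one Realtime server event into messages for the Twilio WebSocket."""
    evt = json.loads(raw)
    kind = evt.get("type")
    if kind == "error":
        print("OpenAI error:", evt.get("error"))
        return []
    if kind == "input_audio_buffer.speech_started":
        # Caller barged in: drop any AI audio Twilio still has buffered.
        return [json.dumps({"event": "clear", "streamSid": stream_sid})]
    if kind == "response.audio.delta":
        media = {"event": "media", "streamSid": stream_sid, "media": {"payload": evt["delta"]}}
        # Twilio echoes a mark back once the audio queued before it has played.
        mark = {"event": "mark", "streamSid": stream_sid, "mark": {"name": "responsePart"}}
        return [json.dumps(media), json.dumps(mark)]
    return []  # other event types are ignored
```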

I know it’s been quite the journey, but you’re almost there! Just one final piece remains…

Send feedback via WhatsApp

To send a WhatsApp message from your server, you need to activate the Twilio Sandbox for WhatsApp.

From the Twilio console, under the Develop tab, go to:

Explore products > Messaging > Try WhatsApp

If a dialog box pops up, review and accept the terms, then click Confirm to continue.

On the next screen, follow the setup instructions to activate the Sandbox. You’ll need to send a “join” message to the provided Sandbox number before you can begin sending WhatsApp messages. Once the message is received, Twilio will reply confirming that the Sandbox is active.

You should see your To and From WhatsApp numbers on the Twilio console after you have connected to the sandbox. To avoid exposing phone numbers on a platform like GitHub, add them to your .env file:

Remember, main.py already loads these variables (TWILIO_SANDBOX_NUMBER and WHATSAPP_PHONE_NUMBER) in the import step earlier in the tutorial.

Next, send another message (e.g., “Hello!”) to begin a user-initiated conversation. This allows you to send a message from your server without having to use one of Twilio’s pre-configured templates.

Once this is done, add the code below to main.py to send the results to the caller:

Remember the status_callback attribute we specified on connect.stream() earlier in the /voice route? Well, Twilio sends a request to the specified URL under two conditions: when the stream starts and when it stops. We only want to take action on one of them: stream-stopped.

When the request meets this condition, we loop through the results and craft them in a readable format for the message body. Make sure to replace the text following whatsapp: in both the from_ and to fields with the appropriate values.

With all of the right information in place, we send the results to the caller. The /message-status route logs the message status, whether it was successfully delivered or encountered an error.
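The route’s body isn’t shown, so below is a sketch of what such logging could look like. It assumes the message was created with a status callback pointing at /message-status. MessageSid, MessageStatus, and ErrorCode are form fields Twilio includes in message status callbacks. The describe_message_status helper and its output format are our own.

```python
FAILED_STATUSES = {"failed", "undelivered"}


def describe_message_status(form: dict[str, str]) -> str:
    """Summarize one Twilio message status callback for the console."""
    sid = form.get("MessageSid", "unknown")
    status = form.get("MessageStatus", "unknown")
    if status in FAILED_STATUSES:
        # ErrorCode explains why delivery failed; look it up in Twilio's error docs.
        return f"Message {sid} {status} (error code {form.get('ErrorCode', 'n/a')})"
    return f"Message {sid} status: {status}"
```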

Test your app

With ngrok and your Twilio phone number already configured, it’s time to run the app. Ensure your virtual environment is active, and then start the server by running:

If everything is set up correctly, you should see a log of the startup process in your terminal. Now, place a call to your Twilio phone number, and you should hear the greeting message from the /gather route, prompting you to select a language.

Once you do, the AI voice will respond in your selected language. As you speak, you will also see an assessment of your side of the conversation printed to the terminal in real time!

When you’re done talking, hang up the phone, and you should receive your analysis results on WhatsApp.

Troubleshooting

Twilio trial accounts come with certain limitations that may cause errors in your app. The Free Trial Limitations page provides more information on how this might affect your setup during testing.

If you’re running into issues not related to Twilio, try tweaking your setup at different points to detect what the problem might be. For instance, if you’re getting an AI response but no speech recognition output, temporarily suspend the open_ai task to narrow down the problem.
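One low-effort way to suspend a task is to start the stream coroutines through a small switchboard, so you can disable one without deleting code. This is a sketch with hypothetical task names. The article’s coroutines would be passed in as the factories.

```python
import asyncio
from typing import Awaitable, Callable

# Each entry maps a label you can toggle to a coroutine factory.
TaskFactory = Callable[[], Awaitable[None]]


async def run_enabled(tasks: dict[str, TaskFactory], enabled: set[str]) -> list[str]:
    """Run only the enabled coroutines concurrently; return the names that ran."""
    chosen = [name for name in tasks if name in enabled]
    await asyncio.gather(*(tasks[name]() for name in chosen))
    return chosen
```

For example, leaving "open_ai" out of the enabled set isolates the Azure path. If recognition output then appears, the problem lies in how the two tasks interact, not in the Azure setup itself.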

The server setup also includes a fair bit of error handling, so paying attention to exceptions logged to the console could help resolve some issues.

Conclusion

That’s it! You have successfully built an AI Voice app that evaluates your pronunciation skills and provides real-time feedback and post-call analysis.

Now that you have your very own AI Voice coach, you can extend your app’s functionality by adding a few extra features, like:

- Speech emotion analysis to tailor feedback based on tone.

- Detailed breakdown of assessment scores. The Azure AI Speech documentation on pronunciation assessment results describes the key scores and is a good place to start.

This tutorial builds on Paul Kamp’s article: Build an AI Voice Assistant with Twilio Voice, the OpenAI Realtime API, and Python. Shout-out to the Twilio Developer Voices team for laying a solid foundation for building AI Voice apps with Twilio and the OpenAI Realtime API.

Danny Santino is a software developer and a language learning enthusiast. He enjoys building fun apps to help people improve their speaking skills. You can find him on GitHub and LinkedIn.
