ConversationRelay Architecture for Voice AI Applications Built on AWS using Fargate and Bedrock


Enterprises have realized there is huge potential in voice-backed AI, or “agentic”, applications. Many new companies have sprung into existence offering Agentic-Applications-as-a-Service (has AAaaS been coined yet?) while other companies are experimenting and building their own.

While connecting a simple AI application to a voice channel is relatively straightforward, building a production-grade agentic application isn’t trivial. Achieving human-like latency, managing the conversation flow (for example, handling interruptions or tool calls), and keeping the conversation engaging all require voice-specific optimizations. These experiences are, or soon will be, a primary business-to-customer interface, and it will make sense for many enterprises to control them end-to-end. For those enterprises, outsourcing this functionality will not be appealing.

The purpose of this post is to show how you could build an agentic voice experience using Twilio ConversationRelay, AWS Fargate, and AWS Bedrock. The post links to a repo and includes instructions for setting up a proof-of-concept application.

What do you need for a production AI Agent?

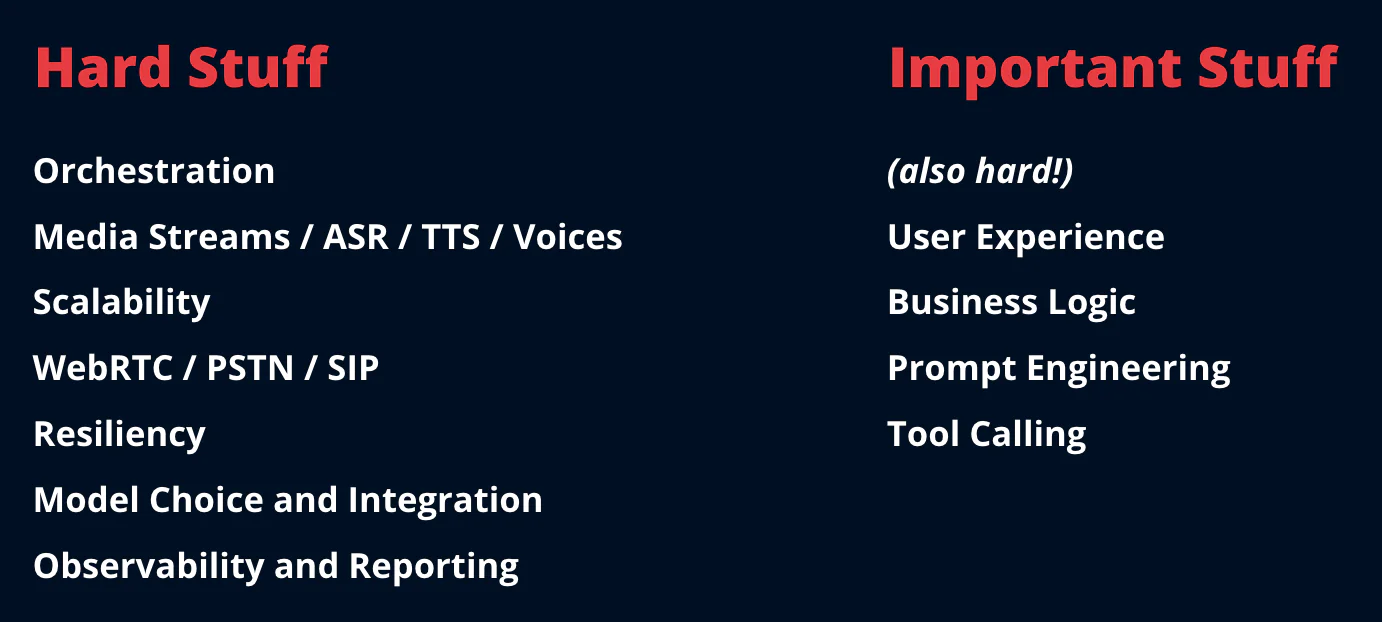

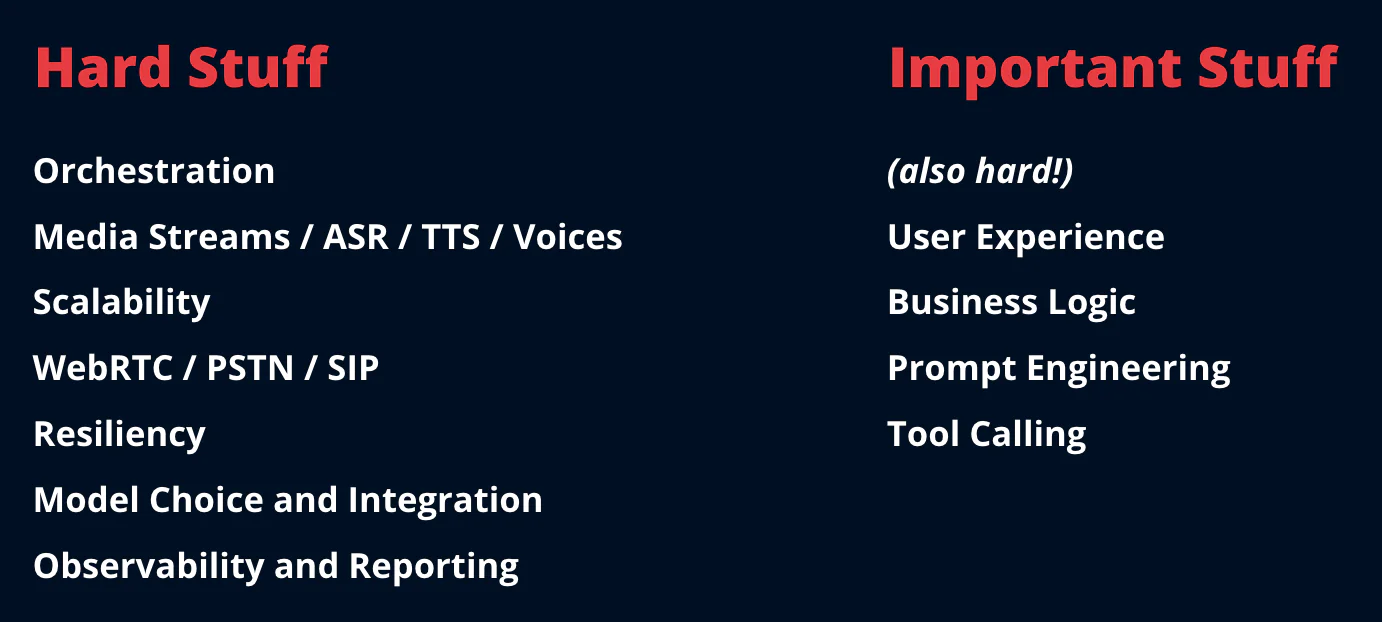

Before we get to the code, let’s go over five key areas to focus on when building production scale agentic applications:

- ability to scale

- ease of development

- the right STT (speech-to-text) & TTS (text-to-speech) providers for the task

- the right LLM for the task

- acceptable latency

Ability to scale

The adoption of agentic experiences is increasing rapidly and shows no signs of slowing down. And as the experiences get better, the flywheel will keep turning – your customers are going to expect a new, higher level of service. Your application will need to be able to handle this new demand as adoption and use cases increase. Twilio and AWS can help.

Twilio has been powering voice calls over APIs and software since 2008, and has a robust platform for enterprise voice applications.

Voice was Twilio’s first channel, and we now power billions of minutes of voice applications across the PSTN, SIP, and WebRTC with our Voice SDKs.

Read our 2024 Cyber Week Recap to get a sense of the voice traffic (as well as messaging and email traffic) Twilio handles.

AWS pioneered the cloud computing revolution and powers applications all across the globe. Their Well-Architected Framework guides and influences modern application development.

There are clear benefits to building on platforms with proven track records of providing resilient and reliable services and APIs.

Using Twilio and AWS to build and power your voice AI applications would be a good choice!

Ease of development

The agentic stack has many components. While it is possible to build them all yourself, it can make sense to let platforms like AWS and Twilio handle the elements they are good at, so your development efforts can focus on the components that differentiate your business.

AWS and Twilio can help you offload the boilerplate so you can speed up the time to deployment and value when you are testing and building a solution. Both AWS and Twilio are known for their excellent documentation and developer-friendly APIs and design patterns.

User experience, business rules, prompt engineering, LLM choice, guardrails, tool calling and the customer experience elements are where you should spend the majority of your development efforts.

The right STT and TTS for the task

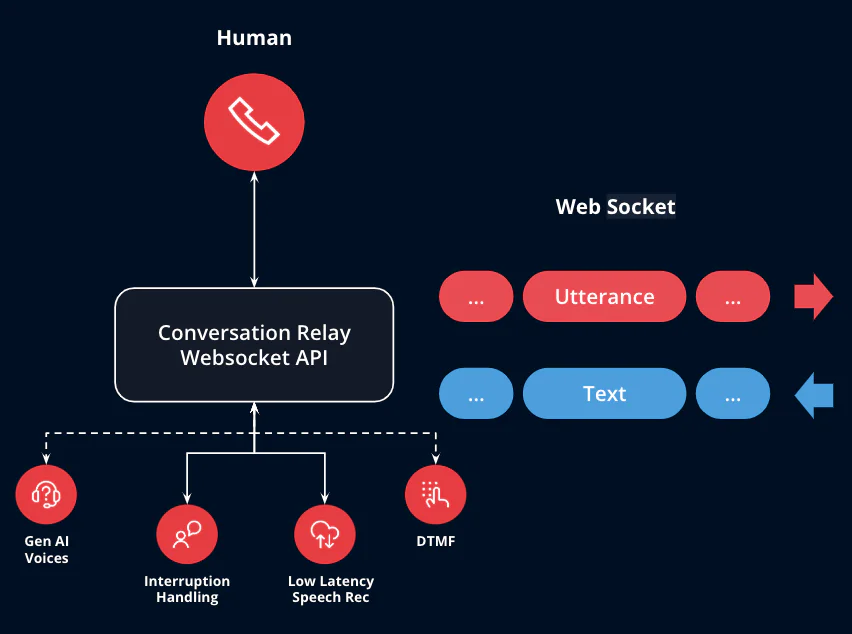

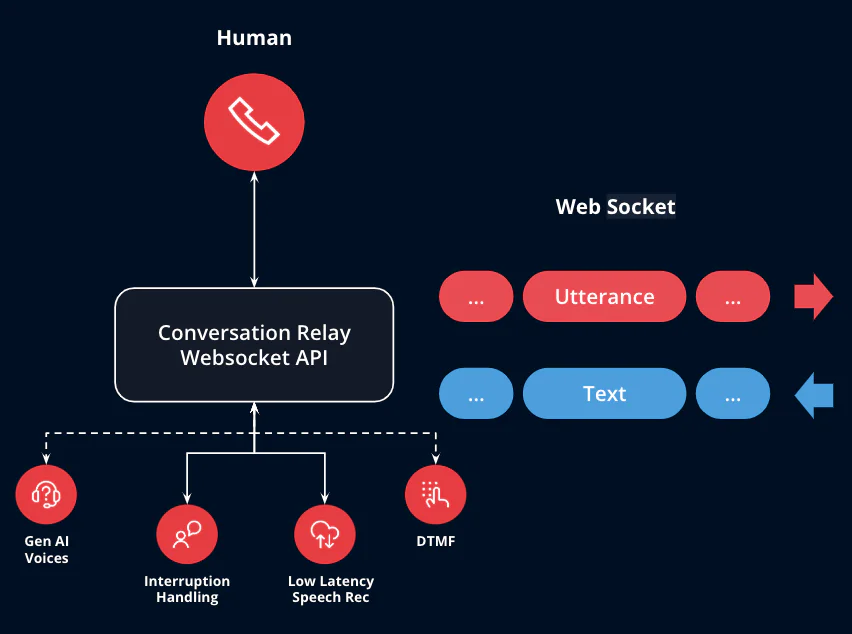

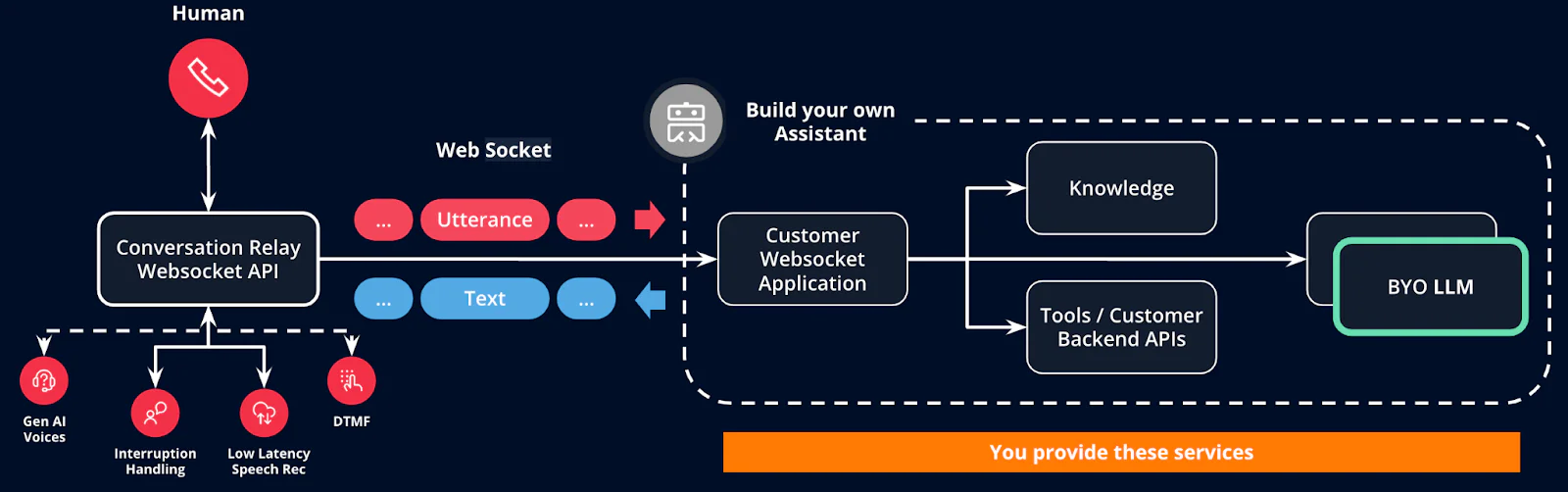

Twilio’s ConversationRelay orchestrates speech-to-text and text-to-speech and provides key functionality like interruption handling. Your application only needs to receive and process the caller’s transcribed text, then stream your application’s generated (or retrieved) text back to us.
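To make this division of labor concrete, here is a minimal sketch of how an application might react to the JSON messages ConversationRelay sends over the WebSocket. We use JavaScript because the reference app is Node.js. The message types and fields (`setup`, `prompt` with `voicePrompt`, `interrupt` with `utteranceUntilInterrupt`, `dtmf`, `error`) follow Twilio’s ConversationRelay docs as we understand them. The `session` object, the `handleRelayMessage` name, and the returned `action` values are hypothetical and are not taken from the repo.

```javascript
// Minimal sketch: route incoming ConversationRelay messages to app actions.
// Message fields follow the ConversationRelay docs; the { action } objects
// returned here are hypothetical and app-specific.
function handleRelayMessage(raw, session) {
  const msg = JSON.parse(raw);
  switch (msg.type) {
    case "setup":
      // First message on the socket: remember call metadata for this session.
      session.callSid = msg.callSid;
      session.from = msg.from;
      return { action: "none" };
    case "prompt":
      // Transcribed caller speech: add it to history and ask the LLM to reply.
      session.history.push({ role: "user", content: msg.voicePrompt });
      return { action: "callLlm" };
    case "interrupt": {
      // The caller barged in. Keep only the part of the last reply they heard.
      const last = session.history[session.history.length - 1];
      if (last && last.role === "assistant") {
        last.content = msg.utteranceUntilInterrupt;
      }
      return { action: "cancelLlm" };
    }
    case "dtmf":
      return { action: "dtmf", digit: msg.digit };
    case "error":
      return { action: "log", description: msg.description };
    default:
      return { action: "none" };
  }
}
```

In a real app, you would call this from your WebSocket server’s message handler and act on the returned action. Trimming the last assistant turn on `interrupt` keeps the LLM’s history consistent with what the caller actually heard.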

Twilio has partnered with world-class providers such as AWS, Google, Deepgram, and ElevenLabs, and has provisioned and optimized capacity with providers to optimize for latency.

As speech-to-text and text-to-speech providers rapidly improve and evolve, Twilio is continually evaluating new releases from them as well as adding to our existing providers. And best of all, we leave the choice of providers and configurations ( voices and language for example) completely up to you… and configurable during each session.
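Because provider and voice are chosen per session, one way to apply that choice is to build the `<ConversationRelay>` TwiML for each call from a configuration object. This sketch assumes the `url`, `welcomeGreeting`, `language`, `ttsProvider`, `voice`, and `transcriptionProvider` attributes of the `<ConversationRelay>` noun. The function names are hypothetical, and any voice name you pass in should come from Twilio’s current voice list.

```javascript
// Minimal sketch: build per-call ConversationRelay TwiML from a config object.
// Attribute names come from the <ConversationRelay> TwiML noun; the helper
// names are hypothetical.
function escapeXml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

function buildConversationRelayTwiml(opts) {
  const attrs = {
    url: opts.wsUrl,
    welcomeGreeting: opts.welcomeGreeting,
    language: opts.language,
    ttsProvider: opts.ttsProvider,
    voice: opts.voice,
    transcriptionProvider: opts.transcriptionProvider,
  };
  const attrString = Object.entries(attrs)
    .filter(([, v]) => v !== undefined) // omit unset attributes so Twilio defaults apply
    .map(([k, v]) => `${k}="${escapeXml(v)}"`)
    .join(" ");
  return `<?xml version="1.0" encoding="UTF-8"?><Response><Connect><ConversationRelay ${attrString} /></Connect></Response>`;
}
```

Your `/twiml` webhook could return this string with a `text/xml` content type. Changing the provider or voice for a given caller then only means passing different values into the config object.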

The right LLM for the task

LLMs are also changing at a rapid pace, and your application needs to be able to switch models and providers to take advantage of improved features, latency, and cost as the ecosystem evolves.

AWS Bedrock is a compelling choice for many reasons. For starters, it is already in your AWS accounts and can be provisioned within your company’s existing infrastructure.

AWS Bedrock allows you to choose the LLM model your application needs based on your analysis of the cost, performance, and functionality of models in the catalog.

As this space matures, it is becoming clearer that while some agentic experiences may require the latest models, other experiences could be well served by lower cost models. Bedrock allows you the flexibility of trying different models via configuration so you can optimize your application for cost, latency, and function.

In addition you can control model outputs using guardrails and maintain observability for model output and for model distillation and optimization.
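Switching models through configuration can be as simple as reading the model ID from the environment when you build each request. The sketch below assembles the input for the Bedrock Runtime `ConverseStream` API, using the `modelId`, `system`, `messages`, and `inferenceConfig` fields, plus an optional `guardrailConfig`. The environment variable names, the default model, and the inference settings are our assumptions for illustration. They are not values from the repo.

```javascript
// Minimal sketch: build a Bedrock ConverseStream request from configuration so
// the model can be swapped without code changes. BEDROCK_MODEL_ID and the
// guardrail variable names are hypothetical; field names follow the Converse API.
function buildConverseStreamInput(history, systemPrompt, env) {
  const input = {
    // Example default only: use any model or inference profile ID enabled in your account.
    modelId: env.BEDROCK_MODEL_ID || "amazon.nova-lite-v1:0",
    system: [{ text: systemPrompt }],
    messages: history.map((m) => ({ role: m.role, content: [{ text: m.content }] })),
    // Illustrative settings: short replies keep text-to-speech responsive on a call.
    inferenceConfig: { maxTokens: 300, temperature: 0.3 },
  };
  if (env.BEDROCK_GUARDRAIL_ID) {
    // Attach a Bedrock guardrail to control model outputs.
    input.guardrailConfig = {
      guardrailIdentifier: env.BEDROCK_GUARDRAIL_ID,
      guardrailVersion: env.BEDROCK_GUARDRAIL_VERSION || "DRAFT",
    };
  }
  return input;
}
```

With the AWS SDK for JavaScript v3, you would pass the result to `new ConverseStreamCommand(input)` from `@aws-sdk/client-bedrock-runtime` and send it with a `BedrockRuntimeClient`. Because the model ID is just a string, trying a lower-cost model becomes a configuration change.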

Acceptable latency

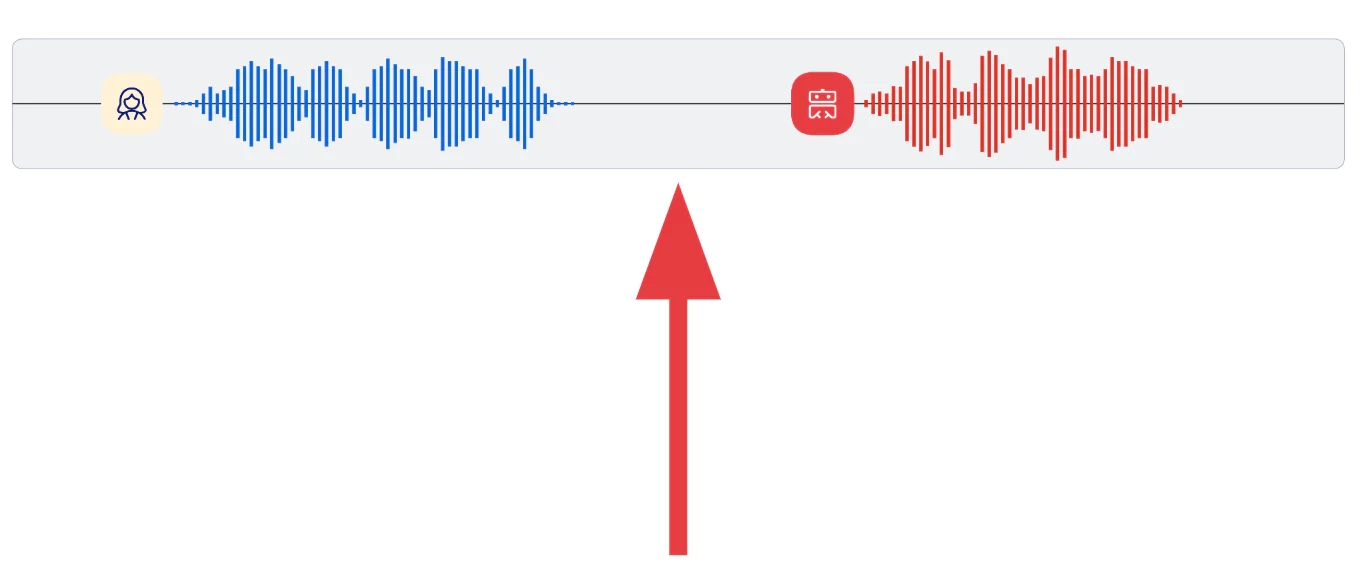

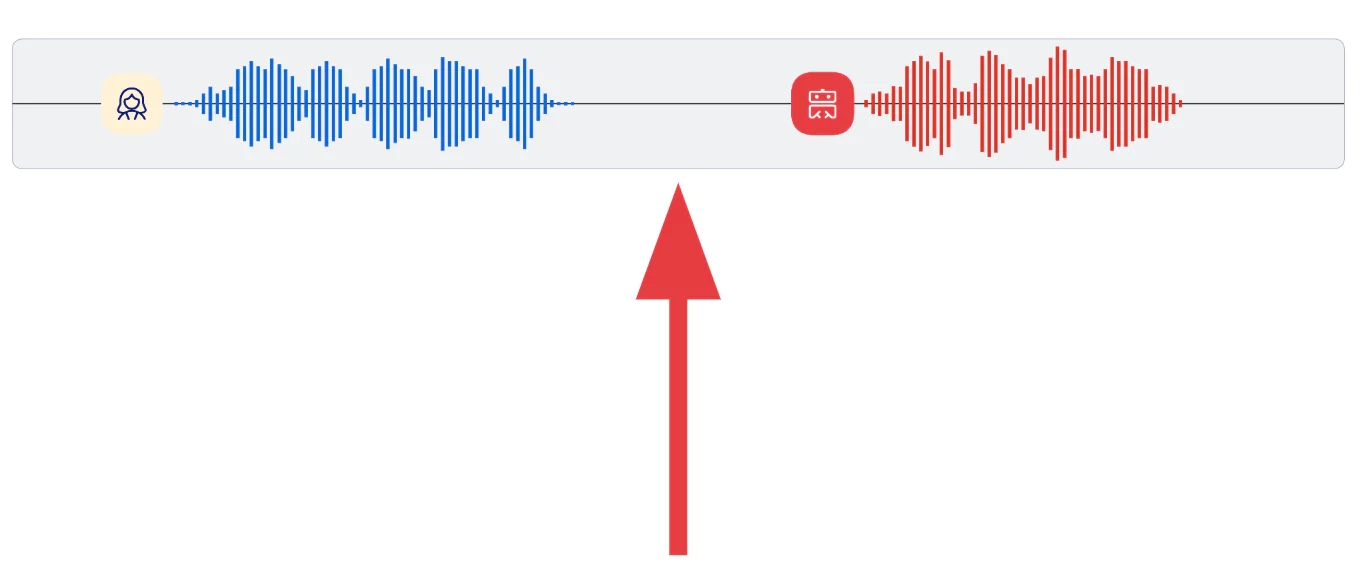

None of the four previous points will matter if you cannot minimize the time it takes for your application to start speaking a response back to your user, indicated by the red arrow in the screenshot.

Minimizing this time gap is crucial to providing agentic experiences that achieve human-level latency.

The reference design will enable you to build agentic applications that can perform with human-level latency by leveraging Twilio’s Voice platform and ConversationRelay combined with a highly available and performant Docker application hosted on AWS Fargate.
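This minimal sketch records the part of that gap your application controls: the time from a caller’s `prompt` message arriving to your first `text` token going back to ConversationRelay. It assumes you hook it into your own message handling. It does not measure speech-to-text or text-to-speech time, and the class and method names are hypothetical.

```javascript
// Minimal sketch: measure app-side turn latency, from a final "prompt" message
// to the first "text" token sent back. The clock is injectable for testing.
class TurnLatencyTracker {
  constructor(now = () => performance.now()) {
    this.now = now;
    this.promptAt = null;
    this.samples = [];
  }

  onPrompt() {
    this.promptAt = this.now();
  }

  onFirstToken() {
    if (this.promptAt === null) return; // no pending turn, or already recorded
    this.samples.push(this.now() - this.promptAt);
    this.promptAt = null;
  }

  percentile(p) {
    if (this.samples.length === 0) return undefined;
    const sorted = [...this.samples].sort((a, b) => a - b);
    // Nearest-rank method: smallest sample with at least p% of samples at or below it.
    const rank = Math.ceil((p / 100) * sorted.length);
    return sorted[Math.min(sorted.length, Math.max(1, rank)) - 1];
  }
}
```

Call `onPrompt()` when a `prompt` message arrives and `onFirstToken()` just before sending the first `text` message of the reply. A high percentile such as `percentile(95)` tends to reveal slow turns that an average hides.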

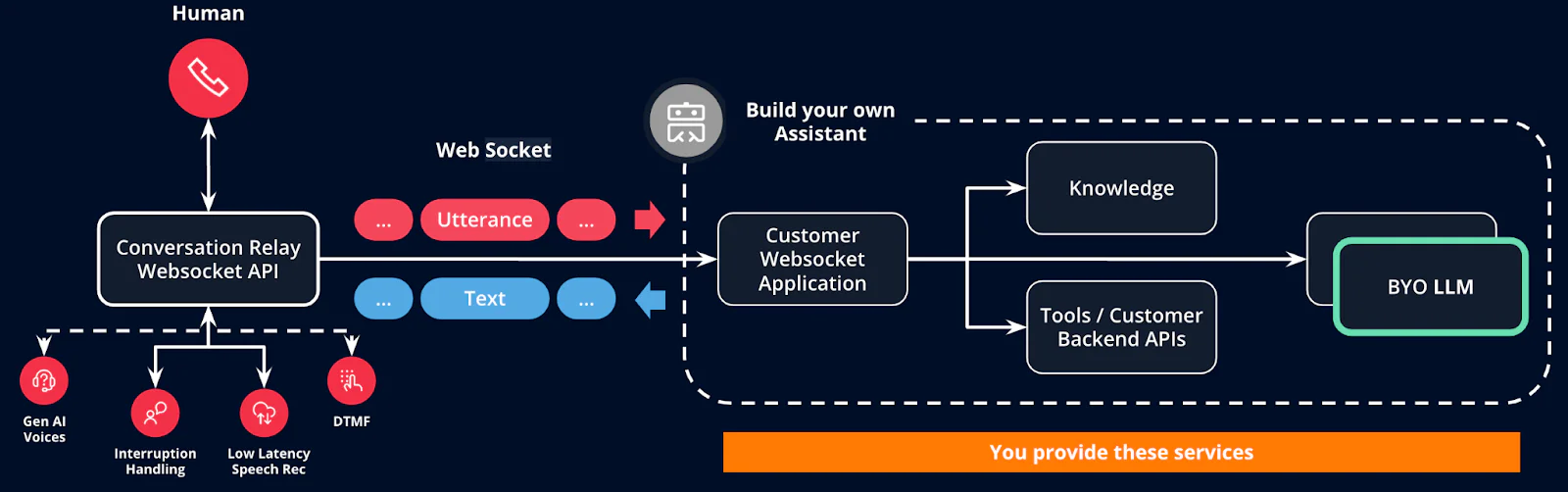

We have already covered how ConversationRelay manages and optimizes the speech-to-text and text-to-speech components for you. Twilio also handles the key voice interfaces (WebRTC, PSTN, and SIP), but you still need to build the application (the orange box labeled “You Provide these services” in the diagram).

This post’s application uses Docker and AWS Fargate with an Application Load Balancer to deliver a highly performant and resilient application.

Prerequisites

This is not a beginner-level build! You should be comfortable with the concepts presented so far and have the programming and command-line skills to spin up this demo application.

- Twilio Account. If you don’t yet have one, you can sign up for a free account here

- A Twilio Phone Number with Voice capabilities

- AWS account with permissions to:

  - AWS Bedrock

  - AWS DynamoDB

  - ECR

  - Fargate

- AWS CLI installed

- AWS SAM CLI installed

- Node.js installed on your computer. (We tested with versions higher than Node 22)

- Docker on your local machine to build and deploy images

- ngrok or a similar tunneling service to forward requests from Twilio to your local machine

ConversationRelay architecture with AWS Fargate and Bedrock

This application is intended to be straightforward to build and deploy, and serve as the foundation for something that you could take into production.

The application uses Node.js and Express.js, and can be run locally.

We use AWS DynamoDB as the persistence layer and AWS Bedrock for LLM calls. These two services need to be configured for your build of this application, but you could certainly swap them out for different options.

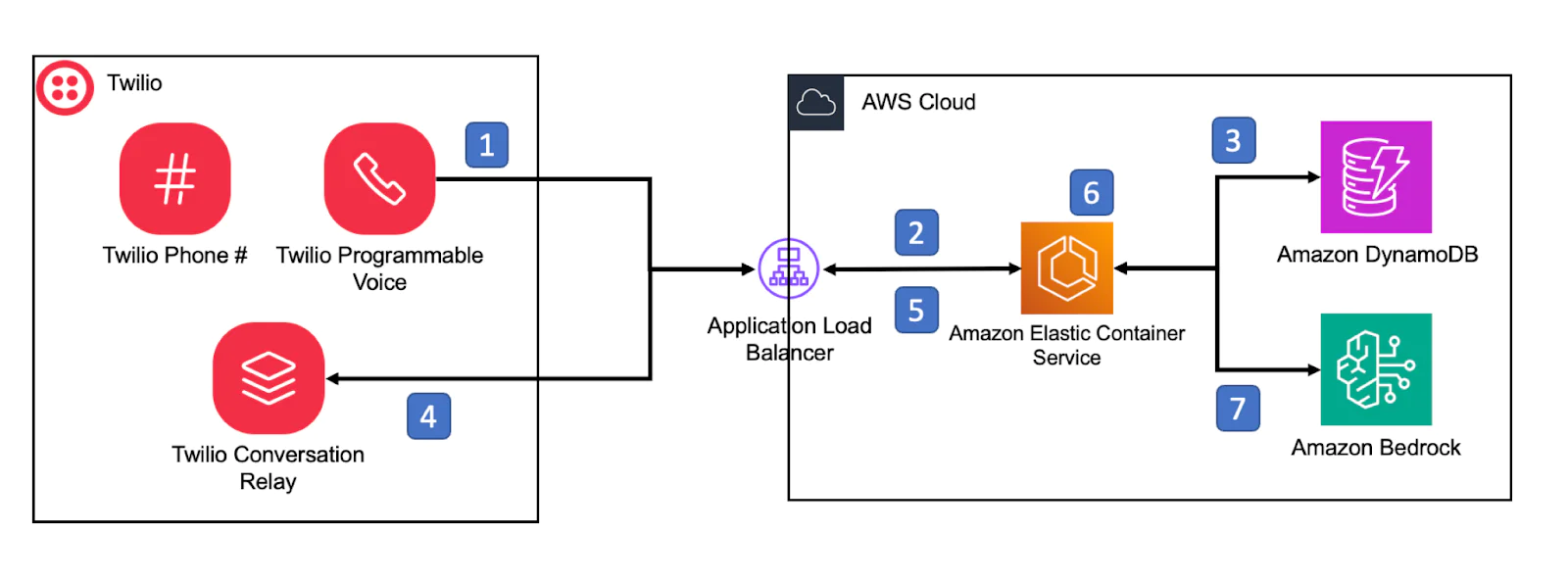

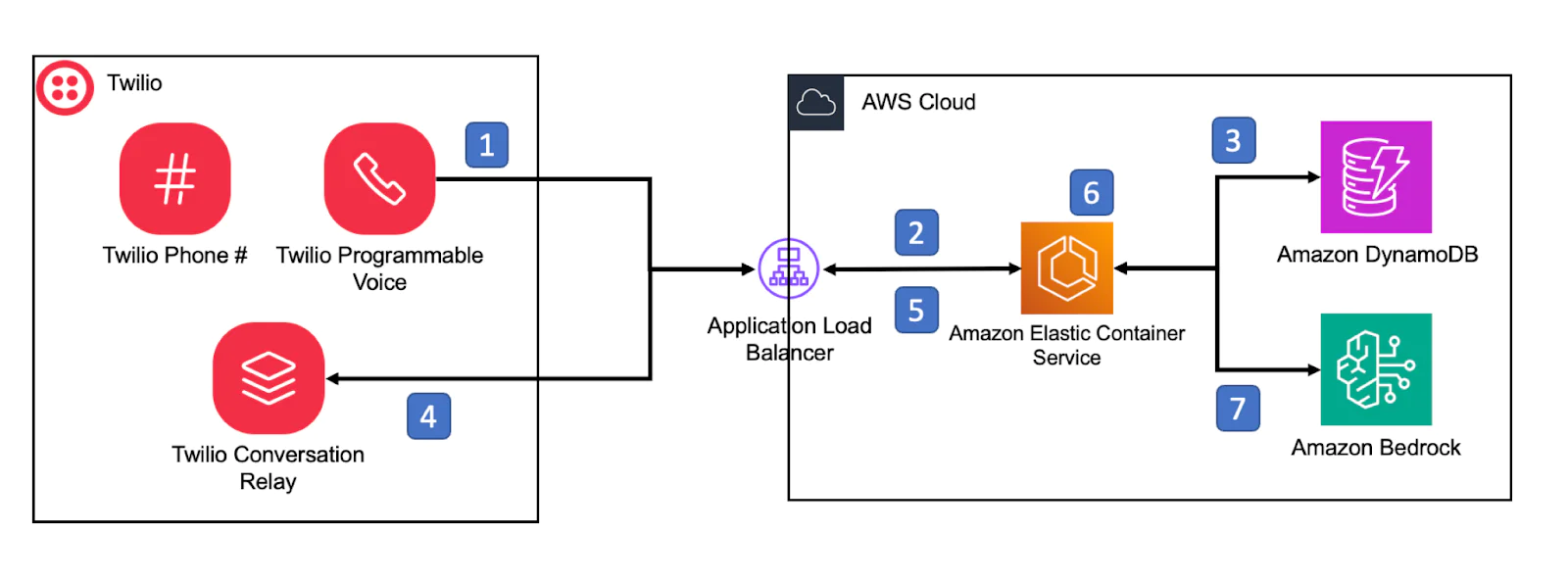

The application is deployed using Docker and Fargate. Here is a high level overview of the entire reference architecture:

1. Twilio Voice Infrastructure: Leverage Twilio’s CPaaS capabilities to connect to your customers. Inbound calls are routed to a REST API that establishes the ConversationRelay session using Programmable Voice and TwiML (the Twilio Markup Language).

2. TwiML Response: Respond to a new inbound call with TwiML. This initial step can use the caller’s context to personalize the session.

3. Datasource: Production applications need capable databases to maintain state. The reference application uses DynamoDB.

4. ConversationRelay: The TwiML from step 2 establishes a unique WebSocket session, and ConversationRelay is ready to convert the caller’s speech to text and send that text to your application. It is also ready to receive streams of text from your application and speak them to the caller using the specified text-to-speech provider.

5. WebSocket API: ConversationRelay sends the caller’s transcribed text to your application, where you can handle it as events. The LLM streams text responses back to your application, which can in turn stream the text “chunks” back to ConversationRelay to be converted into speech.

6. Business Application: This reference application uses Node.js and Express.js deployed as Docker images to AWS Fargate.

7. LLM: The reference application makes LLM calls using AWS Bedrock.
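In the WebSocket API flow described above, streaming is what keeps latency low, because each chunk can be forwarded as soon as the LLM produces it. Here is a minimal sketch that assumes ConversationRelay’s outbound `text` message format (`type`, `token`, `last`) and a `send` function standing in for your WebSocket’s `send`. The optional `AbortSignal`, used to stop a reply when the caller interrupts, is our own addition, and `streamToRelay` is a hypothetical name.

```javascript
// Minimal sketch: forward streamed LLM text to ConversationRelay as "text"
// messages. `chunks` is any async iterable of strings; `send` stands in for
// the WebSocket's send method.
async function streamToRelay(chunks, send, signal) {
  let full = "";
  for await (const chunk of chunks) {
    if (signal && signal.aborted) break; // caller interrupted: stop sending
    if (!chunk) continue; // skip empty chunks
    full += chunk;
    // Send each chunk as soon as it arrives so text-to-speech can start early.
    send(JSON.stringify({ type: "text", token: chunk, last: false }));
  }
  if (!signal || !signal.aborted) {
    // Assumption: an empty token with last: true marks the reply as complete.
    send(JSON.stringify({ type: "text", token: "", last: true }));
  }
  return full; // the text actually sent, for the conversation history
}
```

Returning the full text lets you append the assistant’s turn to the conversation history once streaming ends.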

Let’s build it!

Download the code from this GitHub repo.

This application is designed to run locally using Docker or as a local Node application for development and testing, and it can be deployed as an x86 image on AWS Fargate for production use. We are providing this application as a sample application with no warranties or support for production.

The README.md in the GitHub repo outlines all the ways you can run the application. We recommend testing locally first and then deploying to Fargate.

Local Development

This guide is designed to get you going quickly with a local deployment of the infrastructure needed for the app and minimal resources used in the cloud. After you have a local version working, the README.md in the repo suggests how you could deploy the application using Docker or in the cloud using Fargate.

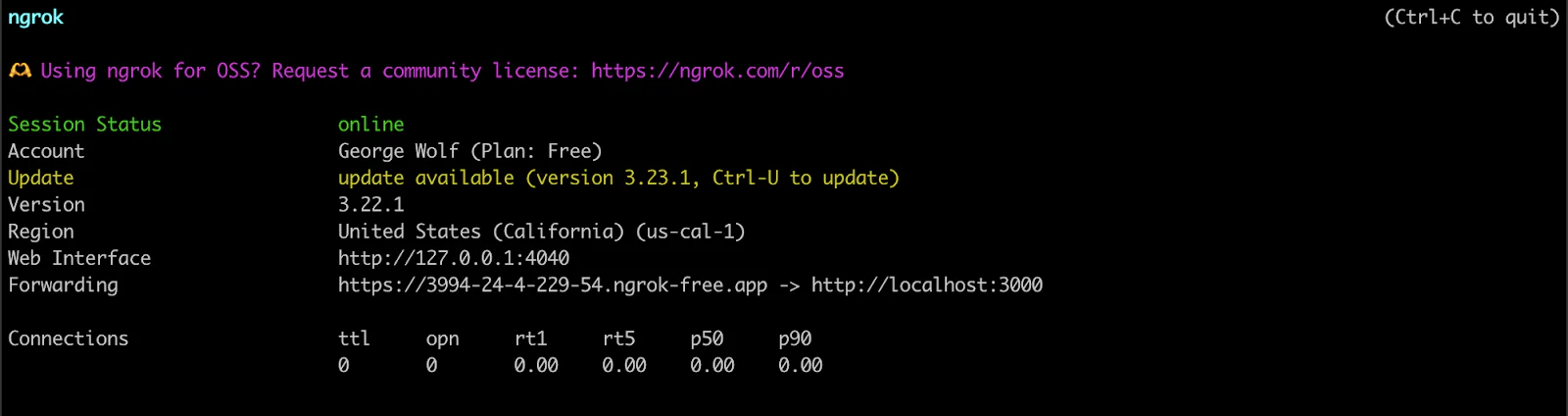

Step 1

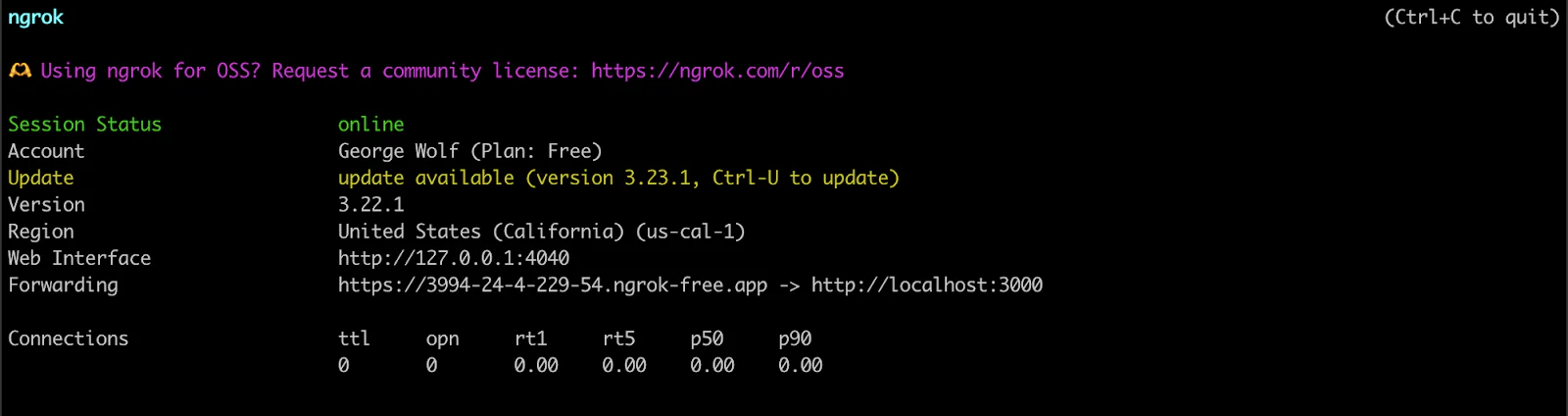

Start ngrok, forwarding to port 3000 on your machine. ngrok will give you a public internet URL for your proxy; make a note of it for the next steps:

ngrok http http://localhost:3000
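ngrok prints an https forwarding URL. The Twilio setup needs two URLs derived from it: the `/twiml` webhook used in Step 7 and a `wss://` URL for the ConversationRelay WebSocket. This small sketch uses the standard `URL` class. The `/ws` path and the `deriveTwilioUrls` name are hypothetical placeholders, so check the repo for the path its WebSocket server actually listens on.

```javascript
// Minimal sketch: derive the webhook and WebSocket URLs from the ngrok
// forwarding URL. The /twiml path comes from this post; /ws is a placeholder.
function deriveTwilioUrls(ngrokUrl, wsPath = "/ws") {
  const base = new URL(ngrokUrl);
  if (base.protocol !== "https:") {
    throw new Error("Use the https forwarding URL that ngrok prints");
  }
  const webhookUrl = new URL("/twiml", base).toString();
  const ws = new URL(wsPath, base);
  ws.protocol = "wss:"; // ConversationRelay connects over secure WebSockets
  return { webhookUrl, websocketUrl: ws.toString() };
}
```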

Step 2

Clone the Git repo from GitHub, if you have not already.

Ensure that the AWS CLI is installed and can run from your terminal.

Ensure that the model you will use in this demo is enabled for your AWS account in Bedrock.

Step 3

Deploy the cloud resources (DynamoDB, Bedrock, and IAM permissions) using AWS SAM: run sam build and then sam deploy, as shown in README.md.

Step 4

In the ConversationRelay-LiveChat-Bedrock-Fargate/app directory, install the Node dependencies needed to run the application:

npm install

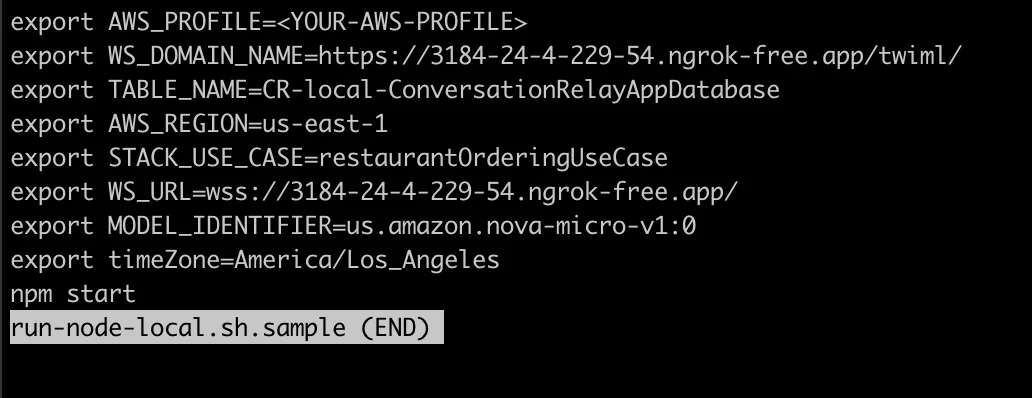

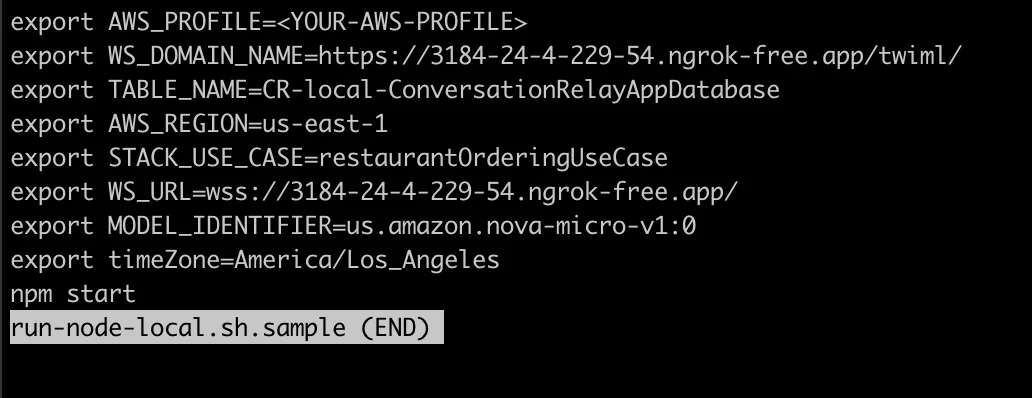

Then edit the run-node-local.sh .sample file in that directory so that it points to the resources you deployed and to ngrok on your machine, changing the following fields:

Step 5

Set the default use case prompts in the DynamoDB table this stack created. This enables the code to run one of the use cases we have predefined in the sample code. Here, we are going to deploy an application that simulates ordering from a pizza restaurant:

Step 6 (Optional)

Add a sample user profile to the application. This allows the application to address you by name, based on the phone number you are calling from.
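Under the hood, this kind of personalization comes down to a lookup keyed by the caller’s number. In this minimal sketch, a `Map` stands in for the DynamoDB profile table. The `{ firstName }` profile shape and the `greetingFor` name are hypothetical. Twilio includes the caller’s number as the `From` parameter of the incoming-call webhook, typically in E.164 format (such as `+15551234567`) for phone calls.

```javascript
// Minimal sketch: personalize the greeting from the caller's number.
// A Map stands in for the DynamoDB profile table; the profile shape is hypothetical.
function greetingFor(fromNumber, profiles, defaultGreeting) {
  const profile = profiles.get(fromNumber);
  if (!profile || !profile.firstName) return defaultGreeting; // unknown caller
  return `Hi ${profile.firstName}! ${defaultGreeting}`;
}
```

The resulting string could become the `welcomeGreeting` in the TwiML response, so the first words the caller hears already use their name.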

Step 7

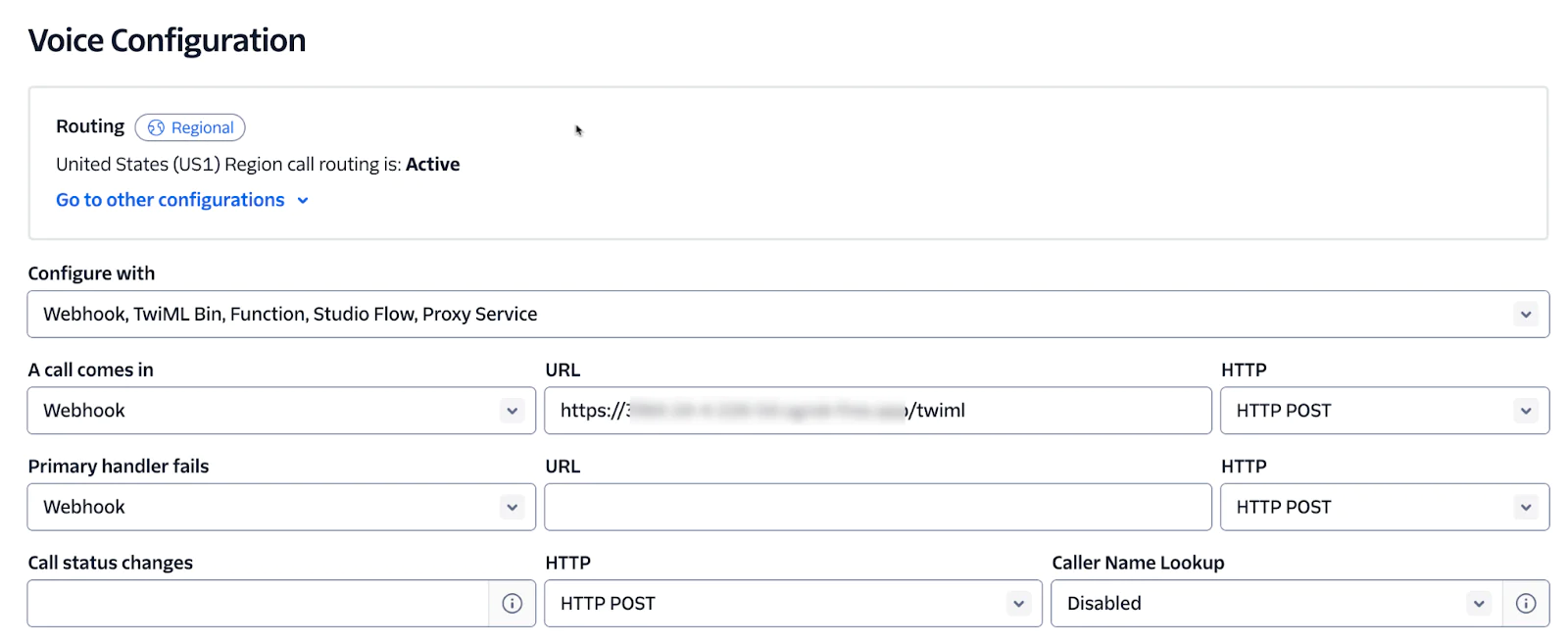

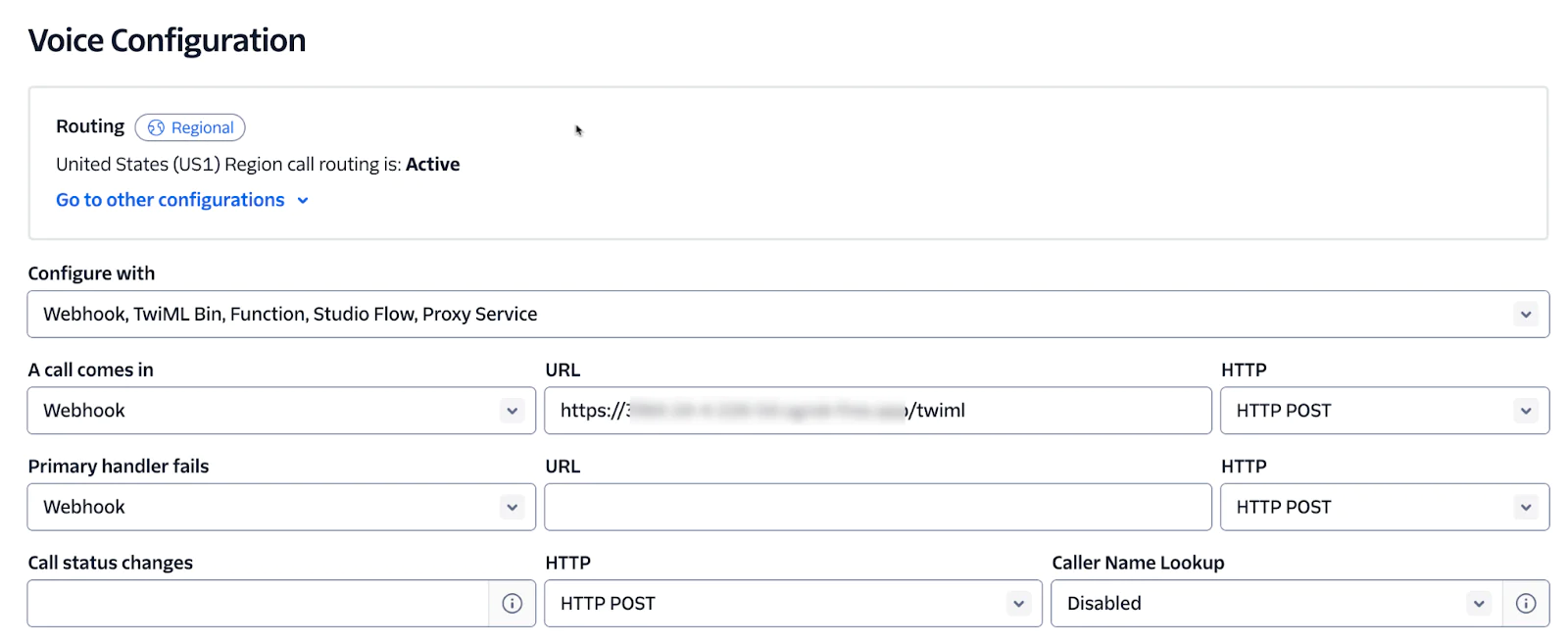

Connect your application to your Twilio Voice phone number:

Go to your phone number configuration screen and select Webhook under ‘A call comes in’ and then enter the URL:

https://<your ngrok proxy URI>/twiml

in the URL field.

Step 8

Run your application locally:

This will start the Express server and run the app locally on port 3000.

Step 9

Now, it’s time to test! Call your Twilio phone number to reach your application.

You should see application logs with the Welcome Greeting being played from the pizza ordering app. You can now interact with the LLM!

Try putting the app through its paces while watching the logs to get a feel for the build. Remember the elements we discussed at the beginning of this post: get a feel for the speech-to-text and text-to-speech capabilities, and for the strengths and weaknesses of your LLM choice. You’ll also naturally get a feel for latency from the pacing of the conversation.

(Try ordering a pizza from the restaurant, too. Just don’t expect a food delivery.)

Key Architectural Considerations

We needed to make several important architectural choices when building out this application. We highlight and explain our thought process below so that you can be prepared for the same choices while building your application.

LLM Performance Choices

The choice of LLM is very important to the use case you are implementing. While the latest model may be attractive, other factors like cost and latency need to be considered.

This reference application is designed to be able to call any LLM via API. You can look at app/lib/prepare-and-call-llm.mjs if you want to change this application to call a different LLM. If you choose to continue to use Bedrock, keep the following in mind:

- Reserved, on-demand, and provisioned capacity. Reserved capacity can offer significant cost savings over on-demand. Most voice applications will need provisioned capacity to optimize latency.

- LLM choice is crucial (and it’s crucial enough to repeat!). The Bedrock model catalog makes it straightforward to experiment with different providers and models.

- Use inference profiles, including cross-region inference profiles, to access available capacity across multiple regions.

- Log LLM interactions for performance monitoring and auditability.

- Use KMS to encrypt data at rest.
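If you plan to swap providers, it helps to keep provider-specific stream parsing in one place, for example alongside the code in app/lib/prepare-and-call-llm.mjs. This sketch assumes the event shapes of the Bedrock `ConverseStream` API: `contentBlockDelta.delta.text` carries text, and a final `metadata` event carries `usage` and `metrics`. The adapter turns the response stream into plain text chunks and hands the metadata to a callback for logging. The function name is hypothetical, and the adapter ignores tool-use deltas, which a real agent would need to handle.

```javascript
// Minimal sketch: adapt a Bedrock ConverseStream response stream into plain
// text chunks. `stream` would be `response.stream` from the AWS SDK's
// ConverseStreamCommand; event names follow the Converse API.
async function* textFromConverseStream(stream, onMetadata) {
  for await (const event of stream) {
    if (event.contentBlockDelta && typeof event.contentBlockDelta.delta?.text === "string") {
      yield event.contentBlockDelta.delta.text;
    } else if (event.metadata && onMetadata) {
      // Token usage and latency metrics: log them for monitoring and audits.
      onMetadata(event.metadata);
    }
  }
}
```

Another provider would only need its own adapter that yields strings, and the rest of the app would not need to change.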

Text-to-Speech voices

The voices you choose for your voice AI applications are a central component of the experience your business provides to your customers. ConversationRelay lets you choose the text-to-speech provider and voice that best meet your requirements.

This application has preloaded English voices across three providers (Google, Amazon, and ElevenLabs).

The selection of available voices is growing rapidly. Refer to the Twilio Docs for the latest voices.

Conclusion

With voice becoming such an important customer interface, most enterprises will want to own the experience end-to-end. Hopefully this blog post and reference architecture shed some light on the key points your enterprise will need to consider while giving you some foundational code to begin your project. When you choose to build these applications with Twilio and AWS you will be able to focus on the work that differentiates your business and provides true value to your customers.

Additional resources

- Twilio ConversationRelay

- Twilio Programmable Voice

- Twilio JavaScript Voice SDK

- AWS Bedrock

- AWS Fargate

- AWS Elastic Container Registry

Dan Bartlett has been building web applications since the first dotcom wave. The core principles from those days remain the same but these days you can build cooler things faster. He can be reached at dbartlett [at] twilio.com.

George Wolf was a Senior Partner Solutions Architect at AWS for over five years. Prior to AWS, George worked at Segment and was responsible for partner technical relationships. He has experience with Amazon Personalize, Clean Rooms, Redshift, and Lambda, and has designed high volume data collection architectures for customer data and machine learning use cases for partners such as Amplitude, Segment, ActionIQ, Tealium, Braze, and Reltio. In his spare time, George likes mountain biking around the San Francisco Bay Area. You can reach George on LinkedIn.
