Add Token Streaming and Interruption Handling to a Twilio Voice Mistral Integration


In a previous guide, we built an AI voice agent with Twilio Voice and ConversationRelay, backed by the Mistral NeMo LLM running on the Inference Endpoints service from Hugging Face. Using Hugging Face meant we could swap in any of hundreds of other LLMs as the underlying model.

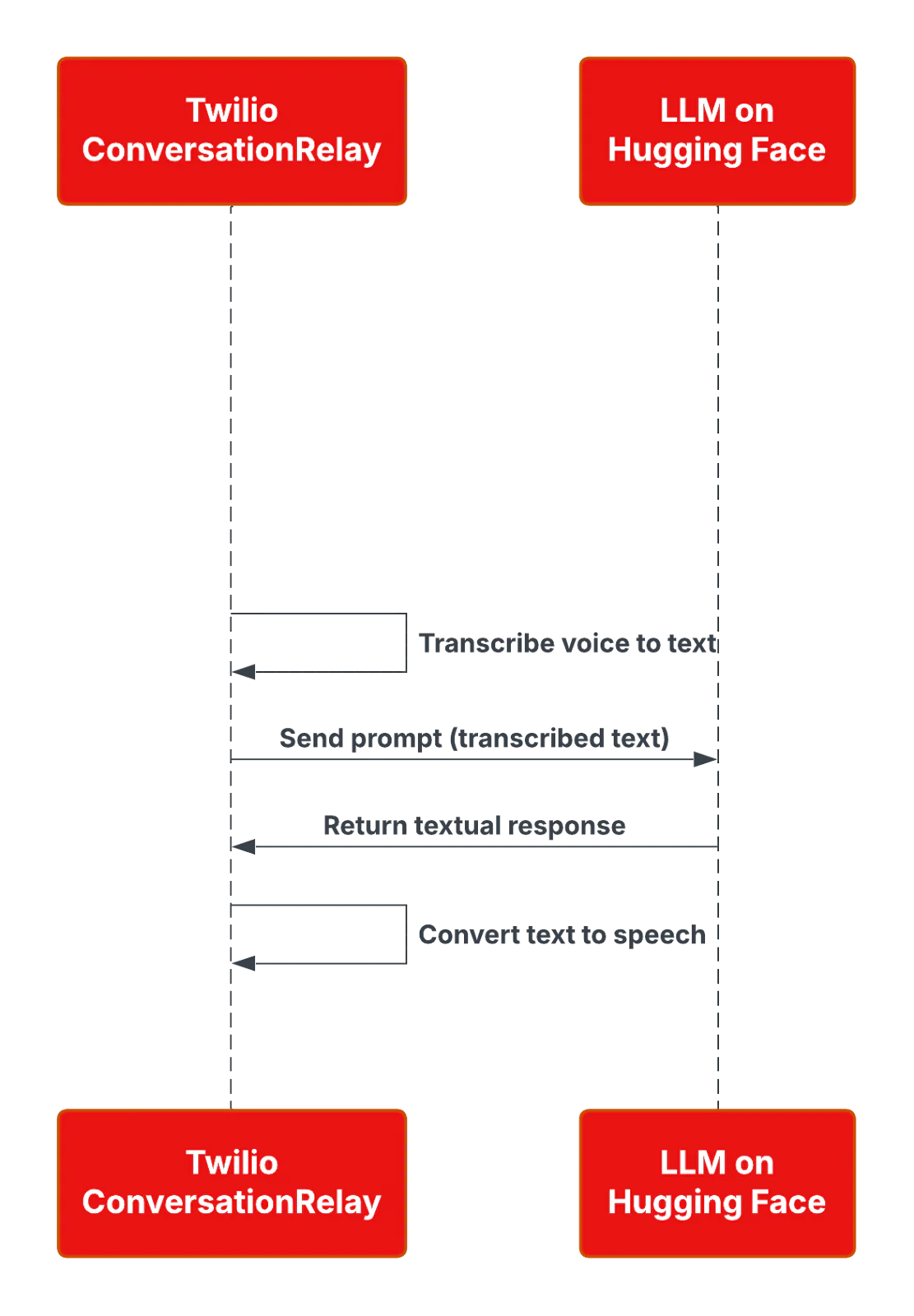

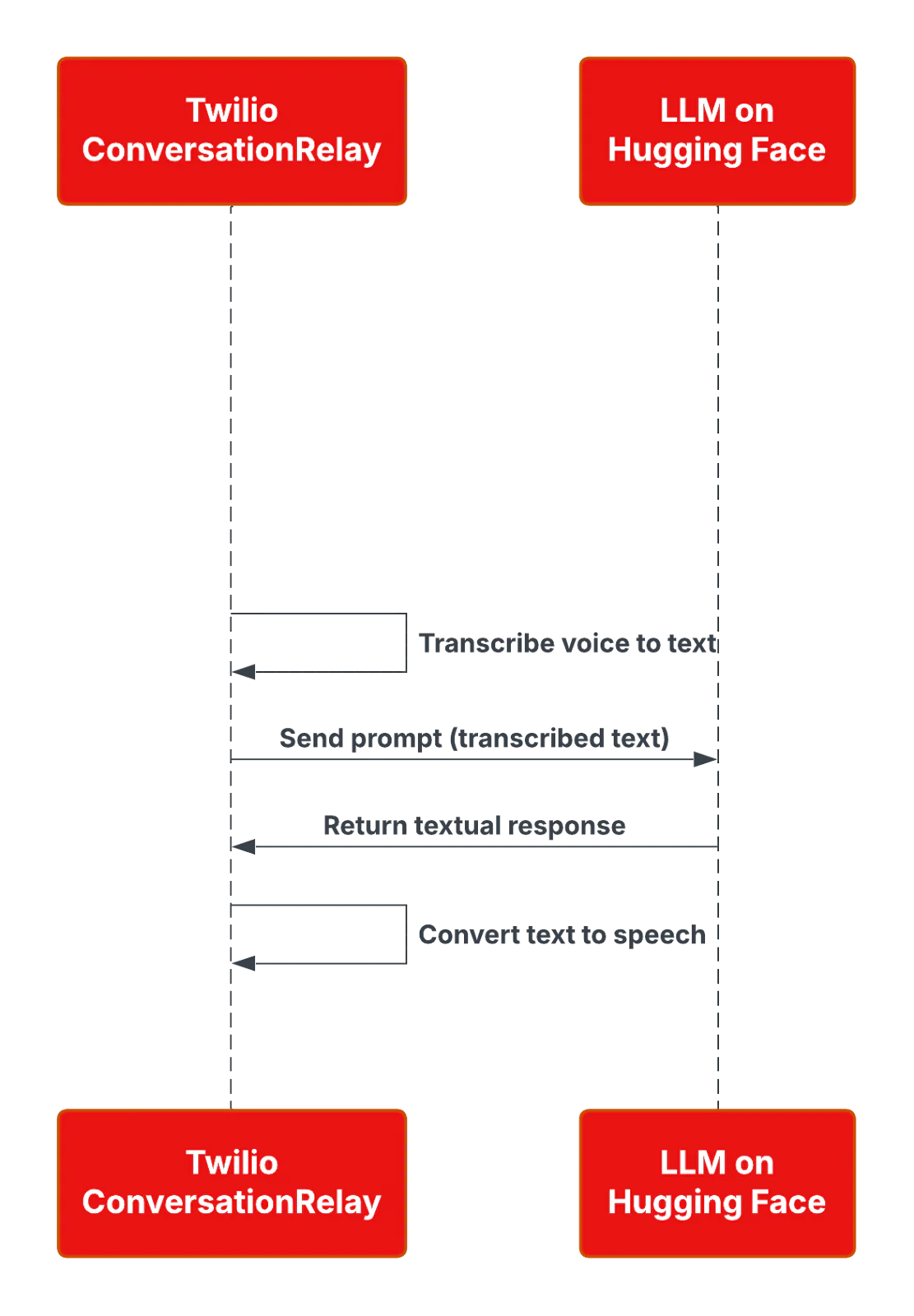

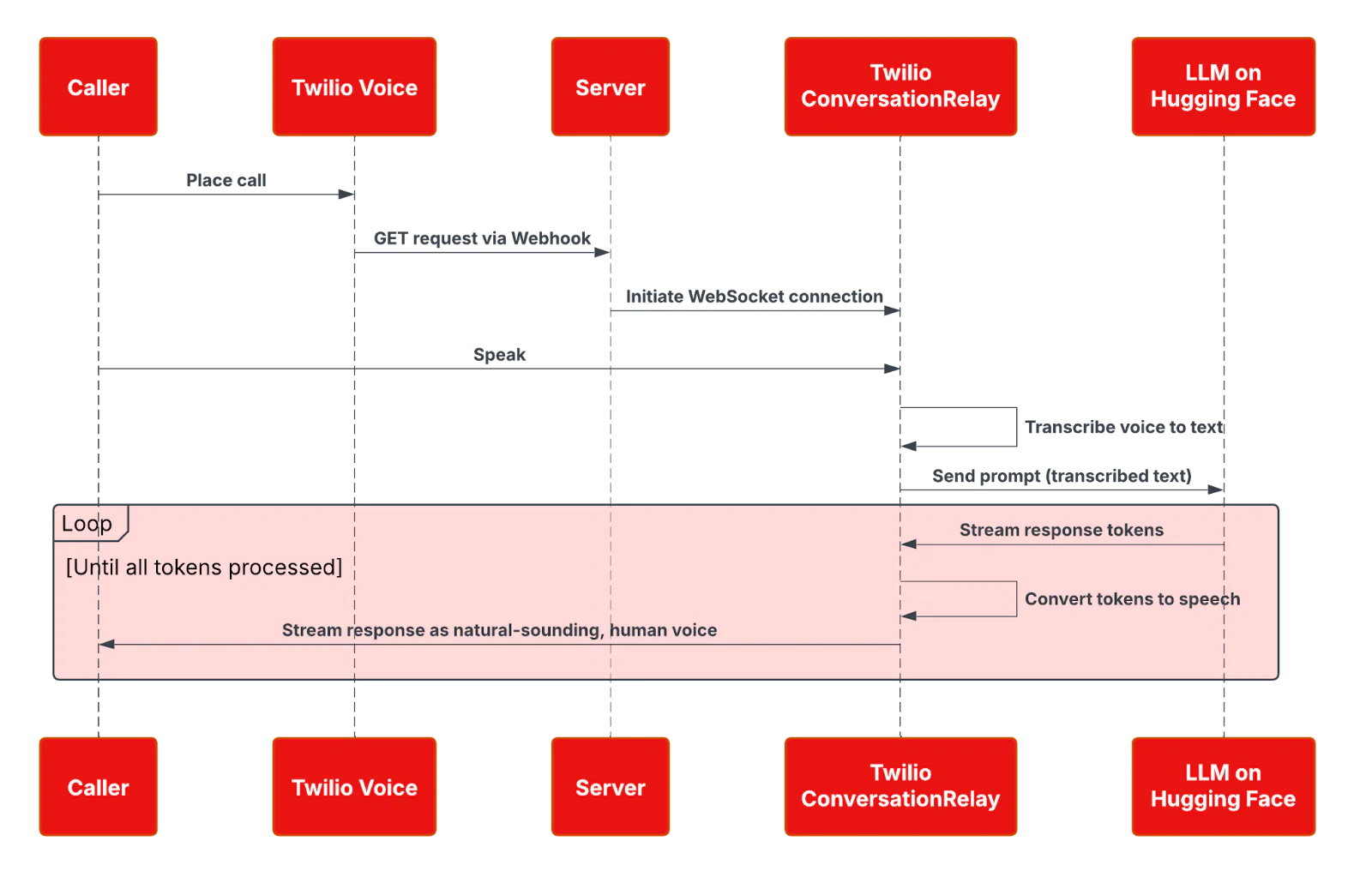

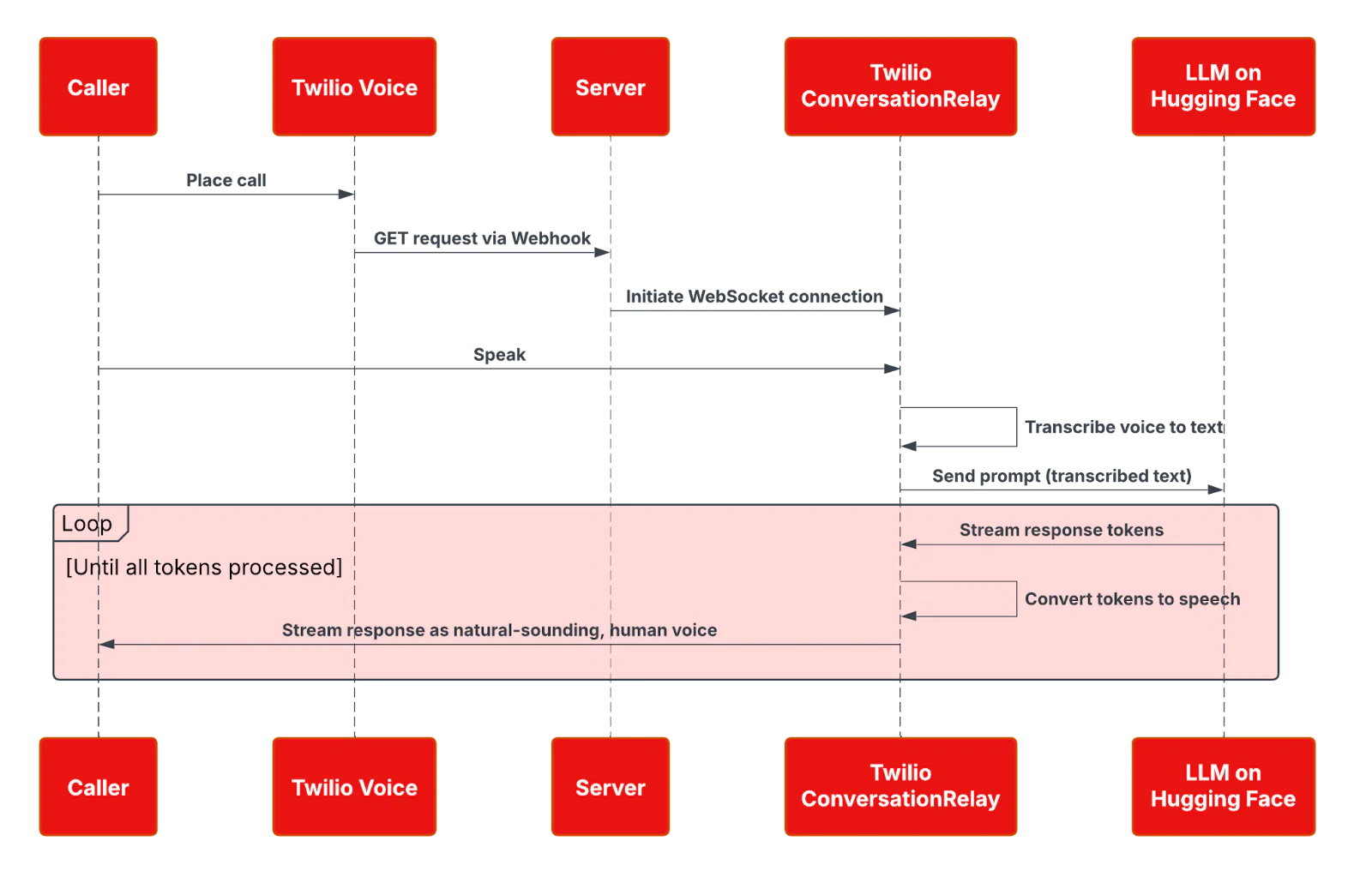

In our application, ConversationRelay converted the caller’s real-time speech to text, which we sent as a prompt to the LLM. The LLM responded with text that we forwarded over a WebSocket, and ConversationRelay converted that text into speech, continuing the phone conversation. By offloading both speech-to-text (STT) and text-to-speech (TTS) to ConversationRelay, you can focus on building feature-rich voice agents and get to market faster.

In this guide, we’ll enhance our original application with token streaming and interruption handling. The result is a much better user experience. The voice agent starts speaking its response sooner (lower latency). And when the caller interrupts, the agent’s conversation history reflects only what the caller actually heard.

What is token streaming?

If you’re new to this project, read the “Brief overview of our project” section in the previous guide. Let’s focus on the interactions between ConversationRelay and the LLM.

In our original version (above), we wait to receive the entire textual response from the LLM before sending the response to ConversationRelay and converting the text to a speech response for the caller. For short responses, this isn’t an issue. But when the response is long—such as multiple sentences or paragraphs—the end user must wait several seconds before hearing a response from the AI agent. That’s less than ideal.
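To make the latency problem concrete, here is a minimal sketch of that original, non-streaming turn. It assumes ConversationRelay’s outgoing text message format ({ type: "text", token, last }). The names respondWithoutStreaming and getFullResponse are hypothetical and do not come from the repository. The caller hears nothing until getFullResponse resolves with the complete answer.

```javascript
// Sketch of the original, non-streaming turn. getFullResponse is a
// hypothetical stand-in for the LLM call; ws is any object with send().
async function respondWithoutStreaming(conversation, ws, getFullResponse) {
  // Nothing is sent until the LLM has produced its entire answer.
  const response = await getFullResponse(conversation);
  // A single text message carries the whole reply; last: true ends the turn.
  ws.send(JSON.stringify({ type: "text", token: response, last: true }));
  return response;
}
```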

To solve this problem, we’ll use token streaming.

LLMs generate text in small units called tokens. Roughly speaking, a token is a short word, a piece of a longer word, a number, or a punctuation mark. When you send a prompt such as “What's the capital of Norway?”, the underlying LLM processes it as a handful of tokens (around six or seven, depending on the tokenizer) rather than as one monolithic sentence.

With token streaming, we’ll tell the LLM to return its response as a stream of tokens instead of as a single, complete response. The AI agent starts speaking as soon as the first tokens arrive, while the LLM is still streaming the rest of its response. The result is a snappier response and a conversation that feels more natural to the caller.
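A mock makes the difference easy to see. The sketch below uses a hypothetical async generator, mockTokenStream, in place of a real LLM, and a speak callback in place of ConversationRelay. It records the order of events, which shows that each token is spoken before the next one is generated.

```javascript
// Hypothetical mock LLM: yields tokens one at a time after a short delay,
// recording a "generated" event for each token in the shared log.
async function* mockTokenStream(tokens, log, delayMs = 5) {
  for (const token of tokens) {
    await new Promise((resolve) => setTimeout(resolve, delayMs));
    log.push(`generated:${token}`);
    yield token;
  }
}

// Consumer: hands each token to the speaker as soon as it arrives,
// instead of waiting for the whole response.
async function speakAsTokensArrive(stream, speak) {
  for await (const token of stream) {
    speak(token);
  }
}
```

In the resulting log, "spoken:Oslo" appears before "generated: is". The first word is already on its way to the caller while the model is still producing the rest.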

What is interruption handling?

Our original application also didn’t handle interruptions from the caller gracefully. To understand why, imagine asking the AI agent to list the names of all 50 states in the United States in alphabetical order.

- The underlying LLM will take a few seconds to generate the entire response.

- ConversationRelay converts the response to speech, and the AI agent begins speaking.

- In the middle of the AI agent speaking, you interrupt at “Delaware” and ask it to repeat the last state mentioned.

- As far as the LLM is concerned, it has already given you the entire response. The “last state mentioned” would have been the last one in the alphabetical list (Wyoming). However, from your perspective, you interrupted the agent in the middle of the list.

For a more natural conversation flow, a speaker (in this case, the AI agent) who is interrupted mid-utterance should know exactly where they were cut off. Local conversation tracking makes this possible. When the caller interrupts, ConversationRelay reports the portion of the response that was spoken before the interruption. You can use that text to update the conversation history so the LLM knows the last utterance the caller actually heard.

Now that we’ve covered the core concepts behind our enhancements, let’s walk through how to implement them.

Prerequisites and Setup

The requirements to follow along with this guide are the same as the previous guide. You will need:

- Node.js installed on your machine

- A Twilio account (sign up here) and a Twilio phone number

- ngrok installed on your machine

- A Hugging Face account (sign up here) with payment set up to use its Inference Endpoints service

- A phone to place your outgoing call to Twilio

The code for this project can be found at this GitHub repository. Begin by cloning the repository. Then, install the project dependencies with npm install.

The original application from the previous guide is on the main branch of the repository. The updated code for this tutorial is on the streaming-and-interruption-handling branch. Follow the setup instructions in the repository README, or see the previous guide for setup details.

Implementing Token Streaming

Our application uses the Hugging Face Inference library for Node.js, which wraps the Inference API. The original version called the chatCompletion method of the HfInference class. To implement token streaming, we use chatCompletionStream instead.
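The two methods differ mainly in what they return. The helpers below wrap each call. They assume the OpenAI-style response shapes: a single result with choices[0].message.content for the non-streaming call, and chunks with choices[0].delta.content for the streaming one. The client parameter is assumed to be something like new HfInference(token).endpoint(endpointUrl). The names completeOnce and streamTokens, and the max_tokens value of 500, are illustrative choices rather than the repository’s code.

```javascript
// client is assumed to be an Inference Endpoint client, for example
// new HfInference(token).endpoint(endpointUrl) from @huggingface/inference.

// Non-streaming: one awaited result that holds the complete message.
async function completeOnce(client, messages) {
  const result = await client.chatCompletion({ messages, max_tokens: 500 });
  return result.choices[0].message.content;
}

// Streaming: an async iterable of chunks, each carrying a small text delta.
async function* streamTokens(client, messages) {
  const stream = client.chatCompletionStream({ messages, max_tokens: 500 });
  for await (const chunk of stream) {
    const token = chunk.choices[0]?.delta?.content;
    // Some chunks (such as a final stop chunk) may carry no text.
    if (token) yield token;
  }
}
```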

Modify ai.js

Our updated src/utils/ai.js code looks like this:

We renamed our original aiResponse function to the more descriptive aiResponseStream. Notice also that aiResponseStream takes a second argument, ws, which is the WebSocket connection associated with the call.

The original non-streaming code was simple. We sent our conversation (with the latest prompt from the user) to the LLM through the Inference Endpoint’s chatCompletion function and awaited the entire generated response. Then, aiResponse returned the response so that the route handler (src/routes/websocket.js) could send it over the WebSocket connection.

Now, in aiResponseStream, we send the conversation messages to chatCompletionStream. The response arrives as a stream of chunks, one per token. For each chunk, we retrieve the token, log it (for debugging), and send it through the WebSocket connection.

The key to token streaming is sending individual tokens through the WebSocket connection. ConversationRelay can then convert them to speech for the AI agent’s reply while the remaining tokens of the response are still streaming in.

The code also assembles the full response from all of the streamed tokens. That full response is used to maintain the conversation history.
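The streaming loop can be sketched as follows. To keep the sketch self-contained, it takes an async iterable of tokens instead of the conversation itself. In the app, those tokens would come from chatCompletionStream. This makes streamToConversationRelay a hypothetical helper, not the repository’s aiResponseStream. The sketch assumes ConversationRelay’s { type: "text", token, last } message format and closes the turn with an empty token marked last: true.

```javascript
// Hypothetical helper: forwards each token to ConversationRelay as it
// arrives and returns the assembled full response for the history.
async function streamToConversationRelay(tokenStream, ws) {
  let fullResponse = "";
  for await (const token of tokenStream) {
    fullResponse += token; // rebuild the complete reply as we go
    // Send immediately so ConversationRelay can start speaking.
    ws.send(JSON.stringify({ type: "text", token, last: false }));
  }
  // Mark the end of this response.
  ws.send(JSON.stringify({ type: "text", token: "", last: true }));
  return fullResponse;
}
```

Accumulating fullResponse inside the same loop that sends the tokens means the conversation history gets the complete reply without a second request to the LLM.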

Modify websocket.js

Our route handler for user prompts has changed as well. The modified code in src/routes/websocket.js looks like this:

The change here is minor. We update the import to use the renamed function and pass ws (the WebSocket connection) to aiResponseStream. That function is now responsible for sending individual tokens through the connection as they arrive. Once all tokens have streamed and aiResponseStream returns the full response, our handler pushes that response to the local session’s conversation history.
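The prompt case can be sketched as follows. The sketch assumes that the incoming prompt message carries the caller’s transcribed speech in a voicePrompt field and that each call’s session object holds a conversation array. The names handlePrompt and respond are hypothetical, with respond standing in for aiResponseStream.

```javascript
// Sketch of the prompt case. respond stands in for aiResponseStream:
// it streams tokens to ws and resolves with the full reply.
async function handlePrompt(message, session, ws, respond) {
  // Add the caller's transcribed speech to the history.
  session.conversation.push({ role: "user", content: message.voicePrompt });
  const fullResponse = await respond(session.conversation, ws);
  // Record the agent's reply so later prompts include it.
  session.conversation.push({ role: "assistant", content: fullResponse });
}
```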

Testing token streaming

To test the application, set it up the same way as in the previous tutorial:

- Start ngrok to forward incoming requests to port 8080: ngrok http 8080

- Copy the resulting https:// forwarding URL to your Twilio phone number configuration, as the webhook URL for when a call comes in.

- Copy that same https:// forwarding URL to the project’s .env file, as the value for HOST.

- Create an Inference Endpoint at Hugging Face using the mistral-nemo-instruct-2407 model. Copy the resulting endpoint URL to the project’s .env file, as the value for HUGGING_FACE_ENDPOINT_URL.

- Copy your Hugging Face access token to the project’s .env file, as the value for HUGGING_FACE_ACCESS_TOKEN.

- Start the application with npm run start.

- Call your Twilio phone number.
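A missing .env value tends to surface later as a confusing error, so a small startup check can help. The sketch below is hypothetical, and the repository may not include anything like it. It only verifies that the three variables named in the steps above are set.

```javascript
// Hypothetical startup check for the .env values used in this guide.
const REQUIRED_VARS = ["HOST", "HUGGING_FACE_ENDPOINT_URL", "HUGGING_FACE_ACCESS_TOKEN"];

function loadConfig(env) {
  // Collect every missing variable so the error lists them all at once.
  const missing = REQUIRED_VARS.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  // Return only the values the app needs.
  return Object.fromEntries(REQUIRED_VARS.map((name) => [name, env[name]]));
}
```

Call it once at startup, for example loadConfig(process.env), after the .env file has been loaded.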

With token streaming in place, the server logging for a test call looks like this:

Our token logging (the logToken function in ai.js, not shown above) prints each token on its own line with a running count. While these tokens are still streaming in, the AI agent has already begun speaking the response.
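Since logToken isn’t shown, here is a hypothetical stand-in that produces the same kind of output: one line per token with a running count. Wrapping each token in JSON.stringify is a choice made for this sketch. It keeps leading spaces and newlines visible in the log.

```javascript
// Hypothetical stand-in for logToken: one line per token with a running
// count. write defaults to console.log but can be swapped for testing.
function createTokenLogger(write = console.log) {
  let count = 0;
  return function logToken(token) {
    count += 1;
    write(`Token ${count}: ${JSON.stringify(token)}`);
    return count;
  };
}
```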

With token streaming in place, we’re ready to move on to interruption handling.

Implementing interruption handling

Our application maintains a conversation history for each call. This conversation is an array of objects, with each object containing a role (assistant or user) and content, which is the message sent by that assistant (our agent) or user. The entire history is a back-and-forth of alternating messages between the agent and the user.

Through ConversationRelay, our WebSocket route handler receives a message of type interrupt. ConversationRelay sends this message whenever the caller starts speaking while the AI agent is still in the middle of its response.
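Handling this message comes down to branching on the message’s type field. The sketch below shows that dispatch. It assumes messages arrive as JSON strings with type values such as setup, prompt, and interrupt. The names routeMessage, onSetup, onPrompt, onInterrupt, and onOther are hypothetical.

```javascript
// Sketch of dispatching incoming ConversationRelay messages by type.
// The handler names are hypothetical; missing handlers are skipped.
function routeMessage(raw, handlers) {
  const message = JSON.parse(raw);
  switch (message.type) {
    case "setup":
      return handlers.onSetup?.(message);
    case "prompt":
      return handlers.onPrompt?.(message);
    case "interrupt":
      // Carries utteranceUntilInterrupt, the text spoken before the caller cut in.
      return handlers.onInterrupt?.(message);
    default:
      return handlers.onOther?.(message);
  }
}
```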

To handle interruptions, our interrupt case needs to determine the AI agent’s last utterance before it was interrupted. Then, we modify the conversation history so that the agent’s last message contains only the part of its response up to that utterance, rather than the entire response.

Modify websocket.js

Fortunately, ConversationRelay includes utteranceUntilInterrupt in the interrupt message it sends to our WebSocket handler. So, we modify the interrupt case in src/routes/websocket.js to look like this:

That’s quite straightforward, but the meat of the logic is in our handleInterrupt function:

Let’s walk through what’s going on here:

- First, we search through the entire conversation history for a message from the assistant that contains the utteranceUntilInterrupt.

- Once we find that message, we locate the interruptPosition, the exact position within that message where utteranceUntilInterrupt occurs.

- We truncate the message at that interruptPosition.

- We overwrite that message in our conversation history with the truncated message.

- Now, on any subsequent prompts, as we provide the conversation history to the agent, the agent will know that their message stopped at the point of interruption.
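The steps above can be sketched as a small function. This is a minimal version built on a few assumptions, not the repository’s handleInterrupt. It takes the conversation array and the utteranceUntilInterrupt string directly. It searches from the newest message backward, because the interrupted reply is normally the latest assistant message. It keeps the text up to and including the heard utterance.

```javascript
// Minimal sketch: truncate the assistant message that contains
// utteranceUntilInterrupt so the history matches what the caller heard.
function handleInterrupt(conversation, utteranceUntilInterrupt) {
  // Walk backward: the interrupted reply is usually the most recent one.
  for (let i = conversation.length - 1; i >= 0; i--) {
    const message = conversation[i];
    if (message.role !== "assistant") continue;
    const interruptPosition = message.content.indexOf(utteranceUntilInterrupt);
    if (interruptPosition === -1) continue;
    // Keep everything up to the end of the heard utterance.
    const end = interruptPosition + utteranceUntilInterrupt.length;
    conversation[i] = { ...message, content: message.content.slice(0, end) };
    return true;
  }
  return false; // no match: leave the history unchanged
}
```

Matching on an exact substring is simple but brittle. If the spoken text differs slightly from the stored response (for example, in whitespace), no match is found, and this sketch leaves the history unchanged rather than guessing.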

With interruption handling in place, the AI agent’s understanding of the conversation (with the message truncated at the point of interruption) will roughly match the caller’s experience of the conversation.

Testing interruption handling

To test interruption handling, restart the server, keeping ngrok and your Inference Endpoint running. Then, when you call your Twilio number, prompt the AI agent with a request that will produce a long response. For example:

- Give me a list of each state and its capital.

- Do so in alphabetical order by state.

Here is an audio sample of a test:

In our test, we asked the agent to list the 50 U.S. states in alphabetical order, along with their capitals. The agent began speaking while the tokens were still streaming. We then interrupted it partway through the list and asked for the last state it had mentioned.

The server’s log messages from our test run look like this:

Token streaming and interruption handling are both functioning. These features only required minor code changes, but the improvement in user experience is significant.

Wrapping Up

ConversationRelay lets you build engaging voice agents that feel natural and responsive. With token streaming, the agent starts speaking almost immediately, which cuts wait times and makes interactions smoother. Handling interruptions accurately also creates a more realistic conversational experience, helping your agent respond intelligently even when the conversation shifts unexpectedly.

You can find the updated code from this tutorial, with token streaming and interruption handling, in the GitHub repository under the streaming-and-interruption-handling branch. Experiment with your own Hugging Face endpoint, or try other available models to tailor the agent to your needs.

Ready to take your AI agent even further? Check out the ConversationRelay Docs today!
