Analyze Entities in real-time Calls using Google Cloud Language with Node.js

Businesses can better serve customers by determining how a phone call is going in real-time either with a machine learning model and platform, like TensorFlow, or with an API. This post will show how to perform entity sentiment analysis in real-time on a phone call using Twilio Programmable Voice, Media Streams, and the Google Cloud Speech and Language APIs with Node.js.

Prerequisites

- A Twilio account - sign up for a free one here

- A Twilio phone number with Voice capabilities - configure one here

- Node.js installed - download it here

- Ngrok

- A Google Cloud account with a valid billing method

Document-Level versus Entity-Level Analysis

Entities are phrases in an utterance or text that refer to commonly known things such as people, organizations, artwork, or locations. They're used in Natural Language Processing (NLP) to extract relevant information. This post will perform both document-level sentiment analysis, where every word detected is considered, and entity-level sentiment analysis, where an utterance or text is parsed for known entities like proper nouns and each entity is scored for how positive or negative the text is toward it.

Given the text "Never gonna give you up, never gonna let you down. Never gonna run around and desert you. - Rick Astley", "Rick Astley" could be parsed out and recognized as a "PERSON" with a score of zero and "desert" could be parsed out as a "LOCATION" with a negative score.

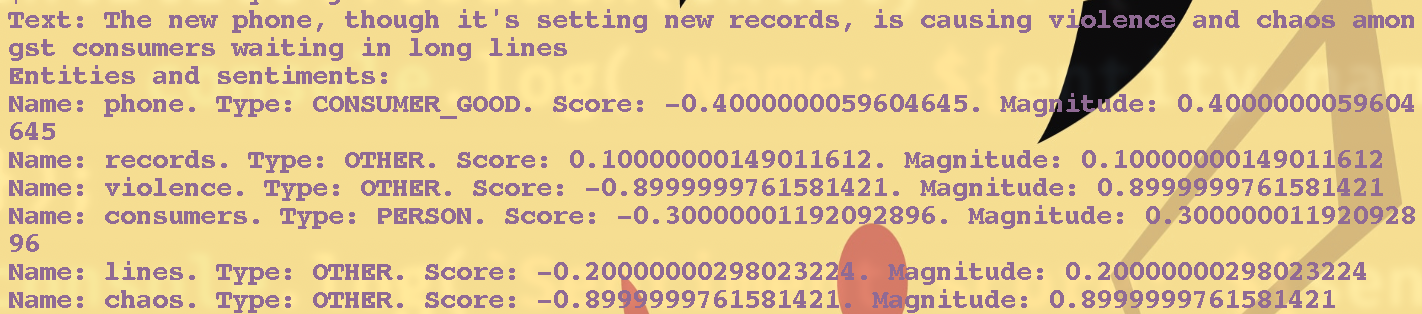

Why would you choose entity-level over document-level analysis, or vice versa? Sometimes small words can detract from more important information. Take the sentence "The new phone, though it's setting new records, is causing violence and chaos amongst consumers waiting in long lines" for example. Document-level analysis may not give a meaningful overall score because the sentence has both negative and positive components, but entity-level analysis may be able to detect the different polarities toward the individual entities in the document.

This post will perform both entity- and document-level sentiment analysis so you can compare them and form your own opinion.

Setup

On the command line run

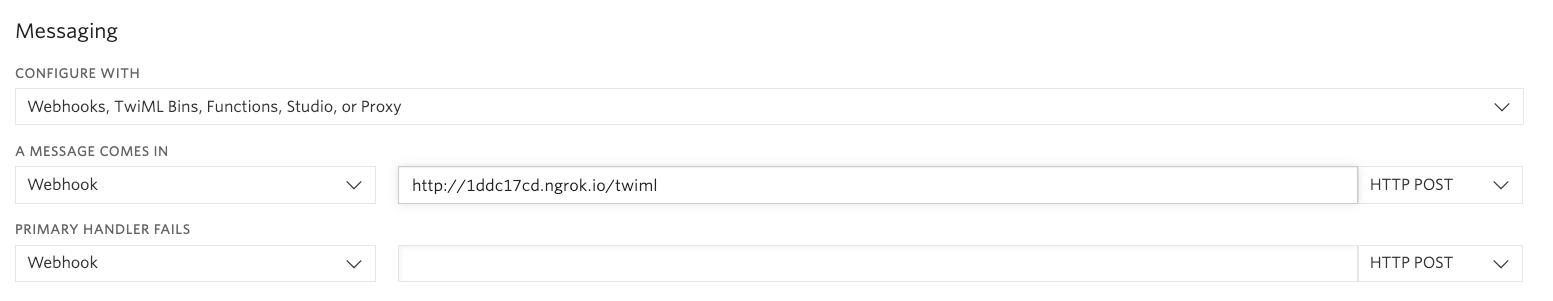

This gives you a publicly accessible URL to your application. If you haven't bought a Twilio phone number yet, buy one now and configure it by adding your ngrok URL with /twiml appended to it in the “Voice” section of the phone number's configuration.

We will use this real-time transcription demo app using Media Streams. Clone it into a folder called sentiment:

cd into the Node realtime-transcriptions example which we will use for this project:

This demo server app consumes audio from Twilio Media Streams with Google Cloud Speech to transcribe phone calls in real-time. To do so, we will need some API credentials to use the Google Cloud Speech API.

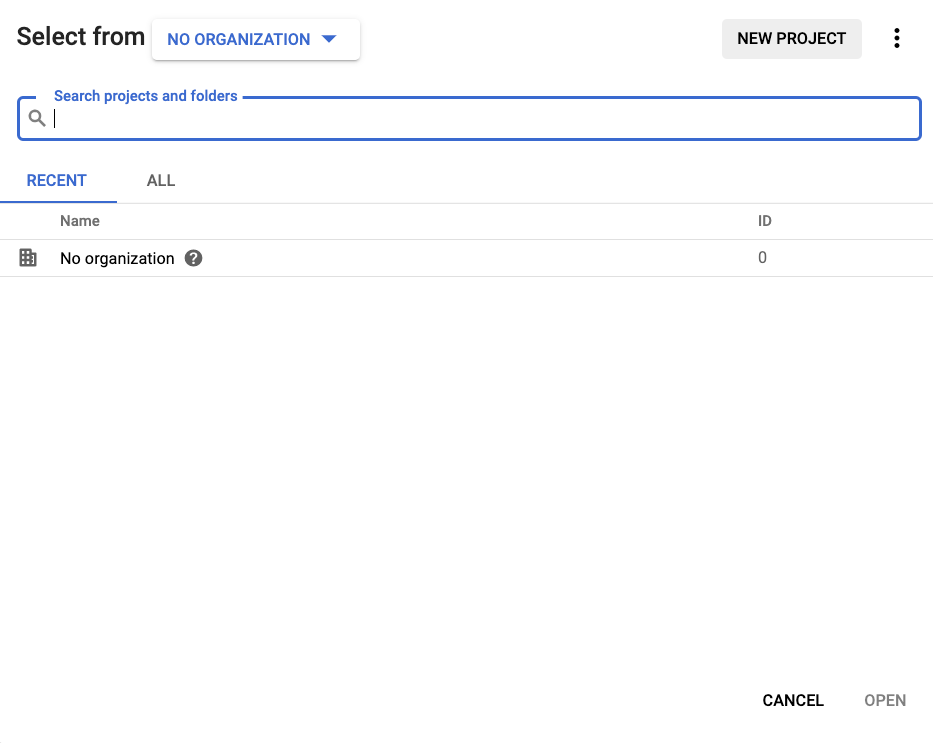

Log in to your Google Cloud developer console and enable the Google Cloud Speech API for a newly created project. To create the project, click the button in the top left corner; it should say either Select a project or No organization.

Select New Project and give it a title like analyze-call-transcriptions.

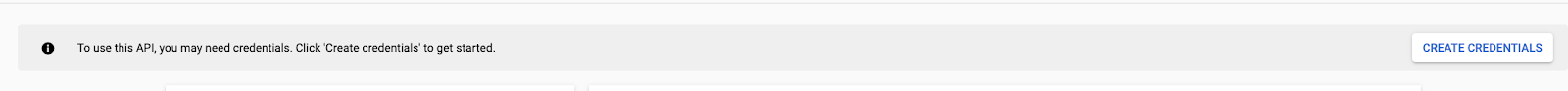

Click Create Credentials.

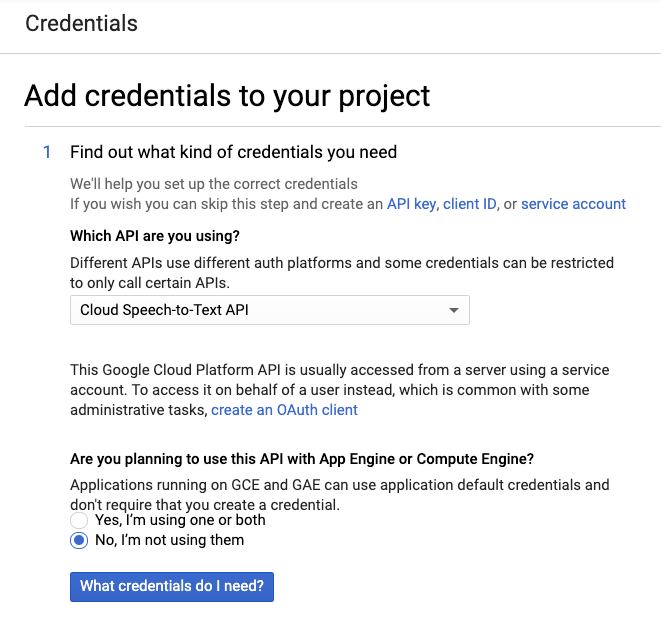

Select the Cloud Speech-to-Text API from the dropdown menu, then select No when asked whether you're planning to use this API with App Engine or Compute Engine. Next, click the blue What credentials do I need? button.

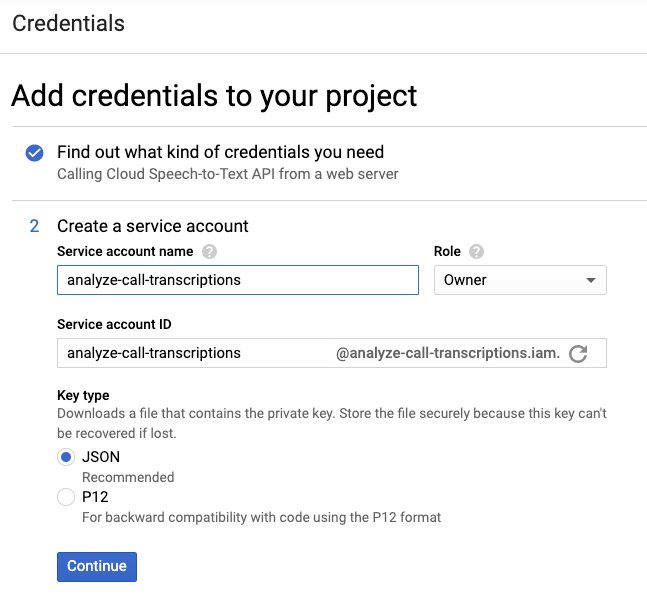

To create a service account, give it a name like analyze-call-transcriptions and the Role of Owner of the project. The key type should be JSON. Click Continue and save the JSON file as google_creds.json in the root of your project in /node/realtime-transcriptions.

Transcribe a Call in Real-Time

Now it's time to transcribe a Twilio phone call in real-time. Open templates/streams.xml and replace wss://<ngrok url> with your ngrok URL (in the example above, it would be wss://1ddc17cd.ngrok.io).

Run npm install on the command line in the realtime-transcriptions directory, followed by node server.js. Open a new terminal tab in the realtime-transcriptions directory and run curl -XPOST https://api.twilio.com/2010-04-01/Accounts/<REPLACE-WITH-YOUR-ACCOUNT-SID>/Calls.json -d "Url=http://<REPLACE-WITH-YOUR-NGROK-URL>/twiml" -d "To=<REPLACE-WITH-YOUR-PHONE-NUMBER>" -d "From=<REPLACE-WITH-YOUR-TWILIO-PHONE-NUMBER>" -u <REPLACE-WITH-YOUR-ACCOUNT-SID>:<REPLACE-WITH-YOUR-AUTH-TOKEN>, replacing each placeholder with your own value.
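If you would rather stay in Node.js than use curl, the same request can be assembled in JavaScript. This is only a sketch: buildCallRequest and its parameter names are hypothetical and not part of the demo app, and it builds the request (URL, Basic auth header, and form-encoded body) without sending anything.

```javascript
// Hypothetical helper (not part of the demo app): assembles the same
// request that the curl command sends. Nothing is sent here.
function buildCallRequest({ accountSid, authToken, ngrokHost, to, from }) {
  // Form-encoded parameters, matching the -d flags of the curl command.
  const body = new URLSearchParams({
    Url: `http://${ngrokHost}/twiml`,
    To: to,
    From: from,
  });

  // HTTP Basic auth, matching curl's -u <account sid>:<auth token>.
  const credentials = Buffer.from(`${accountSid}:${authToken}`).toString('base64');

  return {
    method: 'POST',
    url: `https://api.twilio.com/2010-04-01/Accounts/${accountSid}/Calls.json`,
    headers: {
      Authorization: `Basic ${credentials}`,
      'Content-Type': 'application/x-www-form-urlencoded',
    },
    body: body.toString(),
  };
}
```

On a Node.js version that ships a global fetch, something like fetch(request.url, request) should send it, where request is the object returned above.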

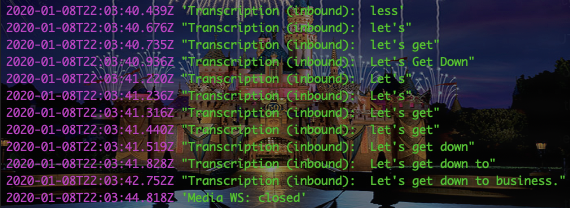

You should get a phone call and as you speak you should see your words appear in the terminal.

The transcription isn't perfect but it is good enough for most purposes including this demo.

Analyze a Call in Real-Time with Google Cloud Language API

Now let's analyze that call in real-time with the Google Cloud Natural Language API. Enable the Natural Language API here and run npm install @google-cloud/language --save on the command line.

Make a new file called analysis-service.js in the root of your project and require the Node.js module at the top of the file.

Now make an async function that accepts a parameter, transcription, and analyzes its sentiment. Instantiate the client and make a new document object containing content (the transcription to analyze) and type (here it's PLAIN_TEXT, but a different project could use HTML). The document can also take the optional parameter language to recognize entities in Chinese, Japanese, French, Portuguese, and more; if it is omitted, the Natural Language API auto-detects the language. See what languages are supported here.

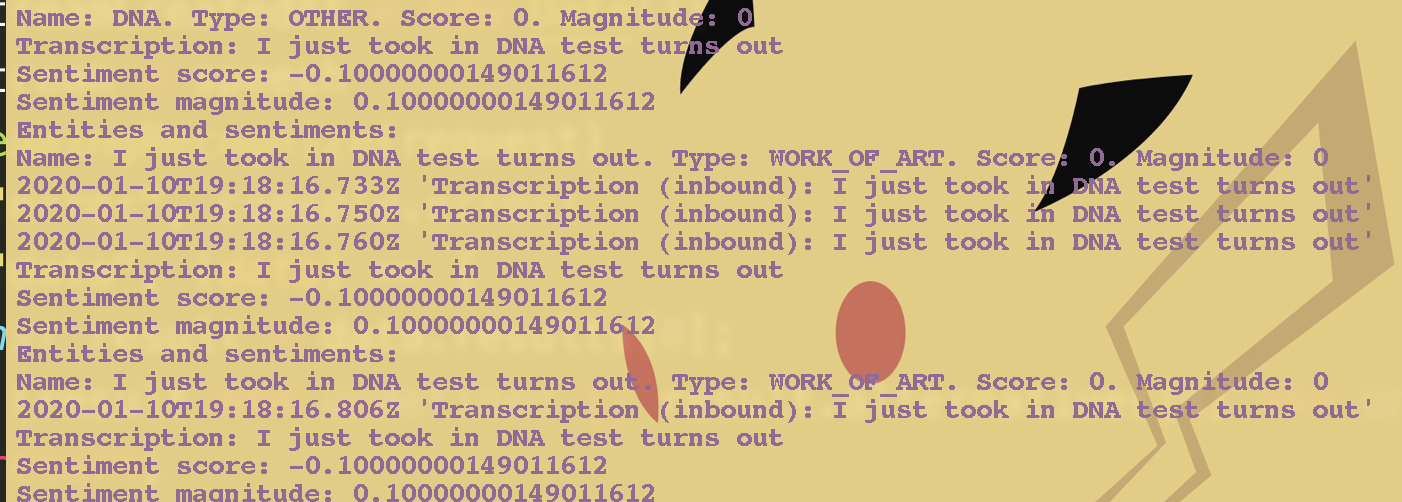

The client then analyzes the sentiment of that document object and loops through the entities, printing out their name, type, score, and magnitude.
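Putting those steps together, analysis-service.js could look roughly like the sketch below. It is one possible shape rather than the definitive implementation: the optional client parameter and the returned object are assumptions added so the function can be tried with a stub, the module is required lazily for the same reason, and the real client is assumed to find your credentials through the GOOGLE_APPLICATION_CREDENTIALS environment variable pointing at google_creds.json.

```javascript
// analysis-service.js (sketch)

// Creates the real client on demand. Requiring the module here, rather than
// at the top of the file, is an assumption that lets the function be
// exercised with a stub client before @google-cloud/language is installed.
function createClient() {
  const language = require('@google-cloud/language');
  return new language.LanguageServiceClient();
}

// Analyzes one transcription at both the document and the entity level.
// `client` is an assumed optional parameter; it defaults to the real client.
async function classify(transcription, client = createClient()) {
  const document = {
    content: transcription,
    type: 'PLAIN_TEXT',
  };

  // Document-level sentiment: one score and magnitude for the whole text.
  const [sentimentResult] = await client.analyzeSentiment({ document });
  const { score, magnitude } = sentimentResult.documentSentiment;
  console.log(`Document sentiment: score ${score}, magnitude ${magnitude}`);

  // Entity-level sentiment: one score and magnitude per recognized entity.
  const [entityResult] = await client.analyzeEntitySentiment({ document });
  for (const entity of entityResult.entities) {
    console.log(`Entity: ${entity.name} (${entity.type})`);
    console.log(`  score ${entity.sentiment.score}, magnitude ${entity.sentiment.magnitude}`);
  }

  // Returning the results is an addition for callers that want more than logs.
  return {
    documentSentiment: sentimentResult.documentSentiment,
    entities: entityResult.entities,
  };
}

module.exports = { classify };
```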

The score is a normalized value ranging from -1 to 1 that represents the text's overall emotional inclination. The magnitude is an unnormalized value ranging from zero to infinity: each individual expression in the text contributes to it, so longer texts can have greater magnitudes.
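To make the two numbers easier to read together, you could map them to a rough label. The helper below is a hypothetical sketch: describeSentiment and both threshold defaults are arbitrary assumptions rather than values defined by the API, so tune them for your own calls.

```javascript
// Hypothetical helper: turns a { score, magnitude } pair into a rough label.
// The default thresholds are arbitrary assumptions, not API-defined values.
function describeSentiment({ score, magnitude }, scoreThreshold = 0.25, magnitudeThreshold = 1.0) {
  if (score >= scoreThreshold) return 'positive';
  if (score <= -scoreThreshold) return 'negative';
  // Score near zero: a high magnitude suggests positive and negative
  // expressions cancelling out, a low one suggests little emotion at all.
  return magnitude >= magnitudeThreshold ? 'mixed' : 'neutral';
}
```

Checking the magnitude only when the score is near zero is what separates a mixed text from a neutral one, since both can produce a similar score.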

Open transcription_service.js and import analysis-service.js at the top of the file with require.

Then add a new line 58 after the line calling this.emit('transcription'...) and call the classify function from analysis-service.js.
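The pattern of emitting the transcription and then handing it to the analysis function can be sketched in isolation. TranscriptionServiceSketch, handleTranscript, and the injected analyze function are all hypothetical stand-ins, not code from the demo's transcription_service.js; in the real file, analyze would be the classify function from analysis-service.js.

```javascript
const { EventEmitter } = require('events');

// Hypothetical stand-in for the demo's transcription service, showing only
// the "emit, then analyze" step. `analyze` plays the role of classify.
class TranscriptionServiceSketch extends EventEmitter {
  constructor(analyze) {
    super();
    this.analyze = analyze;
  }

  // Called once per finished transcript.
  handleTranscript(transcript) {
    // Existing behavior: notify listeners of the new transcription.
    this.emit('transcription', transcript);

    // New behavior: run the analysis. Errors are caught and logged so a
    // failed API call does not interrupt handling of the audio stream.
    return Promise.resolve()
      .then(() => this.analyze(transcript))
      .catch((err) => {
        console.error('Sentiment analysis failed:', err.message);
      });
  }
}
```

Because the analysis is a network call, it is not awaited before the next audio chunk is processed; the returned promise is there only for callers that want to wait on it.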

Running the command to make an outbound Twilio phone call again (curl -XPOST https://api.twilio.com/2010-04-01/Accounts/<REPLACE-WITH-YOUR-ACCOUNT-SID>/Calls.json -d "Url=http://<REPLACE-WITH-YOUR-NGROK-URL>/twiml" -d "To=<REPLACE-WITH-YOUR-PHONE-NUMBER>" -d "From=<REPLACE-WITH-YOUR-TWILIO-PHONE-NUMBER>" -u <REPLACE-WITH-YOUR-ACCOUNT-SID>:<REPLACE-WITH-YOUR-AUTH-TOKEN> ) should display entity sentiment analysis being performed on your live call transcription.

For the sentence from the beginning, "The new phone, though it's setting new records, is causing violence and chaos amongst consumers waiting in long lines", you would see sentiment and entity analysis like this:

What's Next

There is a lot you can do with NLP in Node.js as well as other languages. You can try entity sentiment analysis on video calls, emails, text messages, and more; check out this post on real-time call transcription using Twilio Media Streams and Google Speech-to-Text; or play around with the many NLP capabilities of the Natural Node.js module, such as tokenizing, stemming, N-grams, spell-checking, part-of-speech tagging, and more. I'd love to hear what you're building with NLP, online or in the comments.

- Twitter: @lizziepika

- GitHub: elizabethsiegle

- Email: lsiegle@twilio.com
