Add Function Calling to Your Twilio Voice and Claude ConversationRelay Integration

ConversationRelay lets you build real-time, interactive, human-like voice experiences by connecting Twilio Voice to any AI Large Language Model via a WebSocket.

We previously explored connecting Twilio Voice to Anthropic’s AI Model Claude, then building streaming and interruption-aware conversation tracking into our implementation. But what if you want your AI to reach beyond its own knowledge – to call APIs dynamically when triggered by a caller’s request, or use your own internal tools… like a digital assistant?

That’s where Tool Use or Function Calling comes in.

Let’s walk step-by-step through adding Tool Calling to your Twilio and Claude AI agent application. In this build, you’ll enable your assistant to fetch programming jokes from an external service at a caller’s request… and lay the foundation for all kinds of LLM-agent workflows that you’ll want to build next.

Let’s get started!

Prerequisites

You’ll need:

- Node.js installed (I tested with 23.9.0, but other versions should work)

- A Twilio Account and phone number with voice support

- An Anthropic account with API key access

- ngrok or another tunneling tool

- An IDE, text editor, and your phone (for testing voice)

Get the example repo

For a smooth experience, clone the workshop repo. Branches label each major step:

Or, follow along and update your own project based on my code and explanations below.

Set up your environment: .env and install dependencies

Whether updating an existing project or starting new, make sure you have a .env file with:

These placeholder values should be replaced with a working API key, and your ngrok URL (which you will generate below).

Install dependencies:

How tool calling works in Claude

Tool calling or tool use (also called function calling) lets your assistant decide, based on user input and your initial system prompt, to call an external function or API endpoint you describe. Claude models expose this through a tools array you pass when generating messages, and they return special tool_use content blocks as part of the streaming response.

We’ll also:

- Describe our available "tool" (fetching a programming joke) in our prompt and in our tools array

- Let Claude decide when to invoke our tool, based on the prompt and our instructions

- On a tool call, fetch data (a programming joke) and add it to the conversation, inform the LLM that ‘it’ told a joke, then resume the conversation as normal
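As a minimal sketch of the first bullet, a tool definition for the Messages API could look like the following. The tool name get_programming_joke comes from this tutorial; the description text is illustrative and the empty schema assumes the tool takes no arguments.

```javascript
// Tool definitions passed to Claude in the `tools` parameter of a Messages API request.
// The description wording is an assumption; only the tool name comes from this tutorial.
const tools = [
  {
    name: 'get_programming_joke',
    description:
      'Fetches a random programming joke. Use this only when the caller explicitly asks for a programming joke.',
    // JSON Schema describing the tool's arguments; this tool is assumed to take none.
    input_schema: {
      type: 'object',
      properties: {},
      required: [],
    },
  },
];
```

Claude reads the description when deciding whether to call the tool, so it is worth being as specific there as you are in the system prompt.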

1. Update prompts and tool definitions in server.js

First, let’s tweak our greetings and system prompt.

These clarify (for you, the developer, and the model) what capability you’re giving Claude. Pay close attention to what you are adding about get_programming_joke:

This prompt tells Claude when to reach out to the external joke API and when to stick to its built-in knowledge.
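A prompt along these lines might look like the sketch below. The constant names and all of the wording are hypothetical, not the exact text from the example repo; the part that matters is the explicit instruction about when to use get_programming_joke.

```javascript
// Hypothetical greeting and system prompt; the wording is illustrative only.
const WELCOME_GREETING =
  'Hi! I am a voice assistant powered by Twilio and Anthropic. Ask me anything, or ask for a programming joke!';

const SYSTEM_PROMPT = [
  'You are a helpful assistant. This conversation is being translated to voice, so answer in plain spoken sentences.',
  'Do not use emojis, bullet points, or special characters.',
  'You have access to a tool named get_programming_joke.',
  'Call it only when the caller explicitly asks for a programming joke.',
  'For every other request, answer from your own knowledge without calling the tool.',
].join(' ');
```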

2. Add the tool fetching function

Let’s define the function our agent can "call": the ability to fetch programming jokes from jokeapi.dev.

Add this near the top of your server.js:
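Here is one way such a function could be written. It is a sketch under a few assumptions: the JokeAPI v2 URL and its safe-mode flag, the response fields (joke for single jokes, setup and delivery for two-part jokes), and the injectable fetchImpl parameter, which exists only so the function can be exercised without a network call.

```javascript
// Assumed endpoint: JokeAPI's Programming category with safe mode enabled.
const JOKE_URL = 'https://v2.jokeapi.dev/joke/Programming?safe-mode';

// Turn a JokeAPI response body into a single speakable string.
function formatJoke(data) {
  if (data.type === 'twopart') {
    return `${data.setup} ${data.delivery}`;
  }
  return data.joke;
}

// Fetch a joke. `fetchImpl` defaults to the global fetch available in Node 18+.
async function getProgrammingJoke(fetchImpl = fetch) {
  try {
    const response = await fetchImpl(JOKE_URL);
    if (!response.ok) {
      throw new Error(`Joke API responded with status ${response.status}`);
    }
    return formatJoke(await response.json());
  } catch (error) {
    console.error('Error fetching joke:', error);
    // Fall back to something speakable so the call never goes silent.
    return 'Sorry, I could not fetch a joke right now.';
  }
}
```

Returning a spoken fallback instead of throwing keeps the voice experience intact when the external service is down.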

3. Expand aiResponseStream to handle tool calls

This is the heart of the feature, so let’s break it down.

In the previous tutorial, you streamed tokens from Claude. Now, you both stream tokens and look for special tool_use blocks. When you see one, you:

- Fetch the needed info from your tool (e.g., get a joke)

- "Push" both the LLM trigger (tool use) and your tool result into local conversation state,

- Then send the joke (or other tool output) immediately down the WebSocket to Twilio for ConversationRelay to handle the text-to-speech step.

Your updated functions (focus on if (chunk.type === 'content_block_start' && chunk.content_block.type === 'tool_use') {):

What’s going on here?

- We define the tool metadata (in the const tools array I discussed above).

- When you are watching the streaming tokens coming back from Claude, your logic will watch for content_block_start with type: 'tool_use'. If you see that, Claude is asking you to call your tool!

- It’s straightforward from there: you fetch the result using your own logic, push the tool use and result into your conversation (so Claude can see the results, or what ‘it’ said, on its turn), and send the joke’s text to ConversationRelay.
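The detection logic described above can be sketched as a loop over the stream events. This is not the repo's aiResponseStream; processClaudeStream, onText, and runTool are hypothetical names, and the function accepts any async iterable of Messages API streaming events so it can be tried with a fake stream.

```javascript
// Consume Messages API stream events, forwarding text and running tools.
// `onText` sends text on for text-to-speech; `runTool` executes a tool by name.
async function processClaudeStream(stream, { onText, runTool }) {
  let assistantText = '';
  const toolExchanges = [];

  for await (const chunk of stream) {
    if (chunk.type === 'content_block_delta' && chunk.delta.type === 'text_delta') {
      // Ordinary text token: accumulate it and forward it immediately.
      assistantText += chunk.delta.text;
      onText(chunk.delta.text);
    } else if (chunk.type === 'content_block_start' && chunk.content_block.type === 'tool_use') {
      // Claude is asking us to call a tool.
      const { id, name } = chunk.content_block;
      const result = await runTool(name);
      toolExchanges.push({ id, name, result });
      // Speak the tool output right away.
      onText(result);
    }
  }
  return { assistantText, toolExchanges };
}
```

Running the tool as soon as content_block_start arrives works here because the joke tool is assumed to take no arguments. For a tool with inputs, the arguments arrive afterwards as input_json_delta events, so you would wait for content_block_stop and parse the accumulated JSON first.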

Why is there so much bookkeeping in conversation? We want Claude to have a full, accurate “memory” of the conversation, including tool requests and responses on each conversation turn. This matters most for non-idempotent tools: if a call isn’t recorded, Claude may ask for it again.
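The bookkeeping itself is small. A sketch, assuming the conversation is an array of Messages API message objects and using a hypothetical helper name, recordToolExchange:

```javascript
// Append a tool request and its result to the conversation in the shape the Messages API expects.
function recordToolExchange(conversation, { id, name, input = {}, result }) {
  // 1. The assistant turn containing Claude's tool_use block.
  conversation.push({
    role: 'assistant',
    content: [{ type: 'tool_use', id, name, input }],
  });
  // 2. The user turn carrying the matching tool_result, linked by tool_use_id.
  conversation.push({
    role: 'user',
    content: [{ type: 'tool_result', tool_use_id: id, content: result }],
  });
  return conversation;
}
```

The tool_use_id on the result must match the id Claude generated for the tool_use block. If Claude produced text before the tool call in the same turn, that text belongs in the same assistant message as an additional text block.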

4. Update WebSocket conversation handling

This part stays mostly the same as in our previous step, except now our session and call storage uses a slightly different data structure for tracking the ongoing conversation.

Look for this case in your WebSocket handling logic:
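A sketch of what that handling might look like, assuming an in-memory Map keyed by call SID. The names sessions, handleRelayMessage, state, and respond are hypothetical; the setup and prompt message types and the callSid and voicePrompt fields are what ConversationRelay sends over the WebSocket.

```javascript
// In-memory store: callSid -> { conversation: [...] }. Hypothetical structure.
const sessions = new Map();

// Handle one ConversationRelay message. `state` holds this socket's callSid;
// `respond` is a hypothetical async function that streams Claude's reply.
async function handleRelayMessage(rawMessage, state, respond) {
  const message = JSON.parse(rawMessage);
  switch (message.type) {
    case 'setup':
      // First message on the socket: remember the call and start an empty history.
      state.callSid = message.callSid;
      sessions.set(state.callSid, { conversation: [] });
      break;
    case 'prompt': {
      const session = sessions.get(state.callSid);
      if (!session) break;
      // Store the caller's transcribed speech as a user turn, then answer.
      session.conversation.push({ role: 'user', content: message.voicePrompt });
      await respond(session.conversation);
      break;
    }
    default:
      console.warn('Unhandled message type:', message.type);
  }
}
```

You would call this from your WebSocket library's message handler, passing the raw message text and a per-connection state object.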

That’s it! Everything else downstream (including streaming, forwarding tokens, and recognizing interruptions) continues to Just Work™️. More on that in a second.

5. Interruption handling

If you followed my prior tutorial, you already track interruptions by truncating the last assistant message and removing any "future" turns past the interruption point. That logic is unchanged:

And in your WebSocket handler:
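For readers who skipped the earlier tutorial, the truncation can be sketched as below. This is an illustration of the technique rather than the repo's code: handleInterrupt is a hypothetical name, and the sketch only inspects assistant messages stored as plain strings, skipping turns stored as content-block arrays (such as tool_use turns).

```javascript
// Truncate the conversation at the point where the caller interrupted.
// `utteranceUntilInterrupt` is the text ConversationRelay reports as spoken before the interruption.
function handleInterrupt(conversation, utteranceUntilInterrupt) {
  if (!utteranceUntilInterrupt) return false;
  // Search backwards for the assistant message containing the interrupted utterance.
  for (let i = conversation.length - 1; i >= 0; i--) {
    const message = conversation[i];
    if (message.role !== 'assistant' || typeof message.content !== 'string') continue;
    const position = message.content.indexOf(utteranceUntilInterrupt);
    if (position === -1) continue;
    // Keep only what the caller actually heard...
    message.content = message.content.slice(0, position + utteranceUntilInterrupt.length);
    // ...and drop any later turns the caller never heard.
    conversation.length = i + 1;
    return true;
  }
  return false;
}
```

In the WebSocket handler, an interrupt message from ConversationRelay carries the utteranceUntilInterrupt field, which you would pass to this function along with the session's conversation.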

6. Test your AI agent with live tool calling

Ready to go? Fire it up and give it a call!

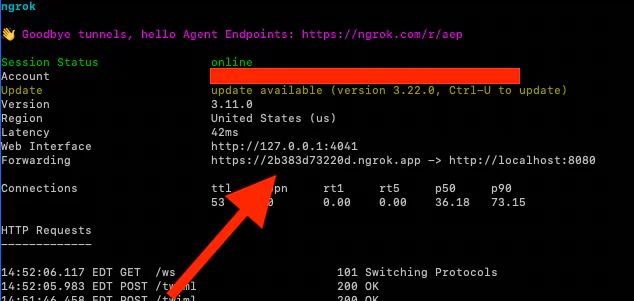

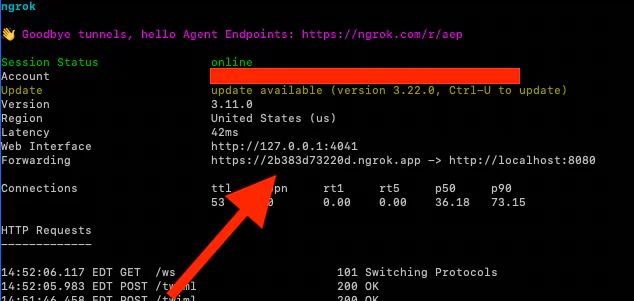

1. Run ngrok:

2. Set your NGROK_URL in .env, omitting the scheme (‘http://’ or ‘https://’) – e.g., NGROK_URL="abcdefgh.ngrok.app":

3. Start your server:

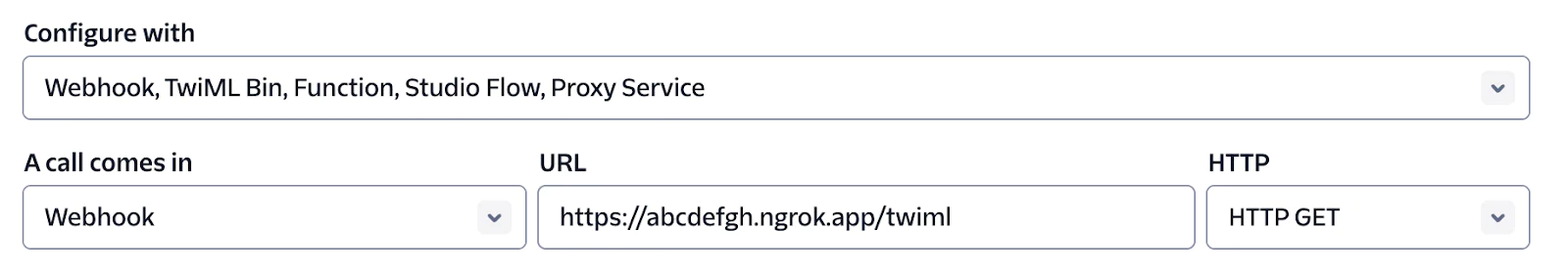

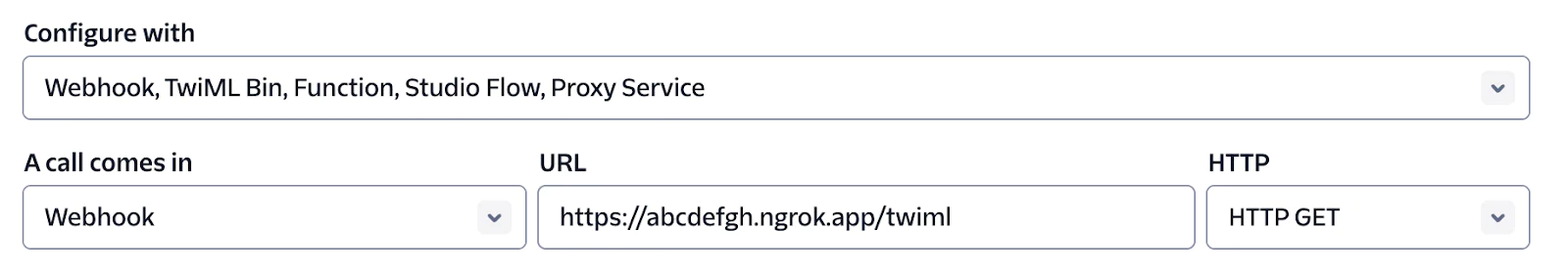

4. In your Twilio Console, go to Phone Numbers > Settings > Active Numbers. In Voice Configuration, under "A call comes in", select Webhook and HTTP POST, and set the URL to your ngrok URL followed by the path /twiml:

5. Save
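For reference, the /twiml webhook from step 4 is what points Twilio at your WebSocket, which is why NGROK_URL is stored without a scheme: the server builds a wss:// URL from it. A sketch of the TwiML such a route might return, where buildTwiml is a hypothetical helper and the /ws path is an assumption:

```javascript
// Build the TwiML returned by the /twiml webhook.
// `ngrokHost` is NGROK_URL (no scheme expected); the `/ws` path is an assumption.
function buildTwiml(ngrokHost, welcomeGreeting) {
  // Be forgiving if a scheme or trailing slash slipped into the value.
  const host = ngrokHost.replace(/^https?:\/\//, '').replace(/\/+$/, '');
  const escapeXml = (s) =>
    s.replace(/&/g, '&amp;').replace(/"/g, '&quot;').replace(/</g, '&lt;').replace(/>/g, '&gt;');
  return `<?xml version="1.0" encoding="UTF-8"?>
<Response>
  <Connect>
    <ConversationRelay url="wss://${host}/ws" welcomeGreeting="${escapeXml(welcomeGreeting)}" />
  </Connect>
</Response>`;
}
```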

Awesome!

Now, call your Twilio number. You’ll hear the greeting, then have the opportunity to chat with Claude. Ask for a programming joke to trigger the tool (and watch your console).

Try: “Can you tell me a programming joke?”

Claude will ask your server to invoke the tool, the server will call the Joke API in clean mode, and you’ll hear the joke streamed back as soon as it’s fetched! I hope you laugh…

What else can you build with Claude and Twilio ConversationRelay?

By adding tool calling to your integration, you just gave your Twilio and Claude voice AI agent agency: it can reach inside your company or outside on the internet, use tools, and make your voice application richer and more interactive. Try connecting to different APIs, or branch further and experiment with complex tool schemas, workflows, or even full assistants.

Now, with your tool or function integrations, Claude can:

- Schedule things on your calendar

- Book appointments

- Look up weather, sports, or news

- Fetch info from any REST API (...or follow your own business logic)

All you have to do is define the right tool in the tools array, and you and Claude are off to the races.

More resources

Inspired? I know I was! Here are some places to visit next for more on ConversationRelay and Anthropic (including the earlier tutorials in this series):

- ConversationRelay Docs

- Anthropic Tool Use API Docs

- Integrate Claude, Anthropic’s AI Assistant, with Twilio Voice Using ConversationRelay

- Add Token Streaming and Interruption Handling to a Twilio Voice Anthropic Integration

Paul Kamp is Technical Editor-in-Chief at Twilio. He’s occasionally funnier than jokeapi.dev. (Or, at least, he thinks so.) You can contact him at pkamp [at] twilio.com.
