Creating and Sending Videos via MMS with FFmpeg and Twilio


In this tutorial, you'll learn to build a Node.js application that creates short videos from images and text using FFmpeg, then sends these videos as MMS messages via the Twilio Programmable Messaging API. You'll use the fluent-ffmpeg library as a Node.js interface to FFmpeg, and the Twilio API for messaging.

FFmpeg is a versatile tool that allows for a wide range of multimedia manipulations, including encoding, decoding, and applying various filters to audio and video streams. In this project, you will leverage its capabilities to overlay text on images and combine these into video scenes.

The Twilio Programmable Messaging API is a service that allows developers to programmatically send and receive SMS, MMS, and WhatsApp messages from their applications.

The fluent-ffmpeg library provides a fluent API to interact with FFmpeg, simplifying the process of defining complex video and audio processing tasks within Node.js.

By the end of this tutorial, you will have an application that generates videos such as this:

You can download the video here.

Tutorial Requirements:

To follow this tutorial, you will need the following components:

- Node.js and npm installed.

- FFmpeg installed (version 4.3.0 or above).

- Ngrok installed and the auth token set.

- A free Twilio account.

- A free Ngrok account.

Setting up the environment

In this section, you will create the project directory, initialize a Node.js application, and install the required packages.

Open a terminal window and navigate to a suitable location for your project. Run the following commands to create the project directory and navigate into it:

Use the following command to create a directory named images, where the application will store the images that will be used to create the video:

Next, use the following command to create a directory named audio, where the application will store the audio file that will serve as the background music for the video:

Use the following command to create a directory named videos, where the application will store the generated video files:

Run the following command to create a new Node.js project:

After the project is created, open the package.json file and add the "type": "module" property so that Node.js treats the project's .js files as ES modules. The file should look similar to this:

Now, use the following command to install the packages needed to build this application:

With the command above, you installed the following packages:

- fluent-ffmpeg: a Node.js library that provides an interface to FFmpeg for video processing.

- dotenv: a Node.js package that loads environment variables from a .env file into process.env. It will be used to retrieve your Twilio API credentials.

- twilio: a package that allows you to interact with the Twilio API. It will be used to send MMS messages.

- express: a web framework for Node.js used to build web applications and APIs. It will be used to serve the generated video file.

Collecting and storing your credentials

In this section, you will collect and store your Twilio credentials that will allow you to interact with the Twilio API.

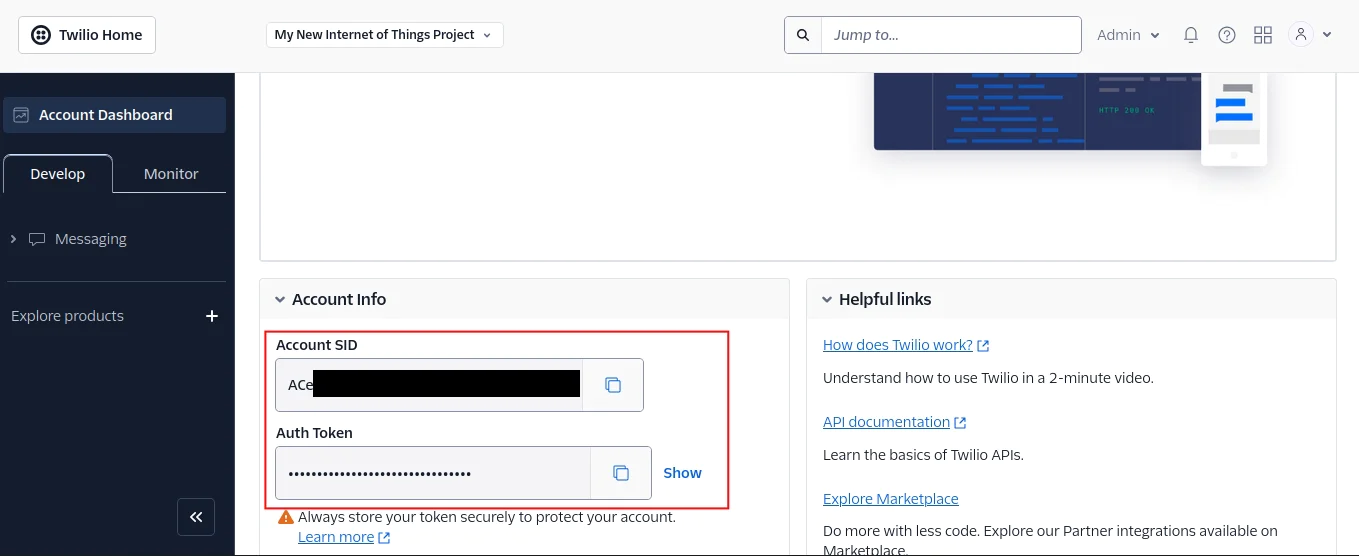

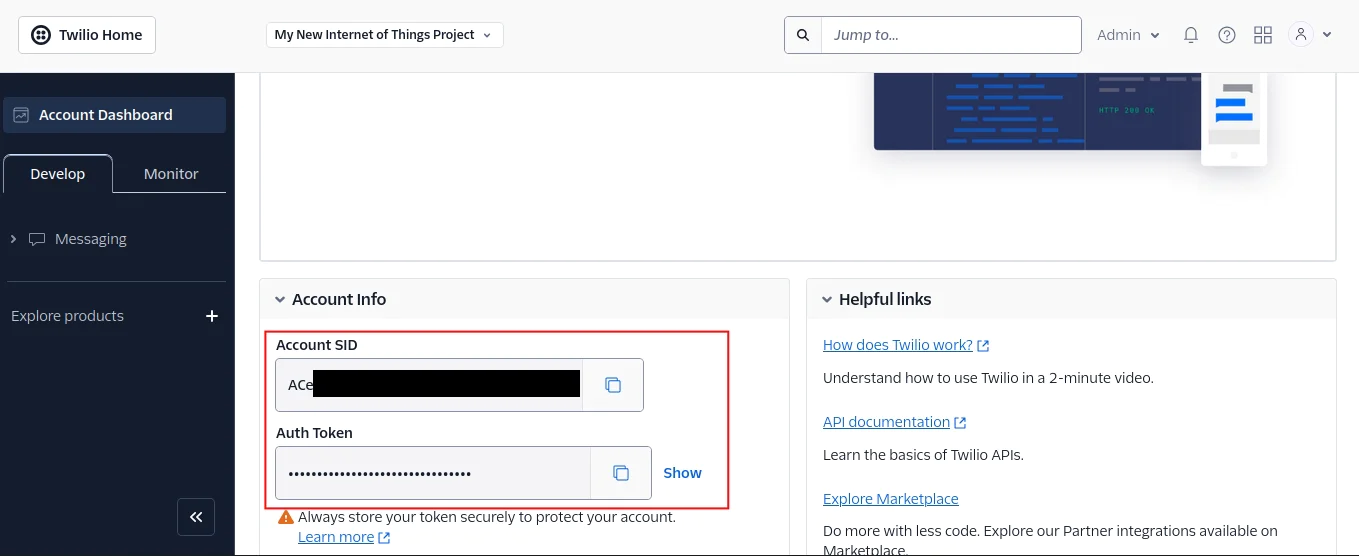

Open a new browser tab and log in to your Twilio Console. Once you are on your console, copy the Account SID and Auth Token, create a new file named .env in your project’s root directory, and store these credentials in it:

The .env file should look similar to this:

Preparing Pre-generated Assets

In this section, you will download three images and one audio file that will be used to generate the video. Then you will define the content for each scene of your video and store this data in a JSON file.

Download the first image from this link and save it in the images directory as twilio-email.png. Do the same for the second and third images, saving them as twilio-messaging.png and twilio-voice.png, respectively. These three images were originally downloaded from the Twilio website.

Next, download the audio file found in this link, which will serve as the background music for your video, and save it in the audio directory as background-music.mp3. This file is a trimmed version of an audio track originally downloaded from Pixabay.

Next, create a file named scenes.json in the project’s directory and add the following code to it:

Each object in the JSON array represents a single scene and contains four properties: textContent, textPosition, imagePath, and transition.

The textContent property holds the text that will be displayed as an overlay on the image for that scene.

The textPosition property specifies the desired horizontal placement of this text, with values such as left or right.

The imagePath property provides the local file path to the image that will be used as the visual for this scene.

Lastly, the transition property defines the visual effect to be used when transitioning to the subsequent scene.
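As a rough illustration of the scene shape described above, the sketch below checks that each scene carries the expected properties. The validateScenes() helper and the sample scene values are hypothetical and not part of the tutorial's code. The sketch assumes the last scene does not need a transition, since no scene follows it. The slideleft value is one of the transition names accepted by FFmpeg's xfade filter.

```javascript
// Hypothetical helper (not from the tutorial): lists problems found in a scenes array.
const REQUIRED_SCENE_KEYS = ['textContent', 'textPosition', 'imagePath', 'transition'];

function validateScenes(scenes) {
  if (!Array.isArray(scenes)) {
    return ['scenes must be an array'];
  }
  const problems = [];
  scenes.forEach((scene, index) => {
    const isLastScene = index === scenes.length - 1;
    for (const key of REQUIRED_SCENE_KEYS) {
      // Assumption: the final scene has no following scene, so its transition is optional.
      if (key === 'transition' && isLastScene) continue;
      if (typeof scene?.[key] !== 'string') {
        problems.push(`scene ${index}: "${key}" is missing or not a string`);
      }
    }
  });
  return problems;
}

// Illustrative scene only; the text content is a placeholder.
const sampleScenes = [
  {
    textContent: 'Placeholder text for the first scene',
    textPosition: 'left',
    imagePath: 'images/twilio-email.png',
    transition: 'slideleft',
  },
];

// An empty array means no problems were found.
console.log(validateScenes(sampleScenes));
```

Running a check like this before invoking FFmpeg would surface a misspelled property name early, rather than as a harder-to-read FFmpeg error.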

The generated video will have three scenes. The first features the twilio-email.png image with text about Twilio's email capabilities on the left, transitioning with a slide left. The second shows twilio-messaging.png with text about Twilio's voice features on the right, using a slide right. The final scene displays twilio-voice.png with text about Twilio's SMS messaging on the left, with no transition.

Please ensure that the image files specified in the imagePath property of each scene in scenes.json are present in the images directory and that the names match.

Creating the scenes

In this section, you will create a file named videoGenerator.js. This file will contain the logic to read the scenes.json file, process each scene by adding the text overlay to the corresponding image using FFmpeg, and save each scene as a separate video file in the videos directory.

In your project root directory, create a file named videoGenerator.js and add the following code to it:

The code added begins by importing the necessary libraries: fluent-ffmpeg for interacting with FFmpeg and fs for file system operations.

Next, the code reads the content of the scenes.json file synchronously using fs.readFileSync(), specifying UTF-8 encoding, and then parses the JSON string into a JavaScript object named scenes. This array of scene objects will drive the video creation process.

Add the following code below the scenes object:

Here, you defined a function named wrapTextForDisplay() which will be used to format the text that will be overlaid on the video frames. This function takes a text string and an optional maxCharsPerLine argument (defaulting to 20). It splits the text into words and then reconstructs it into lines, ensuring that no line exceeds the specified maximum number of characters.
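The word-wrapping behavior described above can be sketched as follows. This is a minimal version written from the description, not necessarily the tutorial's exact listing. It assumes that lines are joined with newline characters and that a single word longer than the limit is kept whole on its own line.

```javascript
// Sketch of a greedy word wrap: fill each line with as many words as fit.
function wrapTextForDisplay(text, maxCharsPerLine = 20) {
  const words = text.split(/\s+/).filter(Boolean);
  const lines = [];
  let currentLine = '';

  for (const word of words) {
    const candidate = currentLine ? `${currentLine} ${word}` : word;
    if (candidate.length <= maxCharsPerLine || currentLine === '') {
      // The word fits, or it starts a new line (an over-long word is kept whole).
      currentLine = candidate;
    } else {
      // The word does not fit: close the current line and start a new one.
      lines.push(currentLine);
      currentLine = word;
    }
  }
  if (currentLine) lines.push(currentLine);

  return lines.join('\n');
}

// Prints two lines: "Send and receive" and "text messages".
console.log(wrapTextForDisplay('Send and receive text messages'));
```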

Create a function named saveSceneTextToFile() below the wrapTextForDisplay() function:

The saveSceneTextToFile() function takes a text string as input, wraps it using the wrapTextForDisplay() function, and then writes the wrapped text to a temporary file named scene-text.txt within the videos directory. This text file will be used by FFmpeg to draw the text onto the video frame for each scene.

Now, create a function named createScene() below the saveSceneTextToFile() function:

The createScene() function, which takes the imagePath, textPosition, and index as arguments, is key to turning a still image into a short video clip with text on top. It does this by building a detailed instruction for FFmpeg, the tool you're using through the fluent-ffmpeg library.

First, three variables named sceneTextFilePath, outputFilePath, and xPos are declared. sceneTextFilePath indicates the location of the file containing the text that will be overlaid on the image. outputFilePath sets the generated output video name and storage location. xPos is used to store the dynamically calculated horizontal position for the text overlay. This calculation is based on the textPosition argument: if it's "left", the text will be positioned 20 pixels from the left edge; otherwise, an FFmpeg-compatible expression is used to position it 20 pixels from the right edge.

Next, .addInput(imagePath) tells FFmpeg that the image file at imagePath is the starting point for this video scene.

To make this still image last for a set time in the video, you use .inputOptions(['-loop 1', '-framerate 30']). The -loop 1 option makes FFmpeg read the image repeatedly, so it stays visible for the whole scene instead of producing a single frame. The -framerate 30 option sets the input to 30 frames per second, which, together with the duration set next, determines how many frames the scene contains.

Next, .outputOptions(['-t 5']) sets how long the video for this scene will be. The -t 5 option makes sure the video is exactly 5 seconds long, made from your looping image at 30 frames per second.

To make the video look better, you add filters to the .videoFilters(...) section. The first filter added is the scale filter, which changes the image to be 640 pixels wide and 480 pixels tall (scale=640x480). This makes all the video scenes the same size.

The second filter added is drawtext, which puts text on the video. The argument textfile='${sceneTextFilePath}' tells FFmpeg to read the text from the file at sceneTextFilePath. This file, which was generated earlier, has the text for the current scene.

The text font is set to Arial (font=arial), the text color to white (fontcolor=white), and the font size to 25 pixels (fontsize=25). The horizontal position is set to the dynamically calculated value stored in xPos (x='${xPos}'). The vertical position is set to the vertical center of the frame (y=(h-text_h)/2). A line spacing of 10 pixels is applied to improve readability for multi-line text (line_spacing=10).

Finally, a fade-in effect is added to the text using alpha='min(t/2,1)'. This makes the text slowly appear over the first two seconds of the video.
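To make the filter options above concrete, the sketch below assembles the two filter strings from the values named in the text. The helper names getTextXPosition() and buildSceneFilters() are hypothetical, and the right-aligned expression w-text_w-20 is an assumption based on the variables that drawtext exposes (w for the frame width, text_w for the rendered text width). The resulting array is the kind of value that could be passed to .videoFilters().

```javascript
// Hypothetical helper: horizontal position of the text, 20 pixels from either edge.
function getTextXPosition(textPosition, margin = 20) {
  // In drawtext expressions, "w" is the frame width and "text_w" the text width.
  return textPosition === 'left' ? `${margin}` : `w-text_w-${margin}`;
}

// Hypothetical helper: builds the scale and drawtext filter strings for one scene.
function buildSceneFilters(sceneTextFilePath, textPosition) {
  const xPos = getTextXPosition(textPosition);
  const drawtextOptions = [
    `textfile='${sceneTextFilePath}'`, // read the overlay text from a file
    'font=arial',
    'fontcolor=white',
    'fontsize=25',
    `x='${xPos}'`,
    'y=(h-text_h)/2', // vertically centered
    'line_spacing=10',
    "alpha='min(t/2,1)'", // fade in over the first two seconds
  ].join(':');

  return ['scale=640x480', `drawtext=${drawtextOptions}`];
}

console.log(buildSceneFilters('videos/scene-text.txt', 'right'));
```

Quoting the alpha expression keeps its comma from being read as a separator between filters.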

The finished video scene is then saved using the .output(outputFilePath) command. Event listeners (.on('end', ...), .on('stderr', ...), .on('error', ...)) are used to track the FFmpeg process: successful completion, FFmpeg's log output (which it writes to standard error), and errors. Lastly, .run() starts the FFmpeg command to make the video scene.

Add the following code below the createScene() function:

Here, the code defines an asynchronous function named generateVideo() and then calls it. This function takes the scenes array as input.

It iterates through each scene defined in your data. For every scene, it first extracts the relevant information: the text content (scene.textContent), the image path (scene.imagePath), and the desired text position (scene.textPosition). It then calls the createScene() function, providing this information along with the scene's index, to generate the corresponding video file.

Finally, it logs the progress and checks if each scene was created successfully. If any scene creation fails, the function logs an error and exits.

Go back to your terminal, and use the following command to generate a video for each scene:

Use the following command to list the contents of the videos directory:

After running the command above, you will find three generated video files (scene-0.mp4, scene-1.mp4, and scene-2.mp4) and one text file (scene-text.txt) in the videos directory.

Open one of the videos, and you will see a video similar to this:

Concatenating the scenes

In this section, you will update the videoGenerator.js file to include functionality that concatenates the individual scene videos created in the previous step into a single final video. You will also add background music to this final video and implement a cleanup function to remove the individual scene video files after the final video is generated.

Add the following code below the createScene() function:

The updated videoGenerator.js file now includes a new asynchronous function named concatenateScenes(). The concatenateScenes() function takes the scenes object as an argument, and it merges your video scenes into one final video and adds background music to it.

First, it defines the file paths for the individual video scenes, the background music, and the generated output video.

Next, the function starts an FFmpeg command, and you instruct it to take each scene video as a sequential input. Following this, you specify your background music file as another input source.

The blending of video scenes occurs in the .filterGraph(...) section. Here, you use the xfade filter to create transitions between scenes. For each transition, you indicate which two video inputs to blend. The type of transition is determined by data from your scenes.json. The duration of each transition (duration=...) is set to half a second, and you specify when each transition should begin (offset=...) in the combined video.
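The offset arithmetic is easier to follow in code. The sketch below is an assumption about how such a filter graph could be assembled, not the tutorial's exact listing. The buildXfadeFilters() name and the intermediate [v1] label are hypothetical, while the 5-second scene length, the half-second transition, and the [v-out] label come from the text. Because each transition overlaps two scenes by half a second, every additional scene adds 4.5 seconds to the combined video, so transition number k starts at k × 4.5 seconds.

```javascript
// Hypothetical helper: builds one xfade filter string per pair of adjacent scenes.
function buildXfadeFilters(scenes, sceneDuration = 5, transitionDuration = 0.5) {
  const filters = [];
  let previousLabel = '[0:v]'; // the video stream of the first input

  for (let i = 0; i < scenes.length - 1; i++) {
    const isLastTransition = i === scenes.length - 2;
    const outputLabel = isLastTransition ? '[v-out]' : `[v${i + 1}]`;
    // Each earlier scene contributes its length minus the overlapping transition.
    const offset = (i + 1) * (sceneDuration - transitionDuration);

    filters.push(
      `${previousLabel}[${i + 1}:v]xfade=transition=${scenes[i].transition}` +
        `:duration=${transitionDuration}:offset=${offset}${outputLabel}`
    );
    previousLabel = outputLabel;
  }
  return filters;
}

// Illustrative scenes; only the transition property matters here.
const exampleScenes = [
  { transition: 'slideleft' },
  { transition: 'slideright' },
  { transition: '' }, // the last scene's transition is never used
];

console.log(buildXfadeFilters(exampleScenes));
```

For three scenes, this produces two transitions starting at 4.5 and 9 seconds, and a final video of 14 seconds.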

In the .outputOptions(...) section, you configure the output. You select the processed video stream with transitions (-map [v-out]) and your background music stream (-map 3:a, the fourth input, which follows the three scene videos at indexes 0 to 2). You also specify AAC audio encoding (-c:a aac) and use -shortest to stop the output when the shortest stream ends, so a longer music track is cut to the video's length.

Finally, .output(outputFilePath) sets the save location for the final video (videos/final-video.mp4). Event listeners are used to track the FFmpeg process: successful completion, FFmpeg's log output (standard error), and errors. The .run() command then executes the FFmpeg instruction to combine your video scenes with the background audio.

Add the following function below the concatenateScenes() function:

The cleanupDir() function takes a directory path as input (videos in this case). It reads the contents of the directory and iterates through each file. If a file's name does not include final-video.mp4, it is deleted using fs.rmSync. This function ensures that only the final generated video remains in the videos directory after the process is complete, cleaning up the individual scene files and any temporary text files. This function could also be used to clean the images directory should the need arise.

Now, replace the contents of the generateVideo() function with the following:

The generateVideo() function is updated to first generate the individual scene videos as before. After all scenes are created successfully, it calls concatenateScenes() passing the scenes object as an argument to merge the scenes into a final video.

Finally, it calls cleanupDir() and passes the videos directory name as an argument to remove the intermediate scene files (video and text), leaving only the final-video.mp4 file. The function now returns a boolean indicating whether the entire video generation process (including concatenation and cleanup) was successful.

Go back to your terminal and run the following command to generate the final video:

After running the command above, the videos directory should contain a file named final-video.mp4. Open the video and you should see the following:

Feel free to play around with the transition property in each scene by choosing one of the values found in the Xfade filter wiki.

Here is how the video would look if you changed the first scene transition to dissolve and the second to pixelize:

Before moving to the next section, go back to the top of the videoGenerator.js file and comment out the lines where you retrieve the scenes from the scenes.json file, then go to the bottom and comment out the line where you call the generateVideo() function.

Creating the Server

In this section, you will create a simple Express.js server to serve the generated video file and use Ngrok to expose it. This is necessary because Twilio needs a publicly accessible URL to fetch the video for sending via MMS.

Create a file named server.js in the project’s root directory and add the following code to it:

The server.js file starts by importing the express module, which is used to create and configure the web server.

Next, an instance of the Express application is created and assigned to the app constant. The port constant is set to 3000, which will be the port on which the server listens for incoming requests.

Add the following code to the bottom of this server.js file:

The code added uses the express.static() middleware to serve files from the videos directory and sets the MIME type to 'video/mp4'. This makes all the files within the videos directory, including the generated final-video.mp4, accessible via HTTP URLs. For example, navigating to http://localhost:3000/final-video.mp4 would serve the generated video file.

Now, add the following code to start the server:

Finally, the app.listen() method starts the server and makes it listen for connections on the specified port (3000). The callback function passed to app.listen() logs a message to the console indicating that the server has started and provides the URL at which it can be accessed.

Go back to the terminal and run the following command to start the server application:

To make this server accessible to Twilio, which requires a public URL, you will use Ngrok.

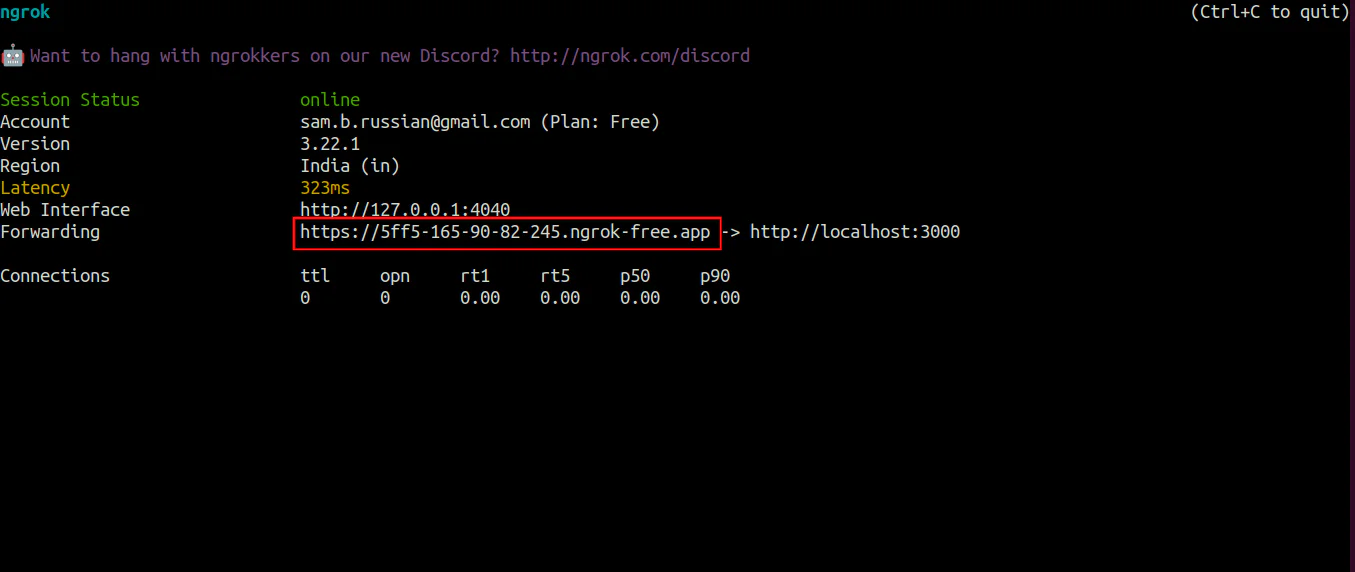

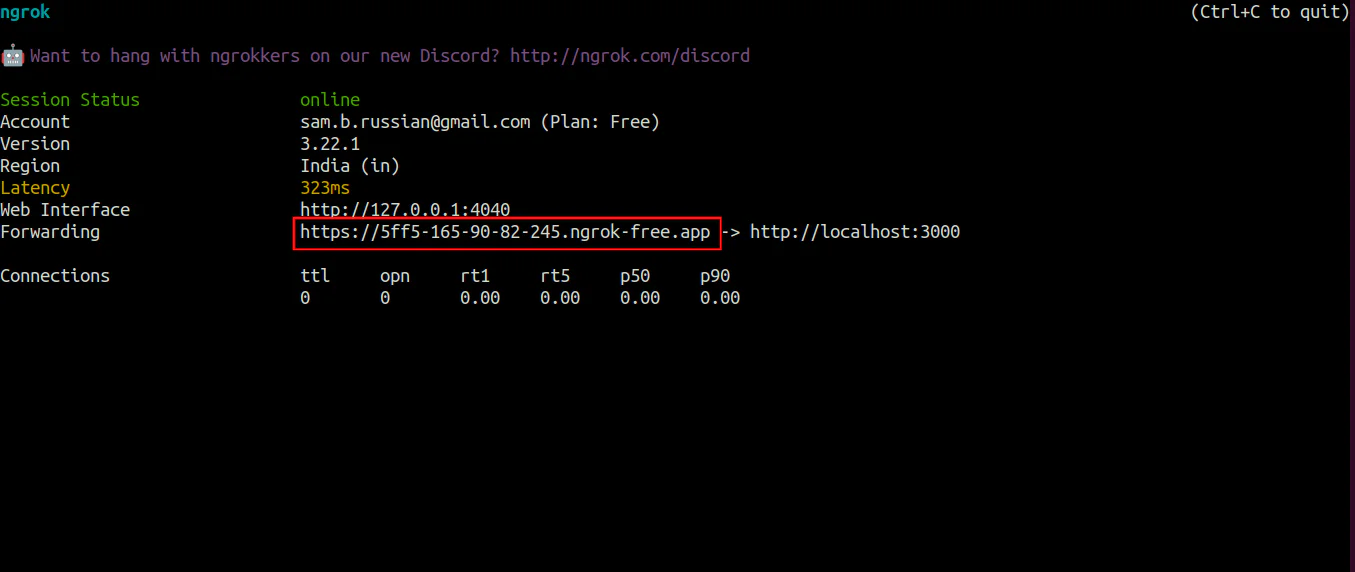

Open another terminal tab and run the following command:

With the command above, Ngrok creates a secure tunnel to your local machine, providing a temporary public URL that you can use to expose your local server to the internet.

Copy the public HTTPS URL it provides and store it in the .env file as NGROK_URL. This URL will be used in the next step to configure Twilio to access the generated video.

Sending the video via MMS

In this section, you will create a file named twilioHelper.js, which will contain the function responsible for sending the generated video as an MMS message using the Twilio API.

Create a file named twilioHelper.js in the project’s root directory and add the following code to it:

The code begins by importing the twilio library and the dotenv/config module to load environment variables from the .env file. It then initializes a Twilio client using your Twilio Account SID and Auth Token, stored as environment variables (TWILIO_ACCOUNT_SID and TWILIO_AUTH_TOKEN). Additionally, it retrieves the Ngrok server URL (NGROK_URL) and stores it in the serverURL constant.

Add the following function to the bottom of the twilioHelper.js file:

The sendVideoViaMMS() function is an asynchronous function responsible for sending the MMS message. It first defines placeholder variables for the recipient's phone number (recipientPhoneNumber) and your Twilio phone number (twilioPhoneNumber). It then specifies the name of the video file to be sent (final-video.mp4). Please note that you will need to replace the placeholder strings with actual phone numbers for the application to work.

The core of this function is the call to twilioClient.messages.create(), which sends the message. It requires a configuration object specifying the message body (Here is the generated video), the to and from phone numbers, and the mediaUrl. This mediaUrl is an array containing the publicly accessible URL of your generated video, constructed using your serverURL and the video filename, enabling Twilio to fetch and send it.
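The configuration object described above can be sketched without contacting Twilio. The buildMmsPayload() helper, the example ngrok hostname, and the phone numbers below are hypothetical placeholders. The sketch assumes the server URL points at the site root, as the public ngrok URL does.

```javascript
// Hypothetical helper: builds the options object for twilioClient.messages.create().
function buildMmsPayload(serverURL, videoFileName, to, from) {
  // Resolve the file name against the public base URL of the server.
  const videoUrl = new URL(videoFileName, serverURL).href;

  return {
    body: 'Here is the generated video',
    to,
    from,
    mediaUrl: [videoUrl], // Twilio fetches the media from this public URL
  };
}

// Placeholder values for illustration only.
const payload = buildMmsPayload(
  'https://example.ngrok-free.app',
  'final-video.mp4',
  '+15555550100',
  '+15555550199'
);

console.log(payload.mediaUrl[0]); // https://example.ngrok-free.app/final-video.mp4
```

Building the URL with the URL class, rather than by string concatenation, gives the same result whether or not the stored server URL ends with a slash.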

If the message is sent successfully, the function logs the Message SID (a unique identifier for the sent message) and returns true. If an error occurs during the process, it is caught, logged to the console, and the function returns false.

Finally, this file exports the sendVideoViaMMS() function, making it available for use in other modules of the application.

Bringing It All Together

In this section, you will create a file named main.js, which will serve as the entry point for your application. This file will import the functions for generating the video and sending the MMS, and it will manage the entire process.

In your project’s root directory, create a file named main.js and add the following code to it:

The code starts by importing the generateVideo() function from videoGenerator.js and the sendVideoViaMMS() function from twilioHelper.js. It also imports the fs module to read the scenes.json file.

Add the following code to the bottom of this file:

Here, the code reads the scenes.json file synchronously, parses its content into the scenes array, and logs the scenes to the console, similar to how it was done in videoGenerator.js. This ensures that the scene data is available for the video generation process.

Now, add a function named generateAndSendVideo() to the bottom of this file:

The generateAndSendVideo() function added is an asynchronous function that orchestrates the video generation and sending process. It first calls the generateVideo() function, passing the scenes array to create the video. It then checks the return value of generateVideo(). If it returns false (indicating a failure in video generation), it logs an error message and exits.

Add the following code to the bottom of the generateAndSendVideo() function:

With the code added, the function proceeds to call the sendVideoViaMMS() function to send the generated video as an MMS message. It again checks the return value. If sendVideoViaMMS() returns false (indicating a failure in sending the MMS), it logs an error message and exits.

If both the video generation and MMS sending are successful, the function logs a success message to the console.

Add the following line below the generateAndSendVideo() function:

Finally, the generateAndSendVideo() function is called to initiate the entire process. This file ties together the video generation and MMS sending functionalities, creating a complete application for automating video MMS sending based on pre-defined scenes.

Open another terminal tab and run the following command to generate and send the video:

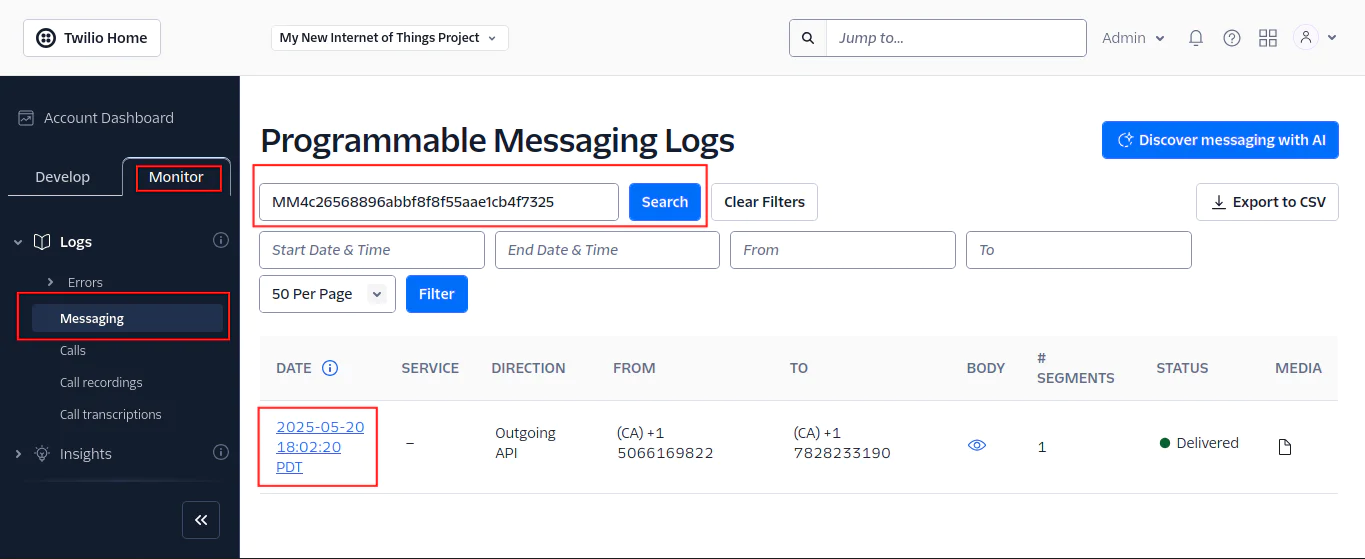

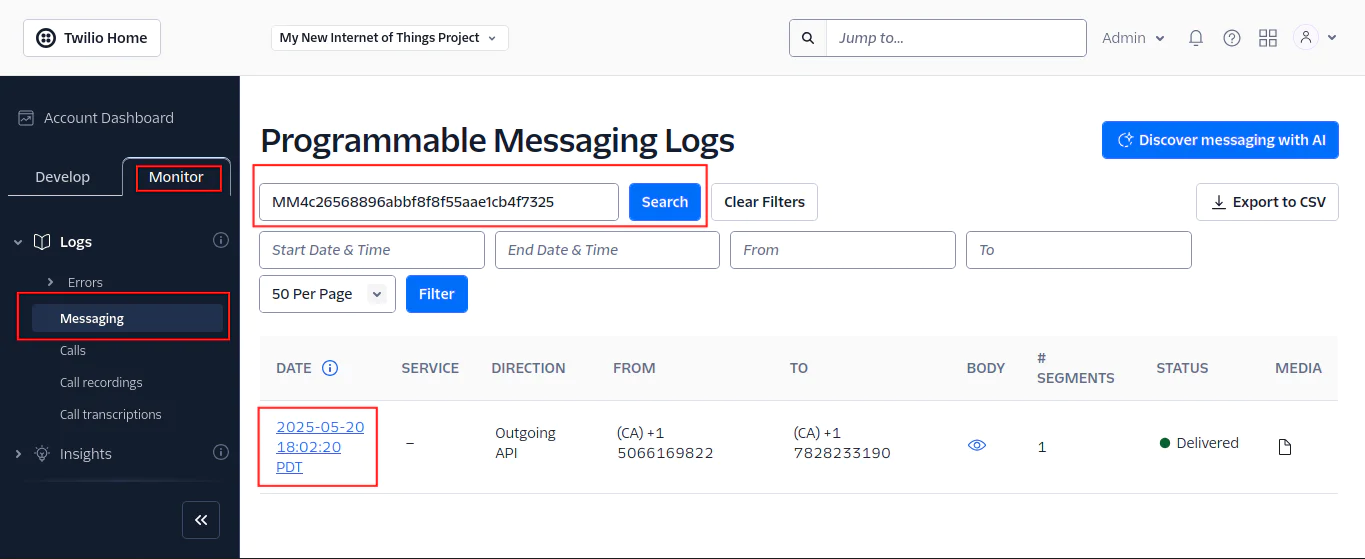

Once the video is generated and sent, copy the message SID in the terminal.

Next, go back to your Twilio Console, click the Monitor tab in the Account Dashboard, and then click Messaging. Once the page loads, use the search bar to find the message you just sent by its message SID.

Select the message found, and you should see the MMS message you just sent.

Conclusion

In this tutorial, you've built a Node.js app to create short videos from images and text using FFmpeg and send them as MMS messages with Twilio. You learned to organize your content in JSON, use fluent-ffmpeg for video creation, and Twilio for sending.

This project gives you a good starting point. You can try more video effects, use different content sources, or improve how the videos are sent.

Combining tools like FFmpeg and Twilio lets you dynamically create and send videos. The skills you've learned here will help you explore more ways to use video in your applications. Keep experimenting and building!

The code for the entire application is available in the following repository: https://github.com/CSFM93/twilio-ffmpeg-mms.

Carlos Mucuho is a Mozambican geologist turned developer who enjoys using programming to bring ideas into reality. https://twitter.com/CarlosMucuho
