Build a Basketball SMS Chatbot with LangChain Prompt Templates in Python


As I've played with the OpenAI API (and DALL·E and fine-tuning models) and gotten more into ML, developers I meet (as well as my wonderful coworker Craig Dennis who advised me on this tutorial) keep telling me to use LangChain, a powerful and flexible framework for developing applications powered by language models.

Read on to learn how to build an SMS chatbot using LangChain prompt templates, OpenAI, Twilio Programmable Messaging, and Python.

Large Language Models

Large Language Models (LLMs) are trained on large quantities of textual data to produce human-like responses to dialogue or other natural language inputs. In ChatGPT's case, that training data is a large share of the public internet up to 2021, so you can provide more recent context or data that the model is missing with prompt engineering and Prompt Templates (more on those later).

To yield these natural language responses, LLMs use deep learning (DL) models, which use multi-layered neural networks to process, analyze, and make predictions with complex data.

LangChain

LangChain came out in late 2022 and, at the time of writing, already has over 43,000 stars on GitHub and a thriving community of contributors.

At its core, LangChain is an open-source framework that simplifies the development of applications using large language models (LLMs), such as those from OpenAI or hosted on Hugging Face. Developers can use it for chatbots, Generative Question-Answering (GQA), summarization, and more.

With LangChain, developers can “chain” together different LLM components to create more advanced use cases around LLMs. Chains can consist of multiple components from several modules:

- Prompt Templates: Prompt templates are templates for different types of prompts, such as “chatbot”-style templates, ELI5 question-answering, etc.

- LLMs: Large language models like GPT-3, BLOOM, and models hosted on Hugging Face.

- Agents: Agents use LLMs to decide what actions should be taken. Tools like web search or calculators can be used, and all are packaged into a logical loop of operations.

- Memory: Short-term memory, long-term memory.

LangChain recognizes the power of prompts and has built an entire set of objects for them. It also provides a wrapper around different LLMs so you can easily change models, swapping them out with different templates. The chat model could be different, but running and calling it is the same–a very Java-like concept!

LangChain Twilio Tool

Recently, LangChain came out with a Twilio tool so your LangChain Agents are able to send text messages. Your LLM can understand input in natural language on its own, while Agents let it complete tasks such as calling an API.

You'll need your own Twilio credentials and to install it with pip install twilio. The code for the tool would look something like this:
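The sketch below assumes the 2023-era import path `langchain.utilities.twilio` (newer LangChain releases moved this wrapper into `langchain_community`, so check the docs for your version). The helper names `twilio_settings` and `send_text`, and the idea of reading credentials from these three environment variables, are choices made here for illustration.

```python
import os
from typing import Mapping


def twilio_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Collect the three values the Twilio tool needs (hypothetical helper)."""
    return {
        "account_sid": env["TWILIO_ACCOUNT_SID"],
        "auth_token": env["TWILIO_AUTH_TOKEN"],
        "from_number": env["TWILIO_FROM_NUMBER"],  # your Twilio phone number
    }


def send_text(body: str, to: str) -> str:
    """Send `body` to the phone number `to` through LangChain's Twilio wrapper."""
    # Imported here so this file still loads before langchain and twilio are installed.
    from langchain.utilities.twilio import TwilioAPIWrapper

    twilio = TwilioAPIWrapper(**twilio_settings())
    return twilio.run(body, to)
```

With real credentials exported in your shell, `send_text("hello world", "<your phone number>")` would send one SMS from your Twilio number.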

This tutorial will, however, show you how to use LangChain Prompt Templates with Twilio to make an SMS chatbot.

LangChain Prompt Templates

“Prompts” refer to the input to the model and are usually not hard-coded, but are more often constructed from multiple components. A Prompt Template helps construct this input. LangChain provides several classes and functions to make constructing and working with prompts easy.

Prompts being input to LLMs are often structured in different ways so that we can get different results. For Q&A, you could take a user’s question and reformat it for different Q&A styles, like conventional Q&A, a bullet list of answers, or even a summary of problems relevant to the given question. You can read more about prompts here in the LangChain documentation.

Prompt templates offer a reproducible way to generate a prompt. Like a reusable HTML template, you can share, test, reuse, and iterate on a prompt template, and when you update it, the change carries over to anyone else using it and to every app built on it.

Prompt templates contain a text string (AKA “the template”) that can take in a set of parameters from the user and generate a prompt.

Importing and initializing a LangChain PromptTemplate class would look like so:
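The sketch below assumes the `langchain.prompts` import path and a single `{query}` placeholder. The template text and the function name `make_prompt` are stand-ins chosen here for illustration, not the tutorial's original template.

```python
# A stand-in template: plain text with one {query} placeholder.
TEMPLATE = """You are a helpful assistant that answers in one short sentence.

Question: {query}

Answer: """


def make_prompt(query: str) -> str:
    """Render TEMPLATE with LangChain's PromptTemplate (hypothetical helper)."""
    # Imported here so this file still loads before `pip install langchain`.
    from langchain.prompts import PromptTemplate

    prompt_template = PromptTemplate(
        input_variables=["query"],  # names that appear as {placeholders}
        template=TEMPLATE,
    )
    # format() substitutes the user's text into the placeholder.
    return prompt_template.format(query=query)
```

Calling `make_prompt("Who is Steph Curry?")` returns the full prompt string with the question filled in, ready to pass to an LLM.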

Since OpenAI LLMs lack data after September 2021, they can't answer questions about anything that occurred later without additional context. Prompt templates help provide that context, but they differ from fine-tuning: fine-tuning is like coaching the model with new data to get certain output, whereas prompt engineering with Prompt Templates gives the model specific data at request time to help it produce the output you want.

Let's use this template to build the chatbot.

Prerequisites

- A Twilio account - sign up for a free one here

- A Twilio phone number with SMS capabilities - learn how to buy a Twilio Phone Number here

- OpenAI Account – make an OpenAI Account here

- Python installed - download Python here

- ngrok, a handy utility to connect the development version of our Python application running on your machine to a public URL that Twilio can access.

Configuration

Since you will be installing some Python packages for this project, you will need to make a new project directory and a virtual environment.

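If you're using a Unix or macOS system, open a terminal and run these commands in order: mkdir lc-sms, cd lc-sms, python3 -m venv venv, source venv/bin/activate, and finally pip install langchain openai twilio flask python-dotenv. (The virtual environment name venv is just a common choice.)

If you're following this tutorial on Windows, run the same sequence in a command prompt window, using python -m venv venv to create the virtual environment and venv\Scripts\activate to activate it.

The last command uses pip, the Python package installer, to install the packages that you are going to use in this project, which are: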

- The OpenAI Python client library, to send requests to OpenAI's GPT-3 engine. You could use a different LLM, listed here in the LangChain docs.

- The Twilio Python Helper library, to work with SMS messages.

- The Flask framework, to create the web application in Python.

- The python-dotenv library, to load environment variables from a .env file.

- The LangChain library, for building applications with LLMs through composability.

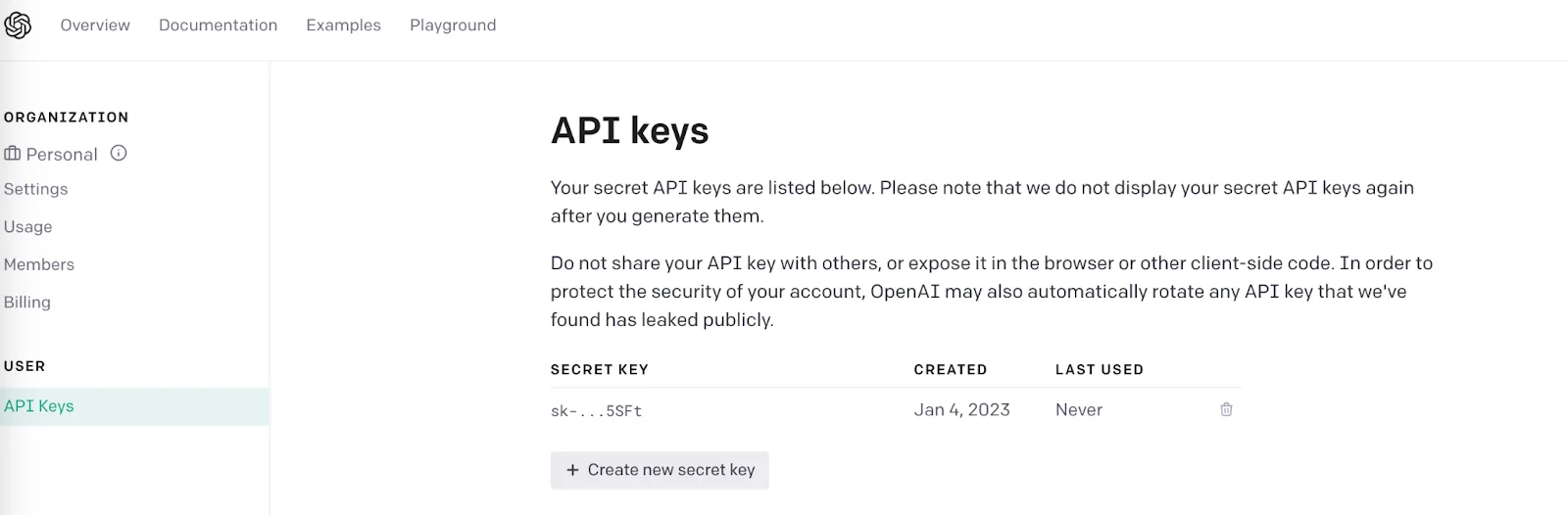

As mentioned above, this project needs an OpenAI API Key so that LangChain can call OpenAI on your behalf. After making an OpenAI account, you can get an OpenAI API Key here by clicking on + Create new secret key.

The Python application will need to have access to this key, so we are going to make a .env file where the API key can safely be stored. The application we create will be able to import this key as an environment variable soon.

Create a .env file in your project’s root directory containing the single line OPENAI_API_KEY=<OPENAI_API_KEY>, making sure to replace <OPENAI_API_KEY> with your actual key.

Make sure that the OPENAI_API_KEY is safe and that you don't expose your .env file in a public location such as GitHub.
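To show how the application might read that key, here is a minimal sketch. It assumes python-dotenv's `load_dotenv` for reading the .env file; the function names `load_env_file` and `get_openai_api_key` are hypothetical, and the check for a leftover placeholder is an extra safeguard added here rather than something the tutorial requires.

```python
import os
from typing import Mapping


def load_env_file() -> None:
    """Copy the variables in .env into the process environment."""
    # Imported here so this file still loads before `pip install python-dotenv`.
    from dotenv import load_dotenv

    load_dotenv()


def get_openai_api_key(env: Mapping[str, str] = os.environ) -> str:
    """Return the key, failing early if it is missing or still the placeholder."""
    key = env.get("OPENAI_API_KEY", "")
    if not key or key == "<OPENAI_API_KEY>":
        raise RuntimeError("OPENAI_API_KEY is not set; add it to your .env file")
    return key
```

Calling `load_env_file()` once at startup and then `get_openai_api_key()` gives you the key without ever writing it into your source code.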

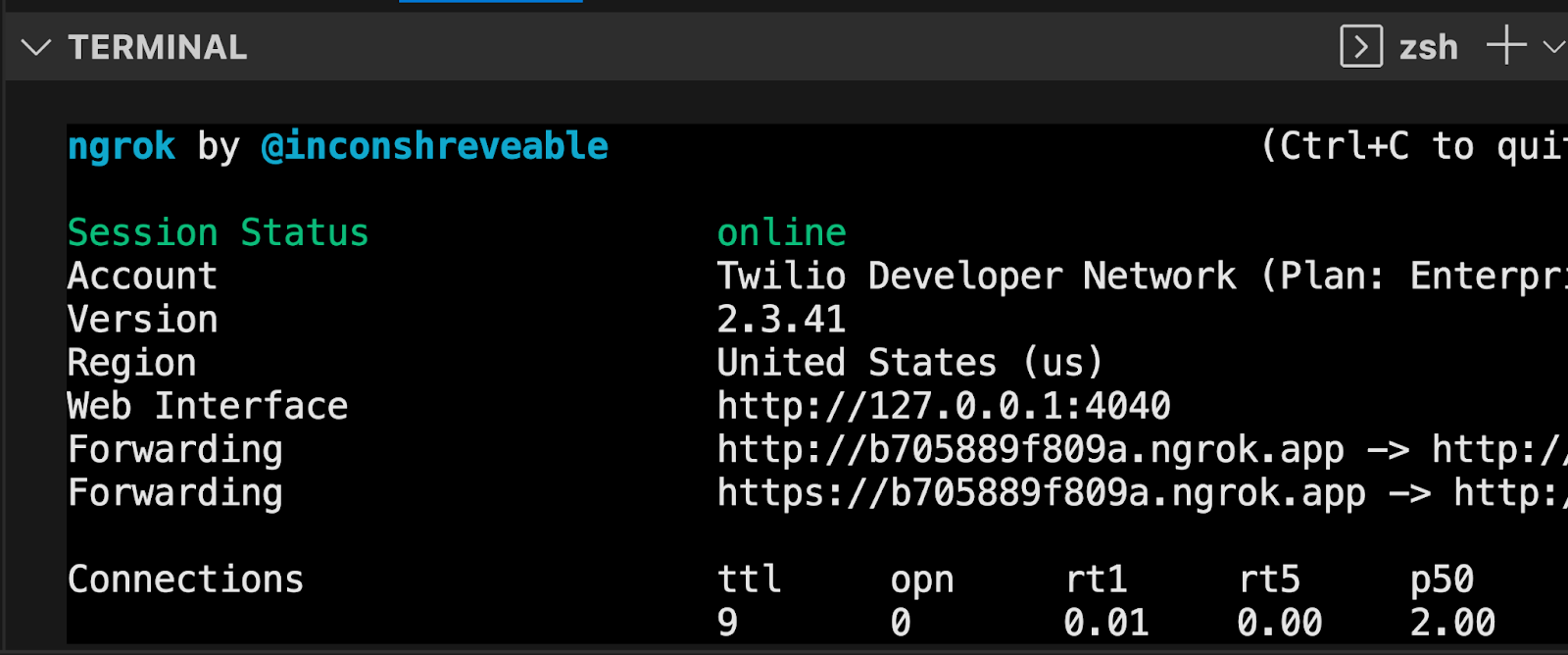

Now, your Flask app will need to be visible from the web, so Twilio can send requests to it. ngrok lets you do this: with ngrok installed, run ngrok http 5000 in a new terminal tab in the directory your code is in.

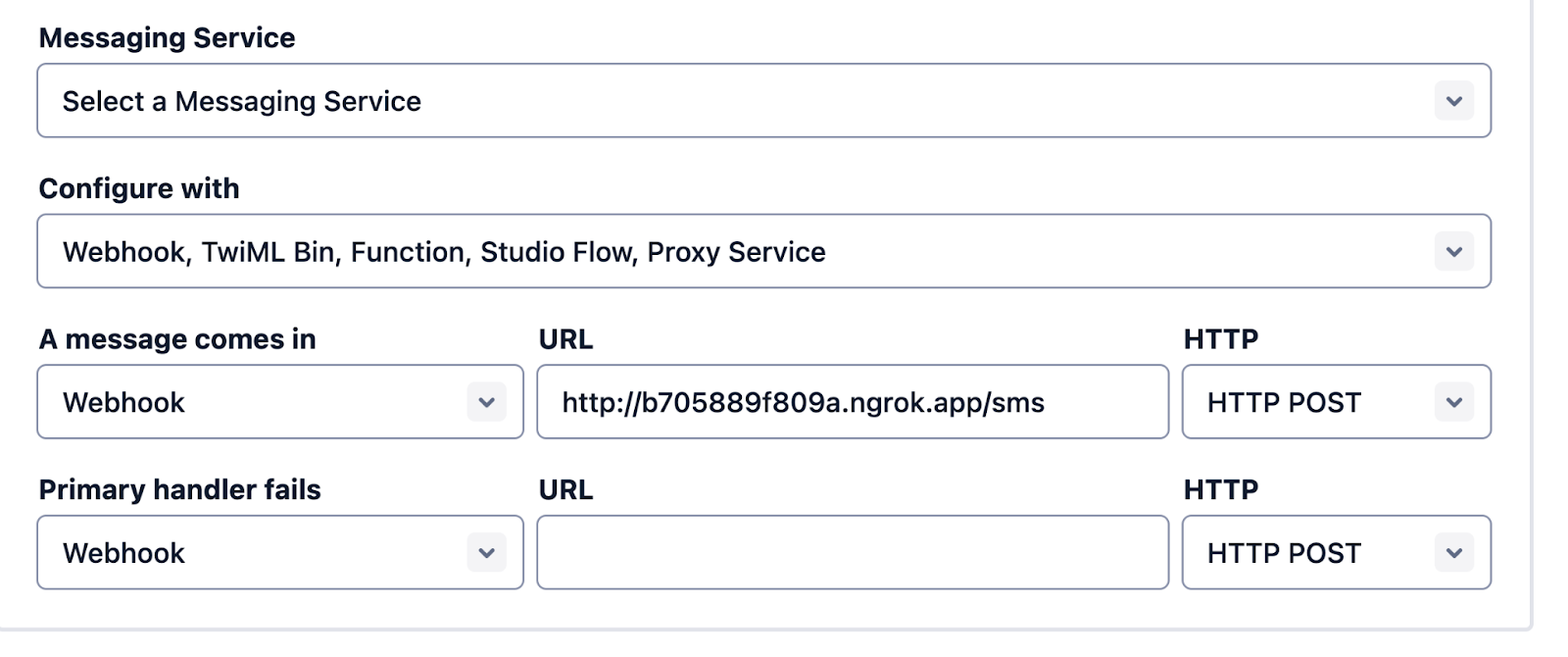

You should see the screen above. Grab that ngrok Forwarding URL to configure your Twilio number: select your Twilio number under Active Numbers in your Twilio console, scroll to the Messaging section, and then modify the phone number’s routing by pasting the ngrok URL in the textbox corresponding to when A Message Comes In as shown below:

Click Save and now your Twilio phone number is configured so that it maps to your web application server running locally on your computer. Let's build that application now.

Generate text through LangChain with OpenAI in Python via SMS

Inside your lc-sms directory, make a new file called app.py.

Start app.py, the ChatGPT-like SMS app, by importing the required libraries: Flask, LangChain's OpenAI wrapper and PromptTemplate, load_dotenv, and Twilio's TwiML messaging response.

Then, make the OpenAI LLM object (which could be another LLM--this is where LangChain can make reusability easier!), passing it the model name and API key from the .env file, and create a Flask application.

Make the start of the /sms webhook, which creates the TwiML response used to reply to inbound SMS.
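If you're curious what that TwiML response actually is, it's a small XML document that tells Twilio what to text back. The sketch below builds one by hand with Python's standard library purely to show the payload; in the app itself you would let the Twilio helper library's MessagingResponse generate it. The function name `twiml_message` is hypothetical.

```python
import xml.etree.ElementTree as ET


def twiml_message(text: str) -> str:
    """Build the XML Twilio expects when replying to an inbound SMS."""
    response = ET.Element("Response")
    message = ET.SubElement(response, "Message")
    message.text = text  # ElementTree escapes characters like & for us
    body = ET.tostring(response, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>' + body


print(twiml_message("Go Dubs"))
```

Whatever text ends up inside the Message element is what the person texting your Twilio number receives.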

Next, add the template code that shapes the queries and answers from the LLM. In this tutorial, it's Warriors basketball-themed. (Yes, I know the 2023 NBA Finals are the Heat versus the Nuggets. A girl can dream.)

Get the user's question from the inbound text message, format the prompt template with it, pass the resulting prompt to the LLM, print the answer, and then hand that answer to Twilio as the text message to send back to the user.
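Putting the steps above together, app.py might look roughly like the sketch below. Treat it as one possible arrangement under a few assumptions: the Context line in the template is a stand-in rather than the original, `create_app` and `main` are names chosen here, the model name is left as a parameter, and `from langchain.llms import OpenAI` is the 2023-era import path (newer LangChain releases moved this class and prefer calling `llm.invoke`).

```python
from typing import Callable

# Stand-in prompt for illustration; the Context line is where you put facts
# the model may not know. Replace it with your own.
TEMPLATE = """Answer the question based on the context below. If the question
cannot be answered using the information provided, answer with "I don't know".

Context: The Golden State Warriors won the NBA Finals in 2015, 2017, 2018, and 2022.

Question: {query}

Answer: """


def create_app(llm: Callable[[str], str]):
    """Build the Flask app; `llm` is any callable that maps a prompt to an answer."""
    # Imported here so this file still loads before the packages are installed.
    from flask import Flask, request
    from langchain.prompts import PromptTemplate
    from twilio.twiml.messaging_response import MessagingResponse

    prompt_template = PromptTemplate(input_variables=["query"], template=TEMPLATE)
    app = Flask(__name__)

    @app.route("/sms", methods=["POST"])
    def sms():
        # Twilio sends the text of the inbound SMS in the "Body" form field.
        question = request.form["Body"].strip()
        answer = llm(prompt_template.format(query=question))
        print(answer)
        resp = MessagingResponse()
        resp.message(answer)  # the reply Twilio will text back
        return str(resp)

    return app


def main(model_name: str) -> None:
    """Load the API key, create the LLM, and serve on port 5000 (the ngrok port)."""
    import os

    from dotenv import load_dotenv
    from langchain.llms import OpenAI  # 2023-era path; newer releases moved it

    load_dotenv()
    llm = OpenAI(model_name=model_name, openai_api_key=os.environ["OPENAI_API_KEY"])
    create_app(llm).run(port=5000)
```

Calling `main(...)` with the name of the OpenAI model you want at the bottom of app.py starts the server. Because `create_app` accepts any callable, you can also pass in a different LLM wrapper, or a fake function while testing, without touching the webhook code.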

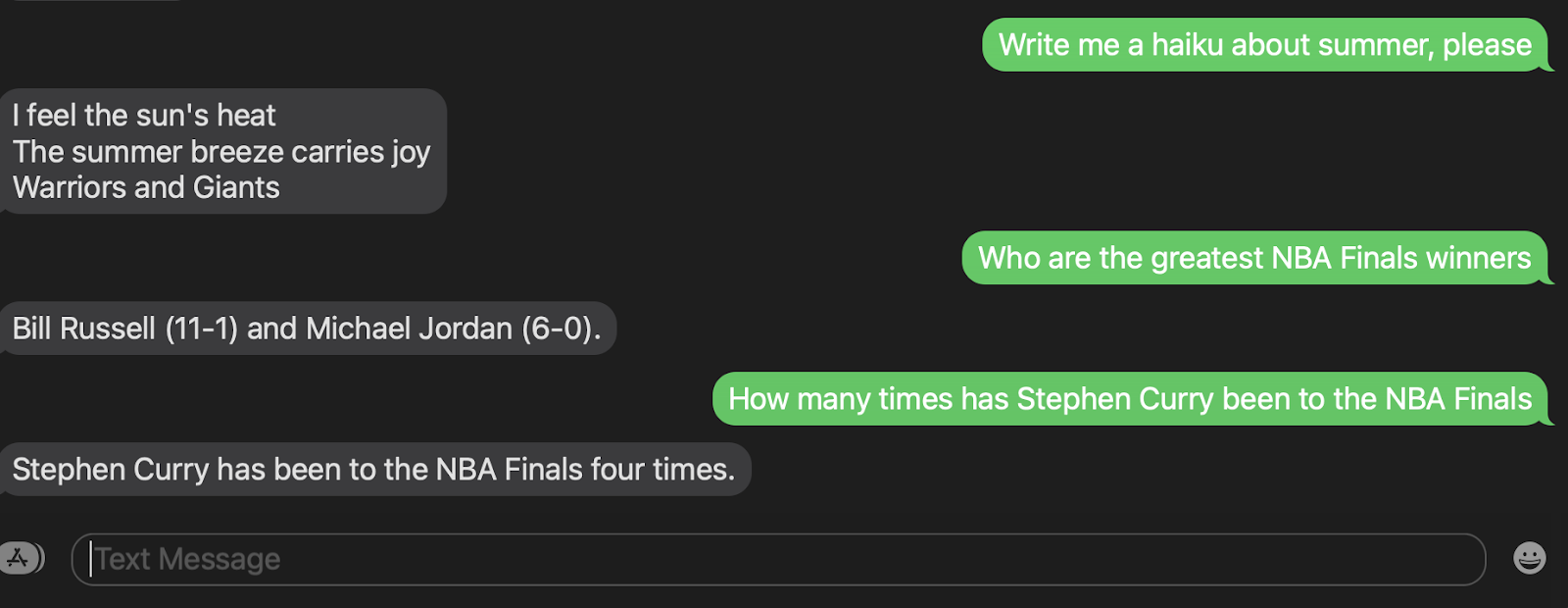

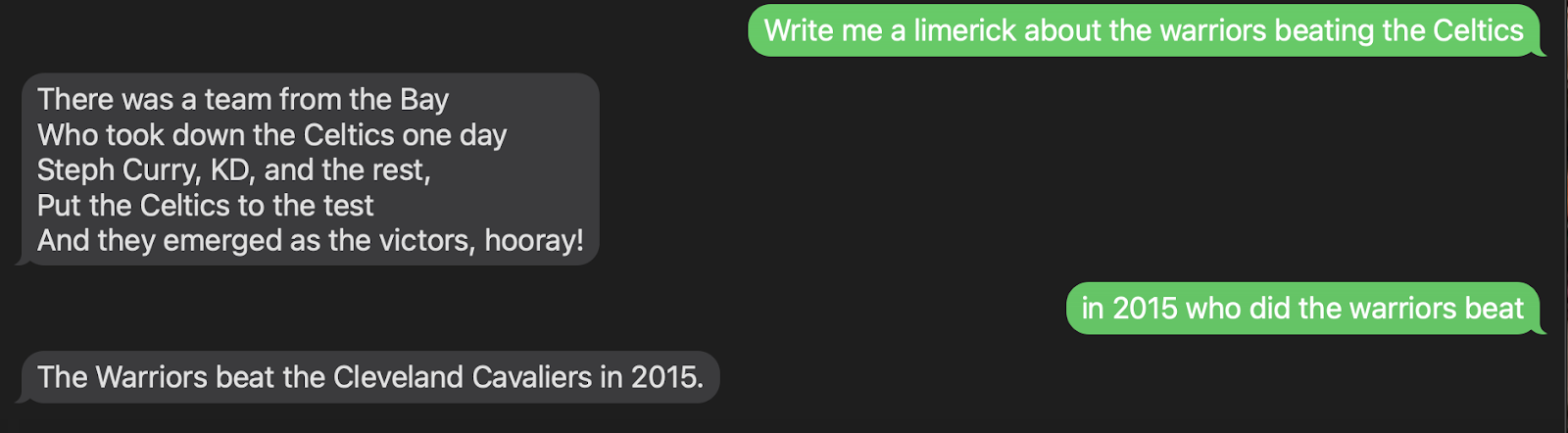

In a new terminal tab (while the other terminal tab is still running ngrok http 5000), run python app.py. You can now text the Twilio number you configured above. Ask it questions about the Warriors, since that is the context we gave the model.

The complete code can be found here on GitHub.

What's Next for Twilio and LangChain

LangChain can be used for chatbots (not just Warriors/basketball-themed), chaining different tasks, Generative Question-Answering (GQA), summarization, and so much more. Stay tuned to the Twilio blog for more LangChain and Twilio content, and let me know online what you're building with AI!

- Twitter: @lizziepika

- GitHub: elizabethsiegle

- Email: lsiegle@twilio.com
