Build a Mock Interview Agent Using Twilio WhatsApp API, LangGraph, and OpenAI

Preparing for interviews is often a lonely process. You typically search for common questions, rehearse alone, or ask a friend to help, but these methods rarely mimic the pressure and unpredictability of a real interview. Hiring platforms offer mock interviews, but they can be expensive and inflexible.

That’s where WhatsApp comes in. It’s familiar, widely used, and already installed on most people’s phones. In this tutorial, you'll learn how to build a mock interview agent using LangGraph, OpenAI’s GPT-4o, and Twilio’s WhatsApp API. The agent will ask questions based on your chosen role, review your responses, and offer customized feedback to help you understand how to answer interview questions and what interviewers are looking for.

Prerequisites

To follow along with this tutorial, ensure you have the following:

- Basic understanding of Python and command line usage

- A free Twilio account (handles WhatsApp messaging)

- An OpenAI account (with access to GPT-4o)

- Python 3.9 or later installed

You don't need advanced knowledge of AI. You will learn the necessary concepts to build this agent as you progress.

Setting up your development environment

Having covered the basics, you're ready to set things up. This includes creating a Python workspace, installing the required libraries, and configuring both Twilio and OpenAI.

Python Environment Setup

Start by creating a dedicated directory for your project anywhere on your computer and name it mock-interview. Then open it in your preferred IDE; this tutorial uses Visual Studio Code. In the IDE's terminal, create a virtual environment named interview_bot_env, for example by running python -m venv interview_bot_env.

Then activate the virtual environment. On macOS or Linux, run source interview_bot_env/bin/activate; on Windows, run interview_bot_env\Scripts\activate.

When you see (interview_bot_env) at the beginning of your command prompt, it indicates that your virtual environment is active. Creating a virtual environment will help you manage dependencies by keeping them isolated from the system-wide Python installation and other projects.
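If your prompt does not show the environment name, you can also check from Python itself. The snippet below is a small sketch rather than part of the tutorial's code: it relies on the fact that, inside a virtual environment, sys.prefix and sys.base_prefix point to different locations. The function name in_virtualenv is made up for this illustration.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter runs inside a virtual environment."""
    # Inside a venv, sys.prefix points at the environment, while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


print("Virtual environment active:", in_virtualenv())
```

Run it with the same interpreter you plan to use for the project; it should report True once the environment is activated.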

Installing Required Libraries

With your virtual environment active, you need to install the packages that your agent will need to run successfully. These will be:

- langchain-openai: Provides integration with OpenAI's language models

- langgraph: Enables building and managing generative AI agent workflows

- flask: Creates the web server to handle WhatsApp webhooks

- twilio: Official SDK for sending and receiving WhatsApp messages

- python-dotenv: Loads environment variables from a .env file

To install the above packages, run pip install langchain-openai langgraph flask twilio python-dotenv in the terminal.

After the installation is complete, you are ready to move on to the next phase of the setup.

Creating the Environment Configuration File

Your AI agent needs API keys to access the OpenAI and Twilio services. Never hard-code them in your source code, as this might expose them to bad actors. Instead, create a file named .env in the mock-interview directory. This is where all the API keys will reside. Paste the following placeholders into it:

You will later replace these placeholders with the actual API Keys and Twilio number.
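A missing or blank credential is easier to catch at startup than in the middle of a conversation. The sketch below shows one way to check for that once the environment variables are loaded. The variable names in REQUIRED_SETTINGS are assumptions made for this illustration, so replace them with the names you used in your own .env file.

```python
import os

# Hypothetical variable names; use whatever names you chose in your .env file.
REQUIRED_SETTINGS = (
    "OPENAI_API_KEY",
    "TWILIO_ACCOUNT_SID",
    "TWILIO_AUTH_TOKEN",
    "TWILIO_WHATSAPP_NUMBER",
)


def missing_settings(env=None):
    """Return the required settings that are absent or blank in env."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_SETTINGS if not env.get(name, "").strip()]


# Example with an explicit mapping instead of the real environment.
print(missing_settings({"OPENAI_API_KEY": "sk-test"}))
```

Calling missing_settings() with no argument checks the real environment, so it is only meaningful after the .env file has been loaded.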

Configuring Your Twilio Account

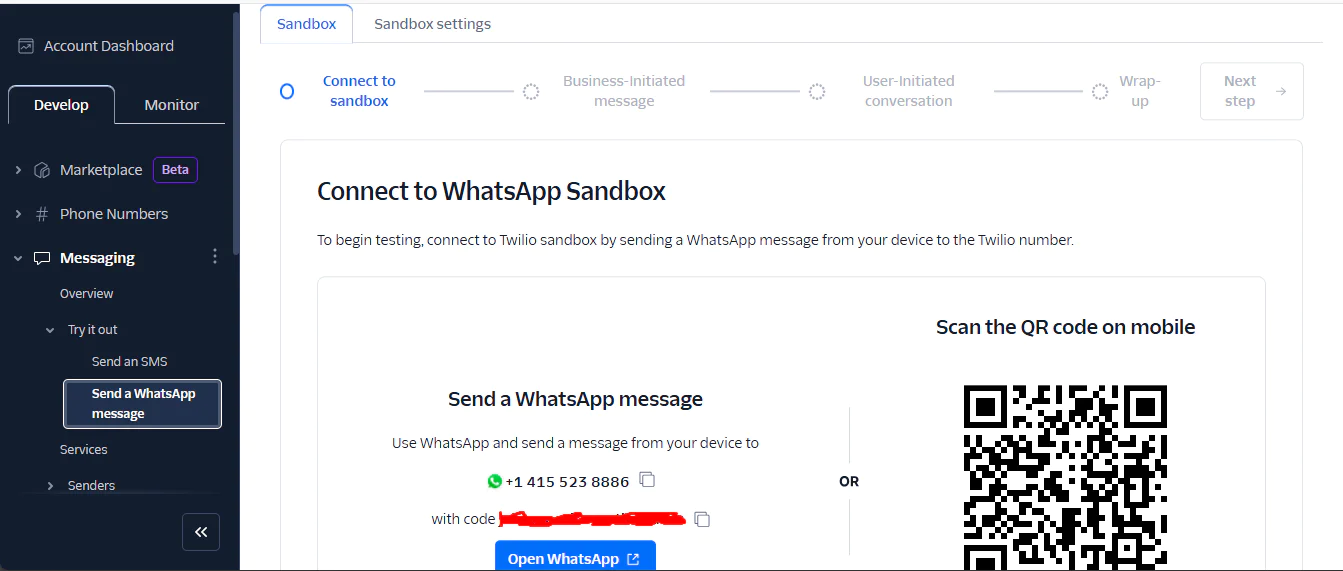

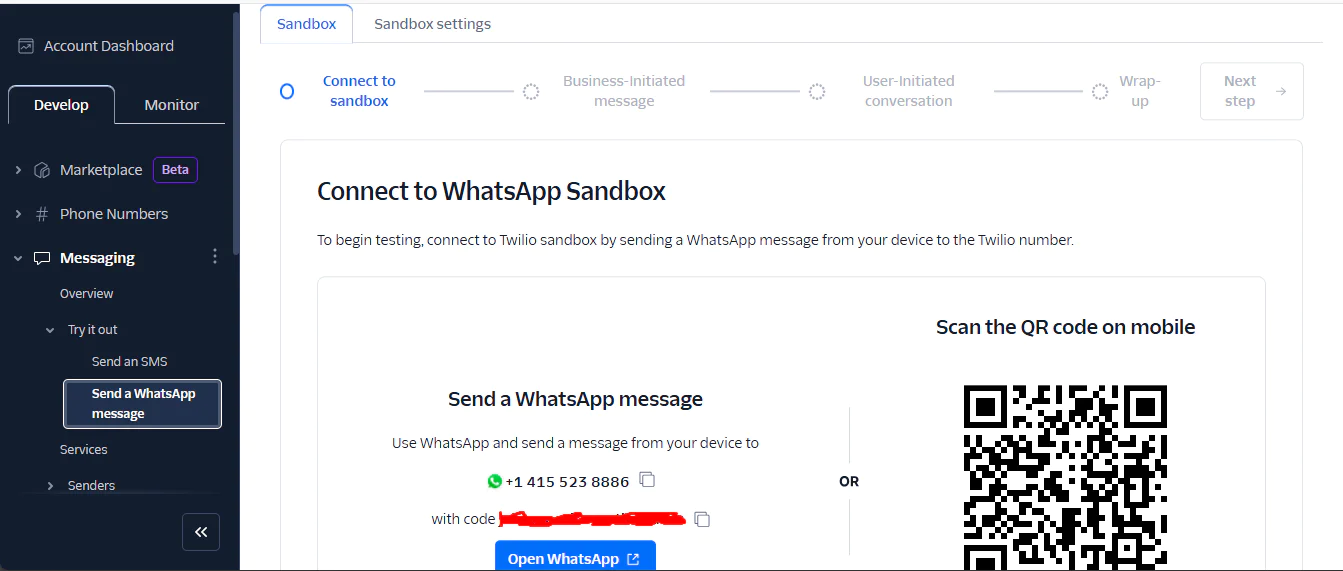

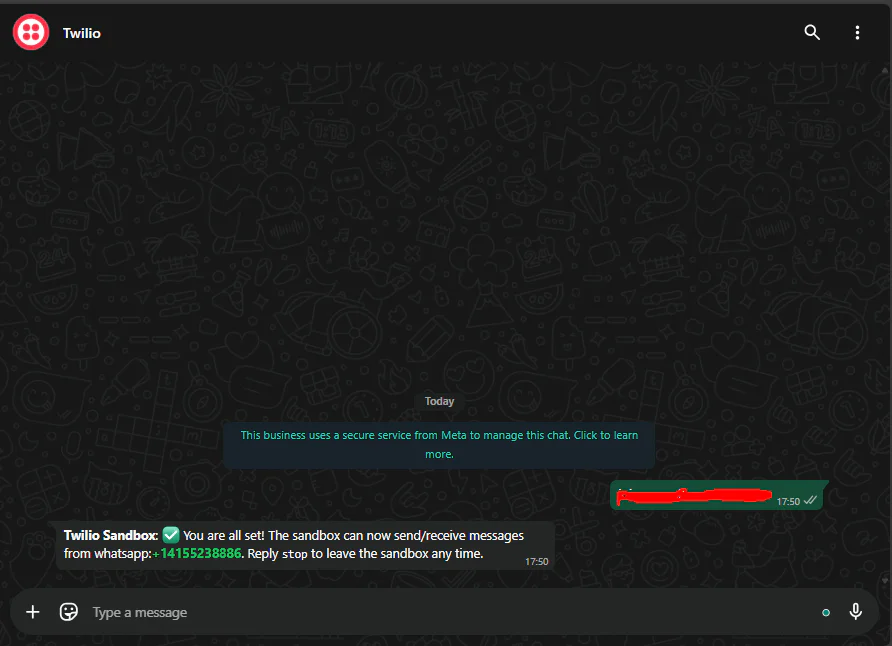

Log in to your Twilio account and navigate to Messaging → Try it out → Send a WhatsApp message. This will open the Twilio WhatsApp Sandbox as shown below:

The sandbox enables you to test the agent without undergoing WhatsApp's business approval process. Copy the WhatsApp number and add it to your .env file. Then, go to WhatsApp and send the displayed code to the Twilio number shown.

You should receive a confirmation message welcoming you to the sandbox, like the one above. This confirms that your number has been verified.

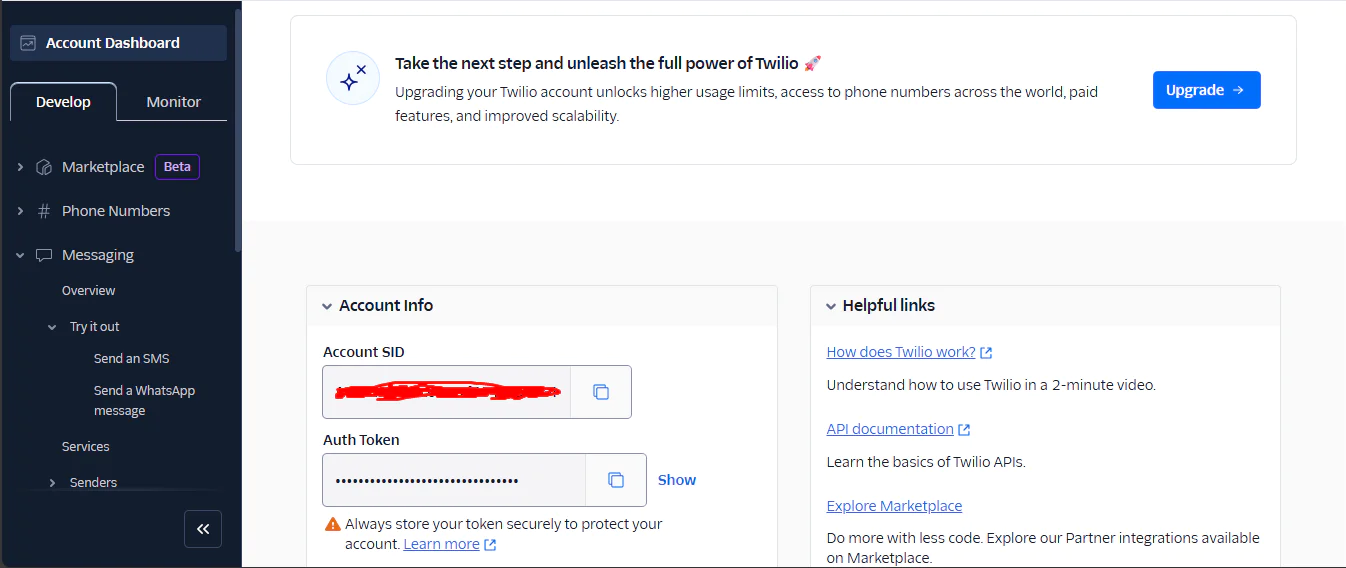

Now, go to your Twilio Console and find your Account SID and Auth Token on the main dashboard.

Update your .env file with these values to complete setting up Twilio for your project.

Configuring OpenAI API Access

This is the final step in your development environment setup. Visit the OpenAI API platform page and create an account or log in. Once logged in, navigate to the API Keys section in the left sidebar. Click Create new secret key and give it a descriptive name like WhatsApp Interview Bot. Copy the generated key immediately because OpenAI only shows it once for security reasons. Then, update your .env file with your OpenAI API key. If your account is new, OpenAI may give you test credits. If not, you will need to add credits to your account. $5 is sufficient, since the GPT-4o-mini model you will use for this project is cost-effective.

You now have everything you need to start building the application. Let's begin.

Building the Autonomous Agent

In this section, you will create the agent itself. It is the brain of the system: it manages the conversation, asks questions, and guides the user through the interview. Inside your mock-interview folder, create a new file named interview_graph.py. All the code in this section will go into this file.

Importing the Required Libraries and Initializing the Agent Configuration

Open the interview_graph.py file and start by importing the following classes and functions from the libraries you installed earlier.

These imports make the corresponding classes and functions available in your code. The create_react_agent function is the heart of the agent: it lets you quickly create an agent that can reason and act (ReAct) without coding one from scratch. ChatOpenAI creates an object that connects to the OpenAI API and gives you access to its models. The tool import is a decorator that marks your functions as actions the agent can perform during interview conversations. PromptTemplate helps you build dynamic, reusable prompts; a prompt is how you tell an AI model what you want it to do and how to do it. SystemMessage defines the behavior and tone of the agent. Finally, os and load_dotenv load and access the environment variables from the .env file.

After the imports, load the .env file to make its values available to your program, then initialize the large language model to enable communication with OpenAI’s API.

The most important parameter is the temperature, which controls how random the model's output is. The lower the temperature, the more deterministic the output; the higher the temperature, the more varied it becomes. For the mock interview agent, aim for a value that balances creativity and consistency, so the agent neither sounds robotic nor loses professional quality. You don't have to get it right the first time; adjust the value as you test and iterate on your agent's responses.
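To build intuition for what temperature does, here is a small, self-contained illustration. It is not how you call the OpenAI API; it only shows the usual softmax-with-temperature formula applied to made-up scores for three candidate tokens. The function name and the scores are invented for this example.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by a sampling temperature."""
    if temperature <= 0:
        raise ValueError("this sketch requires a positive temperature")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
cold = softmax_with_temperature(logits, 0.2)  # low temperature
warm = softmax_with_temperature(logits, 1.5)  # high temperature

print("low temperature: ", [round(p, 3) for p in cold])
print("high temperature:", [round(p, 3) for p in warm])
```

At the low temperature almost all probability lands on the top-scoring token, which is why outputs look deterministic. At the high temperature the probabilities flatten out, so less likely tokens get picked more often.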

Defining the Core Interview Prompt Templates

After setting up the LLM, the next step is creating the prompt templates. These will guide the agent on how to formulate its messages and what details to include. You will need a template for each part of the conversation that the agent will handle. This will include a welcome prompt, a question prompt, a feedback prompt, a help prompt, and a final review prompt. For each prompt template, you will need to specify the input variables, the pieces of information the agent needs for that message, and the template, which is the structure the agent will follow when responding.

Start by defining the welcome prompt.

This template will be used to greet the user at the beginning of each WhatsApp session. It will inform the users of the steps they will encounter throughout the interview. In this case, there are no input_variables because it’s a static greeting for new users.

After greeting the user, the agent asks for the user's job role, experience level, and number of questions. This happens early so the agent can personalize the interview questions to the user's experience and niche. You will need to validate each of these responses using the LLM, as an invalid answer might lead to a failed session. For example, if a user provides an invalid job role, the agent will struggle to come up with the right questions. Go on and create the validation prompts.

The role_validation_prompt takes the user's job role input and validates it. If it is a valid job, the prompt cleans it up for consistency. If it is not valid, the prompt rejects it with a clear reason. The same process applies to the experience level and the number of questions.
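Validation only helps if your code can act on the model's verdict. The sketch below assumes a reply format that is not taken from the tutorial: the model answers either "VALID: cleaned value" or "INVALID: reason". If your prompts ask for a different format, adjust the parsing to match. The names ValidationResult and parse_validation_reply are hypothetical.

```python
from typing import NamedTuple


class ValidationResult(NamedTuple):
    is_valid: bool
    detail: str  # cleaned value when valid, rejection reason otherwise


def parse_validation_reply(reply: str) -> ValidationResult:
    """Parse a reply in the assumed 'VALID: ...' / 'INVALID: ...' format."""
    verdict, _, detail = reply.strip().partition(":")
    verdict = verdict.strip().upper()
    if verdict == "VALID" and detail.strip():
        return ValidationResult(True, detail.strip())
    if verdict == "INVALID":
        return ValidationResult(False, detail.strip() or "No reason given.")
    # Anything else counts as a failed validation, so the session
    # never continues with an unchecked value.
    return ValidationResult(False, "Unrecognized validation reply.")


print(parse_validation_reply("VALID: Python Developer"))
print(parse_validation_reply("INVALID: 'asdf' is not a job role"))
```

Treating unrecognized replies as invalid is a deliberate choice here: it is safer to ask the user again than to build an interview around a value nobody checked.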

After validating the interview setup questions, the next step is to define a prompt that the agent will use to generate the interview questions.

This prompt will generate personalized questions based on the user's job role and experience level, which are passed as input variables. The previous_context variable contains a summary of what has already been covered in the interview to avoid repeating topics.
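The idea of a template with input variables can be shown with plain Python string formatting, which uses the same {name} placeholder style. The wording of QUESTION_TEMPLATE below is a stand-in written for this illustration, not the tutorial's actual prompt text. The helper input_variables is also hypothetical.

```python
from string import Formatter

# Hypothetical wording; the tutorial's actual template text may differ.
QUESTION_TEMPLATE = (
    "You are interviewing a {experience_level} candidate for a {role} role.\n"
    "Topics already covered: {previous_context}\n"
    "Ask one new interview question that does not repeat those topics."
)


def input_variables(template: str) -> list:
    """List the placeholder names a template expects, in order of first use."""
    names = []
    for _, field, _, _ in Formatter().parse(template):
        if field and field not in names:
            names.append(field)
    return names


prompt = QUESTION_TEMPLATE.format(
    role="Python Developer",
    experience_level="junior",
    previous_context="list comprehensions, decorators",
)

print(input_variables(QUESTION_TEMPLATE))
print(prompt)
```

Listing the placeholders this way is a quick check that the variables you declare for a template match the ones its text actually uses.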

After the user answers the question, the agent will need to provide feedback on how they performed. For this, you will need a feedback prompt.

This prompt will provide the LLM with the user’s role, experience_level, question, and the response given. Then the LLM will critique the response, pointing out what the user did well and how to improve. It also coaches the user on what interviewers are looking for in a good answer when asking the question at hand. But what happens when the user asks for help rather than answering? This is where you need the help prompt template.

This PromptTemplate uses role, experience_level, and question to generate coaching tips. When the user says they don’t know the answer to a question or explicitly asks for help, the agent will use this template to generate tips on how the question should be answered and give the user another try.

Once the agent has asked the specified number of questions, it gives the user final feedback on how they fared during the full interview session, along with tips on how to proceed. For this, you will need the final_review_prompt.

This PromptTemplate uses role, experience_level, and interview_summary (a summary of your answers and feedback) to wrap up the interview. If you want more questions, you can continue the interview by telling the agent that you want to practice more. This works because the agent itself keeps track of the number of questions the user asked for, so it can update that number at any stage of the interview.

Up to now, you have defined the PromptTemplates, but you haven’t seen how the agent will actually use them. In the next step, you will create tools that call these templates and send them to the language model when the agent needs to generate a message during the conversation.

Creating Tool Functions For Agent Tasks

Tools are helpers that the agent can pick up when it needs to do something specific, such as asking a question or giving feedback. Each tool wraps a function and describes what the function does, what it needs as input, and what it returns. When you add tools to your agent, it can decide which tool to use based on the conversation, which makes it autonomous. Proceed to define the tools you will need. Each PromptTemplate will have its own tool.

When the agent runs, it will use these tools to call your prompt templates, fill in the details, and send them to the language model to generate a clear, focused message for the user. Each print statement in the tools ensures you know when a tool is called in the debug logs. This will help in troubleshooting in case the agent does not run as expected. Make sure the name and description you give your tools describe what they achieve. This will help the agent choose the right tool.
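To see why names and descriptions matter, consider the toy registry below. It is a plain-Python stand-in, not LangChain's tool decorator: it only records each function's name and docstring, which is the kind of information an agent relies on when choosing among tools. The names simple_tool, TOOL_REGISTRY, and give_help are invented for this sketch.

```python
TOOL_REGISTRY = {}


def simple_tool(func):
    """Register func under its name, using its docstring as the description."""
    if not func.__doc__:
        raise ValueError(f"{func.__name__} needs a docstring describing it")
    TOOL_REGISTRY[func.__name__] = {
        "description": func.__doc__.strip(),
        "run": func,
    }
    return func


@simple_tool
def give_help(role: str, question: str) -> str:
    """Offer coaching tips when the candidate asks for help with a question."""
    print("[tool] give_help called")  # debug log, like the print statements described above
    return f"Coaching tips for a {role} answering: {question}"


for name, entry in TOOL_REGISTRY.items():
    print(f"{name}: {entry['description']}")
```

A vague name or an empty description would leave an agent with nothing to base its choice on, which is why the registry refuses functions without a docstring.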

Setting the Agent’s Instructions

After creating the tools, the next step is to define how your agent will behave using the SystemMessage class. This is a prompt that gives the agent an identity. It tells the large language model powering the agent what role to assume and what mission to accomplish.

The system_prompt tells the LLM to assume the identity of a mock interview coach for the role the user provides. It sets the goal of helping users excel in job interviews through realistic practice and expert feedback, which keeps the agent focused on delivering a practical experience. It also tells the LLM that it may use its tools in whatever way it sees fit and that it is autonomous: it makes decisions on its own while meeting professional interview standards. The final part lists constraints that are critical to the mock interview agent. The agent must not reveal how it works (no system internals), must protect the user's privacy by managing session data carefully, and must politely decline off-topic requests such as "What's the weather?" This keeps the conversation focused on interview practice.

Assembling Your Agent

You now have everything you need to create your agent using the create_react_agent function. Because this function expects the tools as a list, start by collecting the tools you defined earlier into one.

After this, create the ReAct agent and pass the LLM it will use, the tools at its disposal, and finally the system prompt that will guide it.

Now that you have your agent ready, you need a way to manage users and their conversations.

Managing User Conversations

Go ahead and create a function named run_interview that connects the users to your agent.

The run_interview function passes the user_id and user_message to the LangGraph agent while maintaining conversation state in a session_store dictionary. It retrieves or initializes the user's session based on the user_id and, for new users, adds a system message that triggers a welcome message. It then appends the user_message to the conversation history and feeds the history to the agent's graph.stream method, which uses the ReAct (Reason, then Act) pattern to select tools and generate a response. Finally, it extracts the agent's reply, saves the updated session under the user_id, and returns the reply, falling back to an error message if something goes wrong.
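The session handling described above can be sketched without the LangGraph agent by passing in any callable that turns a message history into a reply. Everything here is a simplified stand-in: run_interview_sketch, echo_agent, the message dictionaries, and the WELCOME_TRIGGER text are assumptions made for illustration, not the tutorial's actual implementation.

```python
WELCOME_TRIGGER = "New user: greet them and explain the interview steps."  # hypothetical text


def run_interview_sketch(user_id, user_message, session_store, agent_reply):
    """Route one message through a per-user history and return the reply."""
    # Retrieve the user's session, or start one seeded with a system message.
    history = session_store.setdefault(
        user_id, [{"role": "system", "content": WELCOME_TRIGGER}]
    )
    history.append({"role": "user", "content": user_message})
    try:
        reply = agent_reply(history)
    except Exception:
        reply = ""
    if not reply:
        # Friendly fallback when the agent fails or returns nothing.
        reply = "Sorry, something went wrong. Please try again."
    history.append({"role": "assistant", "content": reply})
    return reply


def echo_agent(history):
    """Stand-in agent: reports how many user messages it has seen."""
    turns = sum(1 for message in history if message["role"] == "user")
    return f"Received message {turns}"


store = {}
print(run_interview_sketch("alice", "Hi", store, echo_agent))
print(run_interview_sketch("alice", "Python Developer", store, echo_agent))
print(run_interview_sketch("bob", "Hello", store, echo_agent))
```

Because each history lives under its own key, the third call starts a fresh conversation for the second user instead of continuing the first one.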

Your system is now ready to interact with users and create realistic mock interviews. But before you connect it to WhatsApp, it's best to test the backend independently.

Running the agent locally for testing

Create a main block that creates a command-line interface where you can type messages, interact with the LangGraph agent, and receive responses.

The above code simulates how a real user using WhatsApp would interact with the agent. It initializes an empty session_store dictionary to track conversation history and sets a user_id as test_user. It then creates a while True loop that prompts for input, processes it via run_interview, and prints the response.
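If you want the loop itself to be testable, one option is to pass the input and output functions in as parameters. The sketch below does that. The "exit" command, the chat_loop name, and the respond callable (standing in for run_interview) are assumptions of this example rather than details taken from the tutorial.

```python
def chat_loop(respond, read=input, write=print, user_id="test_user"):
    """Read messages until the user types 'exit' (an assumed quit command)."""
    session_store = {}
    while True:
        try:
            message = read("You: ").strip()
        except (EOFError, KeyboardInterrupt):
            break
        if message.lower() == "exit":
            break
        if not message:
            continue  # ignore empty lines instead of sending them to the agent
        write("Agent:", respond(user_id, message, session_store))


# Scripted run: replace the keyboard with a fixed list of inputs.
scripted = iter(["hello", "", "exit"])
chat_loop(
    lambda uid, msg, store: f"You said: {msg}",
    read=lambda prompt: next(scripted),
)
```

With the defaults left in place, the same function reads from the keyboard and prints to the terminal.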

Test the agent by running the file from the terminal, for example with python interview_graph.py.

You should see an input prompt to start interacting with the agent, as shown below.

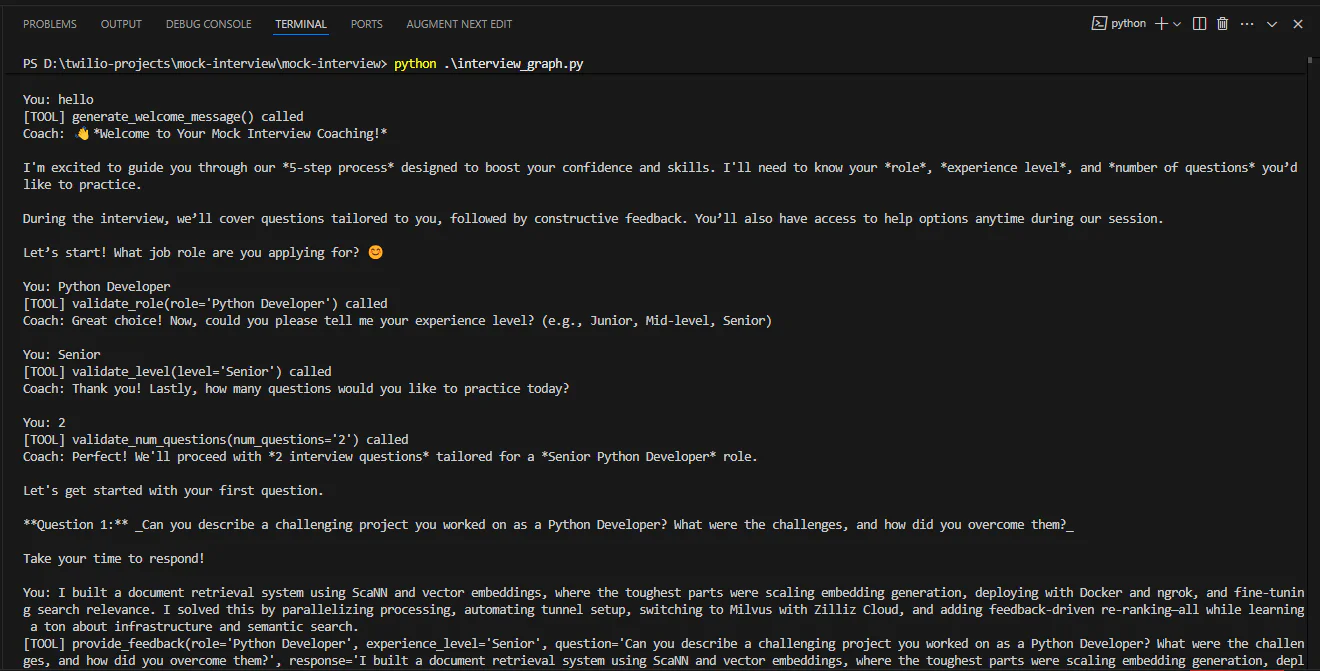

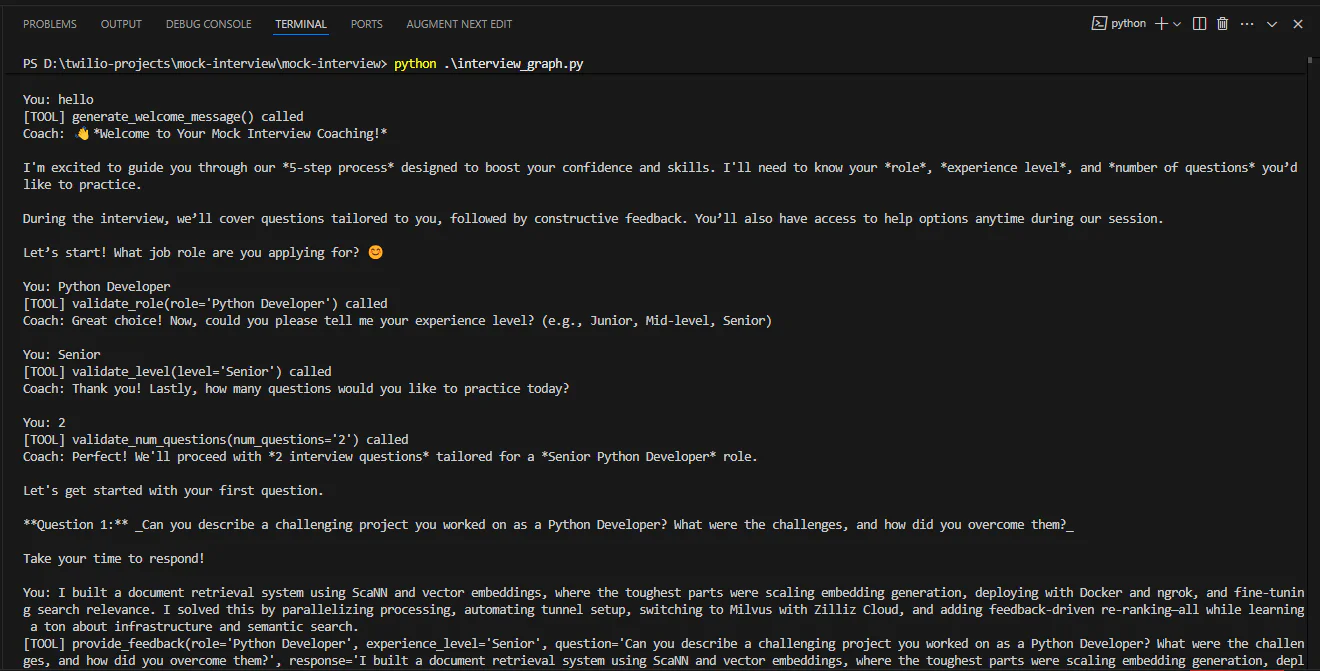

Send a message to start the conversation. For a Python Developer role, here are the results.

The screenshot does not show the full interaction, but as you can see, the agent is doing an incredible job. When you are satisfied with the results, the next step is to connect the agent to WhatsApp.

Setting Up the WhatsApp Flask Server for Agent Integration

To connect the agent to WhatsApp, you will need a Flask server. Start by creating a new file named app.py inside your mock-interview folder. All the code under this section will go into this file.

Importing the Necessary Libraries

Start by importing the following Twilio and Flask classes and objects. Also, import the run_interview function you created.

Flask and request create a web server and handle incoming HTTP requests. MessagingResponse from twilio.twiml.messaging_response formats replies for WhatsApp. Finally, run_interview processes messages using your agent.

Initializing the Flask App

After the imports, initialize a Flask application.

This creates a basic Flask application. The __name__ variable tells Flask where to find the app's files. Also, initialize a session store that holds user conversation states, mapping each user's WhatsApp number to their session data.

This will ensure that when multiple people message the Twilio WhatsApp number, their interactions don’t get mixed up.

Creating the Webhook Endpoint

After this, create a webhook that Twilio calls when a WhatsApp message arrives.

The code above serves as the endpoint for Twilio WhatsApp messages, extracting the message text and sender’s WhatsApp number from the request. It then processes them with the run_interview function from interview_graph.py and returns a TwiML-formatted reply via MessagingResponse. The error handling ensures there is a friendly fallback response if the input is invalid or an exception occurs.
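To make the request and response formats concrete, here is a standard-library sketch of the two transformations the webhook performs. Twilio posts incoming messages as form-encoded fields, including From and Body, and expects a TwiML document in return. The helper names parse_incoming and twiml_reply are invented for this illustration. In the actual app, Flask's request object and Twilio's MessagingResponse handle these steps for you.

```python
from urllib.parse import parse_qs
import xml.etree.ElementTree as ET


def parse_incoming(form_body: str):
    """Extract (sender, text) from a form-encoded webhook body."""
    fields = parse_qs(form_body)
    sender = fields.get("From", [""])[0]
    text = fields.get("Body", [""])[0].strip()
    return sender, text


def twiml_reply(text: str) -> str:
    """Build a minimal TwiML document containing one reply message."""
    response = ET.Element("Response")
    ET.SubElement(response, "Message").text = text
    return ET.tostring(response, encoding="unicode")


sender, text = parse_incoming("From=whatsapp%3A%2B15551234567&Body=Hello+there")
print(sender, "->", text)
print(twiml_reply("Welcome to your mock interview!"))
```

The sender value keeps its whatsapp: prefix, which makes it a convenient, unique key for the session store.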

Running the Server

Create a main block that will run the server when the app.py file is executed.

The main block runs the Flask server locally on all network interfaces (host='0.0.0.0') at port 5000 with app.run. This enables the webhook to receive WhatsApp messages for the mock interview agent.

Exposing the Flask Server to the Internet

Now your agent and server are ready. But you need to expose the local server to the internet so the Twilio WhatsApp API can send messages to it. To achieve this, proceed to the Ngrok website and sign up for free. Then, download the installer that matches your computer and follow the given instructions to set up Ngrok.

After you finish setting up Ngrok, you are ready to test your agent via WhatsApp.

Running and Testing the Agent Via WhatsApp

To test your application, go to the terminal and start the server, for example with python app.py.

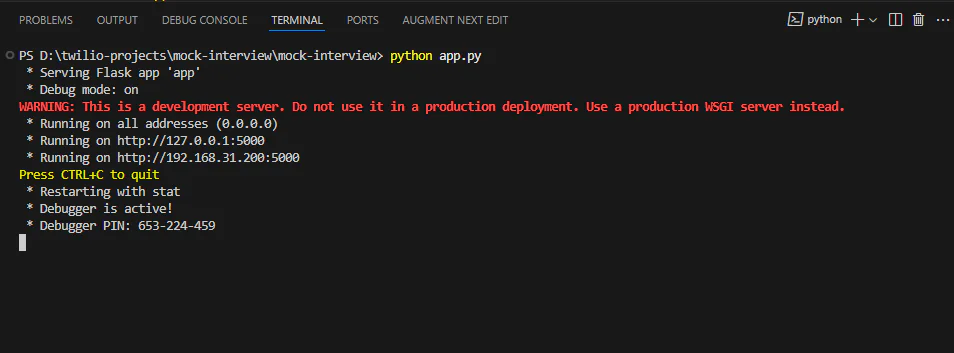

This will start your development server as shown below.

This shows the server is active and listening for requests on port 5000 locally. Now, run the Ngrok application you downloaded. Then, in the Ngrok terminal, create a secure tunnel to your Flask server, for example with ngrok http 5000.

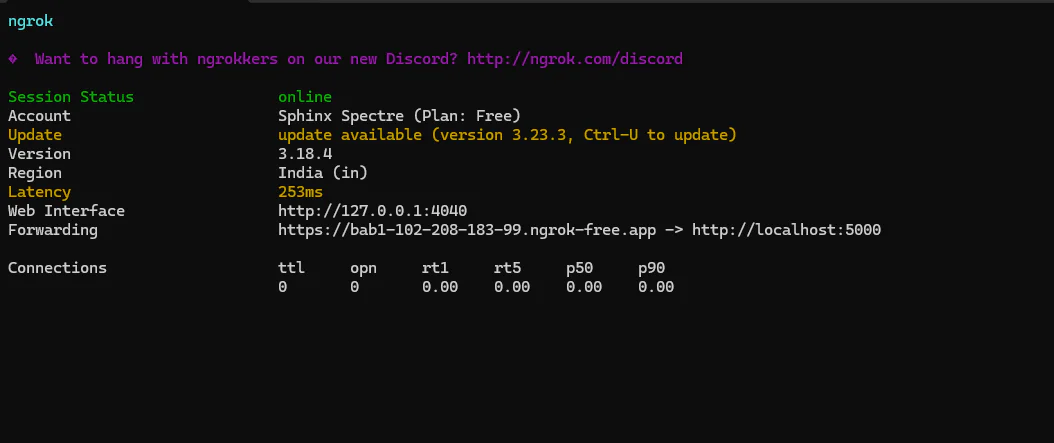

Ngrok should display a public URL in the terminal, indicating that the tunnel is active and forwarding requests to http://localhost:5000, as shown below.

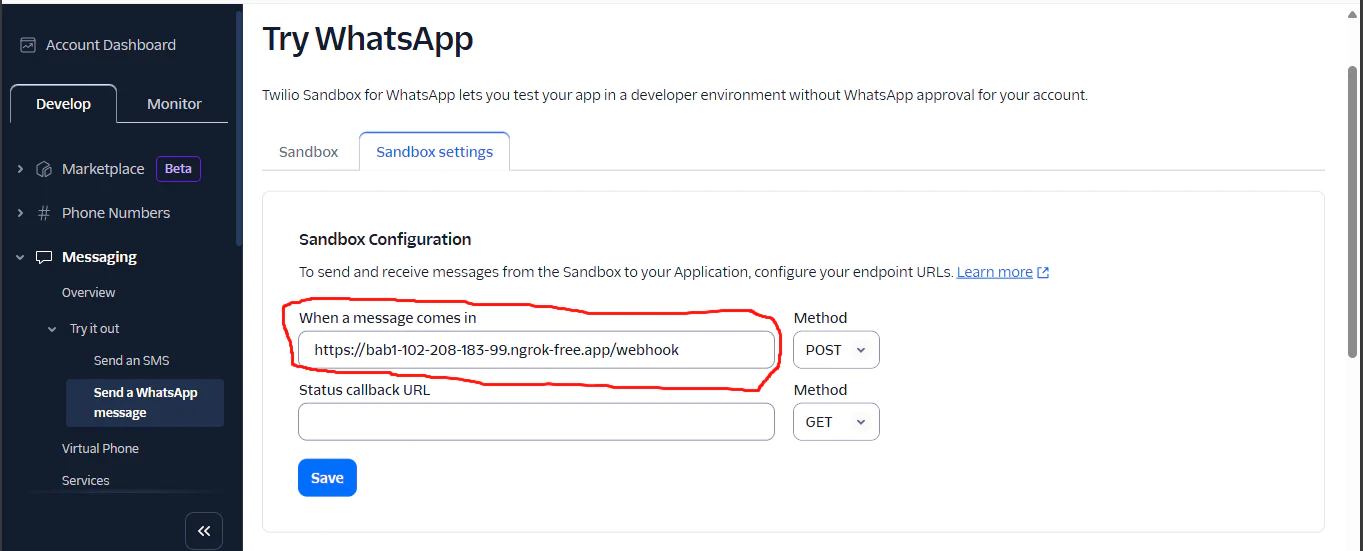

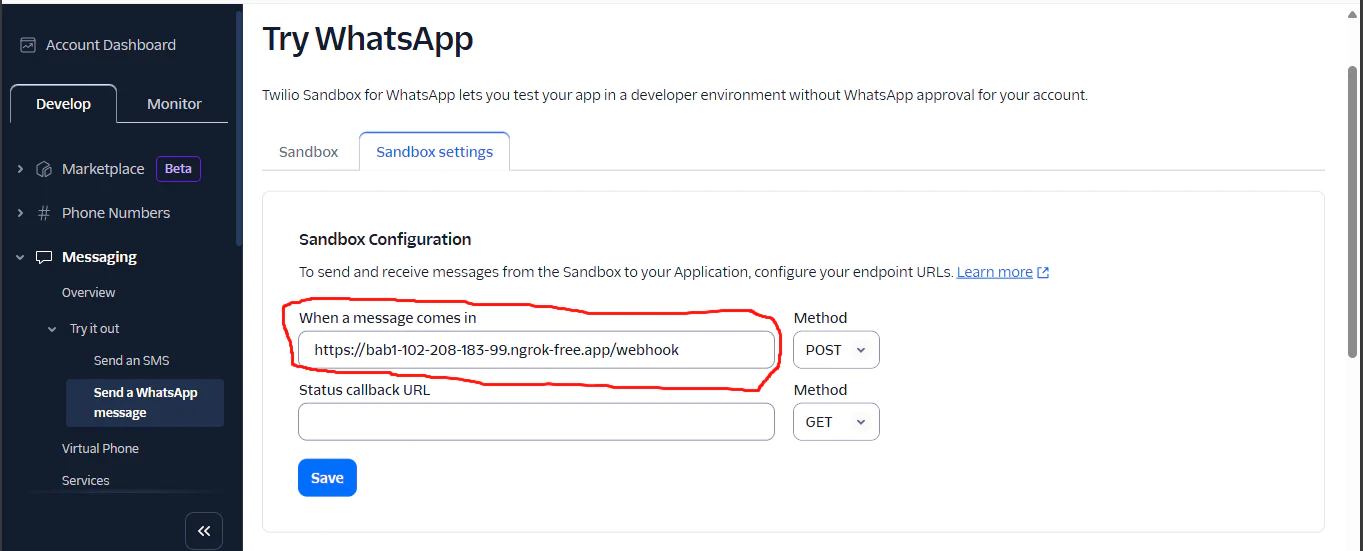

Now, copy the public URL shown. In this case, it is https://bab1-102-208-183-99.ngrok-free.app. Go to the Twilio WhatsApp Sandbox page and click Sandbox settings. Then, under Sandbox Configuration → When a message comes in, paste Ngrok's public URL and append your webhook path so that it looks like this: https://bab1-102-208-183-99.ngrok-free.app/webhook. Then save.

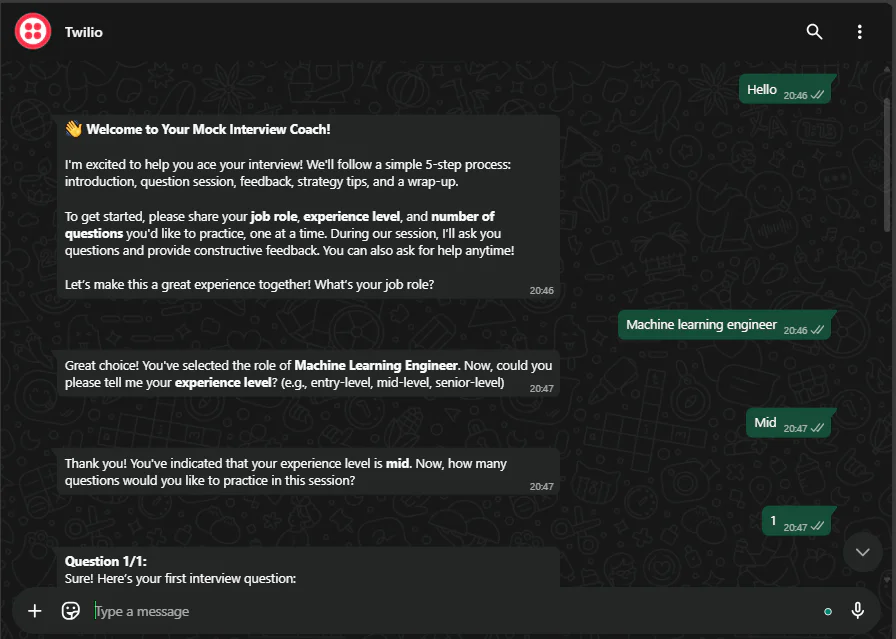

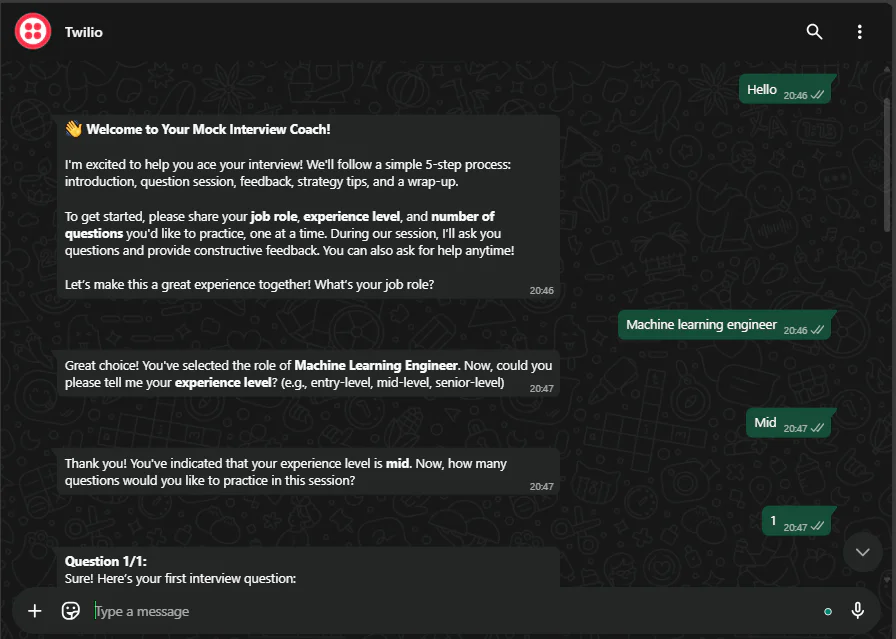

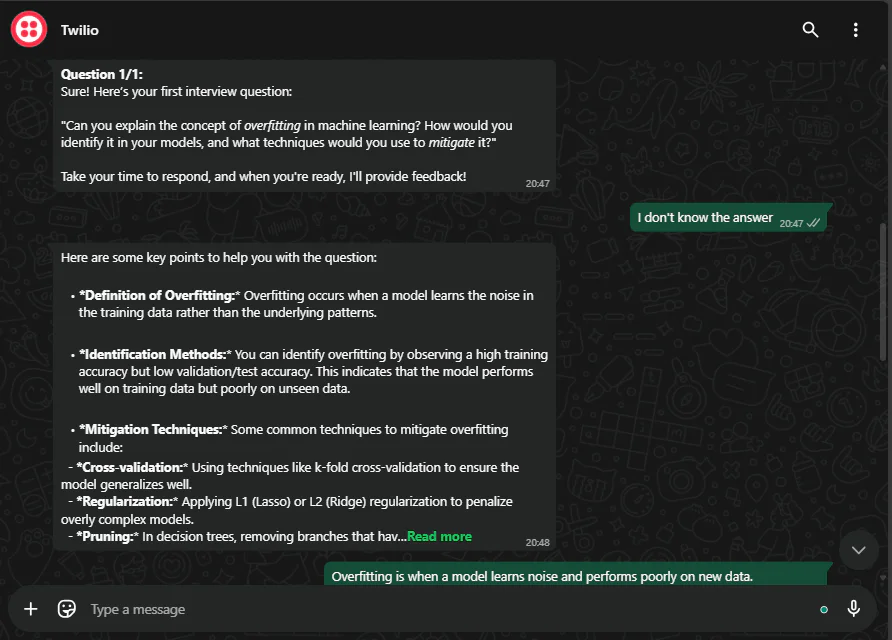

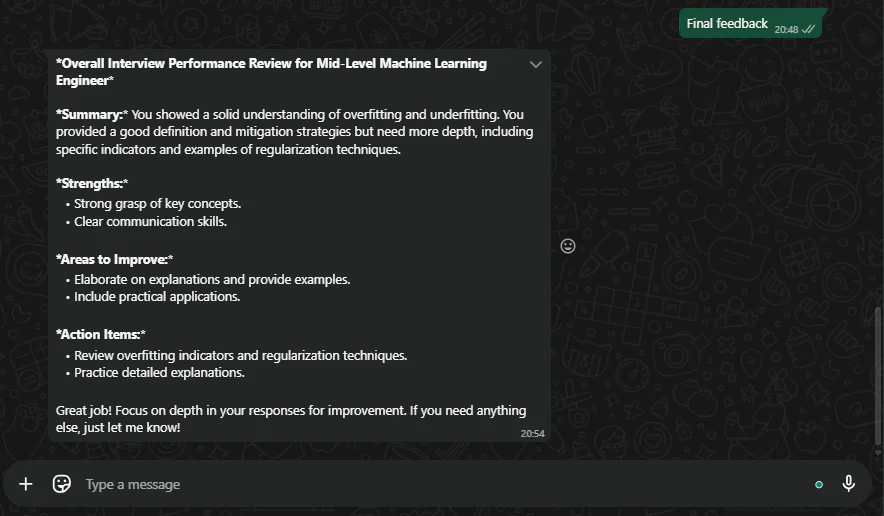

This tells Twilio where to send incoming WhatsApp messages so that they can be forwarded to the Flask server by Ngrok. You are now ready to chat with your agent on WhatsApp. Proceed to WhatsApp and try interviewing for a role of your choice. Here are the sample results for interviewing for the machine learning engineering role.

From the chats, you can see the mock interview agent handles the interview flawlessly. Try it with more questions to see how it holds up.

Conclusion

In this tutorial, you created an autonomous mock interview agent. You now have the foundational skills to create agents using LangGraph and to serve them over WhatsApp using Twilio's WhatsApp API. But this is not the end of the road; in the world of AI, there are many possibilities for what you can create. Use what you have learned to challenge yourself and create more AI agents.

Happy Coding!

Denis works as a machine learning engineer who enjoys writing guides to help other developers. He has a bachelor's in computer science. He loves hiking and exploring the world.
