Configuration Deployments at Twilio


This post is part of Twilio’s archive and may contain outdated information. We’re always building something new, so be sure to check out our latest posts for the most up-to-date insights.

Configuration Deployments are a way to inject configuration at deployment time to be consumed by a service at runtime. Runtime configuration is information that services consume once deployed. Specifically, runtime configuration data varies with the target environment and deployment context of a service. By decoupling runtime configuration from built artifacts we can gain a number of benefits in predictability, resilience, velocity, and engineer experience.

12 Factor Apps and Configuration

Here at Twilio, we embrace the best practices described in the 12 Factor App. These principles help us build resilient, scalable, and maintainable software applications that our customers can rely upon to be available whenever they are needed. In this post, we cover the system we built for creating, injecting, and auditing application configuration.

Why we needed to build something new

It helps to review the previous state of the world to understand why we needed to build something new. We use Chef for configuration management, and services typically put much of their application configuration into Chef as Chef attributes. Our Chef code is checked into a monolithic Git repository that contains the cookbooks for all of Twilio’s services. We saw several problems with this approach: it is error prone, Chef attributes are not type safe, each change to our Chef repository required a new artifact build, and a bad Chef artifact build could break deployments of all services.

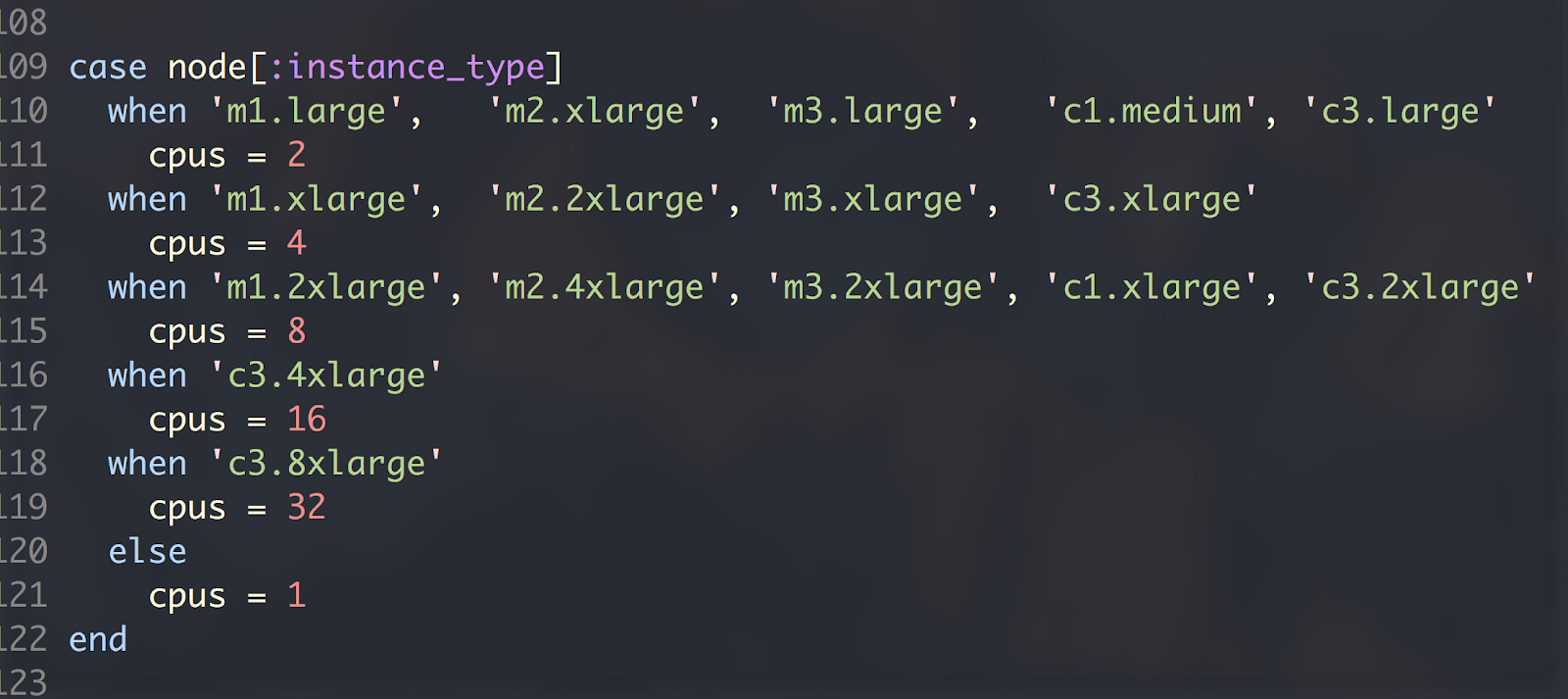

Sometimes services need configuration from places other than Chef. Often a service would have a configuration file checked into its repository, and that file would be mixed with Chef attributes rendered at runtime to perform some logic based on branch conditions. For example, see the snippet below that determines the java_max_heap_setting JVM argument based on the environment to which we are deploying:

Because we often decide how our software should behave based on multiple factors, these branching conditionals are used frequently. We found this approach error prone, since it is easy to type an incorrect value or use the wrong type, and difficult to maintain as we scale out our services and the number of variables increases. This type of logic also makes our services extremely difficult to run locally, and local development became a burden.
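To make the failure mode concrete, here is a minimal Python sketch of this kind of branching logic. It is not the Chef code referred to above: the function name, environment names, roles, and heap values are all invented for illustration.

```python
# Hypothetical illustration only: names and values are invented.
def java_max_heap(environment: str, role: str) -> str:
    """Pick a JVM max-heap flag by branching on the deployment context."""
    if environment == "prod":
        if role == "worker":
            return "-Xmx8g"
        return "-Xmx4g"
    if environment == "stage":
        return "-Xmx2g"
    # Fallback for dev and for anything unrecognised, including typos such
    # as "prd": a small heap is returned silently instead of failing.
    return "-Xmx512m"


print(java_max_heap("prod", "worker"))  # -Xmx8g
print(java_max_heap("prd", "worker"))   # -Xmx512m, the typo goes unnoticed
```

Every new factor (region, instance size, feature flag) adds another level of nesting, and nothing checks that the inputs or the returned values are valid.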

Additionally, we had little ability to audit Configuration or to determine its changesets. We had this monorepo in Git, but finding a specific change that may have caused an incident was more challenging than it should have been. We wanted to be able to identify the exact Configuration change between deployments easily, without needing to work through the logic of all those potential branches during incident response.

Finally, as we migrate to containers, we no longer have Configuration Management as a central location to declare application configuration. One of the preferred ways to get application configuration into a container is to inject it through the environment. We wanted to build a tool that would provide a single interface for application developers, whether they were still developing in the old world of Virtual Machines or the new world of Containers, and that would help bridge the migration by providing a consistent developer experience.

To recap, the requirements of this system are:

- Provide a single interface to create and manage application configuration to be injected into the environment at deploy time and consumed at runtime

- Provide type safety, schema validation, and auditability to application configuration

- Bridge the developer experience between deploying software to containers and virtual machines so there is a consistent approach for developers operating in either environment

- Ensure that this system is highly available so that configuration can be quickly, safely, and easily rolled forward or back during incidents

What we built

We built a system that we are calling Configuration Deployments that enables Configuration to be a top level property of a deployment. Previously, a Deployment consisted of both a Software Definition and a Hardware Definition. Now, a Deployment includes a Configuration.

Configurations are namespaced. A Deployment can contain multiple namespaces, which supports services that run multiple software packages side by side, each requiring its own Configuration. Configurations are created at the time of Deployment so that they can be modified on a per-environment and per-region basis. Anywhere that software can be deployed, we can deploy a unique Configuration.

Application developers create Configurations based on a JSON Schema Specification, so all Configuration values are typed and verified against their schema at creation time, which prevents deploying unsafe Configuration. Configurations are also immutable: to modify a Configuration you create a new revision of an existing one, leaving us with both the original and the modified Configuration. This gives us an audit log of all changes to Configuration, so we know who made changes, when they were made, and to which software they were applied. It also helps with rolling back to a previous version, because any old Configuration will always be present.
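The immutability and revision model can be sketched in a few lines of Python. This is an illustrative model under assumed names (`ConfigurationRevision`, `ConfigurationHistory`, and their fields are hypothetical), not the actual data model of the system described here.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from types import MappingProxyType
from typing import Any, List, Mapping


@dataclass(frozen=True)
class ConfigurationRevision:
    """One immutable revision; the fields double as an audit record."""
    namespace: str
    revision: int
    values: Mapping[str, Any]
    author: str
    created_at: datetime


class ConfigurationHistory:
    """Append-only list of revisions for a single namespace."""

    def __init__(self, namespace: str) -> None:
        self.namespace = namespace
        self._revisions: List[ConfigurationRevision] = []

    def create(self, values: Mapping[str, Any], author: str) -> ConfigurationRevision:
        rev = ConfigurationRevision(
            namespace=self.namespace,
            revision=len(self._revisions) + 1,
            values=MappingProxyType(dict(values)),  # read-only copy
            author=author,
            created_at=datetime.now(timezone.utc),
        )
        self._revisions.append(rev)
        return rev

    def revise(self, changes: Mapping[str, Any], author: str) -> ConfigurationRevision:
        """Create a new revision from the latest one; nothing is overwritten."""
        latest = self._revisions[-1]
        return self.create({**latest.values, **changes}, author)

    def get(self, revision: int) -> ConfigurationRevision:
        """Look up any earlier revision, for example to roll back to it."""
        return self._revisions[revision - 1]
```

Because old revisions are never modified or removed, rolling back amounts to deploying an earlier revision number again.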

How it works

Application developers create a file called configuration-schema.yaml and check it in at the top level of their software’s GitHub repository. This file defines the configuration required by the software as a JSON Schema Specification (we actually use YAML, which is a superset of JSON, but for this purpose they are interchangeable). See this (shortened) example from our automated cluster remediation service, Lazarus, below:

There are two important parts to this file: the property definitions, including types, default values, and a short description, and the list of required parameters that must have a value defined at Configuration creation time in order for the Configuration to be considered valid.
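As a rough illustration of those two parts, the sketch below expresses a small schema as a Python dictionary and checks values against it. The property names are invented and are not taken from the real Lazarus schema. The validator is a deliberately tiny stand-in that handles only `type`, `default`, and `required`; it also rejects unknown parameters, which is stricter than JSON Schema's default behaviour. A real system would use a full JSON Schema validator.

```python
from typing import Any, Dict

# Hypothetical schema: property names are invented for illustration.
SCHEMA: Dict[str, Any] = {
    "type": "object",
    "properties": {
        "poll_interval_seconds": {
            "type": "integer",
            "default": 60,
            "description": "How often to check cluster health.",
        },
        "dry_run": {
            "type": "boolean",
            "default": False,
            "description": "Log actions without performing them.",
        },
        "alert_email": {
            "type": "string",
            "description": "Address notified after a remediation.",
        },
    },
    "required": ["alert_email"],
}

# Maps a subset of JSON Schema type names to Python types.
_TYPES = {"integer": int, "number": (int, float), "boolean": bool, "string": str}


def build_configuration(schema: Dict[str, Any], values: Dict[str, Any]) -> Dict[str, Any]:
    """Apply defaults, then check required keys and types; raise ValueError on failure."""
    props = schema.get("properties", {})
    unknown = set(values) - set(props)
    if unknown:  # assumption: unknown keys are treated as errors
        raise ValueError(f"unknown parameters: {sorted(unknown)}")
    config = {k: p["default"] for k, p in props.items() if "default" in p}
    config.update(values)
    missing = [k for k in schema.get("required", []) if k not in config]
    if missing:
        raise ValueError(f"missing required parameters: {missing}")
    for key, value in config.items():
        type_name = props[key]["type"]
        # bool is a subclass of int in Python, so reject it for non-boolean types.
        if isinstance(value, bool) and type_name != "boolean":
            raise ValueError(f"{key}: expected {type_name}, got boolean")
        if not isinstance(value, _TYPES[type_name]):
            raise ValueError(f"{key}: expected {type_name}")
    return config
```

Calling `build_configuration(SCHEMA, {"alert_email": "oncall@example.com"})` fills in the two defaults, while omitting `alert_email` or passing `"60"` as a string for `poll_interval_seconds` raises `ValueError` before anything is deployed.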

Once this schema has been defined, we want to create a Configuration based on it that will be deployed along with our software. We do this through our Deployments User Interface that will allow us to bump the version of our software we have deployed and then create and attach this configuration to our deployment. It is important to note that configuration schemas are tied to a specific version of software so that they are tightly coupled – when the configuration schema changes, there should be code changes that will use the new configuration values, so these can be code reviewed and deployed together to ensure complete compatibility.

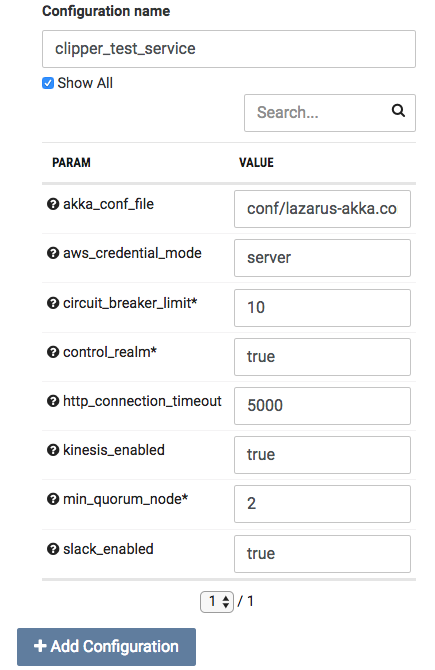

Our Configuration Editor is shown in the screenshot below:

By default, we only show parameters that are required (identified with the ‘*’) or parameters without values. You can filter for specific parameters with the search box or see them all by selecting the “Show All” checkbox.

We can add more Configurations by clicking the “Add Configuration” button at the bottom to make sure we include all the Configurations required by our software. As I mentioned before, these Configurations are namespaced so users are free to name their Configuration in any identifying way they choose.

As we change the version of our software to deploy, the UI will automatically pick up the associated configuration schema so that the Configuration values can be entered manually. The configuration will then be published to both our internal database cluster and multiple S3 buckets in different regions for redundancy. Publishing to S3 gives all hosts access to their configuration without our MySQL infrastructure being a failure point that prevents deployments, and using multiple buckets ensures that configurations remain accessible in the event of an S3 failure or a network partition.
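One way a reader could take advantage of that redundancy is to try each bucket in turn until one responds. The sketch below assumes that strategy; the post does not say how a bucket is actually chosen, and the source names, key format, and stand-in fetchers are hypothetical. No real S3 calls are made.

```python
from typing import Callable, List, Sequence, Tuple

# A fetcher takes a configuration key and returns the raw document bytes.
Fetcher = Callable[[str], bytes]


def fetch_with_fallback(key: str, sources: Sequence[Tuple[str, Fetcher]]) -> bytes:
    """Return the first successful result, trying sources in the given order."""
    errors: List[str] = []
    for name, fetch in sources:
        try:
            return fetch(key)
        except Exception as exc:  # any failure means "try the next source"
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all configuration sources failed: " + "; ".join(errors))


# Stand-in fetchers; a real reader would call S3 here.
def unavailable_bucket(key: str) -> bytes:
    raise ConnectionError("bucket unreachable")


def healthy_bucket(key: str) -> bytes:
    return b'{"poll_interval_seconds": 60}'


document = fetch_with_fallback(
    "lazarus/revision-3",
    [("bucket-region-a", unavailable_bucket), ("bucket-region-b", healthy_bucket)],
)
```

Here the first source fails and the second one supplies the document; only when every source fails does the caller see an error.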

After your deployment has completed and the VM or container has been created, a sidecar will fetch your configuration from one of the S3 buckets and the Configuration will be exposed to the application in three ways:

- If running in a Virtual Machine, Chef will contain the configuration so that a service’s Chef cookbook can access these values exactly as they were used before

- Via an HTTP server running on the host

- Exported as environment variables so that applications can transparently rely on their environment to be configured correctly with no overhead

By providing multiple means of accessing configuration, we do not restrict the types of workflows and applications that can be built using Configuration Deployments or force developers into using a method that is not convenient for their software.
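For the environment variable route, a sidecar has to turn a namespaced Configuration into flat variable names. The sketch below shows one plausible convention, `NAMESPACE_KEY` in upper case; that naming scheme, the function name, and the sample keys are assumptions rather than the documented behaviour of the system.

```python
import os
from typing import Any, Dict, Mapping


def to_env_vars(namespace: str, values: Mapping[str, Any]) -> Dict[str, str]:
    """Flatten one namespace into NAMESPACE_KEY=value pairs (assumed convention)."""
    env: Dict[str, str] = {}
    for key, value in values.items():
        name = f"{namespace}_{key}".upper().replace("-", "_")
        if isinstance(value, bool):
            env[name] = "true" if value else "false"  # avoid Python's "True"/"False"
        else:
            env[name] = str(value)
    return env


# Sidecar side: export the variables into the process environment.
os.environ.update(to_env_vars("lazarus", {"poll-interval": 30, "dry_run": True}))

# Application side: read the values back with no knowledge of the sidecar.
poll_interval = int(os.environ["LAZARUS_POLL_INTERVAL"])
dry_run = os.environ["LAZARUS_DRY_RUN"] == "true"
```

Environment variables are always strings, so the application still has to convert each value back to its intended type, as the last two lines do.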

Conclusion

Our platform organization is working hard to provide best-in-class solutions to our application developers to enhance their productivity, the resiliency of their services, and ability to focus on what matters most to them – building the applications that Twilio’s customers rely upon.

We have received excellent feedback on this system so far, but there is much we need to iterate on and improve. For now, Configurations are static. We want to add the ability to dynamically update configurations so that new host or container deployments are not required. We also plan to improve the user interface to allow for comparing configurations across deployments. Finally, we would love to leverage Netflix’s Archaius, which provides a rich feature set that would enhance our own Configurations system.
