Build a Smart IT Help Desk using AI with SendGrid, Twilio and Qdrant


What if your help desk could fix issues as they are being raised? Picture a support engine that grows smarter with every conversation, instantly triaging incoming issues and escalating to a human only what truly demands human attention. An automated system like this would leave your team free to focus on innovation, not inbox overload.

In this hands‑on tutorial, you’ll assemble a Smart IT Help Desk System that:

- Listens on WhatsApp through Twilio (using the WhatsApp Business API) for instant, familiar user interactions.

- Analyzes issues with Large Language Models, turning raw symptom data into clear, actionable diagnostics.

- Indexes and searches troubleshooting guides in Qdrant’s vector database to ground every answer in your own domain knowledge.

- Automates ticket workflows (classification, priority setting, and asset updates), slashing resolution times.

- Supports multimodal input by accepting screenshots or photos of errors, so agents see exactly what users see, cutting ambiguity and speeding fixes.

- Escalates critical incidents with an automated email alert to your admin team for urgent, on-site intervention, and follows up with satisfaction surveys.

Prerequisites

Before you begin, make sure you have the following:

- A Twilio account with the WhatsApp sandbox enabled. (You’ll use this for testing.)

You’ll need your Account SID, Auth Token, and the sandbox WhatsApp number.

- An OpenAI API key for GPT-4o (sign up on the OpenAI platform).

- A Twilio SendGrid account and API key for sending emails (alerts and surveys).

- Python 3.13+ installed. (You'll use FastAPI for the server.)

- An ngrok account and auth token (the app manages the tunnel through pyngrok) to expose your local server for Twilio webhooks.

- Familiarity with Python and APIs.

Project Setup

First, clone the source code repository. You can find the full code on GitHub. In your terminal, run:

Then create and activate a virtual environment. Run:

Next, install the required Python packages. Make sure you're in the directory where the requirements.txt file is located. Run the command below:

This pulls in:

- FastAPI and uvicorn for the web server.

- twilio helper library for WhatsApp messaging.

- sendgrid for the email API.

- openai for calling GPT-4o (through LangChain).

- qdrant-client and langchain-openai for vector search.

- python-dotenv to load .env files.

- pyngrok to manage an ngrok tunnel from Python.

Create a .env file in the project root to hold your configuration. Storing values in a .env file keeps credentials out of your source code. Pydantic's BaseSettings, which the app's Settings class extends, loads these variables automatically: setting env_file = ".env" in the Settings class makes them available on settings. Replace the placeholder values with your actual credentials:

Your application uses these environment variables to keep secrets and configuration out of your code:

- TWILIO_ACCOUNT_SID and TWILIO_AUTH_TOKEN together authenticate your app to send SMS or WhatsApp messages through your Twilio account. You can find both on your Twilio Console dashboard.

- TWILIO_PHONE_NUMBER is the E.164-formatted number you send from (sandbox or production). When sending from the Twilio WhatsApp Sandbox, this value is shown on the WhatsApp Sandbox page under From.

- OPENAI_API_KEY lets your code call OpenAI's APIs for chat completions, embeddings, and other AI services.

- NGROK_AUTH_TOKEN authenticates ngrok so it can expose your local server at a public URL and receive webhook callbacks during development.

- SENDGRID_API_KEY authorizes your access to SendGrid's email-sending service.

- FROM_EMAIL is the sender address for outgoing emails, and NOTIFICATION_EMAIL is the recipient for system alerts.

- USER_EMAILS holds a JSON mapping of user phone numbers to their email addresses, so you can link incoming messages to the correct user records.

With these in place, you can move on to building the application.

Build the Application

To begin, dive into the Python application, app.py. This FastAPI app orchestrates the chatbot's logic. This tutorial reviews the main parts of the code section by section so you understand how the application works at a low level. If you want to try out the application yourself first, jump down to the Run the Local Server section.

Configuration and Settings

load_dotenv() reads variables from the .env file into the process environment. The Settings(BaseSettings) class defines your configuration schema; because it uses Pydantic's BaseSettings, any of these values can be overridden with environment variables. Each attribute (for example, TWILIO_ACCOUNT_SID) defaults to os.getenv() for the matching variable, falling back to an empty string if it isn't set.

The inner Config class tells Pydantic to load .env and allow extra fields.

Finally, instantiate settings: settings = Settings(). All keys from .env are now in settings.
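To see the shape of this pattern without installing pydantic-settings, here is a minimal, dependency-free sketch that mimics it with a standard-library dataclass and os.getenv, using the same empty-string fallback. It is an illustration, not the repository's Settings class: it does not read .env by itself (call load_dotenv() first), and the user_emails property is an assumption about how the JSON in USER_EMAILS might be parsed.

```python
import json
import os
from dataclasses import dataclass, field


def env_field(name: str):
    """Dataclass field whose default is read from the environment at instantiation."""
    return field(default_factory=lambda: os.getenv(name, ""))


@dataclass
class Settings:
    # Illustrative stand-in for the tutorial's Pydantic Settings class.
    TWILIO_ACCOUNT_SID: str = env_field("TWILIO_ACCOUNT_SID")
    TWILIO_AUTH_TOKEN: str = env_field("TWILIO_AUTH_TOKEN")
    TWILIO_PHONE_NUMBER: str = env_field("TWILIO_PHONE_NUMBER")
    OPENAI_API_KEY: str = env_field("OPENAI_API_KEY")
    NGROK_AUTH_TOKEN: str = env_field("NGROK_AUTH_TOKEN")
    SENDGRID_API_KEY: str = env_field("SENDGRID_API_KEY")
    FROM_EMAIL: str = env_field("FROM_EMAIL")
    NOTIFICATION_EMAIL: str = env_field("NOTIFICATION_EMAIL")
    USER_EMAILS: str = env_field("USER_EMAILS")  # raw JSON text

    @property
    def user_emails(self) -> dict:
        """Parse USER_EMAILS (JSON of phone number -> email) into a dict."""
        return json.loads(self.USER_EMAILS) if self.USER_EMAILS else {}


settings = Settings()
```

Because each default is read when Settings() is called, variables exported after import are picked up by the next instantiation.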

Initialization: Ngrok and FastAPI App

This code imports pyngrok and sets its auth token so it can start tunnels programmatically.

You then create the FastAPI app instance (app = FastAPI()), which serves your webhook endpoints. Next, move on to memory management.

ConversationMemory Class

This class gives the assistant a memory of earlier messages.

The ConversationMemory class is designed to manage the conversation history between users and the assistant in a seamless, ongoing manner. When a user sends a message, the class first determines whether the message should be grouped with previous messages in an existing session or if a new session should be started, based on how much time has passed since the last interaction. It then stores each message, whether from the user or the assistant, along with relevant metadata such as the message type, content, media URL, timestamp, and session ID in a SQLite database.

As the conversation continues, the class retrieves the most recent messages for a user, up to a configurable limit, to provide context for the assistant’s responses. This allows the assistant to reference earlier parts of the conversation and maintain continuity, making interactions feel more natural and informed. To ensure the database remains efficient and doesn’t grow indefinitely, the class automatically deletes older messages that exceed the set history limit for each session and periodically cleans up conversations that have expired based on a timeout setting.

Additionally, the class provides methods to clear all conversation history for a specific user, which can be triggered by user commands or admin actions, and to perform manual or scheduled cleanup of expired conversations. This continuous management of conversation data enables the assistant to deliver context-aware, multi-turn interactions while keeping resource usage under control.
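A compact way to picture this behavior is the sqlite3 sketch below. The table layout, the 30-minute session_timeout, the history_limit of 10, and the method names are assumptions for illustration, not necessarily what the repository uses. A new session ID is issued when the user's last message is older than the timeout, each insert trims the session to its newest history_limit rows, and cleanup_expired() deletes messages older than the timeout.

```python
import sqlite3
import time
import uuid
from typing import Optional


class ConversationMemory:
    """Illustrative SQLite-backed history with time-based sessions."""

    def __init__(self, db_path: str = ":memory:", session_timeout: float = 1800, history_limit: int = 10):
        self.conn = sqlite3.connect(db_path)
        self.session_timeout = session_timeout  # seconds of silence before a new session starts
        self.history_limit = history_limit      # max messages kept per session
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, user_id TEXT, session_id TEXT, "
            "role TEXT, message_type TEXT, content TEXT, media_url TEXT, timestamp REAL)"
        )
        self.conn.commit()

    def _current_session(self, user_id: str, now: float) -> str:
        row = self.conn.execute(
            "SELECT session_id, timestamp FROM messages WHERE user_id = ? ORDER BY id DESC LIMIT 1",
            (user_id,),
        ).fetchone()
        if row and now - row[1] <= self.session_timeout:
            return row[0]        # recent activity: keep grouping into this session
        return uuid.uuid4().hex  # first message or timed out: start a new session

    def add_message(self, user_id: str, role: str, content: str, message_type: str = "text",
                    media_url: Optional[str] = None, now: Optional[float] = None) -> str:
        now = time.time() if now is None else now
        session_id = self._current_session(user_id, now)
        self.conn.execute(
            "INSERT INTO messages (user_id, session_id, role, message_type, content, media_url, timestamp) "
            "VALUES (?, ?, ?, ?, ?, ?, ?)",
            (user_id, session_id, role, message_type, content, media_url, now),
        )
        # Keep only the newest history_limit rows for this session.
        self.conn.execute(
            "DELETE FROM messages WHERE session_id = ? AND id NOT IN "
            "(SELECT id FROM messages WHERE session_id = ? ORDER BY id DESC LIMIT ?)",
            (session_id, session_id, self.history_limit),
        )
        self.conn.commit()
        return session_id

    def get_history(self, user_id: str, limit: Optional[int] = None) -> list[dict]:
        """Return the user's most recent messages, oldest first."""
        limit = self.history_limit if limit is None else limit
        rows = self.conn.execute(
            "SELECT role, message_type, content, media_url FROM messages "
            "WHERE user_id = ? ORDER BY id DESC LIMIT ?",
            (user_id, limit),
        ).fetchall()
        keys = ("role", "message_type", "content", "media_url")
        return [dict(zip(keys, row)) for row in reversed(rows)]

    def clear_user(self, user_id: str) -> None:
        self.conn.execute("DELETE FROM messages WHERE user_id = ?", (user_id,))
        self.conn.commit()

    def cleanup_expired(self, now: Optional[float] = None) -> int:
        """Delete messages older than the session timeout; return how many were removed."""
        now = time.time() if now is None else now
        cursor = self.conn.execute("DELETE FROM messages WHERE timestamp < ?", (now - self.session_timeout,))
        self.conn.commit()
        return cursor.rowcount
```

Passing now explicitly, as the optional parameter allows, makes the session and expiry logic easy to test without waiting in real time.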

KnowledgeBase Class

This section demonstrates how to build a simple retrieval‑augmented knowledge store using LangChain’s OpenAI embeddings and a Qdrant vector database.

self.embeddings = OpenAIEmbeddings(...) creates a LangChain OpenAIEmbeddings instance (authenticated with your OpenAI API key) that turns text into vector embeddings. The collection_name and vector_size settings tell your application to store embeddings in a Qdrant collection named technical_docs with dimensionality 1536, which matches the default output size of OpenAI's text-embedding-3-small and text-embedding-ada-002 models. self.qdrant_client = QdrantClient(path="./qdrant_storage") then initializes a local Qdrant client that stores data in ./qdrant_storage.

initialize_knowledge_base() checks whether the collection exists and, if not, creates it with cosine similarity. _create_knowledge_base() then loads all .txt files under technical_docs/, splits them into chunks, embeds each chunk with OpenAI, and upserts them into Qdrant with their text as payload, which populates the vector database. Finally, get_relevant_context(query, k=3) embeds the query, runs a vector search in Qdrant, and returns the top k matching document texts joined together. These snippets become the context you feed to GPT-4o.

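Together, this class ensures your technical documentation and troubleshooting guides are indexed for semantic search. Qdrant's graph-based (HNSW) index means a Qdrant server doesn't have to compute distances against every stored vector for each query; instead, it finds the closest matches quickly. The embedded local mode used here (QdrantClient(path=...)) is intended for development and small collections, so consider a Qdrant server or Qdrant Cloud as your documentation grows.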
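To make the retrieval step concrete without an API key or a Qdrant instance, the sketch below swaps in two simplifications: a toy bag-of-words term-count vector instead of OpenAIEmbeddings, and a Python list instead of the Qdrant collection. The chunk sizes are assumed values, and the fixed-window splitter is not necessarily the one the repository uses. Note that it scores every chunk on each query, which is the linear scan an HNSW index is designed to avoid as a collection grows.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap slightly."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    windows = (text[i:i + chunk_size] for i in range(0, max(len(text) - overlap, 1), step))
    return [w for w in windows if w.strip()]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def get_relevant_context(query: str, chunks: list[str], k: int = 3) -> str:
    """Brute-force top-k: score every chunk against the query and join the best k."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return "\n\n".join(ranked[:k])
```

Replacing embed() with real embeddings and the sorted() scan with a Qdrant search gives the same interface with semantic rather than keyword matching.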

DiagnosticAssistant Class

Building on the KnowledgeBase class, which efficiently stores and retrieves semantically relevant information, you now introduce the DiagnosticAssistant class. It coordinates Twilio, OpenAI, the knowledge base, and email to run diagnostics.

The self.twilio_client = Client(...) initializes the Twilio REST client to send messages. The self.openai_client acts as the client for the OpenAI API (GPT-4o). self.knowledge_base instantiates the KnowledgeBase above, so your vector DB is ready. The self.email_service creates an EmailService (defined in email_service.py) for sending maintenance emails and surveys. self.user_emails then loads the phone-to-email mapping.

get_user_email() strips the whatsapp: prefix from the sender's number and looks up the user's email in the USER_EMAILS mapping. If none is found, no emails are sent to that user. Next, write the code that builds the conversation context.
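The lookup itself is small. Here is a sketch, assuming the mapping has already been parsed from USER_EMAILS into a dict keyed by E.164 numbers; the helper name normalize_sender is illustrative.

```python
from typing import Optional


def normalize_sender(from_number: str) -> str:
    """Turn Twilio's 'whatsapp:+15550001111' into the bare '+15550001111'."""
    return from_number.strip().removeprefix("whatsapp:")


def get_user_email(from_number: str, user_emails: dict[str, str]) -> Optional[str]:
    """Return the registered email for a sender, or None so callers can skip emailing."""
    return user_emails.get(normalize_sender(from_number))
```

Returning None rather than raising lets the caller treat unregistered users as a normal case.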

The _build_conversation_context function constructs a list of message dictionaries that represent the conversation context for the AI assistant. It starts by adding a system message that instructs the assistant on its role and how to use conversation history. Next, it retrieves the user's recent conversation history from the ConversationMemory class. For each message in the history, it appends a dictionary to the messages list, distinguishing between user and assistant messages. If a user message includes an image, it notes this in the content. Finally, it adds the current user message to the end of the list. The resulting messages list provides a structured context, including both past exchanges and the current input, which is then used as input for the AI model to generate a relevant, context-aware response. Next, you need to write the code to send the WhatsApp message.
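Here is a minimal sketch of that message-building step in the OpenAI Chat Completions message format. It assumes each history item is a dict with role, content, and an optional media_url key; the system prompt wording and the "[User sent an image]" marker are hypothetical.

```python
from typing import Any

# Hypothetical system prompt; the repository's wording may differ.
SYSTEM_PROMPT = (
    "You are an IT help desk assistant. Use the earlier messages in this "
    "conversation for context and give concise, actionable troubleshooting steps."
)


def build_conversation_context(history: list[dict[str, Any]], current_message: str) -> list[dict[str, str]]:
    """Convert stored history plus the new message into Chat Completions messages."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    for item in history:
        role = "assistant" if item.get("role") == "assistant" else "user"
        content = item.get("content") or ""
        if role == "user" and item.get("media_url"):
            # Mention the attachment in text; the image itself is analyzed separately.
            content = f"[User sent an image] {content}".strip()
        messages.append({"role": role, "content": content})
    messages.append({"role": "user", "content": current_message})
    return messages
```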

The send_whatsapp_message() method formats the "from" and "to" numbers with the whatsapp: prefix. To stay under Twilio's message length limit, it calls chunk_message() to split long responses into chunks of at most 1500 characters, then sends each chunk via the Twilio API, pausing briefly between chunks so Twilio can process multiple messages. If an error occurs, the code logs it and returns a 500 error to the webhook.

The chunk_message() helper breaks a long string into numbered pieces under Twilio's limit. It splits on sentence boundaries and adds "(1/3)"-style prefixes when more than one chunk is needed.
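The two methods described above can be sketched together as follows, as an illustration under stated assumptions: the regex sentence splitter, the hard wrap for oversized sentences, and the 10-character reserve for the "(i/n) " prefix are choices made for this example. client is assumed to be a twilio.rest.Client, whose messages.create(body=..., from_=..., to=...) call returns a message with a sid, or any object with the same shape when testing.

```python
import re
import time

MAX_CHARS = 1500     # per-message budget used in the tutorial
PREFIX_RESERVE = 10  # room for a "(12/34) " style counter


def chunk_message(text: str, limit: int = MAX_CHARS) -> list[str]:
    """Split on sentence boundaries into parts that fit, numbering them when there are several."""
    budget = limit - PREFIX_RESERVE
    chunks, current = [], ""
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        # Hard-wrap a single sentence that is longer than the budget on its own.
        while len(sentence) > budget:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:budget])
            sentence = sentence[budget:]
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= budget:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    if len(chunks) <= 1:
        return chunks
    return [f"({i}/{len(chunks)}) {chunk}" for i, chunk in enumerate(chunks, start=1)]


def send_whatsapp_message(client, from_number: str, to_number: str, body: str, pause: float = 1.0) -> list[str]:
    """Send each chunk via client.messages.create and return the message SIDs."""
    def as_whatsapp(number: str) -> str:
        return number if number.startswith("whatsapp:") else f"whatsapp:{number}"

    sids = []
    for index, chunk in enumerate(chunk_message(body)):
        if index:
            time.sleep(pause)  # brief gap between consecutive parts
        message = client.messages.create(body=chunk, from_=as_whatsapp(from_number), to=as_whatsapp(to_number))
        sids.append(message.sid)
    return sids
```

Reserving room for the prefix before splitting keeps every numbered part within the budget, so no chunk has to be split a second time after numbering.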

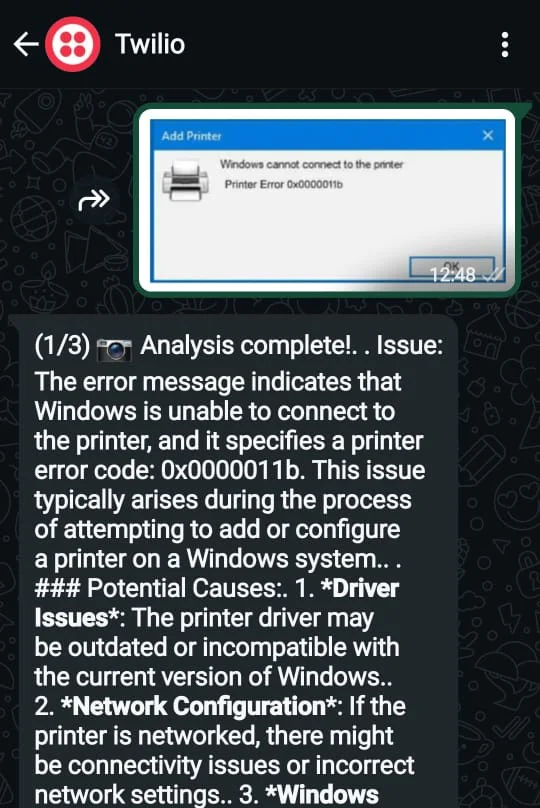

check_hardware_issue() uses GPT-4o as a classifier. It sends a strict system prompt that allows only "true" or "false" as answers, so GPT-4o reports whether the user's text describes a hardware or maintenance issue. This lets the application automatically detect when to escalate to maintenance. Next, you extend your pipeline beyond plain text to ingest and analyze user-submitted images as well. This enables your help desk to process screenshots of errors or photos of physical issues for richer, multimodal troubleshooting.
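A sketch of such a classifier, assuming openai_client is an openai.OpenAI() instance and the call goes through the Chat Completions API. The prompt text is hypothetical, and lowercasing then checking startswith("true") is a defensive choice in case the model adds whitespace or punctuation.

```python
HARDWARE_PROMPT = (
    "You are a strict classifier. Answer with exactly one word, true or false: "
    "does the user's message describe a physical hardware or maintenance problem?"
)  # hypothetical wording


def check_hardware_issue(openai_client, text: str, model: str = "gpt-4o") -> bool:
    """Return True when the model labels the message as a hardware/maintenance issue."""
    response = openai_client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": HARDWARE_PROMPT},
            {"role": "user", "content": text},
        ],
        temperature=0,  # reduce run-to-run variation for a yes/no label
    )
    answer = (response.choices[0].message.content or "").strip().lower()
    return answer.startswith("true")
```

Anything other than a reply beginning with "true" is treated as "not hardware", so an unexpected answer never triggers a maintenance email.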

When a user sends an image, process_image() downloads it from Twilio's authenticated media URL, encodes it in base64, and submits it to the vision-capable gpt-4o-mini model with a prompt asking for a description. Once you receive the text description of the technical issue, you query Qdrant for related context and issue a second GPT-4o request to generate a solution. The final response combines the described issue with GPT-4o's suggested fix.
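The image step can be sketched with the standard library as below. Assumptions: Twilio media URLs are fetched with HTTP Basic auth using your Account SID and Auth Token, the MIME type comes from Twilio's MediaContentType0 form field (defaulting to JPEG here), and the prompt text is illustrative. The image is sent to the model inline as a base64 data URL.

```python
import base64
import urllib.request


def download_twilio_media(media_url: str, account_sid: str, auth_token: str) -> bytes:
    """Fetch a MediaUrl0 attachment with HTTP Basic auth using Twilio credentials."""
    token = base64.b64encode(f"{account_sid}:{auth_token}".encode()).decode("ascii")
    request = urllib.request.Request(media_url)
    # Unredirected: credentials go to the original host only, not to any redirect target.
    request.add_unredirected_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read()


def build_vision_messages(image_bytes: bytes, mime_type: str = "image/jpeg") -> list[dict]:
    """Chat Completions messages that embed the image inline as a base64 data URL."""
    data_url = f"data:{mime_type};base64,{base64.b64encode(image_bytes).decode('ascii')}"
    return [{
        "role": "user",
        "content": [
            {"type": "text", "text": "Describe the technical issue shown in this image."},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }]
```

You would then pass build_vision_messages(image_bytes) as messages to openai_client.chat.completions.create(model="gpt-4o-mini", ...) and use the returned description as the query for Qdrant.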

Text Processing

This section implements the core logic that powers the technical support assistant. This handler is responsible for processing incoming WhatsApp messages, determining whether they are valid technical support queries, and generating appropriate responses. It combines language model capabilities, context retrieval from a vector database, and email notifications for hardware issues and customer feedback. See the code below.

process_text() is the core message handler. First, you ask GPT-4o whether the text is a technical support query. If not, you reply with a default “I can only help with technical issues” message, which conserves LLM usage and keeps the assistant focused on its domain. If it is technical, you retrieve relevant context from Qdrant, then send the context plus the user query to GPT-4o for a solution. You then call check_hardware_issue() to set is_hardware_issue. If it's true, you call send_maintenance_notification() (see EmailService below) to email the maintenance team, and add a note to the WhatsApp response that maintenance has been alerted. If the sender has a registered email, you generate a random interaction_id and call send_satisfaction_survey() to email them a survey after the support is done. Finally, you return GPT-4o's solution text to WhatsApp.
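To make the control flow explicit, here is a sketch with every external call injected as a plain function, so it runs without API keys. The TextPipeline name, the callable signatures, the appended maintenance note, and the 8-character interaction ID are assumptions for illustration; the branch order follows the description above.

```python
import uuid
from dataclasses import dataclass
from typing import Callable, Optional

OFF_TOPIC_REPLY = "I can only help with technical issues."


@dataclass
class TextPipeline:
    """Hypothetical wiring of process_text(); each dependency is an injected callable."""
    is_technical: Callable[[str], bool]             # GPT-4o "is this a support query?" check
    get_context: Callable[[str], str]               # Qdrant retrieval
    solve: Callable[[str, str], str]                # GPT-4o answer from (query, context)
    is_hardware: Callable[[str], bool]              # check_hardware_issue()
    notify_maintenance: Callable[[str, str], None]  # send_maintenance_notification(issue, sender)
    send_survey: Callable[[str, str], None]         # send_satisfaction_survey(email, interaction_id)
    lookup_email: Callable[[str], Optional[str]]    # get_user_email()

    def process_text(self, sender: str, text: str) -> str:
        if not self.is_technical(text):
            return OFF_TOPIC_REPLY  # no retrieval or answer generation for off-topic messages
        solution = self.solve(text, self.get_context(text))
        if self.is_hardware(text):
            self.notify_maintenance(text, sender)
            solution += "\n\nOur maintenance team has been notified."
        email = self.lookup_email(sender)
        if email:
            self.send_survey(email, uuid.uuid4().hex[:8])  # random interaction_id
        return solution
```

Injecting the dependencies this way keeps the branching logic testable with stubs, while the real app passes bound methods of its OpenAI, Qdrant, and EmailService objects.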

This method ties together all parts: GPT-4o classification and answer, Qdrant context retrieval, and triggering emails via SendGrid when appropriate.

Ngrok Tunneling

Next, expose your local endpoints publicly with ngrok so Twilio can reach and interact with your application.

start_ngrok() opens an ngrok tunnel on port 8000, where your FastAPI app will run. Twilio webhooks must point to this public URL to reach your local server. cleanup() disconnects the ngrok tunnel on shutdown.

At the end of app.py, you set up the FastAPI endpoints and the main loop:

/webhook: This FastAPI POST endpoint is where Twilio sends incoming WhatsApp messages. You extract:

From: who sent it (e.g., "whatsapp:+1234567890").

MediaUrl0: if an image was attached.

Body: the text message.

Next, you strip the whatsapp: prefix from the sender's number and check whether the message contains an image or text. Based on that, you call process_image() or process_text().

Finally, you reply by calling send_whatsapp_message() to send back to the same sender.

Twilio expects a quick HTTP response, so you return JSON after scheduling the response message.
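The routing part of the webhook can be separated from FastAPI and tested on its own. The sketch below assumes the form fields are already parsed into a mapping (in FastAPI, await request.form() returns one, provided python-multipart is installed). From, Body, and MediaUrl0 are fields Twilio posts; route_incoming is a hypothetical helper name.

```python
from collections.abc import Mapping


def route_incoming(form: Mapping[str, str]) -> tuple[str, str, str]:
    """Pick a handler for a Twilio WhatsApp webhook payload.

    Returns (kind, sender, payload): kind is "image" when MediaUrl0 is present,
    otherwise "text" with the stripped Body as payload.
    """
    sender = form.get("From", "").removeprefix("whatsapp:")
    media_url = form.get("MediaUrl0")
    if media_url:
        return "image", sender, media_url
    return "text", sender, form.get("Body", "").strip()
```

To respond quickly, one option is to hand the chosen handler to FastAPI's BackgroundTasks (background_tasks.add_task(...)) and return JSON immediately; that is one way to implement the scheduling described above, not necessarily the repository's.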

A simple root endpoint (/) lets you verify the server is live.

When you run python app.py, main() starts ngrok, prints the public URL, and runs the FastAPI server. It also reminds you to set the Twilio webhook to <ngrok_url>/webhook.

On shutdown, it cleans up the ngrok tunnel gracefully.

At this point, you've covered the main parts of app.py: configuration, the knowledge base, message handling, and how emails are triggered for maintenance alerts and surveys. Now let's move on to the email service.

How the Email Service Integrates with Your AI Workflow

This helper module handles sending HTML emails via SendGrid. It defines an EmailService class with methods for maintenance alerts and satisfaction surveys.

You create a SendGrid API client using the SENDGRID_API_KEY. from_email is your verified sender; notification_email is where you’ll send maintenance alerts.

Next comes the maintenance notification.

The send_maintenance_notification() sends a styled HTML email to alert maintenance staff.

You then use SendGrid's Mail helper to construct the email. The subject indicates the issue and priority. The HTML includes the issue description (escaped for safety), the priority (colored red for High), contact info (the user's WhatsApp number and email), any extra hardware details in a <pre> block, and a timestamp. You then send the email via self.client.send(message) and log the response status. SendGrid's v3 Mail Send API expects a JSON request body, and the helper library builds it for you. Next, send the survey.
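The HTML assembly can be sketched with the standard library. The markup, the red hex value, and the function name are assumptions; in the service, a string like this would be passed as html_content to sendgrid.helpers.mail.Mail(from_email=..., to_emails=..., subject=..., html_content=...) before calling send(). html.escape is what keeps user-supplied text from injecting markup into the alert.

```python
import html
from datetime import datetime, timezone
from typing import Optional


def build_maintenance_html(issue: str, priority: str, whatsapp_number: str,
                           user_email: Optional[str] = None, hardware_details: str = "") -> str:
    """Assemble the alert body: escaped issue, colored priority, contact info, details, timestamp."""
    color = "#d32f2f" if priority.strip().lower() == "high" else "#333333"  # red for High
    details = f"<pre>{html.escape(hardware_details)}</pre>" if hardware_details else ""
    reported_at = datetime.now(timezone.utc).strftime("%Y-%m-%d %H:%M:%S UTC")
    return (
        "<h2>Maintenance Request</h2>"
        f"<p><strong>Issue:</strong> {html.escape(issue)}</p>"
        f'<p><strong>Priority:</strong> <span style="color:{color}">{html.escape(priority)}</span></p>'
        f"<p><strong>WhatsApp:</strong> {html.escape(whatsapp_number)}<br>"
        f"<strong>Email:</strong> {html.escape(user_email or 'not registered')}</p>"
        f"{details}"
        f"<p><small>Reported at {reported_at}</small></p>"
    )
```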

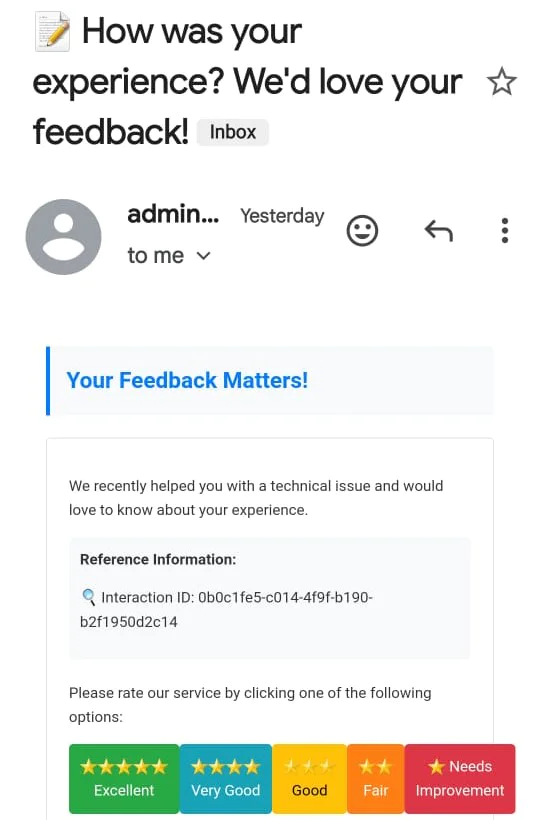

In the final part of the module, send_satisfaction_survey() builds an email asking the user to rate the service. It includes clickable buttons that are actually mailto: links to the notification email, each with a pre-filled subject and body containing the rating and interaction ID. For example, clicking “⭐⭐⭐⭐ Very Good” opens an email to support-team@company.com with the subject “Service Rating - {interaction_id}” and the body “Rating: 4 stars, Interaction ID and Comments”. You turn off SendGrid's click tracking so these mailto links work properly. Finally, you send the email and log the status.
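Each button boils down to a percent-encoded mailto: URL. This is a sketch under assumptions: only the 4-star “Very Good” label comes from the example above, the other labels and the inline style are illustrative, and urllib.parse.quote encodes spaces as %20 (not +), which is how mailto: URIs represent spaces. Disabling click tracking happens separately on the SendGrid message; the helper library provides TrackingSettings and ClickTracking classes for that.

```python
import html
from urllib.parse import quote

# Star count -> label. Only 4 -> "Very Good" comes from the tutorial; the rest are illustrative.
RATINGS = {5: "Excellent", 4: "Very Good", 3: "Good", 2: "Fair", 1: "Poor"}


def rating_mailto(notification_email: str, interaction_id: str, stars: int) -> str:
    """Build a mailto: link with a percent-encoded, pre-filled subject and body."""
    subject = f"Service Rating - {interaction_id}"
    body = f"Rating: {stars} stars\nInteraction ID: {interaction_id}\nComments: "
    return f"mailto:{notification_email}?subject={quote(subject)}&body={quote(body)}"


def rating_buttons_html(notification_email: str, interaction_id: str) -> str:
    """One button-style anchor per rating, highest first; hrefs are HTML-escaped."""
    anchors = []
    for stars, label in RATINGS.items():
        href = html.escape(rating_mailto(notification_email, interaction_id, stars))
        anchors.append(
            f'<a href="{href}" style="display:block;margin:6px 0;padding:8px;'
            f'background:#1a73e8;color:#fff;text-decoration:none">{"⭐" * stars} {label}</a>'
        )
    return "\n".join(anchors)
```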

With all these pieces in place, it's time to test the application.

Run the Local Server

To start the application, simply run:

This will:

- Launch an ngrok tunnel and print the public URL.

- Start the FastAPI server on port 8000.

You should see output like:

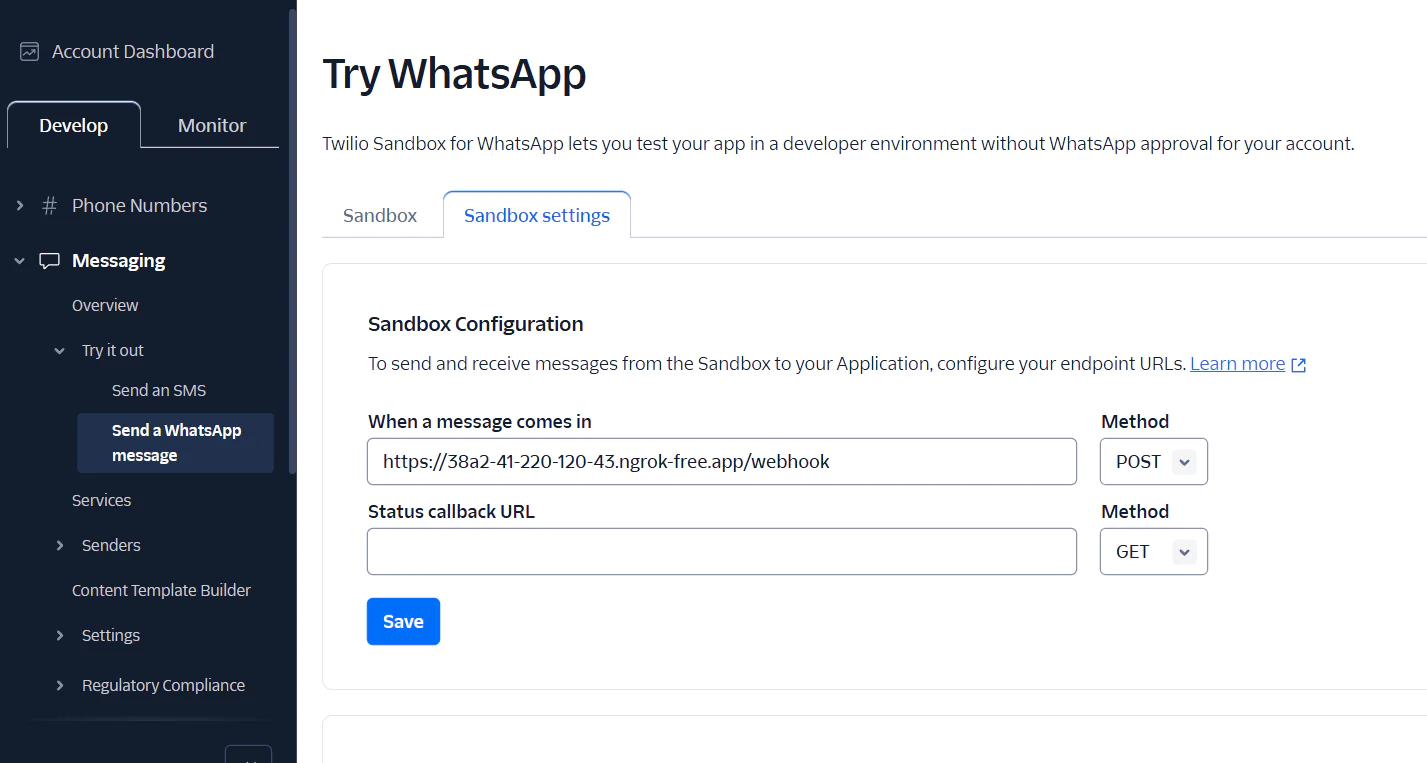

Configure Twilio WhatsApp Sandbox

Log into your Twilio Console, navigate to Messaging → Try it Out → Send a WhatsApp Message, and follow the steps to activate your sandbox. In particular:

- Join the Sandbox: On your phone's WhatsApp, send the message join <your-keyword> to the sandbox number +1 234 567 8900. Twilio will reply, confirming you've joined.

- Configure Webhook URL: Tell Twilio where to send incoming message webhooks by setting the sandbox's When a message comes in field to <ngrok_url>/webhook with the HTTP POST method. Do this once your application is running, since that is what produces the ngrok URL. For more illustrations, see the screenshot below.

The Twilio WhatsApp Sandbox is a pre-configured environment that lets you prototype immediately without a fully approved WhatsApp sender. Once you've joined the sandbox, any messages you send to the sandbox number are forwarded to your webhook, and you can reply programmatically. After you connect, the sandbox's From number is displayed under Send a business-initiated message. This number must match TWILIO_PHONE_NUMBER in your .env file.

With the server running, you can now test the bot:

- Send a message from your WhatsApp (that joined the sandbox) to your Twilio sandbox number.

- The bot should reply after a moment. For example, try asking a simple technical question or even send an image of an error.

- If you say something clearly non-technical, it should politely decline.

- If you describe a hardware issue (e.g. “My printer is making a grinding noise”), the application will suggest a fix using GPT-4o and also trigger an email alert.

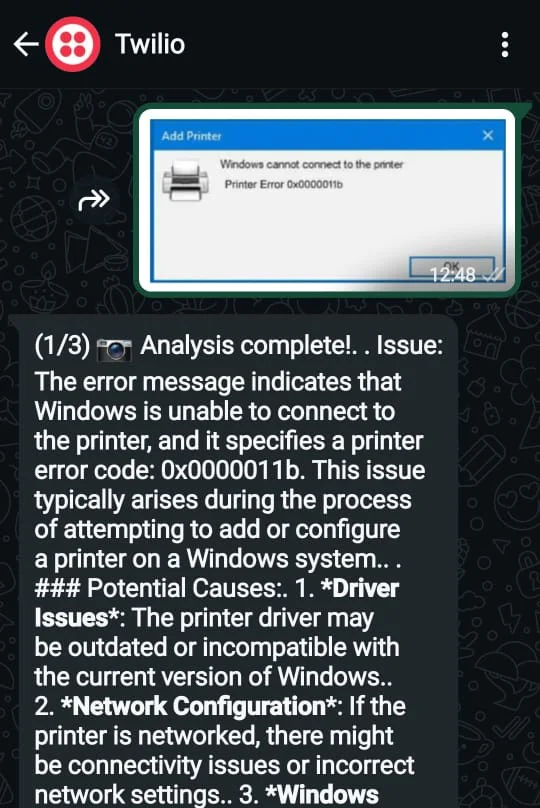

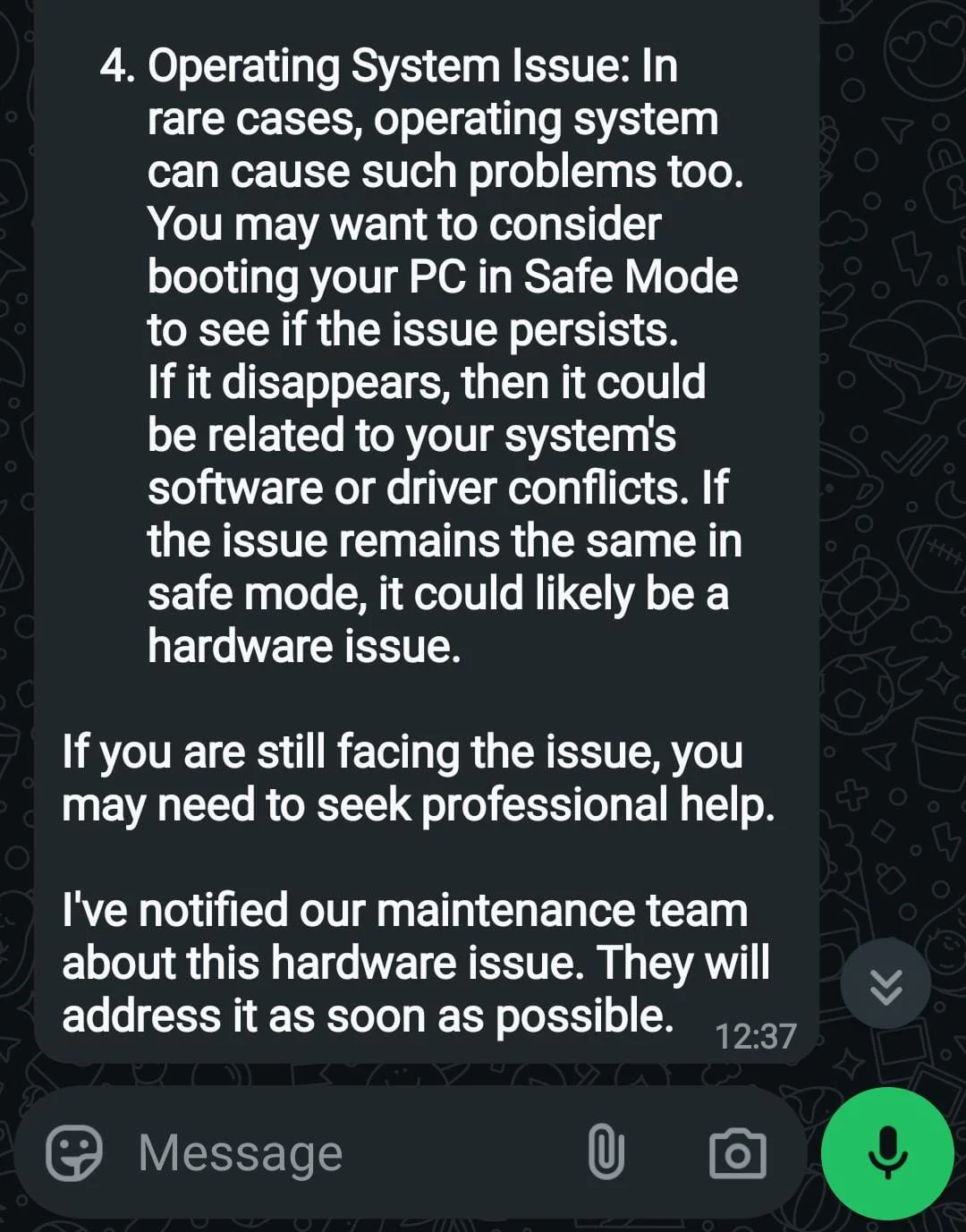

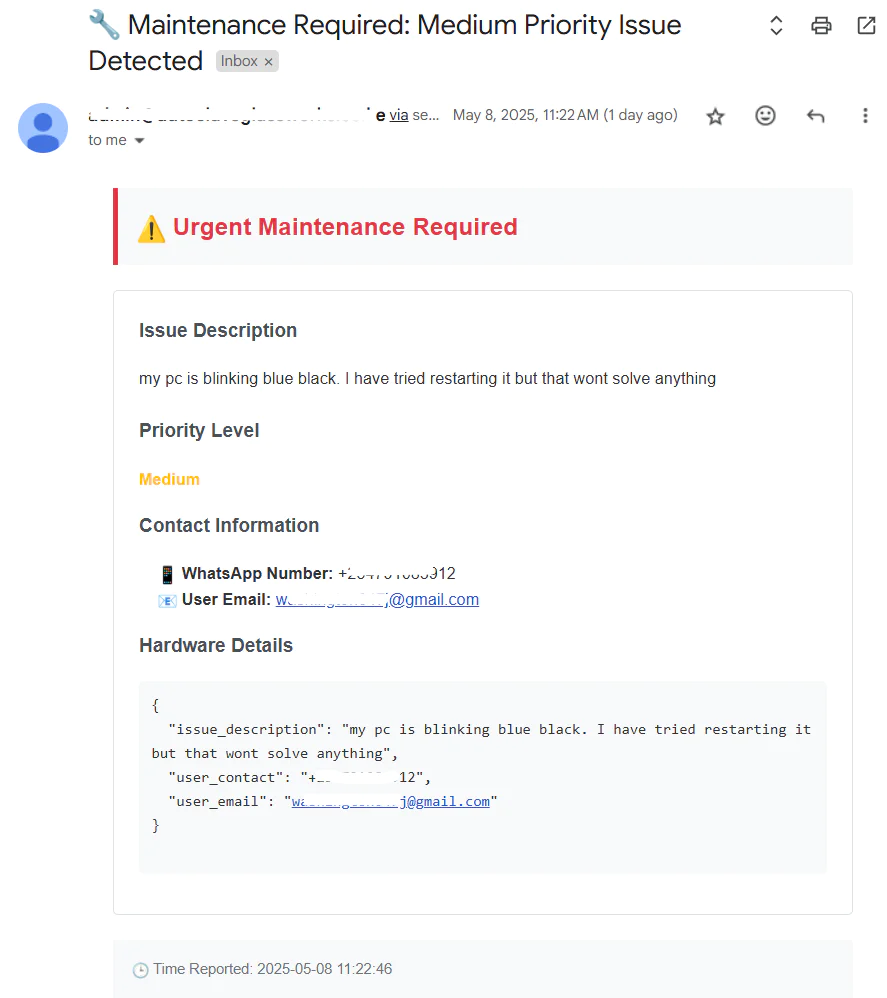

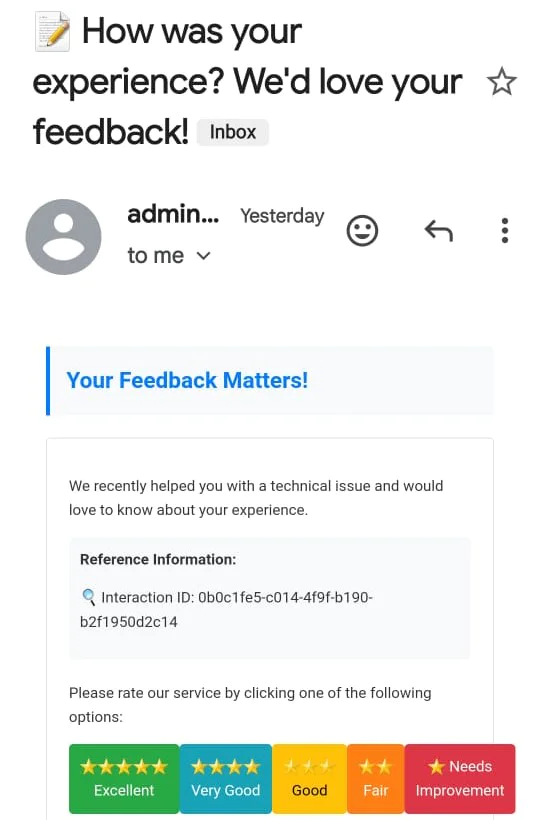

Your maintenance team will receive a SendGrid email if it's a hardware issue, thanks to the send_maintenance_notification() call. After the conversation, if your number is in USER_EMAILS, you'll get a satisfaction survey email. The screenshots below show how the application handles different kinds of issues.

Send an image to see what happens.

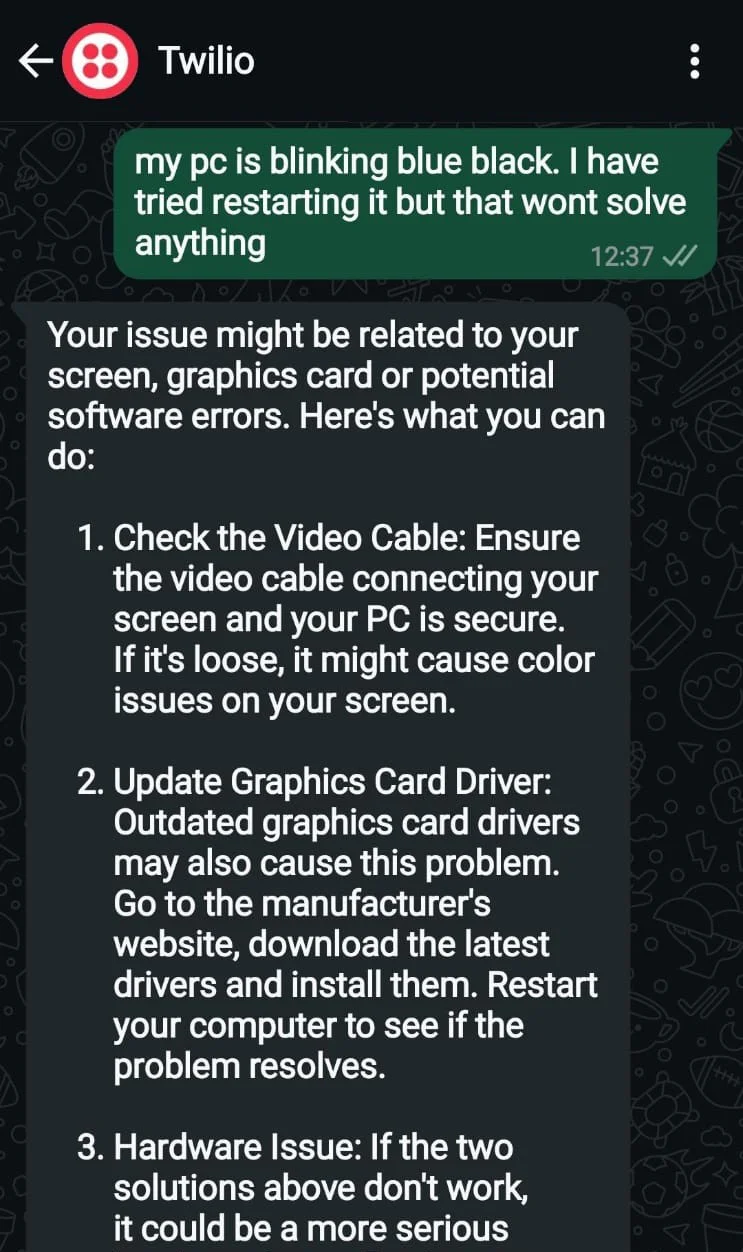

Now send a text message to see how it responds.

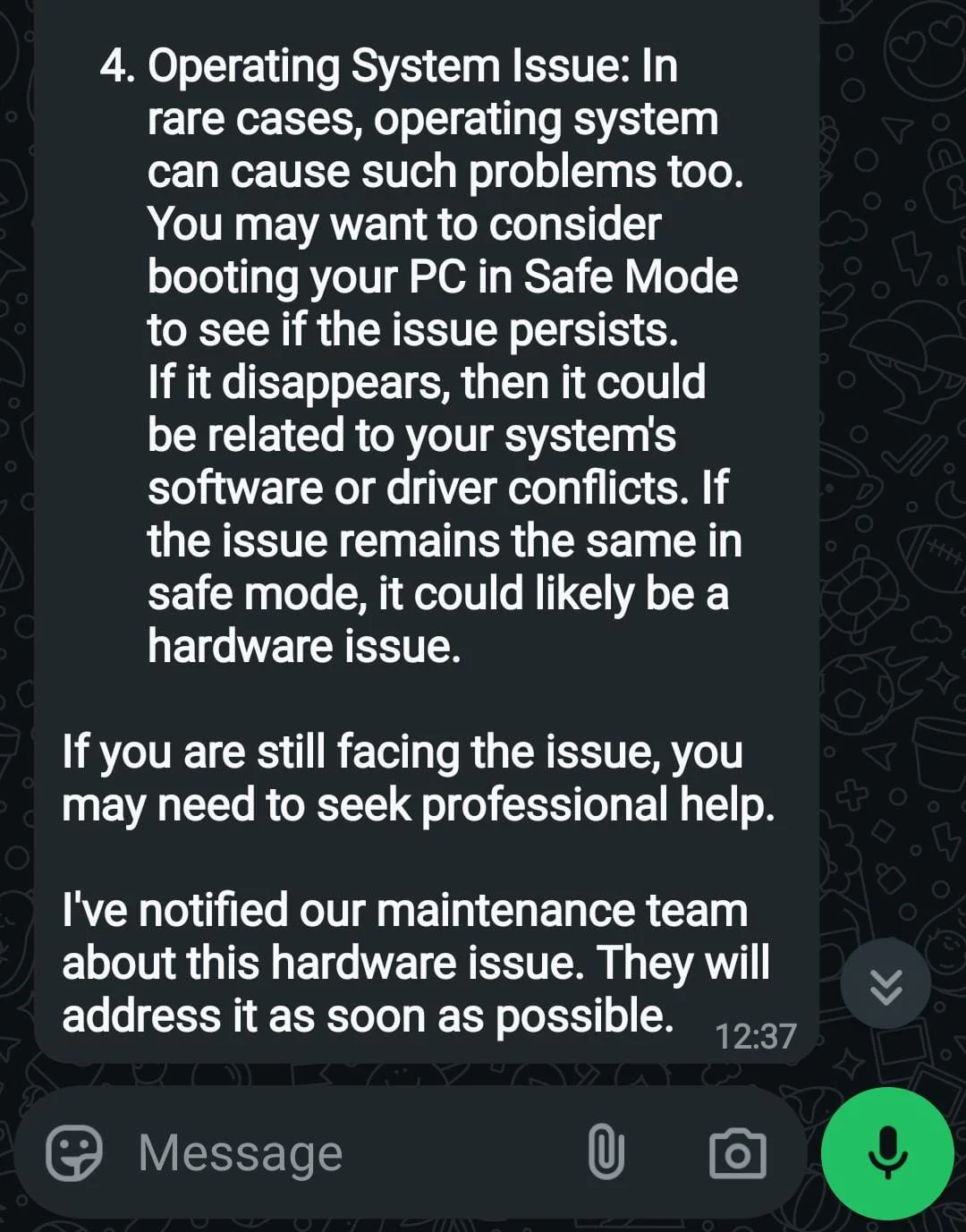

The conversation continues below. When needed, the application brings a human into the loop by sending a notification email to the administrator. See the screenshot below.

It doesn't stop there: the next screenshot shows the email triggered and sent to the technical support team.

Finally, a survey email is sent to check the user's satisfaction. See the screenshot below.

Troubleshooting Steps

Verify 'From' Address:

- Double-check that the From parameter is set to whatsapp:+141XXXXXXXX.

- This is the default number for the Twilio WhatsApp Sandbox.

Activate and Join the Sandbox:

- Navigate to the Twilio WhatsApp Sandbox page.

- Follow the instructions to send the join code from your WhatsApp number to the sandbox number.

Use Live Credentials:

- Ensure that your API requests use your live Twilio Account SID and Auth Token.

- Avoid using test credentials, as they don't support WhatsApp messaging.

Check Twilio Console for Approved Numbers:

- Visit the Twilio WhatsApp Senders page to confirm which numbers are approved for WhatsApp messaging.

- Only approved numbers can be used as the From address in your API requests.

Check Your Email Configuration:

- Ensure FROM_EMAIL is a verified sender in SendGrid so it can send emails.

- If you don't receive emails in your inbox, check your spam or promotions folders for notification emails.

That’s how to build a Smart IT Help Desk with AI, Twilio WhatsApp, Qdrant, and SendGrid

This tutorial gave you a complete, end-to-end example of a locally hosted Smart IT Help Desk. It receives WhatsApp messages via Twilio's Sandbox for WhatsApp, enriches them semantically with Qdrant vector search, reasons over that context with GPT-4o, and automatically escalates hardware issues and sends satisfaction surveys via SendGrid. You saw how to configure FastAPI, expose your webhook with ngrok, index technical docs into Qdrant, and wire together every component in app.py and email_service.py.

For further enhancements, consider adding:

- Robust Data Architecture:

Migrate vector embeddings from local Qdrant to Qdrant Cloud for auto‑scaling, high availability, zero‑downtime upgrades, monitoring, and backups

Introduce a relational database (PostgreSQL/MySQL) with tables for users, tickets, messages, and survey_responses—enabling ACID guarantees, structured queries, and storage of user feedback for analytics and model retraining

- Feedback‑Driven Learning Loop with a Self‑Improving Application:

Capture survey_ratings (1–5 stars) and comments in your SQL survey_responses table; periodically extract low‑rating cases to refine prompts, update Qdrant context, or fine‑tune models—so the system learns from its mistakes.

By systematically logging survey responses, analyzing low-rating interactions, and updating both your semantic index and LLM prompts, you create a closed-loop process that makes the help desk smarter over time: an adaptive system that learns from its mistakes and continuously refines its own performance.

These additions can further streamline your customer care, providing deeper insights and improving customer service efficiency.

Jacob Muganda builds AI applications and demystifies complex topics through concise tutorials on Python and AI integrations. He’s an experienced AI engineer adept at crafting and deploying cutting‑edge algorithms to solve real‑world challenges. He won the EFH Innovation Sprint Hackathon for contextualizing LLM reasoning to scientific papers and fintech.
