Create an AI Commentator with GPT-4V, OpenAI TTS, Replit, LangChain, and SendGrid


Problem: When Draymond Green got ejected for putting Rudy Gobert in a chokehold, I wish I could've had my favorite NBA commentator Stephen A. Smith's commentary on it.

Solution: I built this application using OpenAI's new Vision API (GPT-4V), Twilio SendGrid, Replit, LangChain, Streamlit, and OpenCV to Stephen Smith-ify any video. It takes an input video clip and generates commentary the way he might deliver it. (Thank you to Twilio Solutions Engineer and my AccelerateSF hackathon teammate Chris Brox for guarding me and letting me break his ankles for the video below!)

You can test the app out here!

Prerequisites

- Twilio SendGrid account - make an account here and make an API key here

- An email address to test out this project

- A Replit account for hosting the application - make an account here

- OpenAI account - make an account here and find your API key here

Some of the dependencies needed for this tutorial include:

- moviepy to help process video and audio

- cv2 (OpenCV) to help handle video frames

- langchain to read and parse a CSV for relevant statistics

- openai for OpenAI's GPT-4V and text-to-speech (TTS) APIs

- requests for making HTTP requests to OpenAI's API

- streamlit to create a web-based UI in Python

- tempfile to help handle temporary files while processing

Get Started with Replit

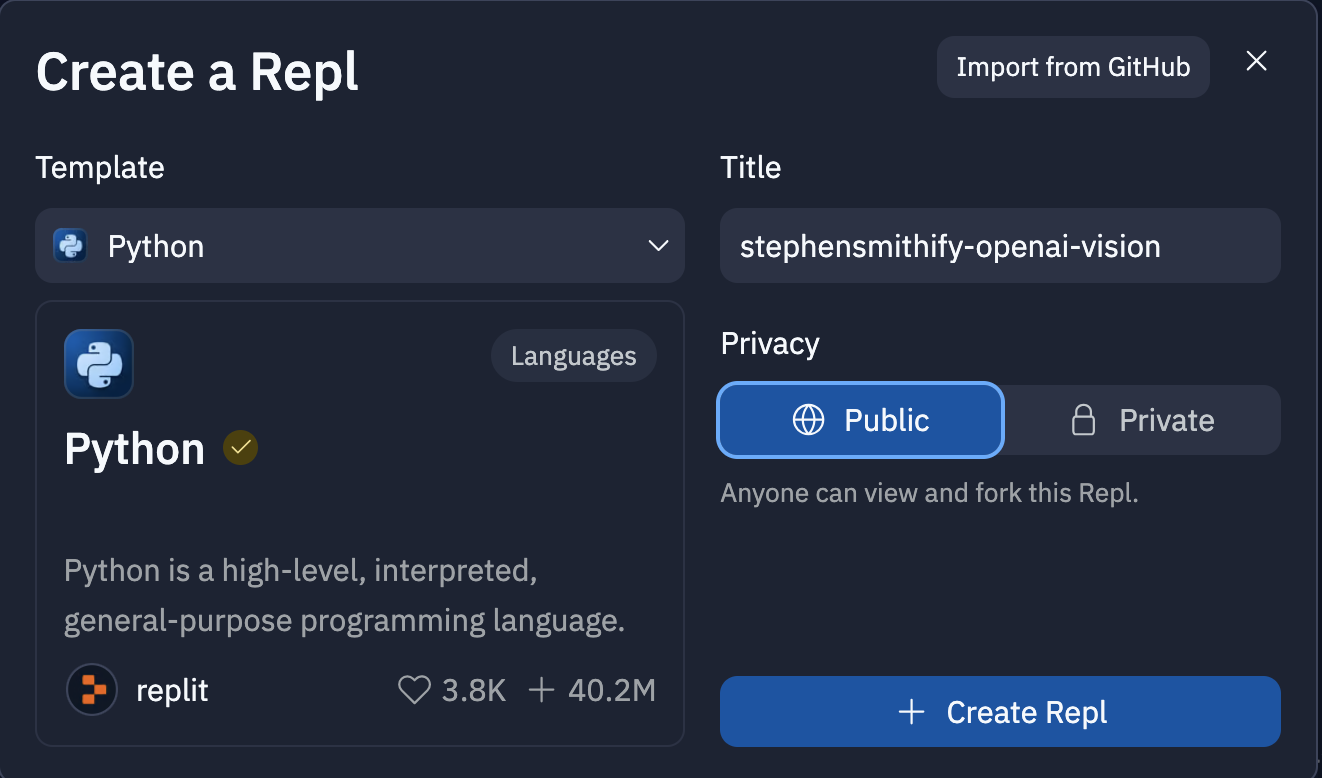

Log in to Replit and click + Create Repl to make a new Repl. A Repl, named after "read-eval-print loop", is an interactive programming environment that lets you write and execute code in real time.

Next, select a Python template, give your Repl a title like stephensmithify-openai-vision, set the privacy, and click + Create Repl.

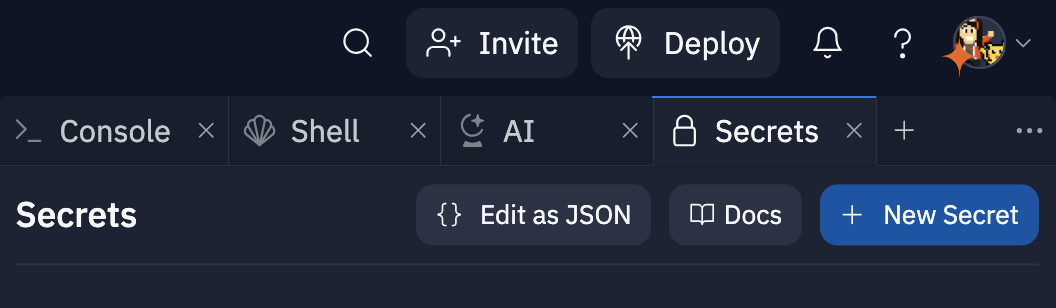

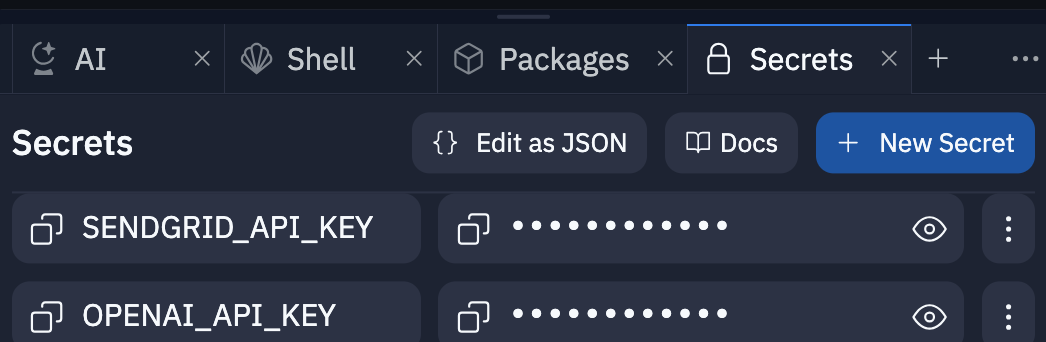

Under Tools of your new Repl, scroll and click on Secrets.

Click the blue + New Secret button on the right.

Add two secrets where the keys are titled OPENAI_API_KEY and SENDGRID_API_KEY, and their corresponding values are those API keys. Keep them hidden!
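Replit makes Secrets available to the running program as environment variables. As a minimal sketch (the helper name require_secret is hypothetical, not from the original project), the app could read both keys and fail fast with a readable message if one is missing:

```python
import os


def require_secret(name: str) -> str:
    """Return a Replit Secret, which Replit exposes as an environment variable.

    Hypothetical helper: raises a RuntimeError with a readable message instead
    of a bare KeyError, so a missing key is obvious as soon as the app starts.
    """
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Missing secret {name!r}: add it under Tools > Secrets")
    return value
```

For example, main.py could start with openai_api_key = require_secret("OPENAI_API_KEY") and sendgrid_api_key = require_secret("SENDGRID_API_KEY").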

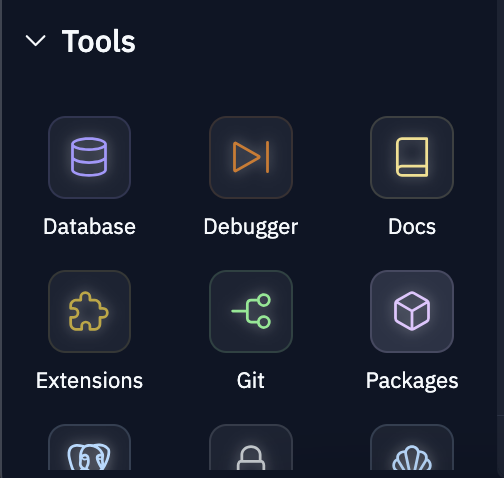

Back under Tools, select Packages.

Search and add the following:

- base256@1.0.1

- langchain@0.0.345

- langchain-experimental@0.0.43

- moviepy@1.0.3

- opencv-contrib-python-headless@4.8.1.78

- requests@2.31.0

- sendgrid@6.10.0

- streamlit@1.28.2

- tabulate@0.9.0

In the shell, run pip install openai==0.28. This older version is needed because the code calls the legacy openai.ChatCompletion interface, which openai 1.0 and later removed.

Download this picture or a similar one of Stephen Smith.

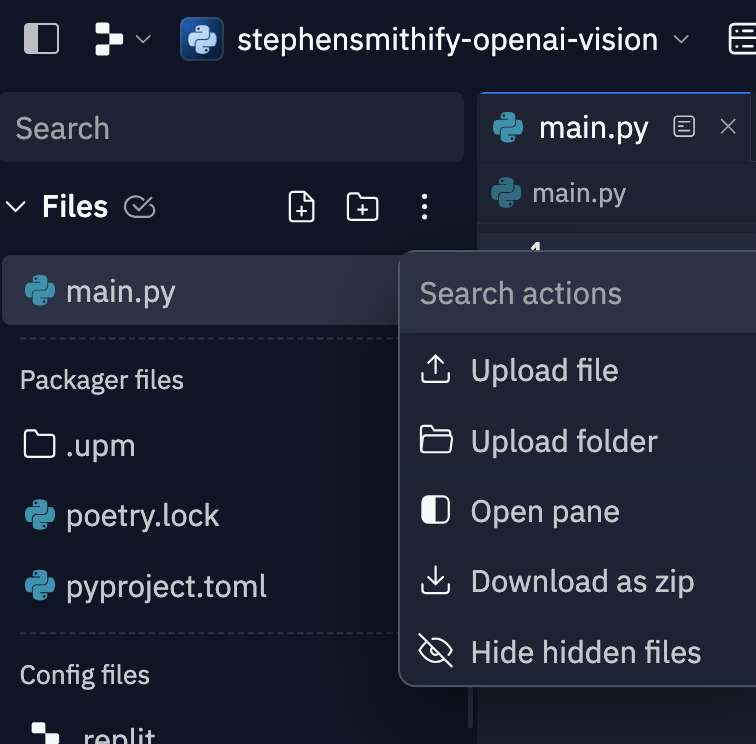

Upload it by selecting the three dots next to Files and then clicking the Upload file button.

Similarly, download some basketball data, like Stephen Curry's statistics here on Kaggle. The stats are just for fun: a LangChain CSV agent reads them so they can be worked into the prompt that generates the commentary.

Upload it by again clicking the three dots followed by the Upload file button.

Lastly, open the .replit file and replace its run command so that whenever the app is run, it starts via streamlit run.

Now we're going to write some Python code to generate text with OpenAI's new Vision API based on an input video.

Generate Stephen Smith-like Text for an Input Video

At the top of the main.py file in Replit, add the following import statements:

Next, include the downloaded Stephen Smith image and set the prompt we will pass to OpenAI telling the model to generate a short script summarizing an input basketball video split into frames. OpenAI's model didn't like being told to mimic someone else, but you can get around that using what is called the grandma exploit.

Now we'll make some helper functions. The first function uses OpenCV to extract frames from an input video file.

Once we have the video frames, we can build our prompt and send GPT-4V a request containing only every 48th frame, since the model does not need to see each frame to understand what's happening.
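Here is a sketch of building that request body, assuming the Chat Completions image format with base64 data URLs (content parts of type "image_url"). The function name build_vision_messages and the FRAME_STRIDE constant are hypothetical. Slicing with [::48] keeps the first frame and every 48th frame after it:

```python
FRAME_STRIDE = 48  # the tutorial samples every 48th frame


def build_vision_messages(prompt: str, base64_frames: list[str],
                          stride: int = FRAME_STRIDE) -> list[dict]:
    """Build a Chat Completions message list: the prompt plus sampled frames.

    Hypothetical helper; assumes frames are base64-encoded JPEGs.
    """
    content = [{"type": "text", "text": prompt}]
    for frame in base64_frames[::stride]:
        content.append(
            {
                "type": "image_url",
                # Send each frame inline as a data URL instead of a hosted image
                "image_url": {"url": f"data:image/jpeg;base64,{frame}"},
            }
        )
    return [{"role": "user", "content": content}]
```

With openai==0.28, the resulting list would be passed as messages to openai.ChatCompletion.create(model="gpt-4-vision-preview", messages=messages, max_tokens=500), and the generated script read from response.choices[0].message.content. The max_tokens value is only an example.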

Now create a function to convert that written text in the style of Stephen Smith to audio using OpenAI's tts-1 text-to-speech model that's optimized for real-time usage. The audio is saved to a temporary file.
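One way to sketch that step, assuming OpenAI's Create speech endpoint (POST https://api.openai.com/v1/audio/speech) and the "onyx" voice; the voice choice and the function names are assumptions. The tutorial lists requests for HTTP calls; this version uses the standard library's urllib.request only to stay dependency-free. The endpoint returns MP3 audio by default, which is written to a temporary file:

```python
import json
import tempfile
import urllib.request

TTS_URL = "https://api.openai.com/v1/audio/speech"


def build_tts_request(text: str, api_key: str,
                      voice: str = "onyx") -> urllib.request.Request:
    """Build a POST request for the tts-1 model (hypothetical helper; voice is an assumption)."""
    body = json.dumps({"model": "tts-1", "input": text, "voice": voice}).encode("utf-8")
    return urllib.request.Request(
        TTS_URL,
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def text_to_speech(text: str, api_key: str, voice: str = "onyx") -> str:
    """Send the commentary to tts-1 and return the path of a temporary MP3 file."""
    with urllib.request.urlopen(build_tts_request(text, api_key, voice)) as response:
        audio_bytes = response.read()
    # delete=False keeps the file for the merge step; remove it during cleanup
    with tempfile.NamedTemporaryFile(delete=False, suffix=".mp3") as audio_file:
        audio_file.write(audio_bytes)
        return audio_file.name
```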

The final helper function creates a new video that combines the input video with the generated audio, replacing whatever audio was included in the initial uploaded video.
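A sketch of the merge step using the moviepy 1.0.x API (VideoFileClip, AudioFileClip, set_audio, and write_videofile). Trimming the commentary to the video's duration is an added assumption, not necessarily what the original code does, and both function names are hypothetical:

```python
def attach_commentary(video_clip, audio_clip):
    """Replace the video's soundtrack with the commentary (hypothetical helper).

    Assumption: commentary longer than the video is cut at the video's end.
    """
    end = min(video_clip.duration, audio_clip.duration)
    return video_clip.set_audio(audio_clip.subclip(0, end))


def merge_audio_video(video_path: str, audio_path: str, output_path: str) -> str:
    """Write a new MP4 whose only audio track is the generated commentary."""
    # Imported here so attach_commentary can be used and tested without moviepy
    from moviepy.editor import AudioFileClip, VideoFileClip

    video_clip = VideoFileClip(video_path)
    audio_clip = AudioFileClip(audio_path)
    try:
        final_clip = attach_commentary(video_clip, audio_clip)
        final_clip.write_videofile(output_path, codec="libx264", audio_codec="aac")
    finally:
        # Release the file handles and ffmpeg readers moviepy opened
        audio_clip.close()
        video_clip.close()
    return output_path
```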

Next, add Streamlit code for a title, a short explanation of the application, a file uploader, a text input for the email address to send the new video to, and a button. When the button is clicked, check that a file was actually uploaded through st.file_uploader.

If a video was uploaded, it is played with st.video, and st.spinner displays a temporary message while the next block of code calls the helper functions above. An estimated word count is calculated from the length of the input video and added to the prompt, so that the output audio is roughly the same length as the input video.
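The word estimate is simple arithmetic. A sketch, assuming a speaking rate of about 2.5 words per second (roughly 150 words per minute); the rate, the wording of the hint, and the function names are assumptions rather than the original project's values. Rounding down errs on the side of slightly shorter narration:

```python
import math

WORDS_PER_SECOND = 2.5  # assumption: ~150 spoken words per minute


def estimate_word_count(duration_seconds: float,
                        words_per_second: float = WORDS_PER_SECOND) -> int:
    """Roughly how many spoken words fit in a clip (hypothetical helper, minimum 1)."""
    return max(1, math.floor(duration_seconds * words_per_second))


def add_length_hint(prompt: str, duration_seconds: float) -> str:
    """Append a length limit so the narration roughly matches the video length."""
    words = estimate_word_count(duration_seconds)
    return (
        f"{prompt}\n\nThe video is {duration_seconds:.0f} seconds long, "
        f"so keep the commentary under {words} words."
    )
```

For a 12-second clip, the hint asks for commentary under 30 words.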

The prompt is displayed on the web page for transparency. Then the commentary text is converted to audio, merged with the video, and the result is displayed.

The new video with commentary in the style of Stephen Smith is fun to watch on the page, and you can also use Twilio SendGrid to email it as an attachment to the address entered in the form!
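The tutorial uses the sendgrid Python package for this step. As a dependency-free sketch of what such a call sends, the snippet below builds a SendGrid v3 Mail Send JSON body (with the video base64-encoded as an attachment) and POSTs it with urllib.request. The function names, subject, and body text are hypothetical, and from_email has to be a sender identity you have verified in SendGrid:

```python
import base64
import json
import urllib.request

SENDGRID_URL = "https://api.sendgrid.com/v3/mail/send"


def build_sendgrid_payload(to_email: str, from_email: str, video_bytes: bytes,
                           filename: str = "commentary.mp4") -> dict:
    """Build a v3 Mail Send body with the video attached (hypothetical helper)."""
    return {
        "personalizations": [{"to": [{"email": to_email}]}],
        "from": {"email": from_email},
        "subject": "Your Stephen Smith-ified video",  # illustrative subject
        "content": [{"type": "text/plain", "value": "Here is your video with commentary!"}],
        "attachments": [
            {
                # Attachments must be base64-encoded strings
                "content": base64.b64encode(video_bytes).decode("ascii"),
                "filename": filename,
                "type": "video/mp4",
                "disposition": "attachment",
            }
        ],
    }


def send_video(payload: dict, api_key: str) -> int:
    """POST the payload to SendGrid and return the HTTP status code."""
    request = urllib.request.Request(
        SENDGRID_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

SendGrid responds with 202 Accepted when it has queued the message.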

Lastly, clean up the temporary files!
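A minimal sketch of that cleanup, assuming the temporary paths (for example the uploaded video, the generated MP3, and the merged MP4) are kept in variables; the helper name cleanup_temp_files is hypothetical. Missing files and None values are skipped so cleanup can still run if an earlier step failed:

```python
import contextlib
import os


def cleanup_temp_files(*paths):
    """Delete temporary files, skipping empty paths and files already removed.

    Hypothetical helper; call it once processing and emailing are done.
    """
    for path in paths:
        if path:
            with contextlib.suppress(FileNotFoundError):
                os.remove(path)
```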

Now you can run the app by clicking the green Run button at the top middle!

The complete code can be found here on GitHub.

What's Next for GPT-4V, OpenAI TTS, LangChain, and SendGrid

It's so much fun to use GPT-4 Vision to analyze, critique, or summarize both videos and images. You can also receive images or video via Twilio Programmable Messaging or WhatsApp!

You can play around with different OpenAI Text-to-Speech voices, play the audio over Twilio Programmable Voice, and more.

Let me know online what you're building (and send best wishes for the Warriors)!

- Twitter: @lizziepika

- GitHub: elizabethsiegle

- Email: lsiegle@twilio.com
