Talking Texts with .NET Core, Cognitive Services and Azure Storage

This post is part of Twilio’s archive and may contain outdated information. We’re always building something new, so be sure to check out our latest posts for the most up-to-date insights.

Imagine you are driving along in your car and your phone beeps, letting you know that a text message has come in. We all know it’s dangerous to read a message whilst driving, and it’s a pet hate of mine when I see people doing it. So why not get your text message phoned through to you? Hands-free, of course!

This post will show you how to create talking texts using Twilio, .NET Core, Cognitive Services and Azure Storage.

We will build an application that will convert incoming SMS into speech using the Speech Service, currently in preview, on Microsoft Cognitive Services. We will then use Twilio to call your mobile and play the speech recording.

Let’s get started.

What you will need

- .NET Core 2.1 SDK (version 2.1.300 or greater)

- Azure Storage Account with a blob container - set the access policy on your container to anonymous read access for blobs only

- Cognitive Services – Speech Services API Key

- Twilio Account and Phone Number with both SMS and Voice capabilities

- The outline project from GitHub

I will be developing this solution in Visual Studio 2017 on Windows. However, you can certainly use VS Code for cross-platform development. Either is available from the Microsoft site.

If you would like to see a full integration of Twilio APIs in a .NET Core application then check out this free 5-part video series I created. It's separate from this blog post tutorial but will give you a full rundown of many APIs at once.

Overview

We are going to write a fair bit of code today so let’s have a look at an overview of what we need to do.

- When a text message comes into Twilio, Twilio will make an HTTP POST request to our application endpoint.

- Our application will then call a method in the Controller that will get the body of the incoming text and pass it along to the TextToSpeechService

- The TextToSpeechService will then use the body to make a call to Azure Speech Service, which will return an MP3

- We will then save this MP3 to Azure Blob Storage and return the path to the MP3 back to the Controller

- We then use the Twilio NuGet package to create a TwiML response which instructs Twilio what to do for the call. In this case, play our soundbite and hang up.

Download the Outline Project

To get you started I have created an outline project which you will need to download or clone from GitHub. The completed project can be found on the completed branch.

The outline project has three folders:

- Controllers; this folder has the default ValuesController and the one we shall be editing, the SpeechController

- Services; this folder has both the AuthenticationService and TextToSpeechService files as well as their respective interfaces.

- Models; this folder has all the models needed to map your appsettings.json and also the models needed for the requests.

Let’s restore the NuGet packages to ensure we have them all downloaded, build and run the project to make sure all is in order.

The project comes with a default controller from the dotnet template called ValuesController, which I leave in for debugging purposes, so we should see ["value1","value2"] displayed in the browser.

Configuring App Settings

We will need to map the various keys from Azure, Twilio and Cognitive Services into the appsettings.json file. These keys are sensitive so I suggest using User Secrets to help prevent you committing them to a public repository by accident. Check out this blog post on User Secrets in a .NET Core Web App if you’re new to working with User Secrets.

Add the following configuration to your secrets.json file, inserting your own values in the relevant places.

The above code should match the code in the appsettings.json file, but remember the appsettings.json file gets checked in to source control, so leave the values blank or use a reminder such as “value set in user secrets”.

If you look in the Startup.cs file, you will see where I have mapped the app settings to our values.

We can then inject these settings into any class using IOptions and the Options Pattern.
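As a rough sketch of the Options Pattern, the class below receives its settings through `IOptions<T>` in the constructor. The `TwilioSettings` model, its property names and the `CallerIdProvider` class are hypothetical, made up for illustration; the models in the outline project may be named and shaped differently.

```csharp
using System;
using Microsoft.Extensions.Options;

// Hypothetical settings model: the property names are assumptions and
// should mirror whatever section you define in appsettings.json.
public class TwilioSettings
{
    public string AccountSid { get; set; } = "";
    public string AuthToken { get; set; } = "";
    public string PhoneNumber { get; set; } = "";
}

// Hypothetical consumer: any class can ask for IOptions<T> in its
// constructor and the built-in container supplies the bound settings.
public class CallerIdProvider
{
    private readonly TwilioSettings _settings;

    public CallerIdProvider(IOptions<TwilioSettings> options)
    {
        // .Value holds the settings object bound from configuration.
        _settings = options.Value;
    }

    public string FromNumber => _settings.PhoneNumber;
}
```

A mapping such as `services.Configure<TwilioSettings>(Configuration.GetSection("Twilio"))` in `ConfigureServices` is what makes the injection work; the section name `"Twilio"` is assumed here.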

Fetching an Auth token from Cognitive Services

To enable us to talk to the Speech Service we will need to be issued an auth token from the Azure Speech Service.

To do this, let’s update the AuthenticationService.cs in the Services folder. Add the following code, where we create a new HTTP request with the Token API URI and our Azure Speech Service subscription key to receive our auth token:
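A minimal sketch of such a token request is shown below, assuming the token endpoint accepts an empty POST with the subscription key in the `Ocp-Apim-Subscription-Key` header and returns the token as plain text. The class and method names are hypothetical, and the token URI is passed in rather than hard-coded because it depends on the region of your Speech resource.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical helper; not the AuthenticationService from the outline project.
public static class SpeechTokenClient
{
    // Builds the POST request for the token endpoint, with the
    // subscription key carried in a request header.
    public static HttpRequestMessage BuildTokenRequest(Uri tokenUri, string subscriptionKey)
    {
        var request = new HttpRequestMessage(HttpMethod.Post, tokenUri);
        request.Headers.Add("Ocp-Apim-Subscription-Key", subscriptionKey);
        return request;
    }

    // Sends the request and returns the response body, which is
    // assumed to be the auth token as a plain string.
    public static async Task<string> FetchTokenAsync(HttpClient client, Uri tokenUri, string subscriptionKey)
    {
        using (var request = BuildTokenRequest(tokenUri, subscriptionKey))
        using (var response = await client.SendAsync(request))
        {
            response.EnsureSuccessStatusCode();
            return await response.Content.ReadAsStringAsync();
        }
    }
}
```

The returned token is then sent as a bearer token on the text-to-speech request. Tokens expire after a short time, so a longer-running app would need to refresh them.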

Converting Text to Speech

Next, we will update the service that handles the conversion of text to speech via an HTTP request to Speech Services. Go to the TextToSpeechService.cs file and add the following code:

In the above, I have created a variable called text and assigned a Speech Synthesis Markup Language or SSML string. I then passed in the from number and the message body text. You can have fun and play around with things like speed and pronunciation or even change the voice and accent of the speaker.
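To give a feel for what an SSML string like this contains, here is a small sketch that builds one with `XElement`, so that characters such as `&` in the message body are escaped automatically. The `SsmlBuilder` class, the spoken sentence and the voice name passed in are all assumptions for illustration; check the Speech Service documentation for the voices available to you.

```csharp
using System.Xml.Linq;

// Hypothetical helper that assembles an SSML document for one message.
public static class SsmlBuilder
{
    // The SSML namespace defined by the W3C specification.
    private static readonly XNamespace Ssml = "http://www.w3.org/2001/10/synthesis";

    public static string Build(string fromNumber, string messageBody, string voiceName, string language)
    {
        var speak = new XElement(Ssml + "speak",
            new XAttribute("version", "1.0"),
            new XAttribute(XNamespace.Xml + "lang", language),
            new XElement(Ssml + "voice",
                new XAttribute("name", voiceName),
                // XElement escapes the text content, so user-supplied
                // message bodies cannot break the markup.
                $"You have a new message from {fromNumber}. {messageBody}"));

        return speak.ToString(SaveOptions.DisableFormatting);
    }
}
```

Building the markup as XML rather than by string concatenation is a design choice: incoming texts are untrusted input, and a stray `<` or `&` would otherwise produce invalid SSML.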

If you go to the Startup.cs file, you will see our two new services have already been added, ready for .NET Core’s built-in dependency injection to pick up.

I have chosen to configure my services as Scoped as I want the instance to be around for the lifetime of the request. You can read more on the service registration options on the Microsoft documentation.
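The effect of a Scoped registration can be seen in a small standalone sketch. The interface and class below are empty stand-ins that reuse the service names from the post, and `ScopedLifetimeDemo` is hypothetical; in a web app, ASP.NET Core creates one scope per request for you.

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

// Empty stand-ins for the real service and its interface.
public interface ITextToSpeechService { }
public class TextToSpeechService : ITextToSpeechService { }

public static class ScopedLifetimeDemo
{
    public static ServiceProvider BuildProvider()
    {
        var services = new ServiceCollection();
        // Scoped: one instance per scope (per HTTP request in a web app).
        services.AddScoped<ITextToSpeechService, TextToSpeechService>();
        return services.BuildServiceProvider();
    }

    // Resolving twice inside one scope yields the same instance.
    public static bool SameWithinScope(IServiceProvider root)
    {
        using (var scope = root.CreateScope())
        {
            var a = scope.ServiceProvider.GetRequiredService<ITextToSpeechService>();
            var b = scope.ServiceProvider.GetRequiredService<ITextToSpeechService>();
            return ReferenceEquals(a, b);
        }
    }

    // Resolving from two different scopes yields different instances.
    public static bool SameAcrossScopes(IServiceProvider root)
    {
        using (var first = root.CreateScope())
        using (var second = root.CreateScope())
        {
            var a = first.ServiceProvider.GetRequiredService<ITextToSpeechService>();
            var b = second.ServiceProvider.GetRequiredService<ITextToSpeechService>();
            return ReferenceEquals(a, b);
        }
    }
}
```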

Saving your new Soundbite

Speech Services returns the audio to our code as a stream of bytes, and now we need to store it somewhere. We will be using an Azure Storage Blob.

Create a private method in the TextToSpeechService.cs that will write the MP3 to the blob and then call that in our public method. This private method will return the path to the newly stored item, then we can pass that forward to Twilio.

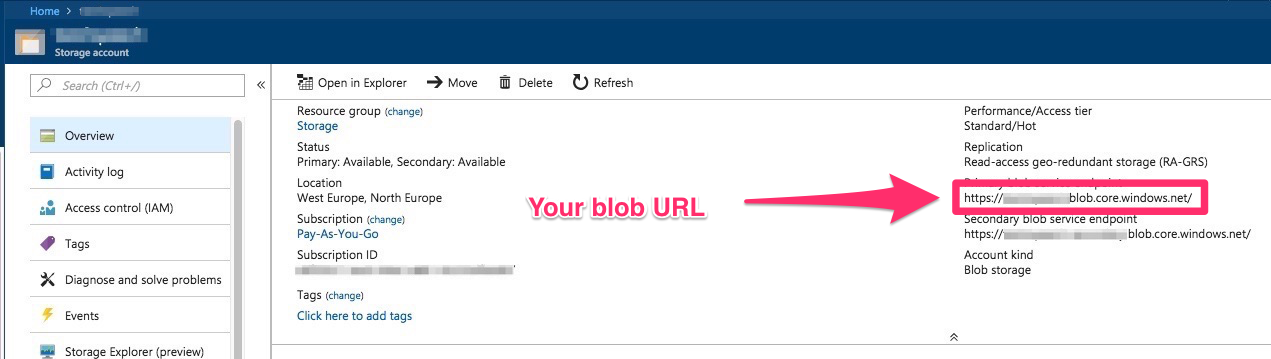

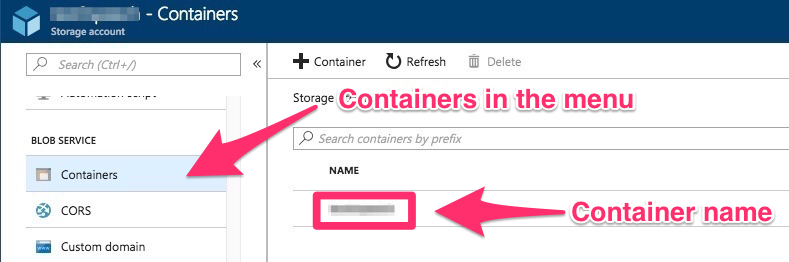

You will need to construct a PATH_TO_YOUR_BLOB_STORAGE URI to match your Azure Storage URL and Container name.

You can find the Azure Storage URL from within the portal when you click on your storage resource.

Your container name is just the name of your storage container and can be found under containers within the storage resource.

It should look something like this:
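As a rough illustration, assuming the standard public blob endpoint format of `https://<account>.blob.core.windows.net/<container>/<blob>`, the path to a stored soundbite could be assembled as below. The `BlobPath` helper and the example account, container and file names are hypothetical; use the values from your own storage resource.

```csharp
using System;

// Hypothetical helper that builds the public URI of a stored blob.
public static class BlobPath
{
    public static Uri Build(string accountName, string containerName, string blobName)
    {
        // The blob name is escaped so that spaces or other special
        // characters in a file name still produce a valid URI.
        return new Uri(
            $"https://{accountName}.blob.core.windows.net/{containerName}/{Uri.EscapeDataString(blobName)}");
    }
}
```

For example, `BlobPath.Build("mystorageaccount", "soundbites", "SM123.mp3")` gives `https://mystorageaccount.blob.core.windows.net/soundbites/SM123.mp3`, where the first two segments together play the role of PATH_TO_YOUR_BLOB_STORAGE.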

Interfacing with Twilio

We need to update our SpeechController.cs to accept a POST from Twilio that will kick off our conversion of text to speech.

First, add the API endpoint that Twilio uses as the webhook for when an SMS comes in.

We will only map the incoming message SID from Twilio to the TwilioResponse, as that is all we need to pass on to the next stage.

In the response, we tell Twilio that it needs to initiate a new voice call and we pass it a URI, containing the incoming message SID, that will tell Twilio what we require in the voice call.

We return empty content so Twilio knows that we don’t want to reply to the incoming text.
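The URI handed to Twilio for the voice call could be put together along these lines. This is only a sketch: the `CallbackUrl` helper is hypothetical, and the `api/speech/call/{sid}` route is an assumption modelled on the `api/speech/voice` endpoint used for the webhook, so match it to the route you actually define on the controller.

```csharp
using System;

// Hypothetical helper that builds the URI Twilio will request
// to find out what to do during the voice call.
public static class CallbackUrl
{
    public static Uri ForMessage(string siteUrl, string messageSid)
    {
        // Trim a trailing slash so the joined URI has no "//" in its path.
        var baseUrl = siteUrl.TrimEnd('/');
        return new Uri($"{baseUrl}/api/speech/call/{Uri.EscapeDataString(messageSid)}");
    }
}
```

The resulting `Uri` is the kind of value you would pass as the `url` argument when creating the call with the helper library's `CallResource.Create`.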

Now we need to add the API endpoint that Twilio will call after the webhook above. The call will expect the message SID from the route so, using annotations, we can set that up.

The code below makes a call to Twilio, using the message SID to fetch the incoming message body. It then passes the message body to the TextToSpeechService which creates the soundbite and returns the URI of the stored soundbite.

We then use the Twilio helper library to create a TwiML response telling Twilio to play our soundbite, passing in the returned URI, and then hang up.
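To show the shape of the TwiML that Twilio receives, the sketch below builds an equivalent document by hand with `XElement`. This is not how the helper library is used; it only illustrates the XML that the library generates for a play-then-hang-up response. `TwimlSketch` is a hypothetical name.

```csharp
using System;
using System.Xml.Linq;

// Hypothetical helper: hand-built TwiML for illustration only.
public static class TwimlSketch
{
    public static string PlayAndHangup(Uri soundbiteUri)
    {
        var response = new XElement("Response",
            // <Play> tells Twilio to fetch and play the audio file.
            new XElement("Play", soundbiteUri.AbsoluteUri),
            // <Hangup> ends the call once playback has finished.
            new XElement("Hangup"));

        return response.ToString(SaveOptions.DisableFormatting);
    }
}
```

With the helper library you would normally chain the `Play` and `Hangup` methods on a `VoiceResponse` instead, which saves you from writing the XML yourself.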

Wait… That was a lot of code!

It certainly was, so let’s just recap what we have done.

When a text message comes into Twilio, Twilio will make an HTTP POST request to our Voice action in the SpeechController.

Our application will then create a new call and pass in a URI to the instructions for the call. That URI will be the route to the Call action on the SpeechController and it will pick up the message SID off the route.

With this message SID we can fetch all the details of the text message and then pass them into the TextToSpeechService which, in turn, returns the URI of the stored soundbite.

We then use the helper library to create a TwiML response which instructs Twilio on what to do for the call. In this case, play our soundbite and hang up.

Setting up ngrok

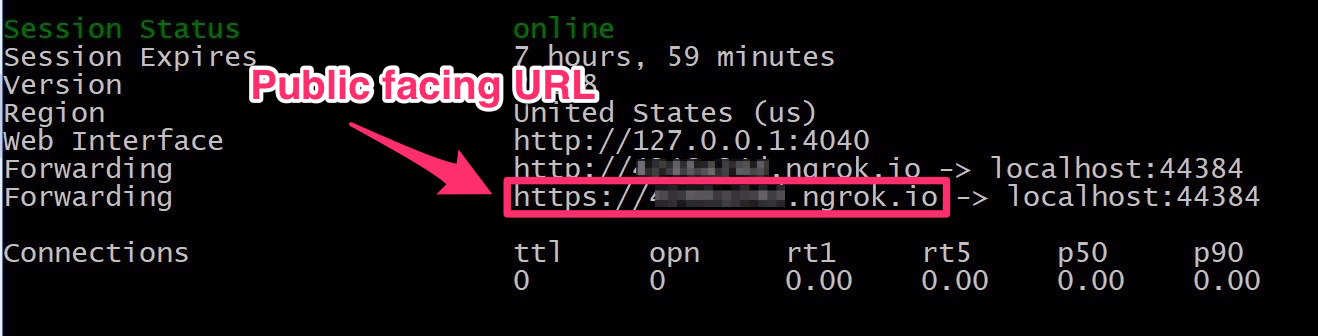

We can use ngrok to test our endpoint rather than deploy to a server, as it creates a public facing URL that maps to our project running locally.

Once installed, run the following line in your command line to start ngrok, using the port your localhost is running on.

You will then see an output similar to below.

Copy the public-facing URL, and update the SITE_URL in the SpeechController with it.

Let’s run the solution, either by pressing Run in the IDE or with dotnet run in the CLI.

Now you can do a quick check using the default ValuesController we left in from the template.

Enter the ngrok URL https://<your-ngrok-subdomain>.ngrok.io/api/values into your browser and you should see ["value1","value2"] displayed once again.

Setting up the Twilio webhook and trying it out

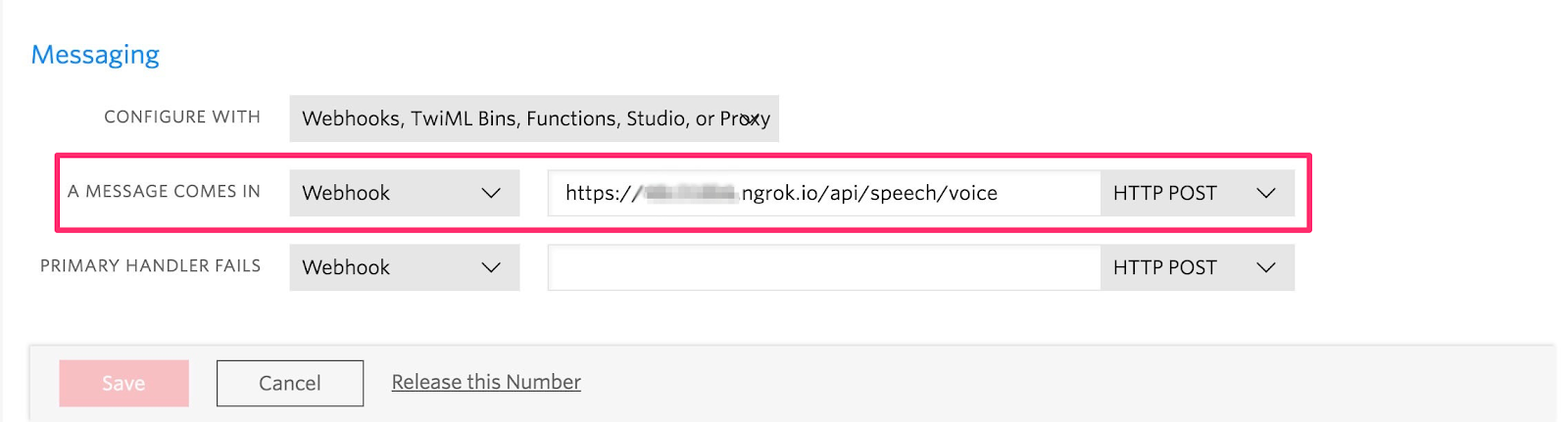

To enable Twilio to make the initial request that fires off our string of events, we need to set up the webhook.

Go to the Twilio console and find the number that you created for this project. Next, take the API endpoint https://<your-ngrok-subdomain>.ngrok.io/api/speech/voice, built from your ngrok URL, and paste it into the A MESSAGE COMES IN section.

You should now be able to send yourself a text message and then shortly after receive a phone call which plays the text-to-speech soundbite. Give yourself a high-five – that was a lot of code!

What Next?

There is so much you can do now! Perhaps you can create a lookup of all your contacts using Azure table storage and cross-reference it with the incoming text and have Speech Services tell you the name of the sender. Or you could write a webjob that clears out your blob storage on a regular basis. You could even extract the code that creates the Cognitive Services Auth token into an Azure Function and re-use it across multiple apps!

Let me know what you come up with and feel free to get in touch with any questions. I can’t wait to see what you build!

- Email: lporter@twilio.com

- Twitter: @LaylaCodesIt

- GitHub: layla-p
