How to Analyze Text Documents Using Langchain and SendGrid

Teams often have more documents than they have time to read, so efficient document analysis is valuable. This tutorial guides you through building a solution that uses Flask, LangChain, Cohere AI, and Twilio SendGrid to analyse documents. You will create a Flask application that receives documents, processes them using LangChain and Cohere AI, and sends the results by email through SendGrid.

For this use case, you will use PDF documents, but the process can be applied to other document types such as CSV and TXT files.

Prerequisites

To follow along, ensure you have the following:

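- Python 3.6+ installed (recent LangChain releases require a newer Python 3 version, so check its installation requirements).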

- Sign up for a SendGrid account.

- Register for a Cohere AI account.

- Postman installed.

Set up your environment

In this section, you will set up your development environment. Begin by creating a virtual environment and installing the required dependencies. Open your command line, navigate to your project folder, and run the following commands:

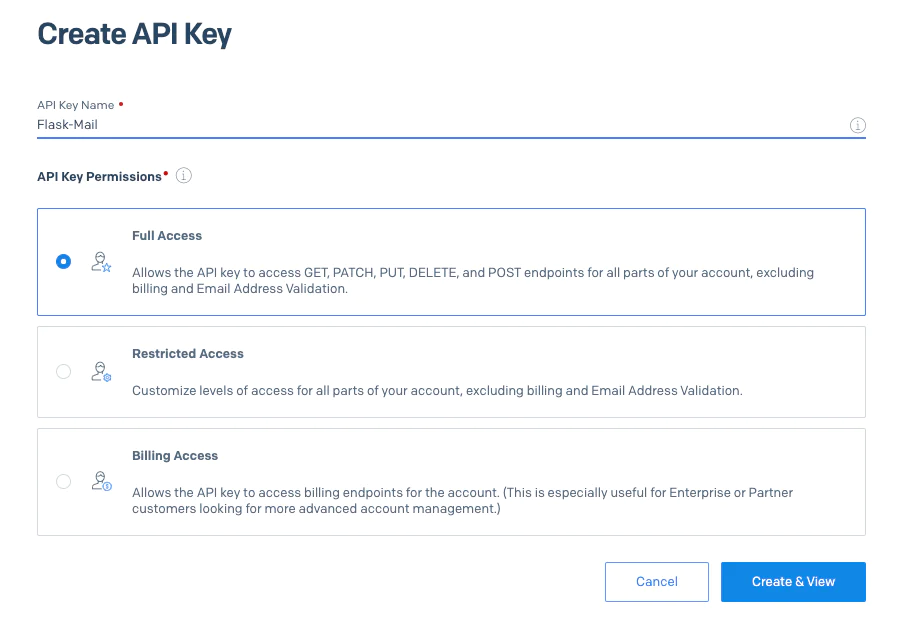

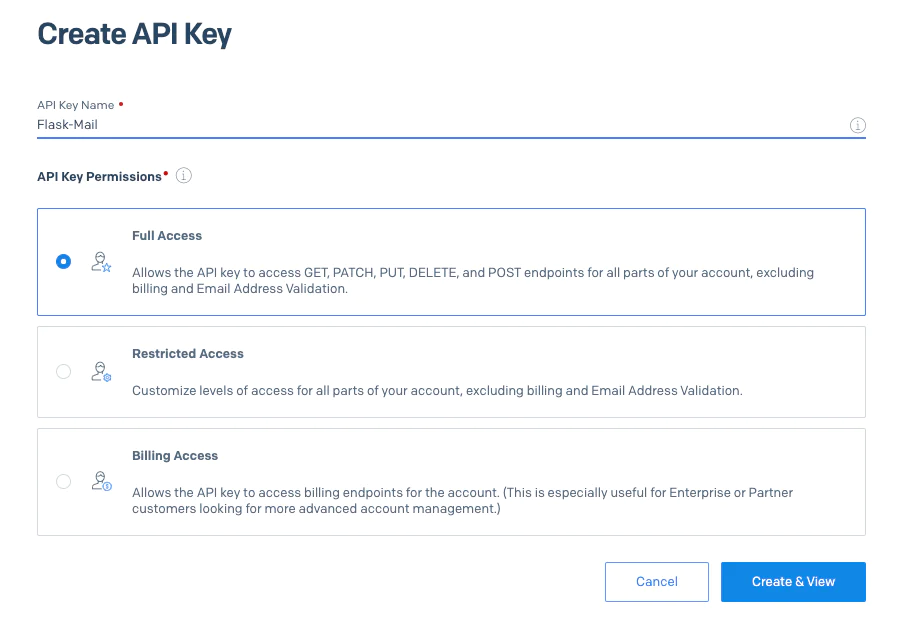
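Once the commands have finished, a quick check that the packages are importable can save debugging time later. The sketch below is only illustrative: the module list is an assumption based on the libraries this tutorial mentions (Flask, LangChain, Cohere, Chroma, PyPDF), and `flask_mail` and `dotenv` are guesses at the mail and `.env` helpers, so adjust the list to match what you installed.

```python
from importlib.util import find_spec

# Assumed import names for the tutorial's dependencies; edit to match your install.
REQUIRED_MODULES = [
    "flask", "flask_mail", "langchain", "cohere", "chromadb", "pypdf", "dotenv",
]


def missing_modules(names=REQUIRED_MODULES):
    """Return the module names that cannot be found in the active environment."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing modules: " + ", ".join(missing))
    else:
        print("All listed modules were found.")
```

Run it inside the activated virtual environment so that it inspects the same interpreter your Flask app will use.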

After that, log in to your SendGrid account, click on Settings in the left sidebar then select API Keys. Click Create API Key, give it a name and select your preferred access level. Click Create & View, and copy your API Key for later use.

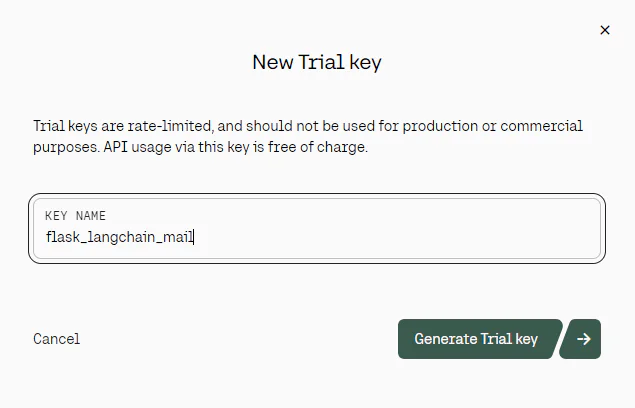

Next, log in to your Cohere AI account. Cohere AI was chosen for this tutorial because it offers a free trial for developers and it is supported by LangChain, a framework you’ll use later on. Navigate to API Keys > New Trial Key to create your API key as shown in the screenshot below. Make sure you give your key a name such as "flask_langchain_mail" and click Generate Key. Copy the new API key for later use.

Create a .env file for your environment variables. You will add the Cohere AI and SendGrid API keys and a default mail sender as shown below.
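As a rough illustration of the file format, the sketch below holds sample `.env` contents in a string and parses them into a dictionary. The variable names `COHERE_API_KEY`, `SENDGRID_API_KEY`, and `MAIL_DEFAULT_SENDER` are assumptions, and the values are placeholders. In a real project a helper such as python-dotenv's `load_dotenv` would normally read the file for you; the hand-written parser here only shows what that step amounts to.

```python
# Placeholder contents for a .env file; the key names are assumed, not prescribed.
SAMPLE_ENV = """\
COHERE_API_KEY=your-cohere-api-key
SENDGRID_API_KEY=your-sendgrid-api-key
MAIL_DEFAULT_SENDER=sender@example.com
"""


def parse_env(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


if __name__ == "__main__":
    for key in parse_env(SAMPLE_ENV):
        print(f"{key} is set")
```

Keep the real `.env` file out of version control, since it contains secrets.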

Create a new app.py file in the root of your project folder and import all dependencies as shown below:

Finally, create a Flask app and add the configurations for SendGrid as shown below:

The code above uses the .env variables to point the Flask mail configuration at smtp.sendgrid.net. The mail port is set to 587 and TLS (Transport Layer Security) is enabled for secure communication.

The username is the literal string "apikey", and your SendGrid API key serves as the password for authentication.
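The settings described above can be sketched as a plain dictionary. This is a minimal sketch, assuming the mail extension is Flask-Mail (whose configuration keys are used below) and that the environment variables are named `SENDGRID_API_KEY` and `MAIL_DEFAULT_SENDER`; both names are assumptions.

```python
import os


def build_mail_config(env=os.environ):
    """Build Flask-Mail style settings for SendGrid's SMTP relay."""
    return {
        "MAIL_SERVER": "smtp.sendgrid.net",
        "MAIL_PORT": 587,                         # STARTTLS submission port
        "MAIL_USE_TLS": True,                     # encrypt the SMTP session
        "MAIL_USERNAME": "apikey",                # literal string, not your account name
        "MAIL_PASSWORD": env["SENDGRID_API_KEY"],  # assumed variable name
        "MAIL_DEFAULT_SENDER": env["MAIL_DEFAULT_SENDER"],  # assumed variable name
    }


# In app.py this could be applied with: app.config.update(build_mail_config())
```

Reading the key from the environment raises a `KeyError` at startup if it is missing, which is usually easier to diagnose than a failed SMTP login later.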

Write the Flask application

In this section, you will develop the application's features:

- receive and process PDF documents.

- create embeddings and index them in a vector database.

- implement a question-answer mechanism to query the documents.

In your project folder, create a new folder called uploads that will host your uploaded PDF documents. Open your app.py file and add the following code block:

This sets the upload folder in your Flask application and defines the allowed document extensions. Restricting extensions stops users from uploading files such as HTML pages that could enable Cross-Site Scripting (XSS) attacks if they were later served back to a browser. Add the following code block in the app.py file:

This initialises Cohere’s Command model, a text generation large language model (LLM) that you will use to generate responses. The temperature parameter sets the degree of randomness in the answers provided by the LLM. Temperature values range between 0 and 1. Lower values make the LLM’s output more predictable, while higher values allow it to produce more creative answers.
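To see why a lower temperature makes output more predictable, it helps to look at how temperature reshapes a probability distribution over candidate tokens. The sketch below is a generic temperature-scaled softmax, not Cohere's implementation, and the scores in it are made-up numbers used only for illustration.

```python
import math


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature < 0:
        raise ValueError("temperature must not be negative")
    if temperature == 0:
        # Treat zero as greedy decoding: all probability on the top score.
        top = scores.index(max(scores))
        return [1.0 if i == top else 0.0 for i in range(len(scores))]
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    scores = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
    for t in (0.1, 0.5, 1.0):
        probs = softmax_with_temperature(scores, t)
        print(t, [round(p, 3) for p in probs])
```

At low temperature almost all of the probability lands on the highest-scoring candidate, so repeated runs tend to give the same answer. At higher temperature the alternatives become more likely to be sampled.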

In app.py, add the following function to extract documents from a PDF file:

The code snippet above uses the PyPDF library to load the contents of a PDF document, through LangChain’s implementation of the PDF loader. LangChain is a framework that helps developers build AI applications that leverage LLMs. It provides a standardised API that lets developers use various models and Python libraries in a simpler manner. Once the text is loaded from the PDF, you use LangChain’s RecursiveCharacterTextSplitter to split the text into smaller segments that are easier to embed and search.
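The idea behind recursive splitting can be sketched in plain Python. This is a simplified illustration, not LangChain's implementation: it tries coarse separators first (paragraphs, then lines, then words) and only cuts mid-word as a last resort. It also omits the chunk overlap that RecursiveCharacterTextSplitter supports, and the `chunk_size` default is an arbitrary choice.

```python
def split_text(text, chunk_size=200, separators=("\n\n", "\n", " ", "")):
    """Split text into chunks of at most chunk_size characters."""
    if len(text) <= chunk_size:
        return [text] if text.strip() else []
    if not separators or separators[0] == "":
        # Last resort: cut at fixed character offsets.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    sep, rest = separators[0], separators[1:]
    if sep not in text:
        return split_text(text, chunk_size, rest)

    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small pieces together
            continue
        if current:
            chunks.append(current)
            current = ""
        if len(piece) <= chunk_size:
            current = piece
        else:
            # The piece alone is too long: retry with finer separators.
            chunks.extend(split_text(piece, chunk_size, rest))
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    sample = " ".join(["word"] * 100)
    for chunk in split_text(sample, chunk_size=50):
        print(len(chunk), repr(chunk[:20]))
```

Smaller chunks give more precise matches during retrieval, while larger chunks preserve more context per match, so the chunk size is worth tuning for your documents.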

Add the following function in app.py for creating embeddings using Cohere:

The function above initializes Cohere’s Embeddings endpoint to generate vector representations of text. These embeddings are lists of numbers that capture the meaning of the text in a form that machine learning models can compare and search.

The function also uses Chroma, an open-source vector database, to store the embeddings created from the documents in the previous step. Chroma offers an in-memory database that stores the embeddings for later use. You can also persist the data on your local storage as shown in the official documentation. For this tutorial, you are using LangChain’s implementation of Chroma.
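To make the role of the vector database more concrete, here is a toy in-memory store written in plain Python. It is a sketch under loud assumptions: `toy_embed` is a hypothetical stand-in that counts words instead of calling Cohere, the class is not Chroma, and the sample sentences are invented. Only the overall shape (embed, store, rank by cosine similarity) mirrors what a real vector store does.

```python
import math
from collections import Counter


def toy_embed(text):
    """Hypothetical stand-in for an embedding model: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Keeps (text, vector) pairs in a list and ranks them against a query."""

    def __init__(self, embed=toy_embed):
        self.embed = embed
        self.items = []

    def add_texts(self, texts):
        for text in texts:
            self.items.append((text, self.embed(text)))

    def similarity_search(self, query, k=2):
        query_vector = self.embed(query)
        ranked = sorted(
            self.items, key=lambda item: cosine(query_vector, item[1]), reverse=True
        )
        return [text for text, _ in ranked[:k]]


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.add_texts([
        "code blocks use four spaces",
        "headings use sentence case",
        "images need alt text",
    ])
    print(store.similarity_search("how should code blocks look", k=1))
```

A real embedding model places texts with similar meaning close together even when they share no words, which word counts cannot do. That difference is the main reason to use Cohere's embeddings rather than keyword matching.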

Create a new function in app.py for accepting a user query and responding with an answer using the information acquired from PDF files:

Using the query, the code snippet first performs a similarity search against the vector database. A similarity search embeds the query and returns the stored document chunks whose vectors are numerically closest to it.

Next, you use LangChain to load a retrieval question-answering chain that uses Cohere’s Command model to generate the answers. Finally, you run the chain with the matching documents and the query as input, and Cohere generates the answer from this information.
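The retrieve-then-answer flow described above can be sketched with the dependencies passed in as arguments. The method names `similarity_search(query, k=...)` and `run(input_documents=..., question=...)` follow the classic LangChain interfaces for vector stores and for chains built with `load_qa_chain`; newer LangChain releases may expose different entry points, so treat them as assumptions. `FakeStore` and `FakeChain` are hypothetical stand-ins that let the sketch run without API keys.

```python
def answer_query(query, vector_store, qa_chain, k=4):
    """Retrieve the k most similar chunks, then ask the chain to answer from them."""
    matching_docs = vector_store.similarity_search(query, k=k)
    return qa_chain.run(input_documents=matching_docs, question=query)


class FakeStore:
    """Hypothetical stand-in for a vector store such as Chroma."""

    def __init__(self, docs):
        self.docs = docs

    def similarity_search(self, query, k=4):
        return self.docs[:k]  # a real store would rank by similarity


class FakeChain:
    """Hypothetical stand-in for a question-answering chain."""

    def run(self, input_documents, question):
        return f"Answered '{question}' using {len(input_documents)} chunk(s)."


if __name__ == "__main__":
    store = FakeStore(["chunk one", "chunk two", "chunk three"])
    print(answer_query("What is covered?", store, FakeChain(), k=2))
```

Passing the store and chain as parameters also makes the function easy to unit test, because no network call is needed to check the control flow.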

Create two functions in app.py as shown below:

The first function above checks the file extension of the uploaded file, rejecting file types that could be used for XSS attacks. The second function creates an API route that uses the POST method. This route is responsible for uploading the file, extracting text from it, and creating the required embeddings using the functions created earlier.
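The extension check can be sketched on its own, independent of Flask. The function name `allowed_file` and the `check_upload` helper are illustrative choices, and the status codes are assumptions about how such a route might respond rather than a record of the tutorial's code.

```python
ALLOWED_EXTENSIONS = {"pdf"}


def allowed_file(filename):
    """Return True when the filename ends with an allowed extension."""
    return (
        "." in filename
        and filename.rsplit(".", 1)[1].lower() in ALLOWED_EXTENSIONS
    )


def check_upload(filename):
    """Hypothetical helper: map a filename to a (message, status code) pair."""
    if not filename:
        return "No file selected", 400
    if not allowed_file(filename):
        return "Only PDF files are allowed", 400
    return "File accepted", 200


if __name__ == "__main__":
    for name in ("guide.pdf", "guide.PDF", "page.html", "noextension"):
        print(name, check_upload(name))
```

An extension check only inspects the name, so it is also common to sanitise the filename (for example with Werkzeug's `secure_filename`) before saving the file to the uploads folder.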

Create another route for receiving the user query and sending results as an email as shown below:

This route receives a query and an email address as input. The query is passed to LangChain’s question-answering chain, which uses Cohere’s model to extract an answer from the PDF file. The answer is then sent to the email address provided.
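The logic of such a route can be sketched without any web framework. In the sketch below, the JSON field names `query` and `email`, the email subject, and the response shape are all assumptions; `answer_fn` and `send_email_fn` are placeholders for the question-answering function and the mail-sending call in your app.

```python
def handle_query(payload, answer_fn, send_email_fn):
    """Validate the request body, generate an answer, and email it."""
    payload = payload or {}
    query = payload.get("query")
    email = payload.get("email")
    if not query or not email:
        return {"error": "Both 'query' and 'email' are required."}, 400

    answer = answer_fn(query)
    send_email_fn(to=email, subject="Your document query results", body=answer)
    return {"message": "Answer sent", "answer": answer}, 200


if __name__ == "__main__":
    outbox = []  # collects messages instead of sending real email
    body, status = handle_query(
        {"query": "What is covered?", "email": "you@example.com"},
        answer_fn=lambda q: f"Stub answer for: {q}",
        send_email_fn=lambda to, subject, body: outbox.append((to, subject, body)),
    )
    print(status, body)
    print(outbox)
```

Validating the input before calling the LLM avoids spending API calls on requests that could never be delivered.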

Add the following code block at the end of app.py to run your Flask app in debug mode:

Test the document analyzing Flask application

You can access the code for this tutorial in this GitHub repository. To test your application, open your terminal and run the following command at the root of your project folder. This starts your Flask application at http://127.0.0.1:5000/.

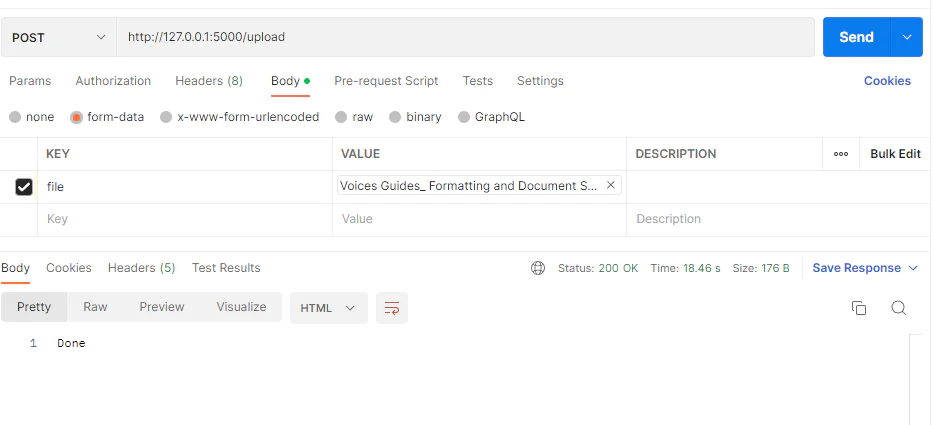

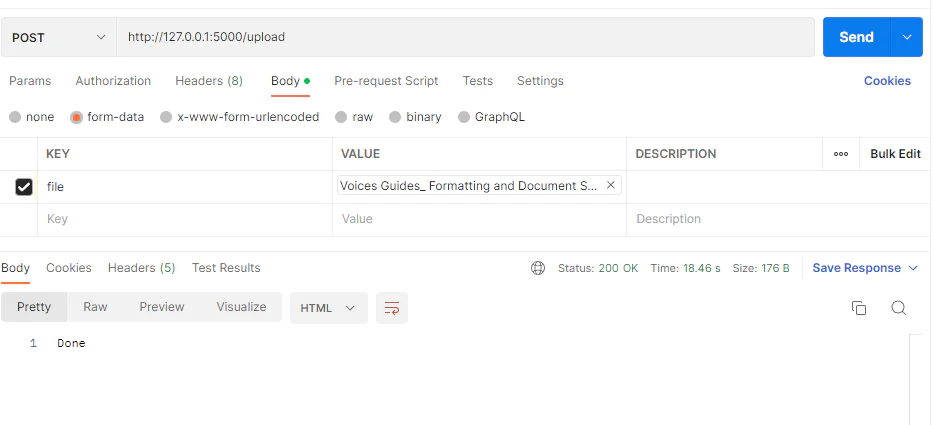

Open the Postman application and create a new POST request. Set the URL to http://127.0.0.1:5000/upload. Select Body and choose the form-data option. Under Key, enter file and switch the type from Text to File. Under Value, choose the PDF file you want to use. For this demonstration, I will use Twilio’s Style Guide. Click Send and you should receive a 200 response as shown below.

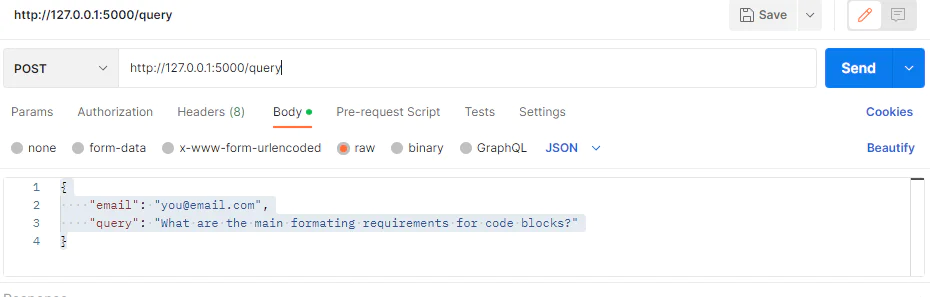

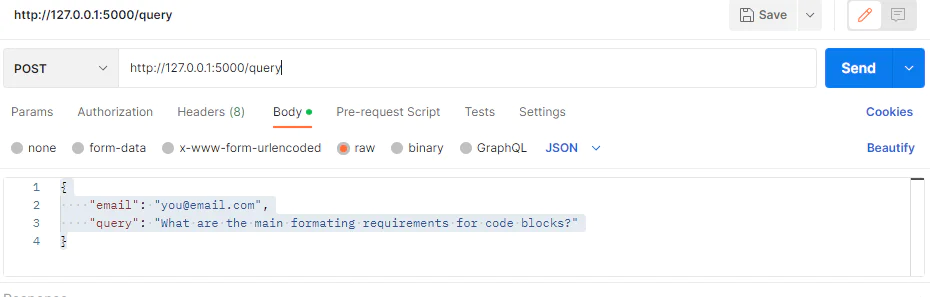

Create a new POST request in Postman and use http://127.0.0.1:5000/query as the address. Select Body and choose the raw option. Choose the JSON option and add the payload as shown in the screenshot below. Make sure you add your email.

After you click Send, the request should respond successfully and you will receive a new email with the answer to the query submitted via the API. The answer is generated using the information in the PDF document uploaded earlier. This technique is called Retrieval Augmented Generation (RAG): the LLM answers from passages retrieved from your documents rather than from its training data alone, which helps make the answers more accurate.
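If you prefer scripting to Postman, the same request can be sketched with Python's standard library. The JSON field names `query` and `email` are assumptions about the payload, and `send_query` only works while the Flask app is running locally.

```python
import json
from urllib.request import Request, urlopen


def build_query_request(query, email, base_url="http://127.0.0.1:5000"):
    """Build a JSON POST request for the /query endpoint."""
    payload = json.dumps({"query": query, "email": email}).encode("utf-8")
    return Request(
        f"{base_url}/query",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_query(query, email):
    """Send the request and return (status code, response text)."""
    with urlopen(build_query_request(query, email)) as response:
        return response.status, response.read().decode("utf-8")


# Example call, once the app is running:
# send_query("What are the main formatting requirements for code blocks?",
#            "you@example.com")
```

Building the request separately from sending it makes it possible to inspect the URL, headers, and body before anything goes over the network.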

For example, the query “What are the main formatting requirements for code blocks?” sends an answer as described below:

What's next for document analysing Flask apps?

To conclude, we addressed the need for efficient document analysis by creating a comprehensive solution using Flask, LangChain, Cohere AI, and SendGrid. The technologies selected offer specialized capabilities, providing benefits such as text extraction, summarization, analysis, and convenient email communication. This tutorial serves as a starting point for building more sophisticated document analysis applications.

You can check out how to receive incoming emails using SendGrid and allow your users to query PDFs directly from their mailbox.

With a decade in the computer science industry, Brian has honed his expertise in diverse technologies. He pursues the fusion of machine learning and design, crafting innovative solutions at the intersection of art and technology through his design studio, Klurdy.
