Add Token Streaming and Interruption Handling to a Twilio Voice Anthropic Integration


ConversationRelay from Twilio lets you build real-time, human-like voice applications with the AI Large Language Model of your choice. It opens a WebSocket with your app so you can integrate your preferred AI API with Twilio Voice.

In my previous post, I showed you one way to integrate Anthropic with Twilio Voice using ConversationRelay in Node.js. This setup allowed you to create a basic real-time voice assistant using Anthropic's Claude models. However, there were some weaknesses to that basic app, particularly around latency and handling interruptions during conversations.

In this post, I'll show you how to implement token streaming to reduce voice latency and add local tracking for interruption-aware conversation turn handling. Let’s dive in!

Prerequisites

Before you get started, you’ll need a few accounts (and a few things ready):

- Node.js installed

- A Twilio phone number (Sign up for Twilio)

- An IDE or code editor

- Ngrok to expose your server

- An Anthropic Account and API Key

- A phone to place a phone call to test the integration!

And with those things in hand, let’s get started…

Add token streaming

The first thing you are going to address from the basic app is the latency. If you prompt the basic app with something requiring a longer response – for example, “name ten things that happened in 1999” – you’ll notice it takes a few seconds before you hear the AI’s response. Some of that pause is because we’re waiting on the full response from the AI (and you guaranteed a long one with a prompt like that!).

You're going to address some of the latency by streaming tokens from Anthropic’s Claude instead of waiting on a complete response.

What is Token Streaming?

Tokens are the smallest units of a response generated by an AI language model. In the basic app, you waited for the entire response – including all the tokens – before sending the AI’s text to the text-to-speech provider.

But waiting for a whole response isn’t the only mode supported by Anthropic and other providers. You can also receive partial responses from a model and incrementally stream tokens to your app. From there, you can send them along to ConversationRelay as text tokens with the attribute last: false, and a user will start hearing the AI’s response before the model has finished generating it.
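To make the message shape concrete, here is a minimal sketch of the text token messages an app might send over the ConversationRelay WebSocket. The helper name textTokenMessage is hypothetical and not part of the tutorial's code. Intermediate tokens carry last: false, and a closing message carries last: true.

```javascript
// Hypothetical helper: build one ConversationRelay text token message.
// last: false signals that more tokens follow; last: true ends the turn.
function textTokenMessage(token, last = false) {
  return JSON.stringify({ type: "text", token, last });
}

// Streamed tokens go out with last: false ...
const partial = textTokenMessage("In 1999, ");
// ... and an empty token with last: true closes the response.
const done = textTokenMessage("", true);
```

In a real handler, each string would be passed to ws.send() as the token arrives.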

Modify how the AI responds

Our demo repository has branches showing every step we took to enhance the base tutorial. If you get stuck in this post, you can clone Step Two: Streaming Tokens and Step Three: Conversation Tracking from our repo. A future blog post will discuss Tool/Function Calling.

You need to make two changes to your code to add token streaming. You'll first change how you call Anthropic and wait for a response, then modify how you handle the ConversationRelay ‘prompt’ message type.

Change the aiResponse() function

Open your server.js and locate the aiResponse function. You’re going to change its name to aiResponseStream – since that’s now more descriptive – and write a little code to handle the streamed tokens.

Here’s the code before:

Once you’ve located that block, change the code to this:

When you use the Anthropic library’s stream() function, Anthropic changes to token streaming mode and sends one token at a time. Your app then forwards tokens to ConversationRelay with last: false so ConversationRelay knows to expect more.

And finally, when the response is done, this code sends an empty text message with last: true and returns the final accumulated response from the assistant using stream.finalMessage().
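The streaming flow described above can be sketched roughly as follows. This is not the tutorial's exact code: the third parameter (an Anthropic SDK client passed in so the sketch is self-contained), the model name, and the max_tokens value are all assumptions, and in server.js the client would more likely be a module-level object.

```javascript
// Sketch only. `anthropic` is an Anthropic SDK client supplied by the caller
// (an assumption made to keep this example self-contained).
async function aiResponseStream(conversation, ws, anthropic) {
  const stream = anthropic.messages.stream({
    model: "claude-3-7-sonnet-20250219", // placeholder: use the model from your basic app
    max_tokens: 1024, // placeholder value
    messages: conversation,
  });

  for await (const event of stream) {
    // Only text deltas carry speakable text; other event types are skipped.
    if (event.type === "content_block_delta" && event.delta.type === "text_delta") {
      ws.send(JSON.stringify({ type: "text", token: event.delta.text, last: false }));
    }
  }

  // An empty token with last: true tells ConversationRelay the turn is complete.
  ws.send(JSON.stringify({ type: "text", token: "", last: true }));

  // Return the full text of the assistant's turn.
  const finalMessage = await stream.finalMessage();
  return finalMessage.content
    .filter((block) => block.type === "text")
    .map((block) => block.text)
    .join("");
}
```

Passing the client as an argument also makes it easy to try the function against a stub stream before placing a real call.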

Change how you handle the ConversationRelay ‘prompt’ message

In server.js, locate the case "prompt": branch of the switch block inside your fastify.register() call. Since you’re now calling an asynchronous function that returns a Promise, you need to wait for the Promise to resolve before adding the “assistant” response to the conversation.

Here’s the code before:

Change that code to the following:

As you can see, you now await the return from the aiResponseStream function, and push the role and content from the LLM into the local conversation storage.
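As a rough illustration of that await-then-store pattern, here is a small sketch. The function name handlePrompt and the respond parameter (standing in for aiResponseStream) are hypothetical, and the sketch assumes the caller's transcribed speech arrives in the prompt message's voicePrompt field.

```javascript
// Sketch only: `respond` stands in for aiResponseStream so the example
// is self-contained; `conversation` is an array of { role, content } turns.
async function handlePrompt(message, conversation, ws, respond) {
  // Record what the caller said.
  conversation.push({ role: "user", content: message.voicePrompt });

  // Wait for the streamed response to finish before storing it.
  const reply = await respond(conversation, ws);
  conversation.push({ role: "assistant", content: reply });
  return conversation;
}
```

Without the await, the code would push a pending Promise rather than the assistant's text.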

Add conversation tracking and interruption handling

Nice work so far! If you test the app again – and try your open-ended prompt of “name ten things that happened in 1999” – you should notice much improved latency. But if you try to interrupt the AI you might notice another problem: while it sounds (verbally) like you interrupted its response, the AI doesn’t know how much of the response you heard!

In this step, you’ll track the conversation locally and see one way to handle these interruptions. To keep your AI informed, you’ll use the utteranceUntilInterrupt attribute on ConversationRelay’s interrupt messages and index into local conversation storage.

Modify the AI response function again

Head back to your aiResponseStream function. You’re going to update it further to accumulate tokens as they arrive:

You’re now both forwarding tokens to ConversationRelay and accumulating them in the assistantSegments array. When the AI’s response is done, you push the complete response into sessionData.conversation, where you store all of the turns of the conversation. As you’ll see in a minute, you’ll index into those conversation turns to determine where the interruption happened. But first: more changes to the ‘prompt’ message handler.
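The forward-and-accumulate idea can be sketched on its own, independent of the Anthropic client. In this sketch the function name forwardAndAccumulate is hypothetical, textChunks is assumed to be any async iterable of text deltas (for example, the text pulled out of the stream's events), and sessionData is assumed to look like { conversation: [...] }.

```javascript
// Sketch only: forwards each chunk to ConversationRelay and keeps a copy
// so the whole assistant turn can be stored once the stream ends.
async function forwardAndAccumulate(textChunks, ws, sessionData) {
  const assistantSegments = [];

  for await (const text of textChunks) {
    ws.send(JSON.stringify({ type: "text", token: text, last: false }));
    assistantSegments.push(text);
  }

  // Close the turn for ConversationRelay.
  ws.send(JSON.stringify({ type: "text", token: "", last: true }));

  // Store the complete turn so it can be trimmed later if the caller interrupts.
  sessionData.conversation.push({
    role: "assistant",
    content: assistantSegments.join(""),
  });
}
```

Collecting segments in an array and joining once at the end keeps the loop simple; the stored string is what the interruption handling later searches.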

Change how we manage the ‘prompt’ message

You're yet again changing how you track the conversation. Since aiResponseStream now handles accumulating tokens and adding them to the session conversation history, you can simplify the ‘prompt’ message case in your switch block.

Change the ’prompt’ case to:

… and then move on to ‘interrupt’ messages.

Handle ‘interrupt’ messages

Now, modify the WebSocket handling to better handle the interrupt message from ConversationRelay. Start by calling a new function, handleInterrupt, when we get the interrupt message from ConversationRelay:

Now, turn your attention to this new function. It’ll take the utteranceUntilInterrupt from the interrupt message along with the callSid which the app is using to index the conversation turns.

Here’s the function to add:

As you saw, the handleInterrupt function is only called if a voice interaction is interrupted – that is, when ConversationRelay sends the interrupt message. This code finds the utteranceUntilInterrupt in your local conversation storage and truncates the AI’s turn to what ConversationRelay told your app the user has heard. Then, the next time you pass the conversation to Anthropic (with aiResponseStream(conversation, ws)), the AI will ‘know’ how much of its response the caller heard. Pretty cool, right?
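One way the truncation logic might look is sketched below. It assumes sessions is a Map from callSid to an array of { role, content } turns; the real app may store more per session, and this is an illustration rather than the repository's exact code.

```javascript
// Sketch only: assumed storage shape is Map<callSid, Array<{ role, content }>>.
const sessions = new Map();

function handleInterrupt(callSid, utteranceUntilInterrupt) {
  const conversation = sessions.get(callSid);
  if (!conversation || !utteranceUntilInterrupt) return;

  // Search from the newest turn backwards for the assistant turn that was cut off.
  for (let i = conversation.length - 1; i >= 0; i--) {
    const turn = conversation[i];
    if (turn.role !== "assistant") continue;

    const position = turn.content.indexOf(utteranceUntilInterrupt);
    if (position === -1) continue;

    // Keep only the text up to and including what the caller heard.
    conversation[i] = {
      ...turn,
      content: turn.content.slice(0, position + utteranceUntilInterrupt.length),
    };
    return;
  }
}
```

If the utterance is not found in any assistant turn, the conversation is left unchanged, which is a conservative fallback.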

If you missed a step and it isn’t working, you can see the complete code for this step, here.

Test your AI application

It’s time for the payoff; you’re ready to test!

You might already have these initial steps memorized (or finished) for the basic tutorial. Feel free to skip the setup if so.

Set up the AI app

In your terminal, open up a connection using ngrok:

Now, update your .env with your new ngrok URL. Do not include the scheme (the “https://” or “http://”):

Now, start up the server:

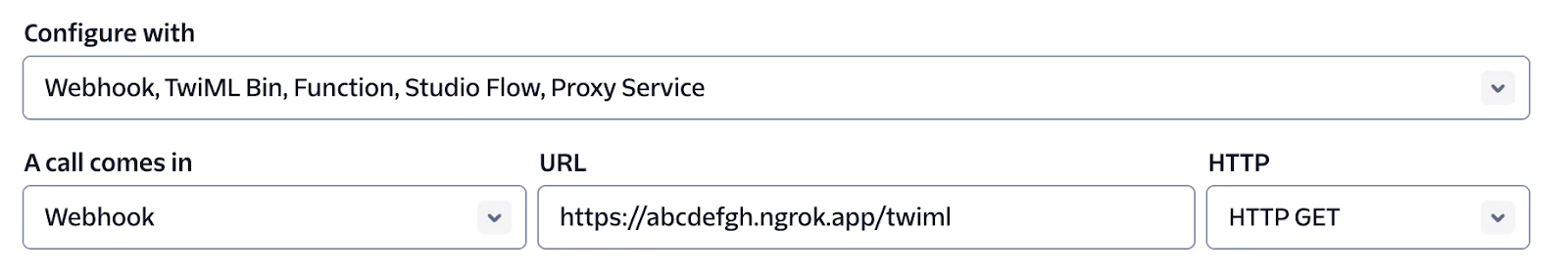

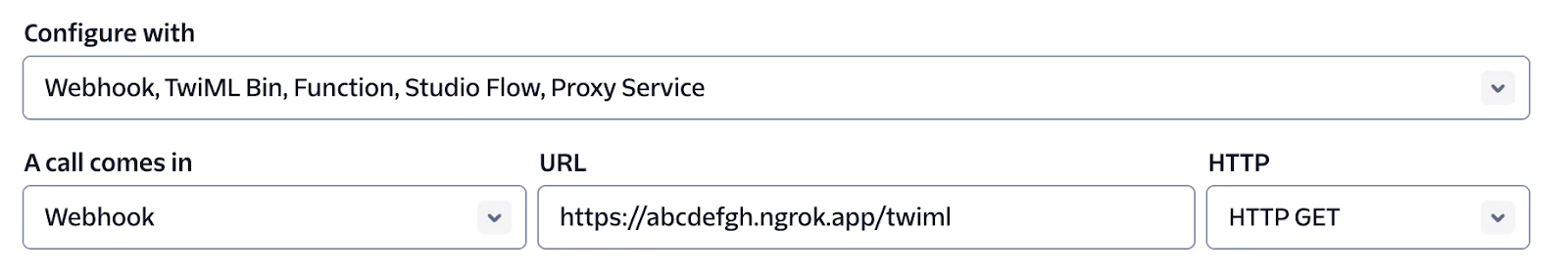

Go into your Twilio console and find the phone number to use. Set the configuration under A call comes in with the Webhook option and HTTP GET in the HTTP field.

For the URL, add your ngrok URL (this time include the “https://”), and add /twiml. For example, https://abcdefgh.ngrok.app/twiml.

Hit Save, and you’re ready to test.

Call Claude

Dial that Twilio number you set up. You should soon hear a greeting – talk to your new, enhanced Claude – Anthropic’s AI Assistant!

Try interrupting the AI when it is responding to your prompts. If you’re sick of asking about 1999, ask about various dog breeds, or ask it to come up with a long, complicated sentence with a lot of commas that seems to run on and never end, despite its best intentions, even though long, complicated sentences aren’t usually appropriate for voice conversations. Whatever response you interrupt, test by asking how far the AI got before you interrupted.

Next steps

Congratulations on the new, more powerful AI voice assistant you just built with Node.js, ConversationRelay, Claude, and a few lines of code! In the next post, we’ll explore tool or function calling to expand your AI assistant’s horizons just a little bit further.

Paul Kamp is the Technical Editor-in-Chief of the Twilio Blog. He felt awkward continually interrupting the AI. You can reach Paul – and don’t worry, you aren’t interrupting – at pkamp [at] twilio.com.
