Build the Twilio Video AI Avatar Experience: Real-Time Photorealistic AI Communication in the Cloud


Combining Large Language Models, or LLMs, with digital avatars is about to change the way we build and scale engaging experiences. Research shows that video conferencing enhances attention and interpersonal awareness over audio-only experiences, that adding video to audio experiences improves learning outcomes in children, and that non-verbal cues convey much of the information in a conversation. And it goes both ways: AI will understand what we’re saying and expressing during these interactions, serving us even more effectively.

For any application you’re excited about, engaging, real-time communication is key. The Twilio Video AI Avatar Experience brings the promise of photorealistic AI avatars to life, today – powered by a blend of technologies including Twilio Video for live interactions, real-time avatars from HeyGen, speech-to-text capabilities from Deepgram for transcription, and OpenAI for intelligent processing.

This blog post walks you through the app’s architecture, technical components, and step-by-step deployment instructions using Docker and fly.io.

Overview

The Twilio Video AI Avatar Experience demonstrates a variety of use cases – from customer support and virtual events to immersive digital experiences.

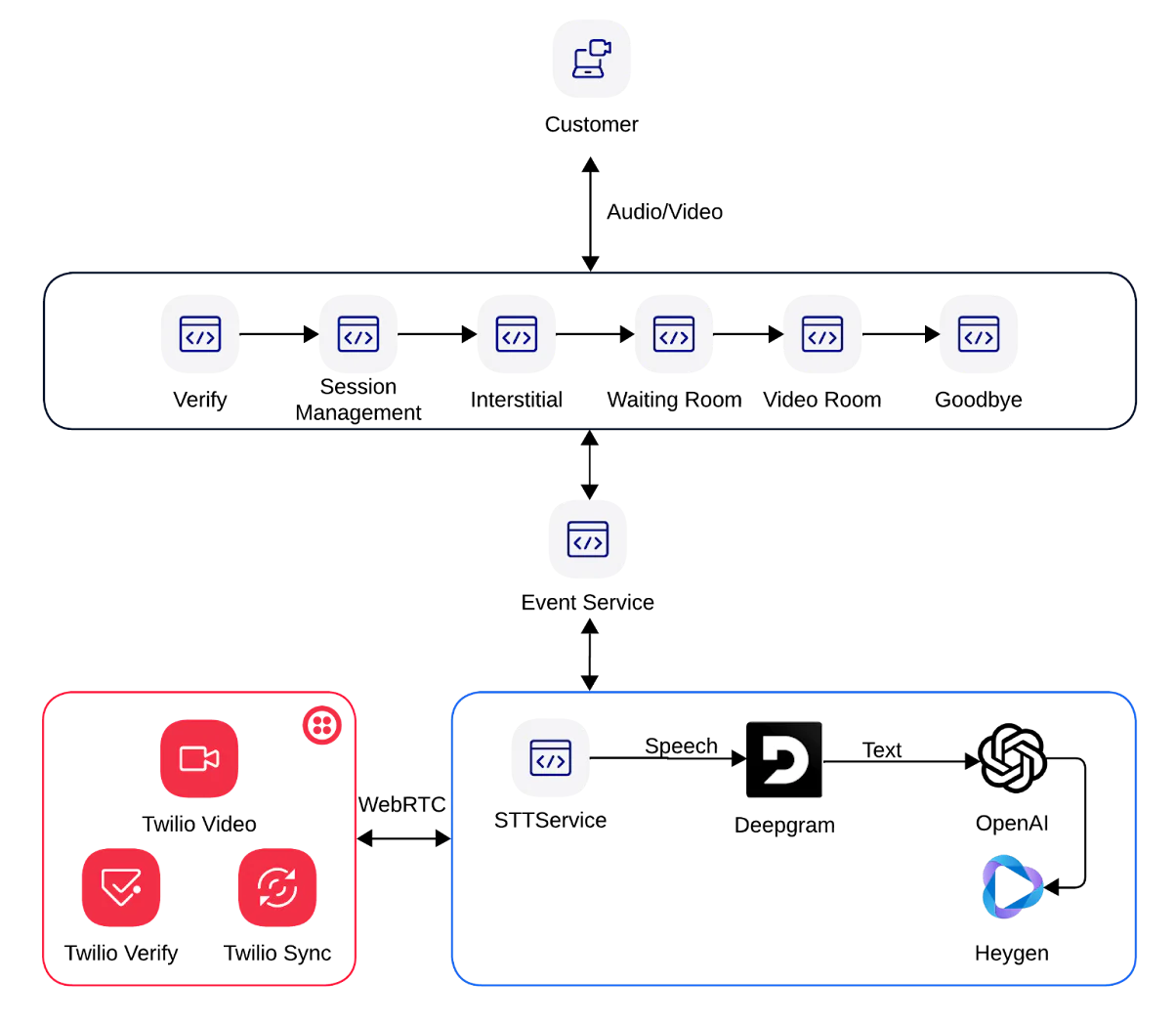

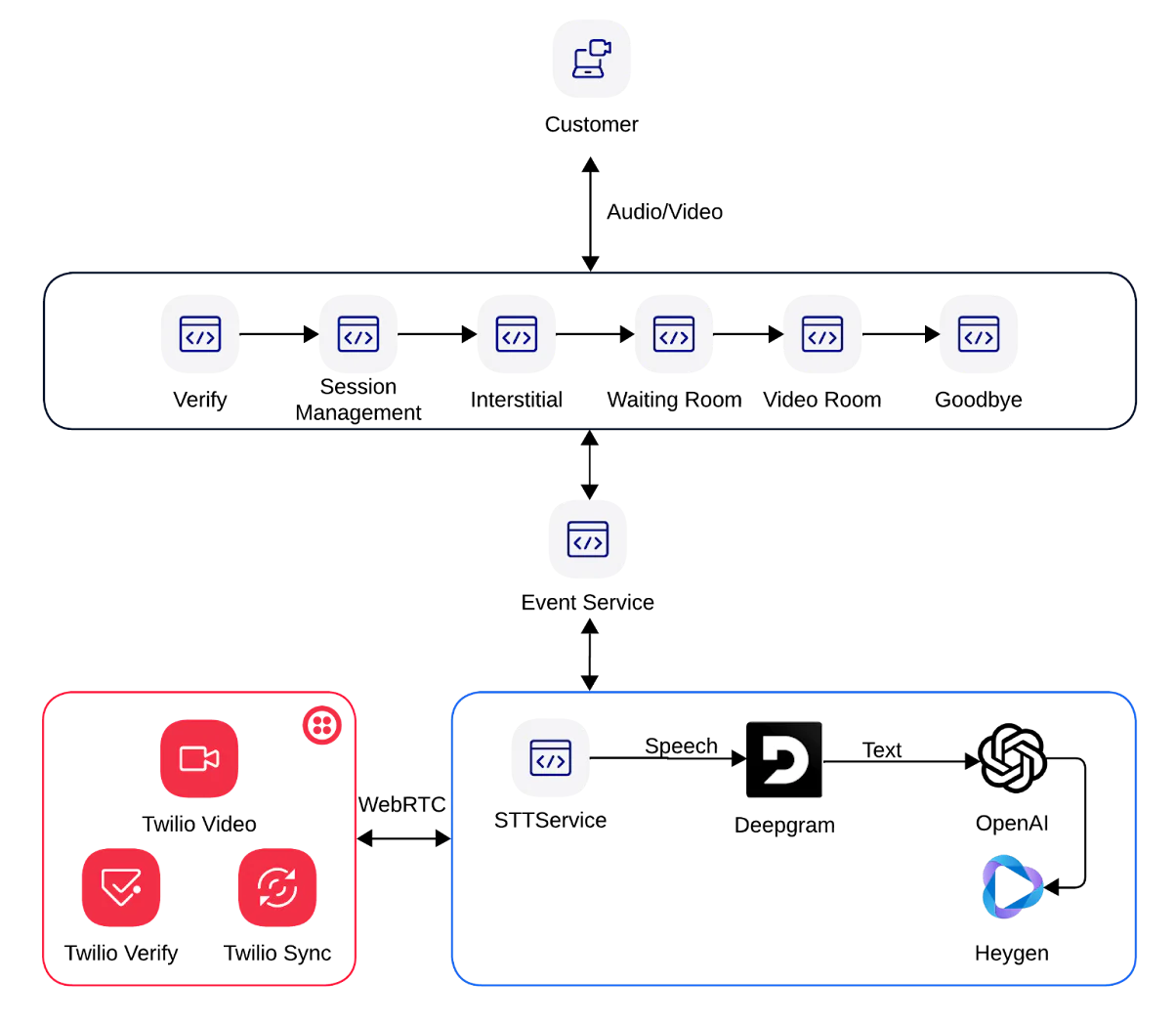

The application’s architecture is designed to be event driven so messages and multimedia content flow seamlessly between the front-end and back-end. Key highlights include:

- Live Video Communication: Twilio Video powers its real-time, high-quality video interactions.

- Interactive Avatars: HeyGen provides real-time avatar support and text-to-speech capabilities to the app.

- Advanced Audio Processing: Uses Deepgram speech-to-text to transcribe spoken words with precision.

- AI-Driven Responses: Integrates OpenAI to generate context-aware responses and insights.

- Modern Front-End: Built with Next.js and React, styled using React Bootstrap for a responsive, dynamic user experience.

- Secure application management: Uses Twilio Verify for verification and Twilio Sync to store session data.

- Cloud-Native Deployment: Docker provides a container for the app to be deployed on fly.io for scalability and ease of management.

Architecture Overview

At the core of the Twilio Video AI Avatar Experience is an event-driven backend that coordinates communication between multiple services and external APIs. The following diagram outlines the high-level architecture:

Key Components

- Frontend (Next.js, React & React Bootstrap): Provides the user interface for engaging with the Twilio Video AI Avatar Experience. The front-end uses Next.js for server-side rendering and React for client-side interactions.

- Event Bus/API Gateway: Acts as the communication hub. As users interact with the system, events (like video stream updates or voice inputs) are published to this layer, triggering other processes.

- Media & AI Services

  - Twilio Video manages live video sessions for real-time interactions.

  - Deepgram converts audio streams into text with high-accuracy speech-to-text.

  - OpenAI processes transcribed text to generate intelligent responses or commands.

- Verification & Session Handling

  - Twilio Verify manages user verification with a one-time passcode, protecting against unauthorized use and SMS traffic pumping, also known as Artificially Inflated Traffic (AIT).

  - Twilio Sync stores allowed numbers and session information. Sync is used to gate access to the example application.

Build an Event-Driven backend

Why Event-Driven?

An event-driven architecture allows the experience to scale, processing asynchronous events from multiple sources in (near) real-time. When a user speaks, the audio is processed and transcribed. The resulting text triggers further actions (such as generating responses via OpenAI or synchronizing a video avatar with HeyGen) all without blocking the main communication channel: Twilio Video.

Technologies in Use

- Next.js with TypeScript: For building the web application.

- React & React Bootstrap: For building dynamic UI components and ensuring a responsive design.

- Serverless/Event-Driven Patterns: Extending the EventEmitter class in Node.js to provide a simple framework for passing events.
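To make the EventEmitter pattern concrete, here is a minimal sketch of an event bus that extends Node's `EventEmitter`. The class name `AvatarEventBus`, the event names `transcript` and `reply`, the payload shapes, and the `generateReply` stand-in are all assumptions made for illustration. They are not taken from the repository, which may structure its events differently.

```typescript
import { EventEmitter } from "node:events"

// Hypothetical payload shapes, for illustration only
type TranscriptEvent = { sessionId: string; text: string }
type ReplyEvent = { sessionId: string; reply: string }

// A small bus that extends EventEmitter with typed publish helpers
class AvatarEventBus extends EventEmitter {
  publishTranscript(event: TranscriptEvent): void {
    this.emit("transcript", event)
  }

  publishReply(event: ReplyEvent): void {
    this.emit("reply", event)
  }
}

// Stand-in for an LLM call; a real app would call its provider here
async function generateReply(text: string): Promise<string> {
  return `You said: ${text}`
}

const bus = new AvatarEventBus()
const spoken: string[] = []

// A transcript triggers reply generation without blocking the publisher
bus.on("transcript", async (event: TranscriptEvent) => {
  const reply = await generateReply(event.text)
  bus.publishReply({ sessionId: event.sessionId, reply })
})

// A reply would be handed to the avatar; here it is only recorded
bus.on("reply", (event: ReplyEvent) => {
  spoken.push(event.reply)
})

bus.publishTranscript({ sessionId: "demo", text: "hello" })
```

Because the `transcript` listener is asynchronous, `publishTranscript` returns immediately and the reply arrives on a later tick. That is the property that keeps the main communication channel from being blocked.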

Prerequisites

Before you can begin chatting with the app, you’ll need to set up a few accounts and prepare your development environment. I’ve divided the prerequisites below.

Accounts and APIs

- Twilio Account: To access Twilio Video and Verify APIs in order to generate credentials for real-time video streaming and authenticate users of your app.

- Deepgram Account: To access the Speech to Text API and produce transcribed text.

- OpenAI Account: To access an LLM and get chat completions from the text that Deepgram's Speech to Text produces.

- HeyGen Account: To create and manage photorealistic avatars, including API access for avatar integration.

- Docker Account: To create and manage dockerized containers of the example app.

- Fly.io Account: To create and manage instances of the app in the cloud.

- Sentry.io Account (optional): To track actions and errors in the app. Note: this has been disabled in the sentry.client.config, sentry.edge.config, and sentry.server.config files and should be set to true if you want to use logging with Sentry. Additionally, add the dsn value that Sentry gives you to the .env file and set NODE_ENV to development to turn on logging.

Programming Languages and Frameworks

- Programming Language: Node.js and TypeScript

- Frontend Framework: React and React-Bootstrap

- Backend Framework: Next.js

- Concepts: Websockets and Events

- Deployment Framework: Docker and App Deployment in the Cloud (Fly.io, in my build)

Dependencies

Other versions of Node and Chrome may work, but these are the ones I tested with:

- Node v22.12.0

- Chrome Browser v134.0+

Set up Twilio Verify

You will need to set up a Twilio Verify service for the app.

Navigate to Verify in the Twilio console, select Services, then Create new. Give the service a name and enable it for SMS and Voice. Once you have created it, enable Fraud Guard, hit Continue, then take a note of the Verify Service SID… we will add it to the variables in the .env file later.

Set up Twilio Sync

This app uses Twilio Sync to manage who can access the app and to manage sessions.

Navigate to Twilio Sync and then Services within the console, then hit Create new. Give your Sync service a friendly name and hit Create. Once you have created it, take a note of the Sync Service SID and add it to the .env file under the TWILIO_SYNC_SID entry.

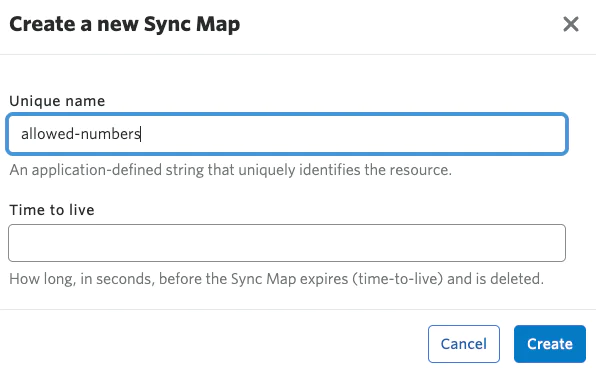

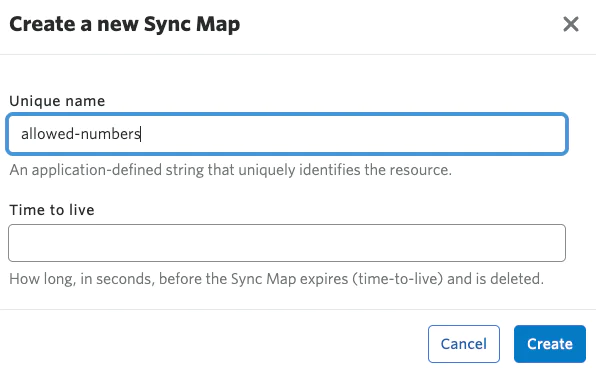

Once you have created your Sync service, navigate to Maps from within the service and select Create new Sync Map. Give it a unique name like ‘allowed-numbers’ and hit create. Take a note of the SID for the allowed-numbers sync map – we will add it to the .env file under the TWILIO_SYNC_MAP_NUMBERS_SID entry.

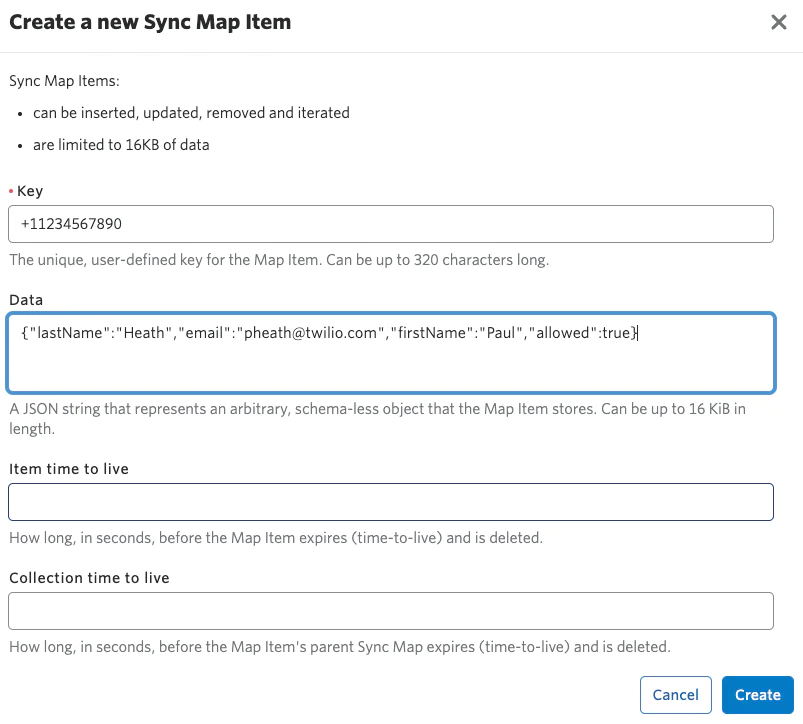

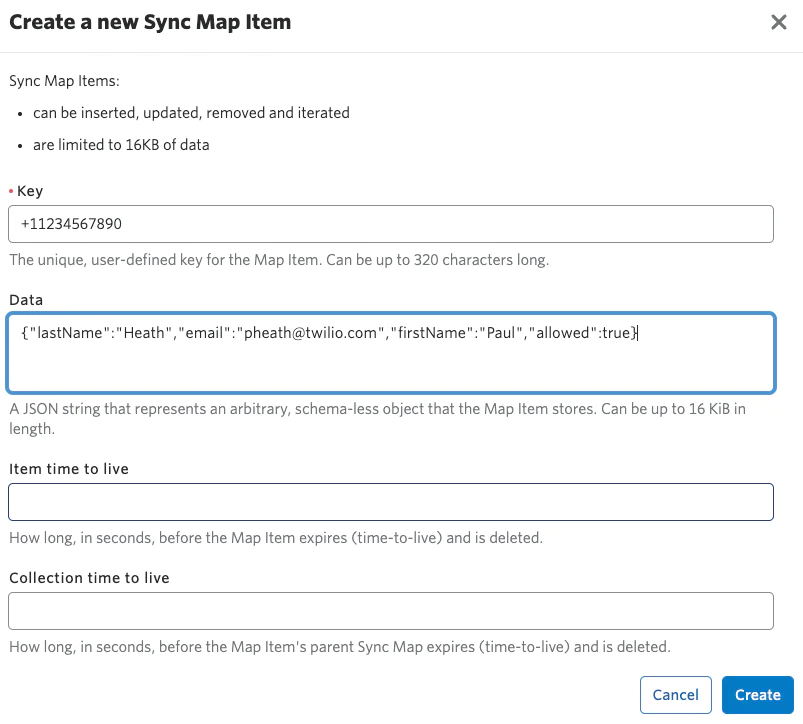

When you have created the Sync Map for allowed-numbers, you will have to add your own number to the sync map so you are allowed access to the app. This Sync map is where you manage access to the app.

If a number is present in this Sync Map as the Key of an item, then it has access. For example, if my number in E.164 format is +11234567890, then that string is the Key. The data that resides in the item is a JSON object that stores lastName, email, and firstName fields, along with an allowed field set to true.

Here is an example JSON object you can copy and edit for your first allowed number:

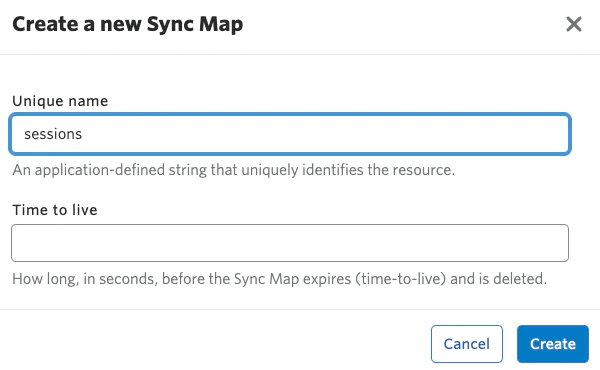

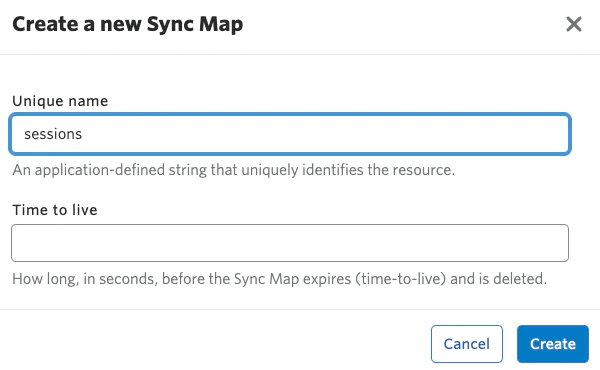
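The access rule described above can be sketched in a few lines. This is an illustration under stated assumptions, not the app's actual code: an in-memory `Map` stands in for the Sync Map, the names `AllowedNumberData`, `hasAccess`, and `E164_PATTERN` are hypothetical, the sample item values are placeholders, and checking that `allowed` is true (rather than only that the Key exists) is my assumption about how the field is used.

```typescript
// Shape of the item data described above (field names from this post)
interface AllowedNumberData {
  firstName: string
  lastName: string
  email: string
  allowed: boolean
}

// E.164: a plus sign, then up to 15 digits, with a non-zero first digit
const E164_PATTERN = /^\+[1-9]\d{1,14}$/

// In-memory stand-in for the allowed-numbers Sync Map, keyed by phone number
const allowedNumbers = new Map<string, AllowedNumberData>([
  ["+11234567890", { firstName: "Jane", lastName: "Doe", email: "jane@example.com", allowed: true }],
])

// A number has access when it is valid E.164, present as a Key, and marked allowed
function hasAccess(map: Map<string, AllowedNumberData>, phoneNumber: string): boolean {
  if (!E164_PATTERN.test(phoneNumber)) return false
  const item = map.get(phoneNumber)
  return item !== undefined && item.allowed === true
}

console.log(hasAccess(allowedNumbers, "+11234567890"))
```

Keeping the check a pure function of the map and the number makes it easy to test without touching Twilio Sync at all.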

Next we will have to create another Sync Map for holding information about sessions.

Again, navigate to Maps from within the service and select Create new Sync Map. Give it a unique name like ‘sessions’ and hit Create. Take a note of the SID for the sessions sync map and add it to the .env file under the TWILIO_SYNC_MAP_SESSIONS_SID entry. You won’t have to manually create entries within this sync map, as session storing is handled by the app.

The Repo

To get the code from this repository, clone it to your local machine using Git. Open your terminal and run:

This command downloads the repository’s contents, allowing you to explore, modify, and build on the code as needed.

Set up the .env file

Copy .env.example to a new file named .env and ensure that you have an account key or SID for each entry (except Sentry, if you choose not to include logging).
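A quick way to catch a missing entry before you build is a small startup check. The sketch below is an assumption about how you might do this, not something the repository ships. The helper name `findMissingEnv` is hypothetical, and the list only contains the three entries named in this post; the .env.example file in the repo is the authoritative list.

```typescript
// Entries named in this post; .env.example in the repo lists the full set
const REQUIRED_ENV = [
  "TWILIO_SYNC_SID",
  "TWILIO_SYNC_MAP_NUMBERS_SID",
  "TWILIO_SYNC_MAP_SESSIONS_SID",
] as const

// Returns the names of required entries that are unset or blank
function findMissingEnv(
  env: Record<string, string | undefined>,
  required: readonly string[] = REQUIRED_ENV,
): string[] {
  return required.filter((name) => {
    const value = env[name]
    return value === undefined || value.trim() === ""
  })
}

// Example with placeholder values (not real SIDs)
const exampleEnv = { TWILIO_SYNC_SID: "ISxxxx", TWILIO_SYNC_MAP_NUMBERS_SID: "MPxxxx" }
console.log(findMissingEnv(exampleEnv))
```

In a Node.js process you would pass `process.env` instead of the example object, and fail fast if the returned list is not empty.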

Containerize with Docker

To ensure consistency across environments and simplify deployment, the Twilio Video AI Avatar Experience is containerized using Docker.

Below is an example of the Dockerfile to get you started. It already comes with the repo, so you don’t need to make changes for this example:

This Dockerfile builds your Next.js application, installs dependencies, and serves the app on port 3000. Customize as needed for your specific project setup.

Deploy on fly.io

fly.io provides a great platform for deploying containerized applications globally. Follow these steps to deploy the app. Here is the fly.toml file you cloned from the repository:

1. Update the fly.toml file and name your app something relevant (using the variable app). Set your primary region to one of the fly.io specified regions. Save the file and return to your terminal.

2. Download and install the fly.io command-line tool, flyctl, from fly.io/docs/getting-started.

3. First, log in with the command-line tool. Running flyctl auth login opens a browser window where you authenticate with your fly.io credentials.

4. In your project directory, run flyctl launch if this is the first time you are launching the app. If you are making changes to an app you have already launched, run flyctl deploy instead.

5. This command initializes your application, creates a fly.toml configuration file and sets up your deployment environment.

6. You will be asked if you would like to copy the configuration from the existing fly.toml file. You can enter y for yes.

7. When presented with the screen above, if you are happy with the configuration you can indicate N to not tweak these settings before proceeding.

8. Here you can enter y for yes to overwrite the previously saved deploy file.

9. Once you do this, fly.io will create the app in the cloud and print output describing what it is building from the provided Dockerfile. The Next.js build takes around a minute to complete. When it finishes, you will be notified that a new machine is launching and that the deployment was successful, as seen in the image above.

10. Your Docker container is now built and deployed to the fly.io cloud, with global load balancing and automatic scaling!

11. Monitor and Manage:

Use the fly.io dashboard or CLI commands to monitor your application’s health and performance. To switch the machine off, you can either scale back or use the kill command.

Permissions check

When you go to test this, make sure that you have enabled camera and microphone permissions in your browser for the site where your application has been deployed. If you haven’t enabled permissions, you will see a modal like the one above.

If you haven’t added permissions, click the button beside the URL in the browser. You will be presented with a screen like the one above where you can set the permissions for the camera and the microphone. Once you have done that, reload the page and the modal should disappear.

Talk to the bots!

Conclusion

The Twilio Video AI Avatar Experience demonstrates a new era of interactive, AI-powered communication. By combining photorealistic avatars, video communication, and near real-time transcription, the application delivers an engaging experience all on top of Twilio Video. Next, you might try adding your own avatars and use cases – our friends at HeyGen have reported great results in fields as diverse as retail, training, and insurance!

Whether you’re looking to create immersive digital experiences, or just explore new AI capabilities, the Twilio Video AI Avatar Experience shows you what’s possible, today. Stay tuned for more updates as we continue to refine and expand the capabilities of the app – next, I’ll look at identifying emotions and working them into the conversation’s context.

Happy coding, deploying, and chatting!

Bonus Material

As AI technology continues to advance, the applications of AI avatars will expand. If you have been following along with my other blog posts you will notice that I still don’t like semicolons!

You can read more about how I captured human emotions with Twilio Video and AWS Rekognition, providing another dimension of context in an interaction.

Paul Heath is a Solutions Architect at Twilio and Lead AI Major. He specializes in AI, and continually tries to think about what’s next in that field. His email address is pheath [at] twilio.com
