Add Voice AI to your website with the Twilio Voice JavaScript SDK and ConversationRelay

Spin up a Demo Application that shows you how you can deploy talk-to-AI-agent buttons on your websites and mobile applications.

Voice is quickly becoming a primary interface for applications using Generative Artificial Intelligence. We at Twilio are seeing massive demand for Voice AI Agents in most corporate verticals.

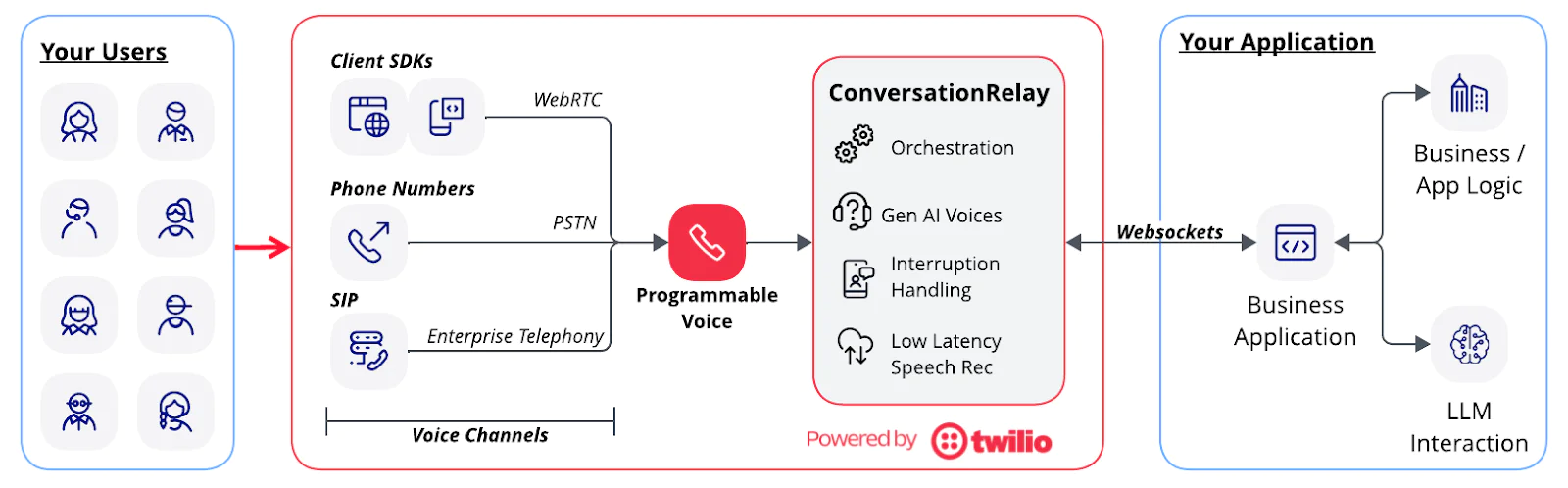

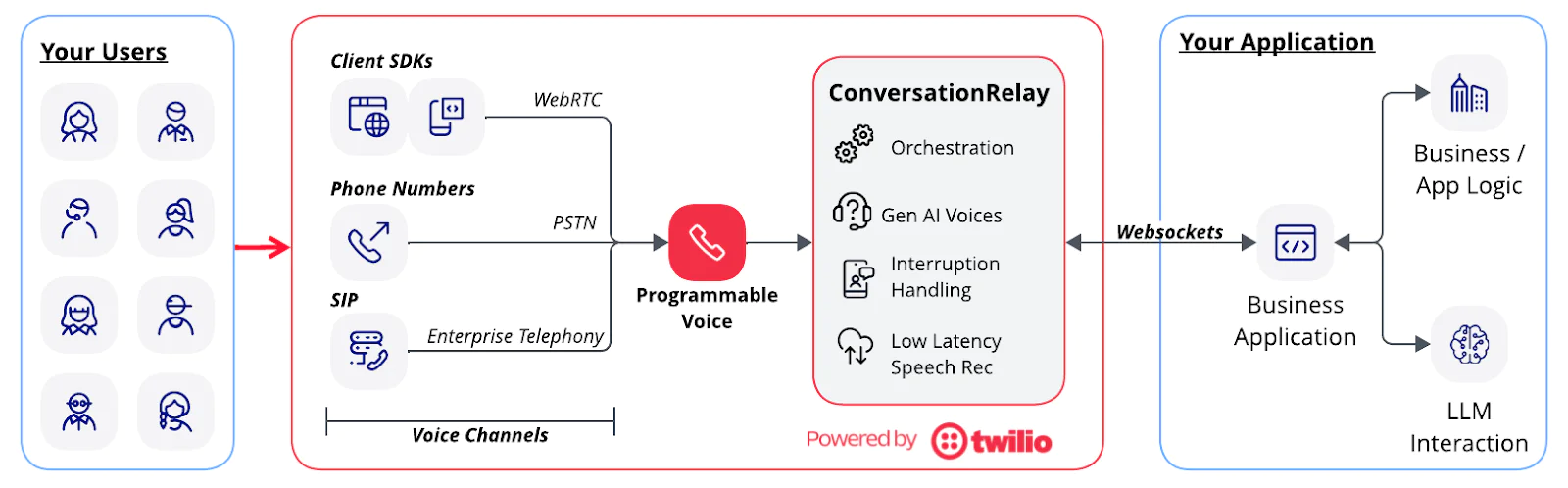

That’s why we built ConversationRelay, which helps you build real-time, human-like voice experiences using the LLM of your choice on any channel: WebRTC, PSTN, or SIP. Twilio has been a leader in voice communications for over a decade, powering applications in everything from the browser to the call center to the PSTN – and now, powering AI-driven conversations.

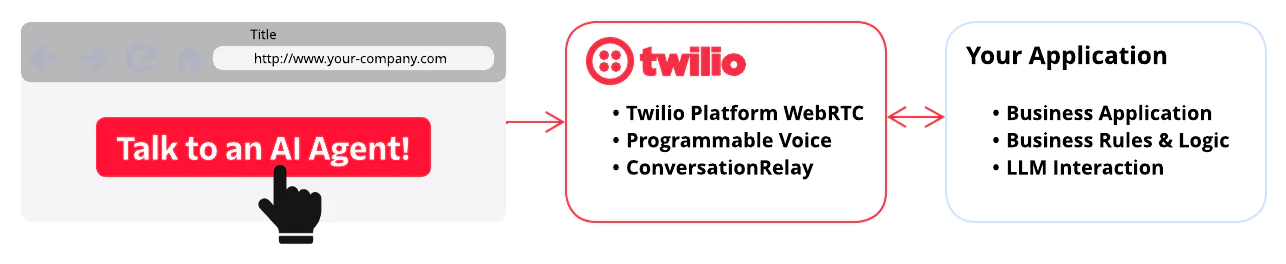

In this demo, I’ll show you how to build a simple web interface with a TALK-TO-AGENT button that utilizes Twilio’s Voice JavaScript SDK. Calls from a web interface connect to Twilio ConversationRelay, which combines fast speech-to-text (STT) and text-to-speech (TTS) capabilities with the OpenAI API or your choice of model on Amazon Bedrock. Additionally, you’ll be able to try out multi-channel capabilities with Twilio Messaging and emails with Twilio SendGrid.

Sound good? Well, you make the call when you talk to the agent. Let’s get started.

One of the key benefits of our ConversationRelay Voice AI solution is the range of choices it leaves to you.

Twilio handles the things we are good at: Voice channels (WebRTC, PSTN, SIP), scale, latency, orchestration, and direct relationships with speech-to-text and text-to-speech providers. We leave the important business decisions to the people building on our platform: the AI Provider, LLM Interaction, choice of STT and TTS providers, and the overall “agentic” or “conversational” experiences (applications) that you want to build.

Want to see what we are building?

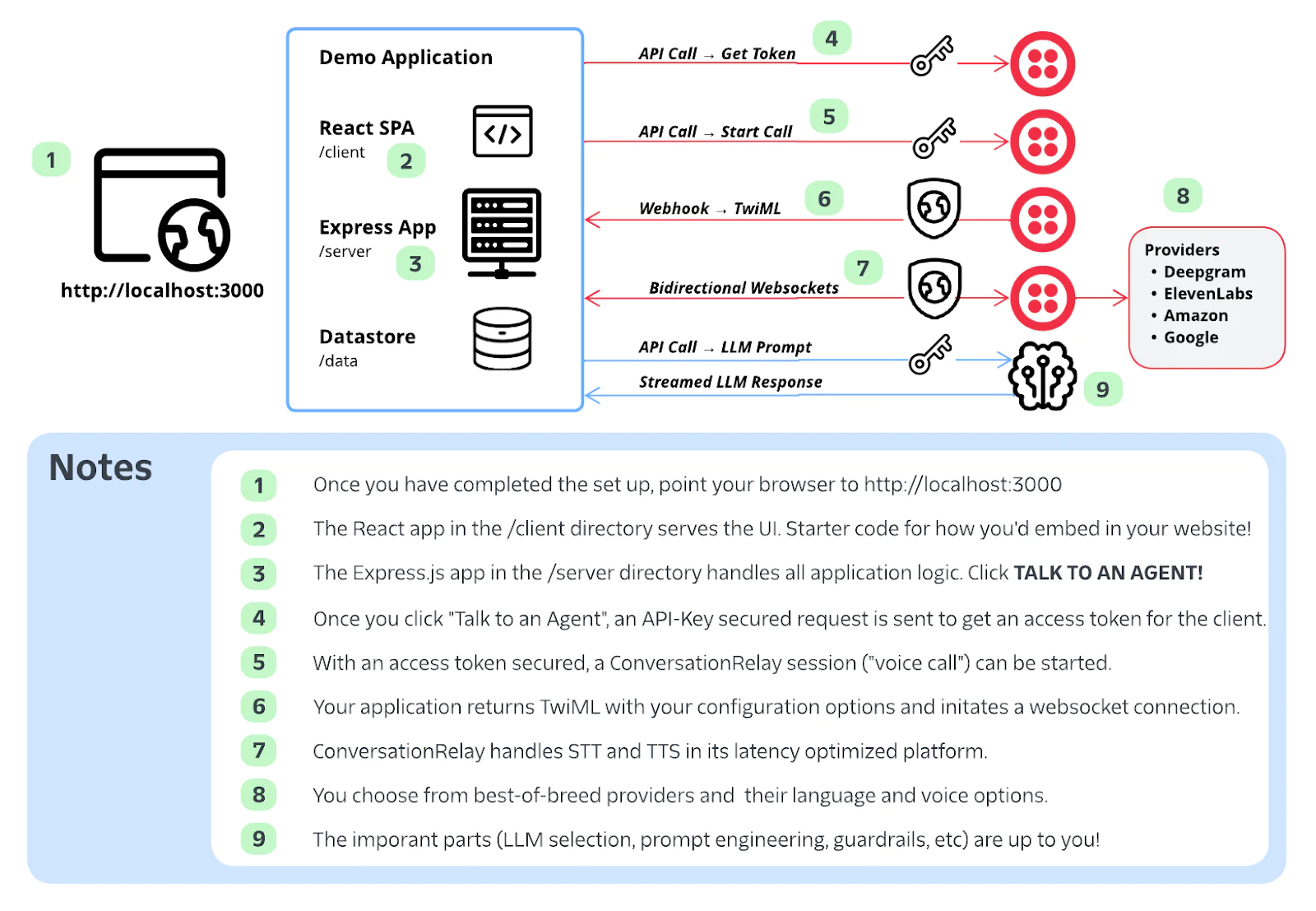

Here is a diagram of the components of this demo application, along with a high-level sequence that shows how Twilio can connect a WebRTC client to your application and the LLM provider of your choice:

A web or mobile application using the Twilio Voice JavaScript SDK needs to get an authentication token, and then it can place a call into Twilio Programmable Voice where you can unlock the magic of the Twilio Platform!
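To make the authentication step less abstract, here is a minimal sketch of what a Voice access token contains. It is not the demo's code: in a real server you would normally let the `AccessToken` and `VoiceGrant` classes in the `twilio` helper library build the token for you. The function name `createVoiceAccessToken`, its option names, and the placeholder SIDs are assumptions made for illustration.

```javascript
const crypto = require("node:crypto");

// Base64url-encode a JSON-serializable value, as each JWT segment requires.
function encodeSegment(value) {
  return Buffer.from(JSON.stringify(value)).toString("base64url");
}

// Build a short-lived access token that lets one browser client place
// outbound calls through a TwiML App. All inputs are placeholders here;
// real values come from your Twilio Console.
function createVoiceAccessToken({
  accountSid,
  apiKeySid,
  apiKeySecret,
  twimlAppSid,
  identity,
  ttlSeconds = 3600,
}) {
  const now = Math.floor(Date.now() / 1000);
  const header = { alg: "HS256", typ: "JWT", cty: "twilio-fpa;v=1" };
  const payload = {
    jti: `${apiKeySid}-${now}`, // unique token id
    iss: apiKeySid, // the API Key that signs the token
    sub: accountSid, // the account the token belongs to
    exp: now + ttlSeconds,
    grants: {
      identity,
      voice: { outgoing: { application_sid: twimlAppSid } },
    },
  };

  const signingInput = `${encodeSegment(header)}.${encodeSegment(payload)}`;
  const signature = crypto
    .createHmac("sha256", apiKeySecret)
    .update(signingInput)
    .digest("base64url");

  return `${signingInput}.${signature}`;
}
```

The point to take away is that the token ties together three things you will create later in this post: your Account SID, an API Key, and a TwiML App SID.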

Let’s get started…

Want to watch a video of the installation instead?

Prerequisites

This is not a beginner-level build! You should be comfortable with basic programming and command-line skills to spin up this demo application.

- Twilio Account. If you don’t yet have one, you can sign up for a free account here.

- Either:

  - An OpenAI account with API access, or

  - An AWS account with access to AWS Bedrock.

- Node.js installed on your computer. (We tested with versions higher than Node 20)

- Ngrok or a similar tool to allow access to your local machine.

Let’s Build it!

1. Download the Code for this Application

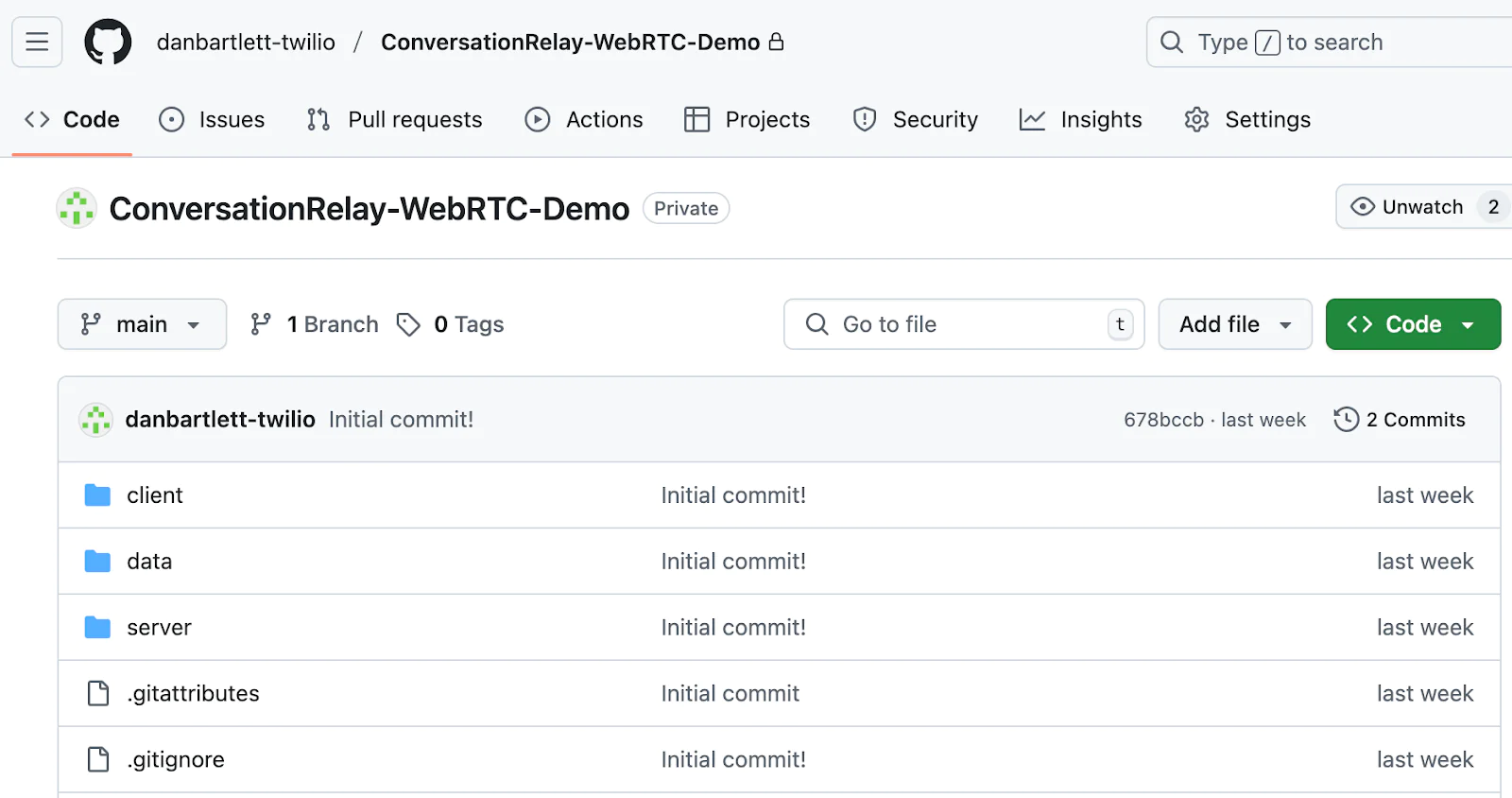

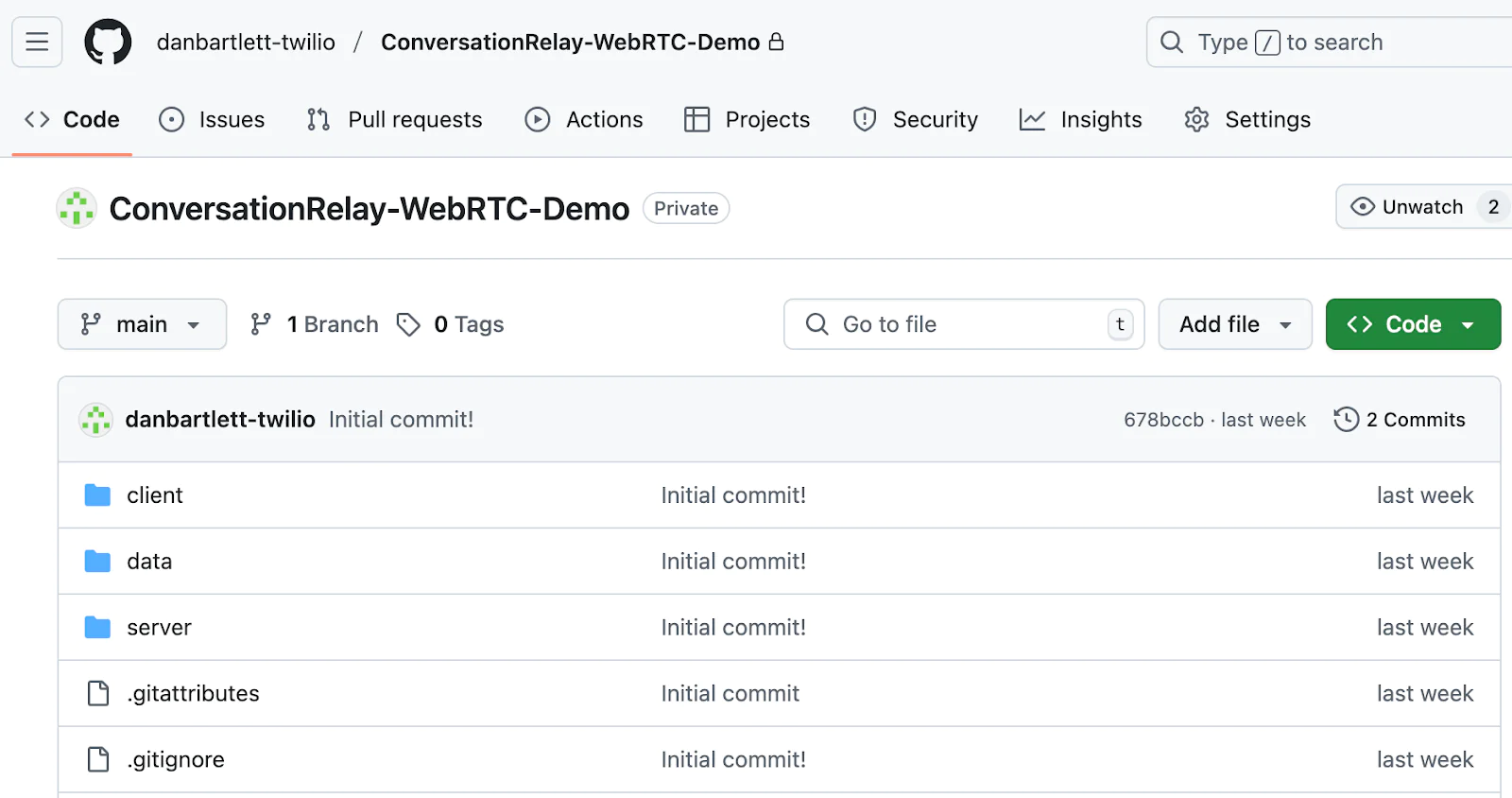

Download the code from this repo, and then open up the folder in your preferred development environment.

The repo is divided into three folders:

- /client ⇒ Contains a React Single Page Application (SPA) and contains the necessary code to show a button on your website to initiate a call. In addition, the client contains tools to help you build and experiment.

- /data ⇒ We use a simple file system JSON object database for the Demo Application. You can certainly choose your own database for your application, but this simple solution will allow you to play with the functionality.

- /server ⇒ We chose Express.js to power the backend of our Demo Application. Express is written in familiar Node.js and can easily deploy and run locally. It handles REST APIs and WebSockets well and is straightforward to follow. It should be very approachable for anyone interested in experimenting and building POCs!

2. Build the Client and the Server

From the root directory of the project, run the following:

This will install all of the node.js dependencies and build a complete version of the client application (Single Page Application). The Express.js app, in the /server directory, uses the client application built in this step.

3. Start ngrok

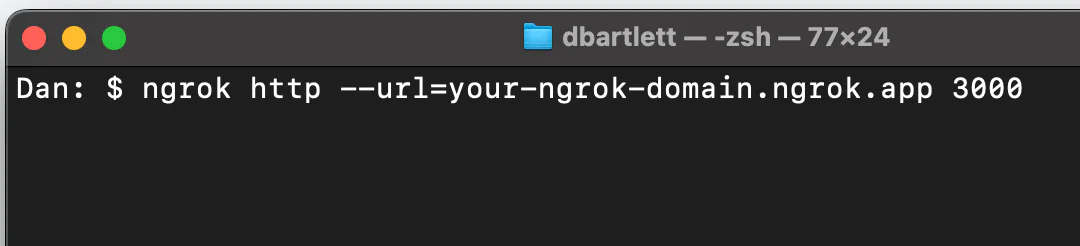

In order for this demo application to work locally, Twilio needs to be able to communicate with your local machine. Ngrok is a good option for this task, but you can use any alternative that does the same thing.

We will assume that you are using ngrok. Open a new terminal window, start ngrok on your local machine, and point it to port 3000 using `ngrok http 3000`. Copy down your URL – you will need it later!

4. Create a Twilio API Key

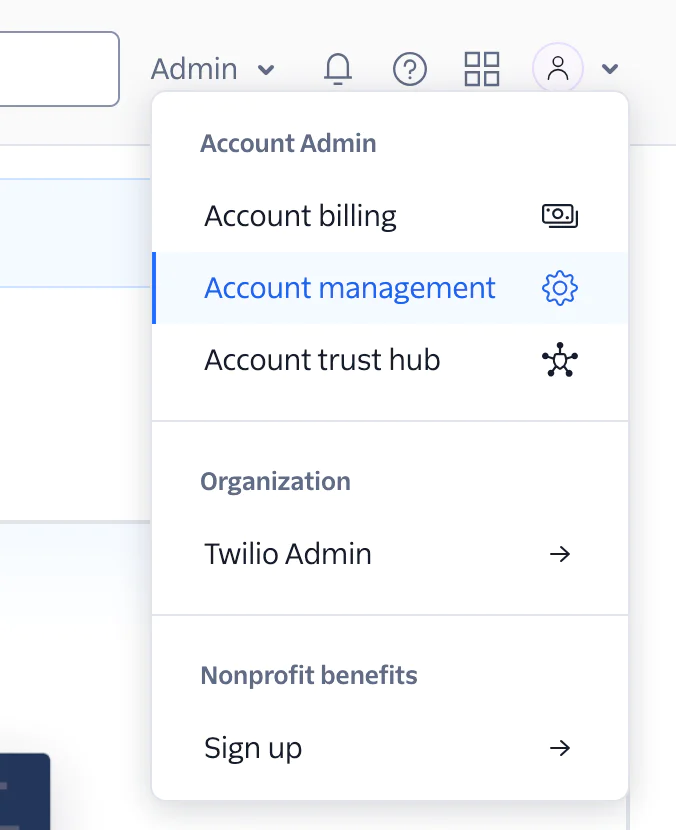

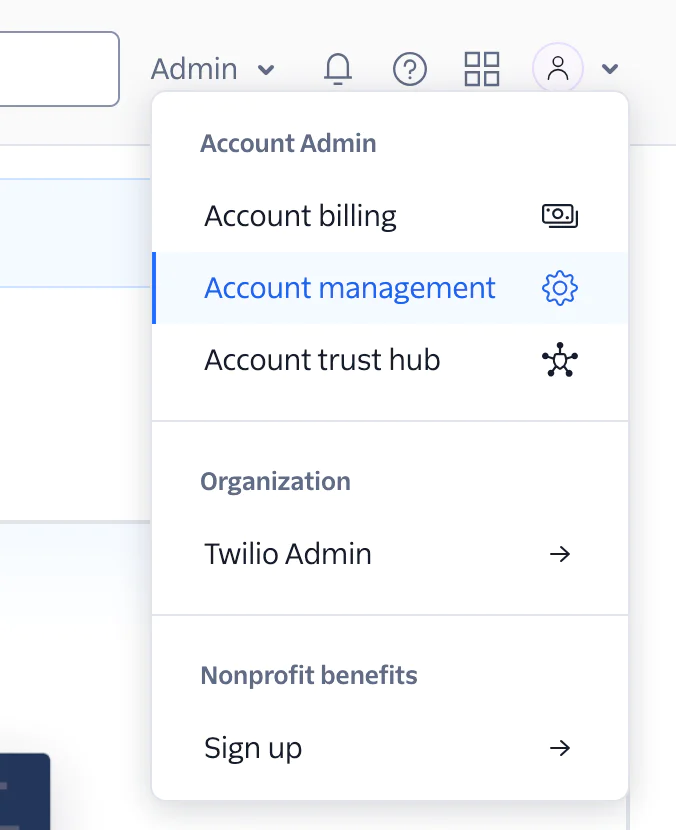

The Twilio Voice JavaScript SDK requires a Twilio API Key. From your Twilio Console, find Admin in the upper right corner and then select Account management.

On the next screen, select API keys & tokens in the left column. Create a Standard API Key.

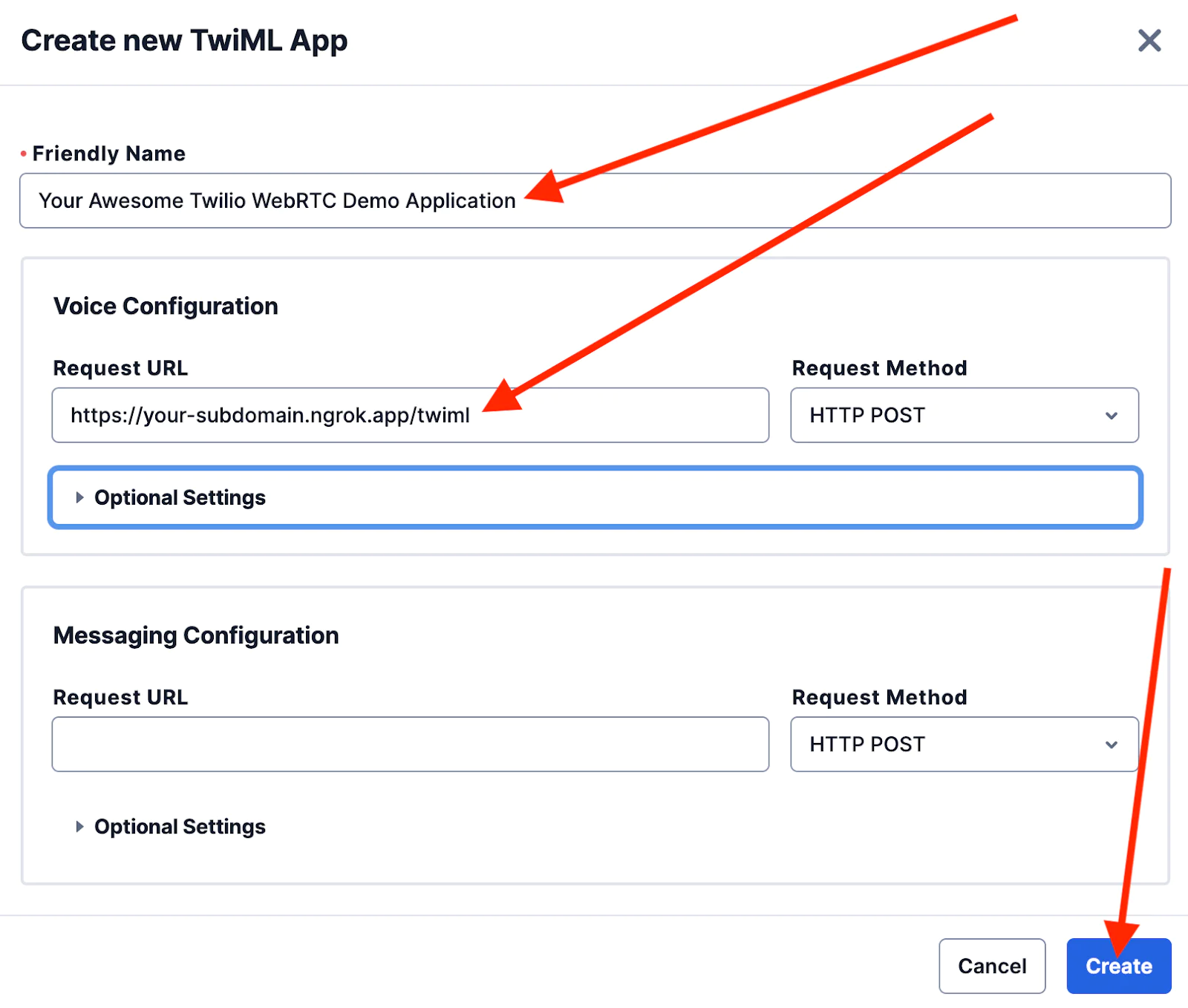

5. Create a Twilio TwiML App

The Twilio Voice JavaScript SDK needs to point to a TwiML app so that your client application knows where to get instructions to handle inbound calls.

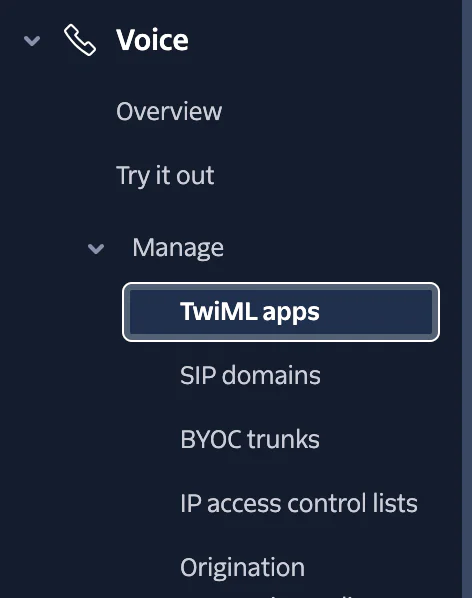

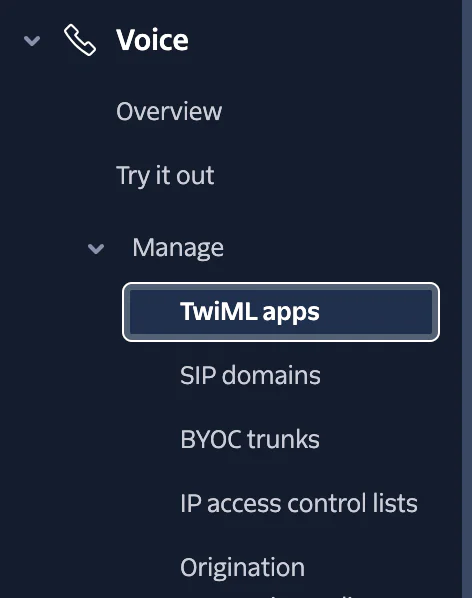

From your Twilio Console, find Voice in the left column under Develop. If Voice is not in your left column, you can add it by clicking on Explore Products at the bottom of the left column.

Under Voice, select Manage → TwiML apps:

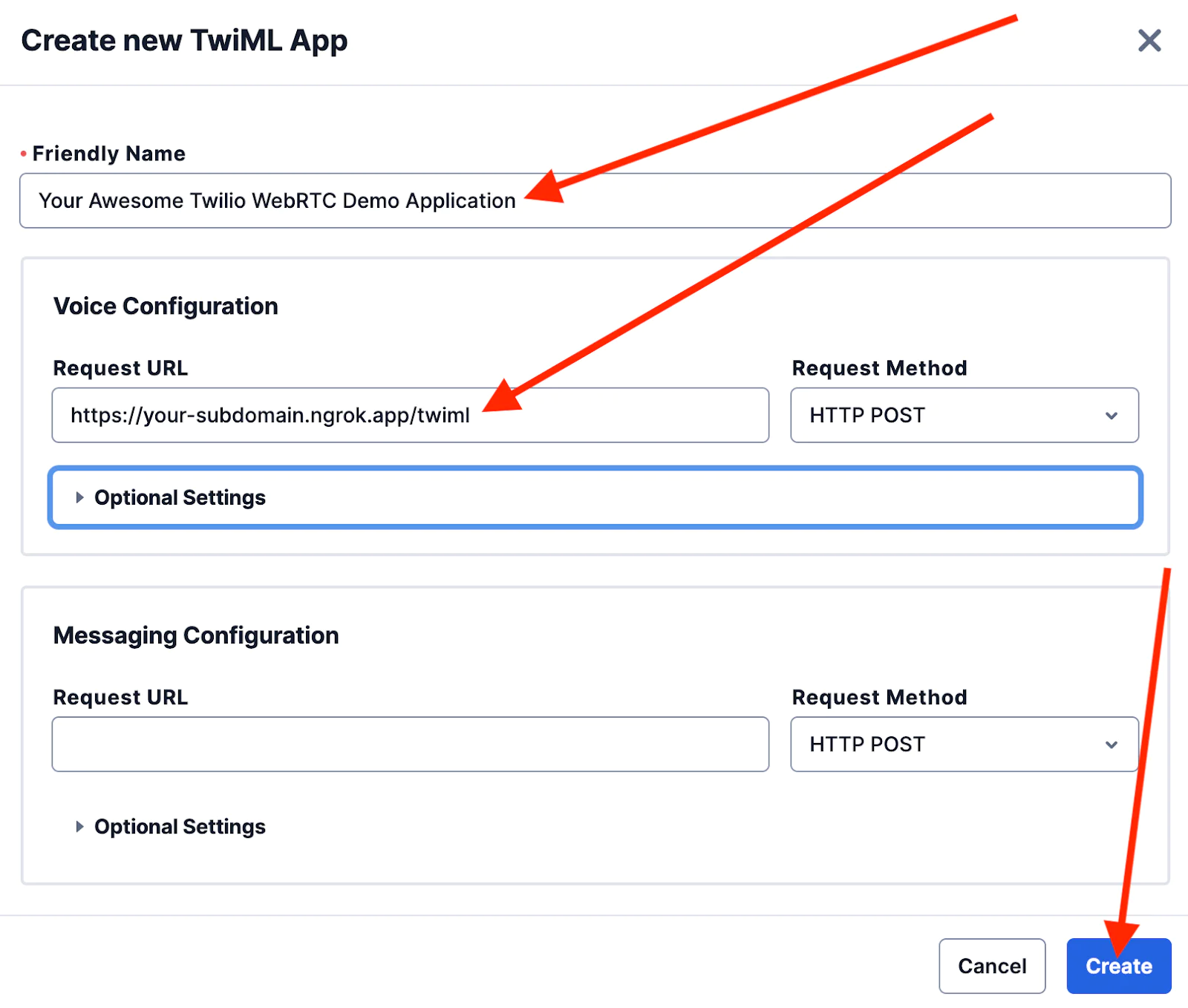

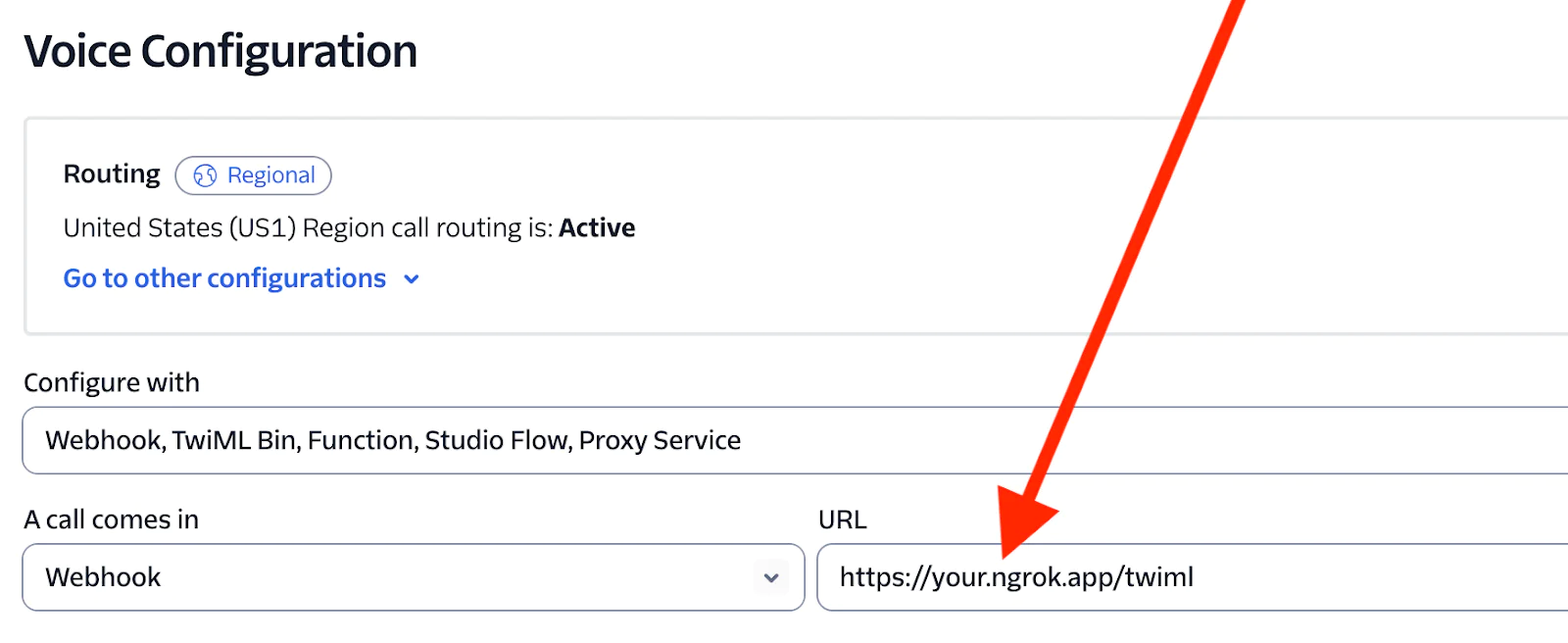

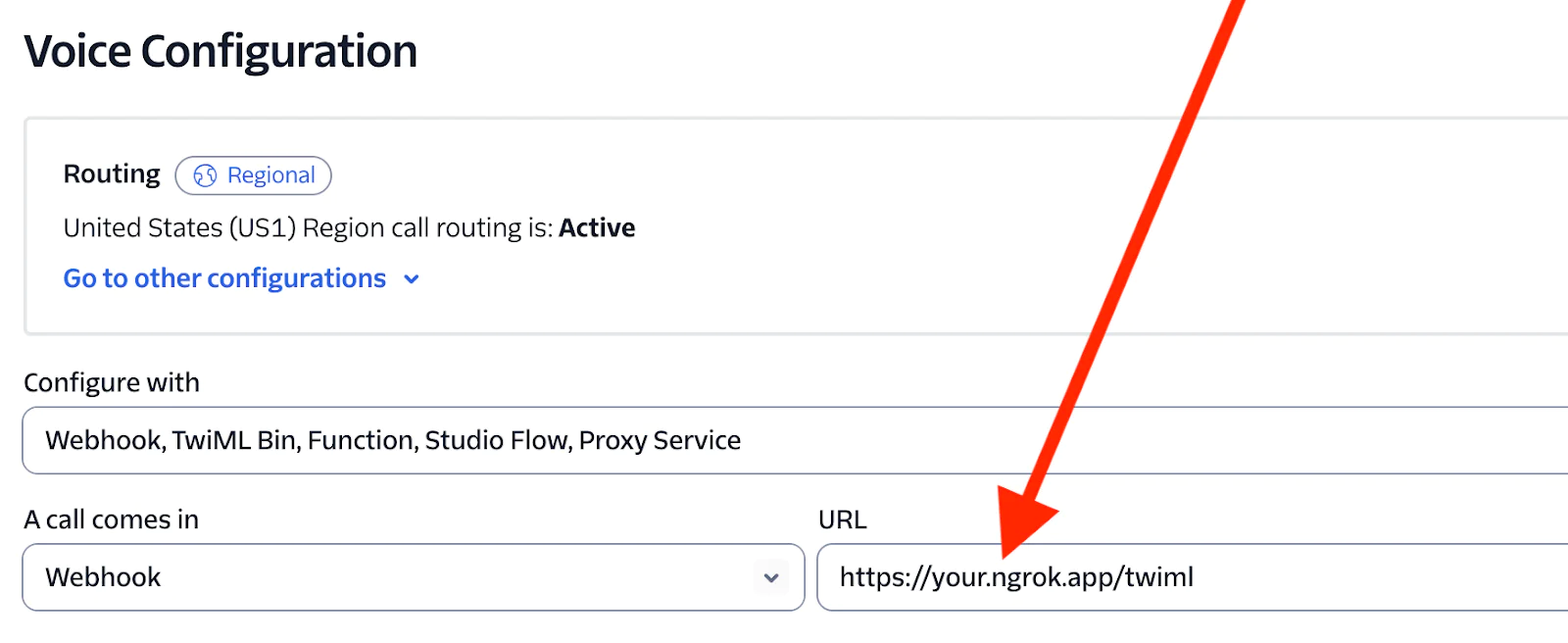

Select Create new TwiML App and enter a friendly name. Then, in Request URL under Voice Configuration, enter the ngrok URL you copied in step 3 with the path /twiml appended. It should look like this:

Enter the following information, and be sure to change these fields for your local setup (and taste):

- Friendly Name: Your Awesome Twilio WebRTC Demo Application

- Voice Configuration

  - Request URL: https://<your-ngrok-domain>/twiml

Click Create.

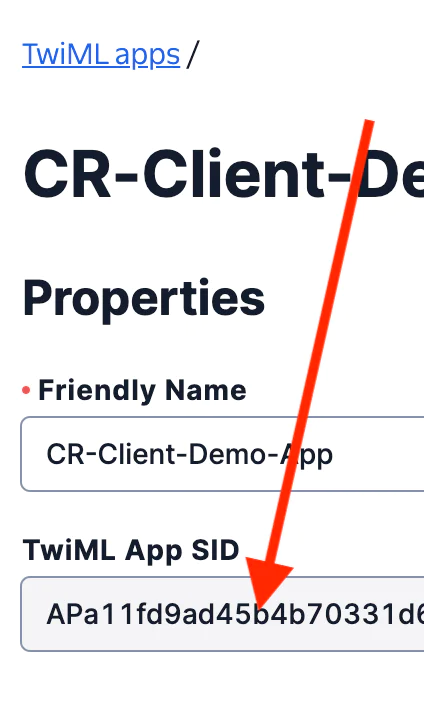

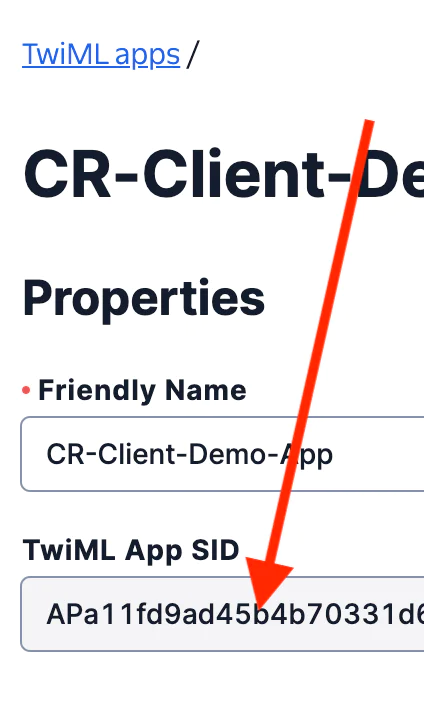

Be sure to copy the TwiML App SID from your newly created TwiML App because you will need it shortly.

Your client application references this TwiML App when placing a call. The TwiML App directs calls placed from your client application (hosted on your local machine) to a URL of your choice. In the case of this app, the destination URL is also on your local machine.

Your local server (the Express.js application) accepts the inbound POST from Twilio and returns the TwiML needed to spin up a ConversationRelay session.
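As a rough illustration of that response, the sketch below builds TwiML that connects the call to ConversationRelay with the `<Connect>` verb and the `<ConversationRelay>` noun. The function names, the `/conversation-relay` WebSocket path, and the greeting text are assumptions made for this example; check the repo for the values the demo actually uses.

```javascript
// Escape characters that are not safe inside an XML attribute value.
function escapeXmlAttribute(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the TwiML returned to Twilio's POST on /twiml.
// `tunnelDomain` is your ngrok domain; the WebSocket path is hypothetical.
function buildConversationRelayTwiml(tunnelDomain, welcomeGreeting) {
  const wsUrl = `wss://${tunnelDomain}/conversation-relay`;
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    "<Response>",
    "  <Connect>",
    `    <ConversationRelay url="${escapeXmlAttribute(wsUrl)}" welcomeGreeting="${escapeXmlAttribute(welcomeGreeting)}" />`,
    "  </Connect>",
    "</Response>",
  ].join("\n");
}

// Example: print the TwiML for a placeholder tunnel domain.
console.log(buildConversationRelayTwiml("example.ngrok.app", "Hello! Ask me anything."));
```

In an Express route you would send this string back with a `text/xml` content type. Once Twilio receives it, Twilio opens a WebSocket to the `url` you supplied and starts relaying the conversation.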

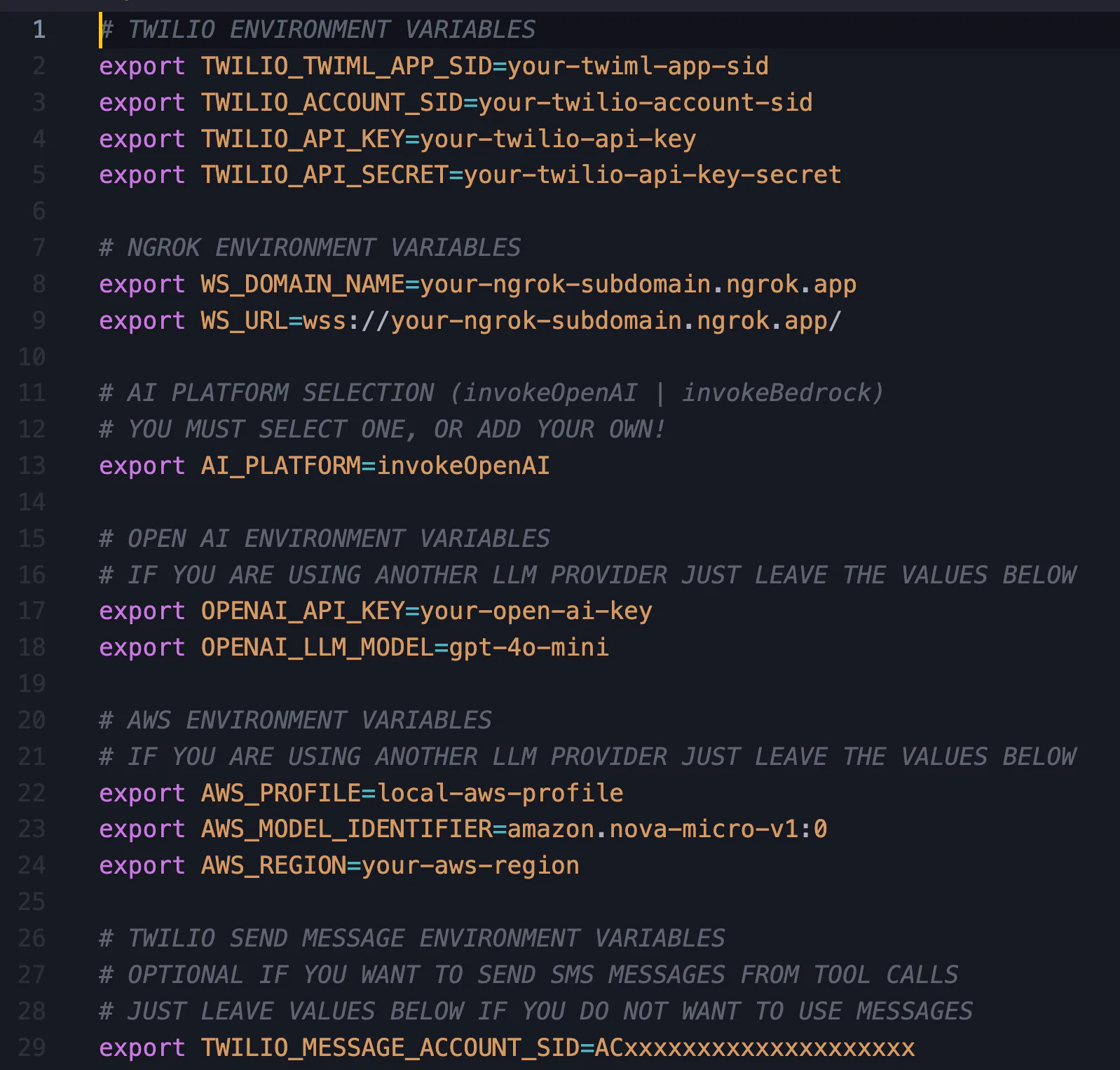

6. Configure your environment variables

All of the environment variables for this Demo Application are stored in a single “shell” script. You need to edit this shell script with your environment variables. Start by doing the following from a command prompt in the root directory of your project.

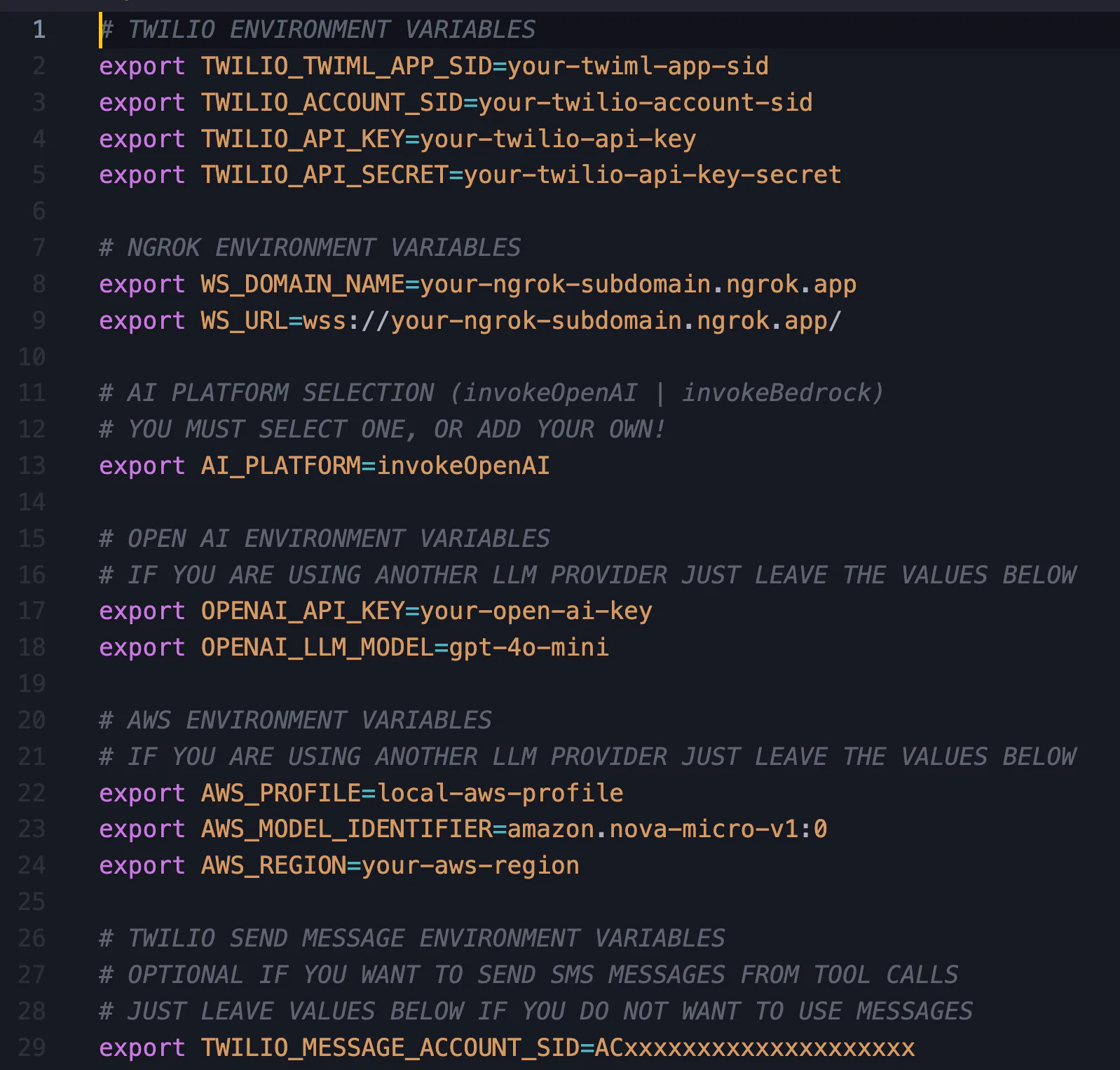

Open the file ‘start-local-server.sh’ and you will see the following:

In the # TWILIO ENVIRONMENT VARIABLES section, enter your Twilio Account SID, the Twilio API Key details from step 4, and the TwiML App SID from step 5.

In the # NGROK ENVIRONMENT VARIABLES section, enter the domain of your ngrok (or similar) tunnel to your local machine.

In the # AI PLATFORM SELECTION section, you can specify whether you want your application to call AWS Bedrock (invokeBedrock) or OpenAI (invokeOpenAI) for LLM processing. Whatever choice you make, you will need to have the necessary account and API credentials for the chosen platform and you will need to fill out the details in the corresponding section: respectively, the OPENAI ENVIRONMENT VARIABLES for OpenAI, and AWS ENVIRONMENT VARIABLES for AWS Bedrock. You can leave the default values for the AI Platform that you do not choose.
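If you are curious how a setting like this might be consumed on the server, here is a small sketch that maps the configured value to a handler and fails fast on a typo. The values `invokeOpenAI` and `invokeBedrock` come from the script; the variable name `AI_PLATFORM`, the function `selectLlmHandler`, and the placeholder handlers are assumptions made for illustration.

```javascript
// Placeholder handlers; real ones would call the OpenAI API or AWS Bedrock.
const llmHandlers = {
  invokeOpenAI: async (messages) => ({ platform: "openai", messageCount: messages.length }),
  invokeBedrock: async (messages) => ({ platform: "bedrock", messageCount: messages.length }),
};

// Resolve the handler named in the environment.
// AI_PLATFORM is a hypothetical variable name, not necessarily the demo's.
function selectLlmHandler(env = process.env) {
  const name = env.AI_PLATFORM;
  const isKnown = typeof name === "string" && Object.hasOwn(llmHandlers, name);
  if (!isKnown) {
    const options = Object.keys(llmHandlers).join(", ");
    throw new Error(`Unknown AI platform "${name}". Expected one of: ${options}`);
  }
  return llmHandlers[name];
}
```

Checking the value at startup means a misspelled platform name surfaces right away instead of in the middle of your first call.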

The STACK_USE_CASE variable is the default use case. You can change this as needed here – but also know that switching use cases is easy to do once you have the UI.

6a. (Optional) Configure Twilio Messaging and SendGrid environment variables

In the # TWILIO SEND MESSAGE ENVIRONMENT VARIABLES section, optionally enter Twilio account credentials and a phone number that is verified to send SMS messages. This will allow you to send messages (SMS) via tool calls. This could be the same Twilio account as the first variable block. You can add in Messaging Capabilities later if you just want to get up and running.

In the # TWILIO SENDGRID EMAILS ENVIRONMENT VARIABLES section, optionally enter a Twilio SendGrid API Key and verified FROM email address. This will allow you to send emails via tool calls. You can add in Email Capabilities later if you just want to get up and running.

7. Make a copy of the users.json file

The app allows you to set and save settings for your local user. Create a copy of the sample file for your local use.

From the root directory:

8. Start the Server and open a browser

With those settings in place, you are ready to fire it up! Start the server by executing the shell script you edited in step 6.

From the root directory:

Your local server should start up at http://localhost:3000. Let’s start using it!

Start talking to your Voice AI Agents

Phew, you’re done loading the app – now it’s time for the fun part, using it. I’ll walk you through the features you’ve now got at your fingertips (or your ears).

Use your new TALK-TO-AGENT button

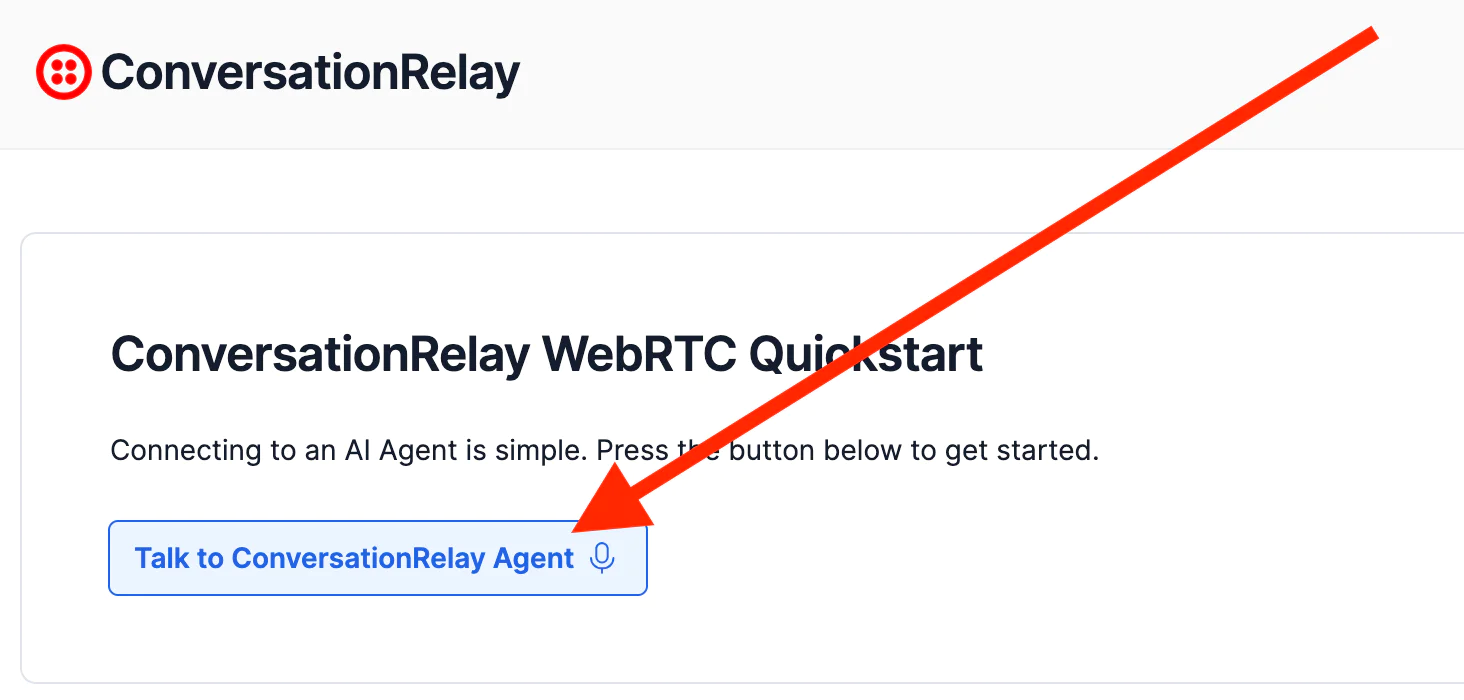

From your web browser pointed to http://localhost:3000, click on the Talk to ConversationRelay Agent button to kick things off!

By default, the application loads an Albert Einstein use case. Try asking a few questions to get a feel for the responsiveness and cadence. You will notice the conversation audio visualized as you and your agents converse. The conversation transcription runs in real time and even shows interruptions, and latency metrics can be toggled on and off.
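Behind the scenes, ConversationRelay talks to your server over a WebSocket using JSON messages such as `setup`, `prompt` (the caller's transcribed speech), and `interrupt`, and your server answers with `text` messages for Twilio to speak. The sketch below shows one way a server could keep a transcript from those messages. The function `handleRelayMessage`, the `session` shape, and the synchronous `generateReply` helper are assumptions made for illustration; a real handler would call the LLM asynchronously and would typically stream tokens.

```javascript
// Handle one JSON message from ConversationRelay and return the replies to send back.
// `generateReply` stands in for your LLM call and is a hypothetical helper.
function handleRelayMessage(rawMessage, session, generateReply) {
  const message = JSON.parse(rawMessage);

  switch (message.type) {
    case "setup":
      // First message on the socket: remember which call this session belongs to.
      session.callSid = message.callSid;
      return [];

    case "prompt": {
      // The caller finished speaking; `voicePrompt` holds the transcribed text.
      session.transcript.push({ role: "user", content: message.voicePrompt });
      const reply = generateReply(session.transcript);
      session.transcript.push({ role: "assistant", content: reply });
      return [{ type: "text", token: reply, last: true }];
    }

    case "interrupt": {
      // The caller talked over the agent; keep only what was actually spoken.
      const lastTurn = session.transcript.at(-1);
      if (lastTurn && lastTurn.role === "assistant") {
        lastTurn.content = message.utteranceUntilInterrupt;
        lastTurn.interrupted = true;
      }
      return [];
    }

    default:
      // Other message types (for example DTMF or errors) are ignored in this sketch.
      return [];
  }
}
```

Trimming the agent's last turn on an interruption keeps the LLM's context aligned with what the caller actually heard.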

Try a different pre-loaded Use Case or build your own.

Talking about Albert Einstein with a voice AI Agent is exhilarating – but you’ll probably want to keep exploring what agents can do when you’re Einsteined out.

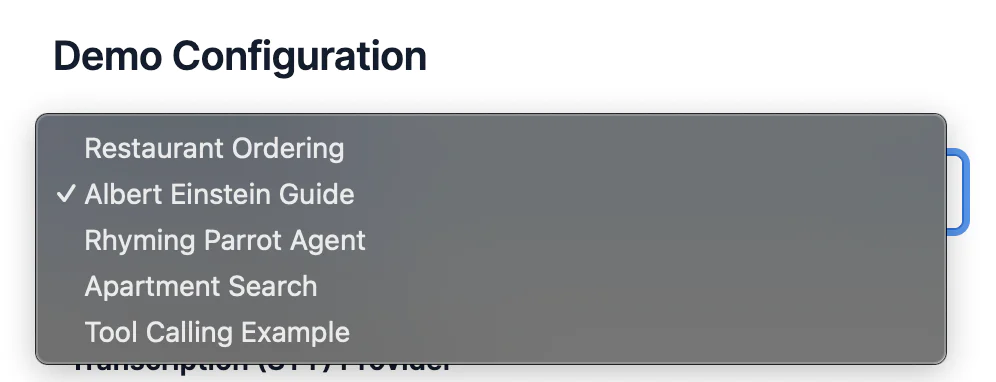

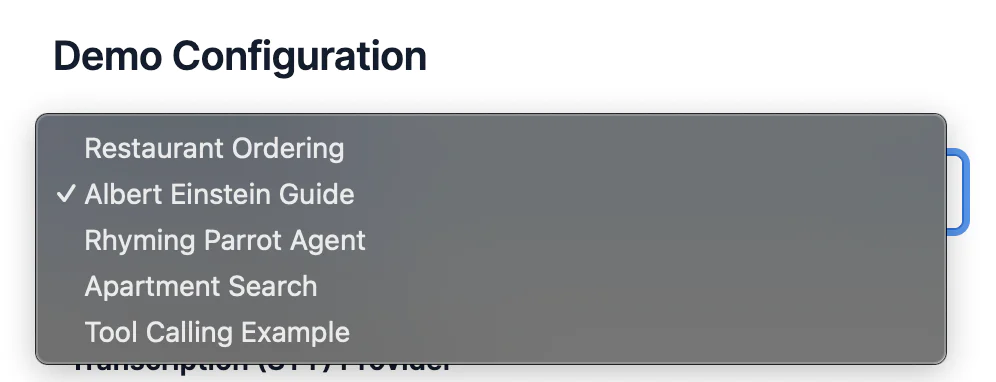

The demo application comes with pre-loaded use cases. From the UI, select a different use case, and save your configuration to launch a different experience:

Click on the Use Cases tab to review the pre-configured use cases and then clone a use case to build your own experience. A working POC is within your reach!

Try different Text-to-Speech Voices

The voices that you choose for your voice AI Applications are a central component of the user experience that your business provides to your customers. ConversationRelay has relationships with top text-to-speech providers and allows you to choose – and you definitely should sample and test many voices to get this element correct.

This application has preloaded English voices across three providers (Google, Amazon, and ElevenLabs). The available voices are increasing rapidly. Refer to the Twilio Docs for the latest voices.

It is straightforward to add or edit the available voices. Open the JSON file located at /data/tts-providers.json and follow the convention to add the voices and languages required for your use cases.

Call your new application using the PSTN

As diagrammed in Image 2 earlier in this blog post, ConversationRelay works with Twilio Voice and you can connect to Twilio Programmable Voice via WebRTC, SIP, and the PSTN.

Here is how to connect to the PSTN.

From the Phone Numbers -> Manage -> Active numbers page in your Twilio Console, select the number that you want to use, set A call comes in to Webhook, and then enter your ngrok URL as shown below:

Give the phone number a call and it will connect to your ConversationRelay app, where you can chat with an AI Agent trained (well, prompted) on the use case you picked!

Note that it will select the default use case – you can add a user in /data/users.json if you want to control the experience for specific phone numbers calling into your application.
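Conceptually, that per-caller behavior is a lookup with a fallback. The sketch below assumes a made-up record shape (`phone` and `useCase` fields) purely for illustration; open /data/users.json to see the fields the demo really uses before adding your own entries.

```javascript
// Pick the use case for an inbound caller, falling back to the default.
// The `phone` and `useCase` field names are hypothetical.
function resolveUseCase(users, callerNumber, defaultUseCase) {
  const match = users.find((user) => user.phone === callerNumber);
  return match?.useCase ?? defaultUseCase;
}

// Example data: one known caller mapped to a custom use case.
const sampleUsers = [{ phone: "+15555550100", useCase: "my-cloned-use-case" }];

console.log(resolveUseCase(sampleUsers, "+15555550100", "default-use-case"));
console.log(resolveUseCase(sampleUsers, "+15555550199", "default-use-case"));
```

The first call prints the caller's custom use case and the second falls back to the default, which mirrors what happens when an unknown number dials in.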

You can create a similar setup using Twilio SIP Domains.

Tool Calling

Agentic and conversational AI applications ultimately need to use tool calling to be able to accomplish real work. Review the code in server/lib/tools to get a sense of how tool calling works in this application.

Note that tools need to be configured in each use case and there are examples that you can follow in the tool-calling-example directory.
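Before you dig into that directory, it may help to see the general pattern in miniature: the LLM names a tool and supplies JSON arguments, and your server looks up a matching handler and runs it. The registry layout, the `sendSms` tool, and the `{ name, arguments }` call shape below are assumptions made for illustration and are not taken from the repo.

```javascript
// Hypothetical tool registry: a description for the LLM plus a handler to run.
const tools = {
  sendSms: {
    description: "Send a text message to the caller",
    // A real handler would call Twilio Messaging; this one only echoes its input.
    handler: async ({ to, body }) => ({ status: "queued", to, body }),
  },
};

// Execute a tool call emitted by the LLM.
// `toolCall.arguments` is assumed to be a JSON string, as many LLM APIs return it.
async function executeToolCall(toolCall, registry = tools) {
  if (!Object.hasOwn(registry, toolCall.name)) {
    return { error: `Unknown tool: ${toolCall.name}` };
  }

  let args;
  try {
    args = JSON.parse(toolCall.arguments);
  } catch {
    return { error: "Tool arguments were not valid JSON" };
  }

  return registry[toolCall.name].handler(args);
}
```

Returning an error object instead of throwing lets you pass the failure back to the LLM, so the agent can apologize or retry rather than leaving the caller in silence.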

ConversationRelay and WebRTC: Not just for JavaScript

This post has focused on adding AI-powered voice agent capabilities to your website, but this same concept applies to mobile applications. Check out our Voice Android and iOS SDKs to accomplish similar functionality in your mobile apps, with the same TwiML application.

Conclusion

In this blog post, we covered how you can build Voice-AI-backed applications using Twilio ConversationRelay and Twilio Programmable Voice to handle the elements that Twilio is good at while leaving the important elements that differentiate your business up to you. You can be confident that your application can be reliably connected to the key voice channels – WebRTC, SIP, and the PSTN – via Twilio’s industry-leading communications platform.

Additional resources

Dan Bartlett has been building web applications since the first dotcom wave. The core principles from those days remain the same but these days you can build cooler things faster. He can be reached at dbartlett [at] twilio.com.

Charlie Avila has been guiding customers to enhanced CX solutions that unlock improved efficiency, cost benefits and customer satisfaction… He can be reached at cavila [at] twilio.com.

Ben Johnstone is a Principal Solutions Engineer at Twilio, based in Toronto. He works with enterprise retail customers to design and deliver scalable communications solutions, drawing on experience in cloud platforms, contact center technologies and complex system integrations. He can be reached at bjohnstone [at] twilio.com.
