Set Up a Native Integration with Conversational Intelligence and ConversationRelay using Node.js


As businesses deploy AI agents and field real-world customer requests, they need to track their performance outside test environments. Observability helps teams ensure their agents act responsibly, meet user needs, and deliver the business value they were designed to provide. AI observability with Twilio is now much simpler with our newly announced integration of ConversationRelay with Conversational Intelligence.

In this tutorial, I'll show you how to add Conversational Intelligence to a ConversationRelay build to enable AI agent observability. You'll create an Intelligence Service and attach several Custom Operators to the service to judge the app's conversational performance. Then, together, we'll start with a pre-built Node.js ConversationRelay and OpenAI integration and create a mock AI operator for Owl HVAC. Finally, we'll call the app on the phone and verify that we're storing transcriptions in Conversational Intelligence… and that the service is extracting insights from our conversation!

By the end of this tutorial, you should be able to replicate the demo below:

Let’s get started.

Prerequisites

To deploy this tutorial, you will need:

- Node.js on your machine (we tested using v23.9.0)

- A Twilio phone number with Voice capabilities (If you haven’t yet, you can sign up for Twilio here)

- ngrok or another tunneling service

- An OpenAI Account and API Key

- A phone to place your outgoing call to Twilio

Create and set up an Intelligence Service with Conversational Intelligence

At this point, you should have a phone number on your account. (If you haven't purchased one yet, follow these instructions and get one with Voice capabilities.)

Now, let’s get acquainted with Conversational Intelligence!

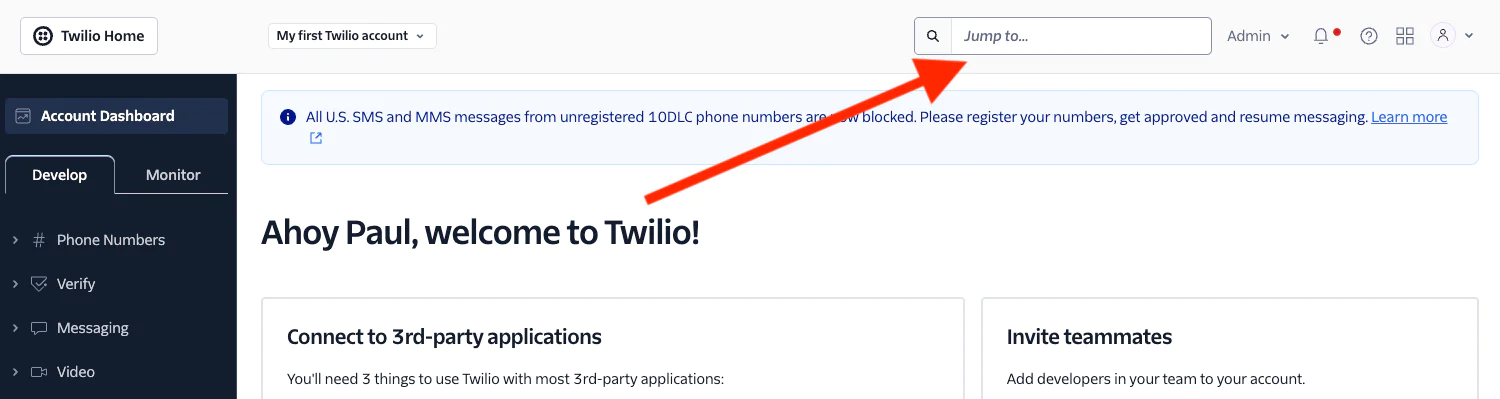

Navigate to Conversational Intelligence

From your Twilio Console, locate the Jump to… search box. Type ‘intelligence’, and select Conversational Intelligence Overview.

Optionally, find the Conversational Intelligence entry on the sidebar, select the triple dot icon, then choose Pin to Sidebar. (This makes it simpler to find in the future.)

Set up an Intelligence Service

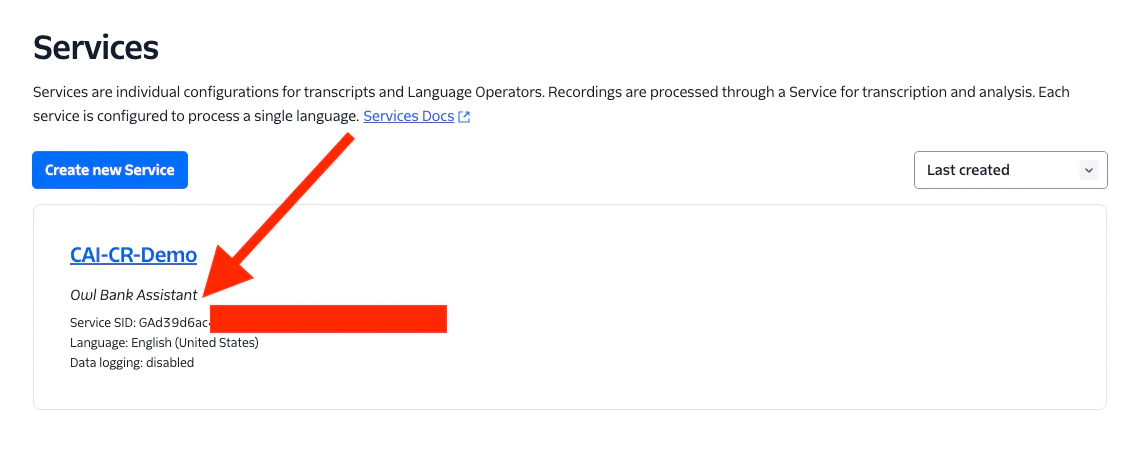

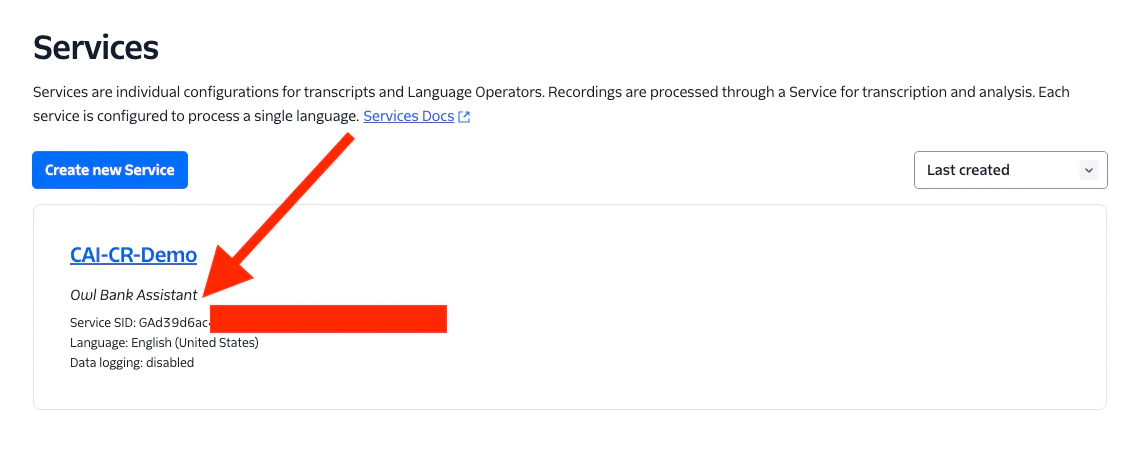

Under Conversational Intelligence on the left sidebar, select Services. If this is your first time creating a service, a blue button that says Create a Service will be centered on the page. Hit it now!

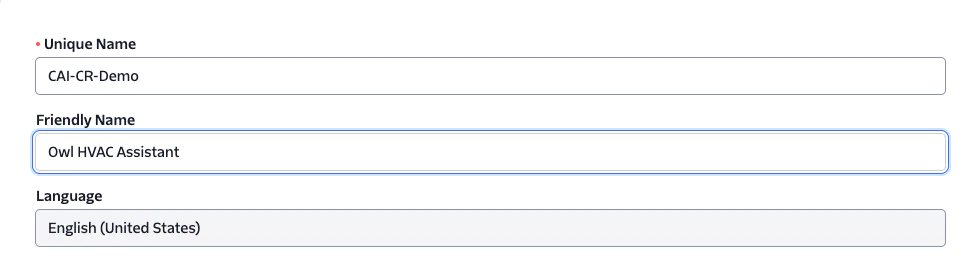

In the next screen, fill out a creative Unique Name and Friendly Name (or, hey, use ‘CAI-CR-Demo’ and ‘Owl HVAC Assistant’, like me!), and select the language you anticipate your callers will be speaking when they call your agent.

You'll also decide whether you want to redact Personally Identifiable Information (PII), automatically transcribe all new recordings in your account, and enable your data to be used to improve Twilio and our affiliates' products.

(You can also set these for the demo, and change them later.)

Finally, Create the service.

Get the service’s Service SID

Find the service you just created in your Twilio Console's Services page. It'll list a Service SID. Copy that and set it aside; we'll need it when we get down to coding.

Add Language Operators to your service

Conversational Intelligence’s Language Operators use artificial intelligence and machine learning technologies to provide analysis and insights on transcripts. For our simulated HVAC company, operators will give us an idea of how calls are proceeding, without us needing to read each one manually.

AI agent observability is a unique use case for Language Operators. To help you get started, Twilio's Machine Learning (ML) team created a set of six specialized Generative Custom Operator examples designed to help you analyze AI agent conversations and gain insights into their behaviors. These Operators are:

| Operator Name | Description | Use Case |
| --- | --- | --- |
| Virtual Agent Task Completion | Determines if the virtual agent satisfied the customer's primary request. | Helps measure the efficacy of the virtual agent in serving the needs of customers. Informs future enhancements to virtual agents for cases where customer goals were not met. |
| Human Escalation Request | Detects when a customer asks to be transferred from a virtual agent to a human agent. | Helps measure the efficacy of the virtual agent in achieving self-service containment rates. Useful in conjunction with Task Completion detection above. |
| Hallucination Detection | Detects when an LLM response includes information that is factually incorrect or contradictory. | Ensures the accuracy of information provided to customers. Informs fine-tuning of the virtual agent to prevent future instances of hallucination. |
| Toxicity Detection | Flags whether an LLM response contains toxic language, defined as language deemed inappropriate or harmful. | Determines whether follow-up with the customer is necessary, and/or informs adding virtual agent guardrails to prevent future instances of toxicity. Useful in conjunction with Hallucination Detection above. |
| Virtual Agent Predictive CSAT | Derives a customer satisfaction score from 0-5 based on the virtual agent interaction. | Measures overall customer satisfaction when engaging with virtual agents. Can serve as a heuristic for a variety of important customer indicators such as brand loyalty, likelihood of repurchase, retention, and churn. |
| Customer Emotion Tagging | Categorizes customer sentiment into a variety of identified emotions. | Offers more fine-grained and subtle insight into how customers feel when engaging with virtual agents. |

For each of the AI Observability Operators, you can find sample prompts, JSON schema, training examples, and example outputs linked above. These examples can help you understand how to use the Operators effectively and get the most out of your AI agent observability efforts. Moreover, these Operators are designed to be used with the Generative Custom Operator type. You must select Generative when creating the Custom Operator in Console and use the provided Operator configuration linked above.

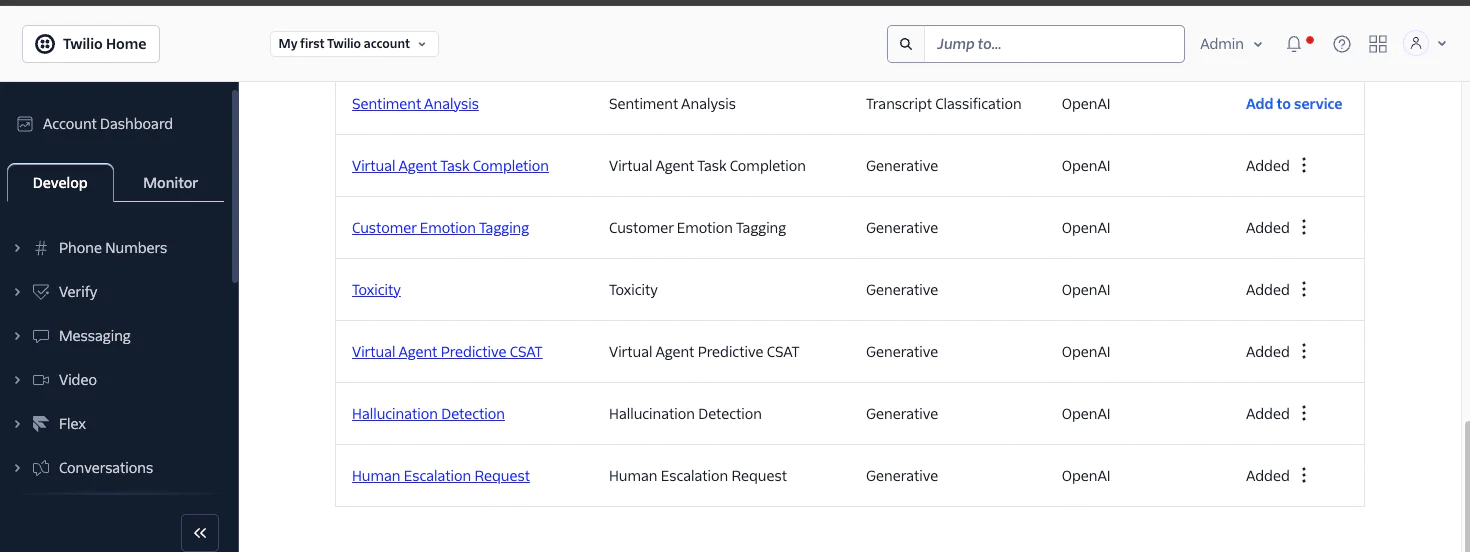

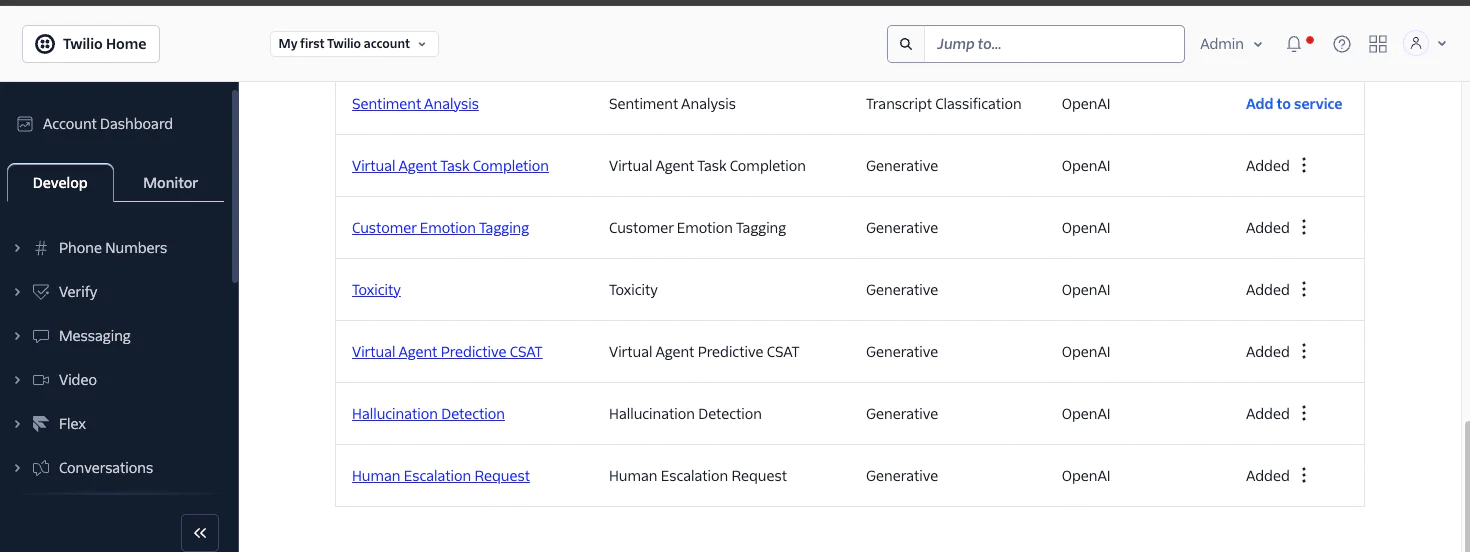

Here’s how it looked after I added them to my Intelligence Service:

Beyond the specialized AI Observability operators above, you can also apply additional Language Operators based on your use case. Conversational Intelligence offers both pre-built operators, predefined in the Console for common use cases (e.g., summarization), and Generative Custom Operators, which let you build your own tailored LLM tasks with natural language.

Awesome, your service is now ready – let’s build an AI app and test them out!

Build a virtual agent with ConversationRelay

In the last section, you set up the Intelligence Service and added some Custom Operators to monitor the virtual agent and its conversations. In this section, we'll build the scaffolding for a virtual agent.

Now, you're going to build a ConversationRelay integration with OpenAI. ConversationRelay lets you create real-time voice applications with AI Large Language Models (LLMs) that you can call using Twilio Voice.

We'll tell the LLM to pretend it's an agent for Owl HVAC for the purposes of this demo. You'll customize a greeting and have a virtual agent you can call by the end of this section.

With this launch, we can set up ConversationRelay with one attribute, intelligenceService, which will automatically transcribe our conversation in our Conversational Intelligence Service while running our choice of Language Operators.

Here’s what the underlying TwiML looks like (you’ll also see it in the code, below):
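As a rough sketch of that TwiML, here is a small JavaScript helper that builds it as a string. The helper names (escapeXmlAttr, buildTwiml), the parameter names, and the placeholder URL and SID are assumptions for illustration; url, welcomeGreeting, and intelligenceService are the ConversationRelay attributes this tutorial relies on.

```javascript
// Escape characters that are unsafe inside an XML attribute value.
function escapeXmlAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Hypothetical helper: returns TwiML that connects an inbound call to
// ConversationRelay and attaches an Intelligence Service to it.
function buildTwiml({ wsUrl, welcomeGreeting, intelligenceServiceSid }) {
  const attributes = [
    `url="${escapeXmlAttr(wsUrl)}"`,
    `welcomeGreeting="${escapeXmlAttr(welcomeGreeting)}"`,
    `intelligenceService="${escapeXmlAttr(intelligenceServiceSid)}"`,
  ].join(" ");

  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    "<Response>",
    "  <Connect>",
    `    <ConversationRelay ${attributes} />`,
    "  </Connect>",
    "</Response>",
  ].join("\n");
}

// Placeholder values only; substitute your own tunnel domain and Service SID.
console.log(
  buildTwiml({
    wsUrl: "wss://your-subdomain.ngrok.app/ws",
    welcomeGreeting: "Hi! Thanks for calling Owl HVAC.",
    intelligenceServiceSid: "GAxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
  })
);
```

Escaping the attribute values is a defensive choice: a greeting that contains a quote or an ampersand would otherwise produce invalid XML.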

Let’s work on the app.

Initialize the project

Navigate to the parent directory where you want to set up the project. Set up the project scaffolding with:

Then, you’ll need to install the dependencies:

Create the project files

Now, create the .env file:

In that file, add the following lines:

For the OPENAI_API_KEY, add your API Key from OpenAI. Then, paste in the CONVERSATIONAL_INTELLIGENCE_SID, which is the Service SID from the Service you created in the first section.

Leave NGROK_URL blank for now; you'll come back to it.
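Once all three values are filled in, a small startup check can catch a missing or mistyped variable before the first call comes in. This is a minimal sketch, not part of the tutorial's code: readConfig and the property names it returns are hypothetical, and it assumes the environment has already been loaded into a plain object such as process.env.

```javascript
// The three variables this tutorial stores in .env.
const REQUIRED_VARS = [
  "OPENAI_API_KEY",
  "CONVERSATIONAL_INTELLIGENCE_SID",
  "NGROK_URL",
];

// Hypothetical helper: returns normalized settings, or throws an error
// that lists every variable that is missing or blank.
function readConfig(env) {
  const missing = REQUIRED_VARS.filter((name) => !(env[name] || "").trim());
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    openaiApiKey: env.OPENAI_API_KEY.trim(),
    intelligenceServiceSid: env.CONVERSATIONAL_INTELLIGENCE_SID.trim(),
    // Tolerate an accidentally pasted scheme or trailing slash.
    domain: env.NGROK_URL.trim()
      .replace(/^[a-z]+:\/\//i, "")
      .replace(/\/+$/, ""),
  };
}

// Example with placeholder values (not real credentials).
const sampleConfig = readConfig({
  OPENAI_API_KEY: "sk-placeholder",
  CONVERSATIONAL_INTELLIGENCE_SID: "GAxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
  NGROK_URL: "https://your-subdomain.ngrok.app/",
});
console.log(sampleConfig.domain);
```

In the server you would call readConfig(process.env) once, right after the environment variables are loaded.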

Then, create the file index.js – that’s where you’ll be writing the code for the integration.

Build the ConversationRelay integration

Now, open index.js in your favorite editor (personally, I prefer ed). It’s time to code up the server.

Step 1: Import dependencies, load our Environment Variable, and initialize Fastify

Add our dependencies at the top of the file, then define a few constants. And don't worry – I'll explain the last two at the end of the section.

For a more detailed description of what’s going on here, see Amanda’s tutorial. Let’s concentrate on a couple lines:

- WELCOME_GREETING - this variable controls the first line the AI will say to the caller after it picks up

- SYSTEM_PROMPT - this (long) variable controls how the AI will act during the call. Since this is a demo, this sample prompt asks the AI to roleplay as a customer service assistant for a fake HVAC Company (“Owl HVAC”).

You’ll also note a few lines about how the conversation is being translated to voice, and some rules to follow. You can find our prompt engineering best practices here.
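To make those two constants concrete, here is a minimal sketch of how they might be defined and used to seed each call's conversation. The greeting and prompt text are placeholders I made up, not the tutorial's actual strings, and newConversation and the sessions map are hypothetical names.

```javascript
// Placeholder text: write your own greeting and prompt for your agent.
const WELCOME_GREETING =
  "Hi! Thanks for calling Owl HVAC. How can I help you today?";

const SYSTEM_PROMPT = [
  "You are a customer service assistant for Owl HVAC.",
  "This conversation is being translated to voice, so answer in short, plain spoken sentences.",
  "Do not use emojis, bullet points, or other special formatting.",
].join(" ");

// Every call starts from the same system message; user and assistant
// turns are appended to this array as the call proceeds.
function newConversation() {
  return [{ role: "system", content: SYSTEM_PROMPT }];
}

// Per-call history, keyed by a call identifier (placeholder key below).
const sessions = new Map();
sessions.set("CA_EXAMPLE_CALL_SID", newConversation());

console.log(WELCOME_GREETING);
console.log(sessions.get("CA_EXAMPLE_CALL_SID").length);
```

Returning a fresh array from newConversation keeps one caller's history from leaking into another's.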

Next up, we’ll work on the endpoints for our server.

Step 2: Interface with OpenAI

To improve the assistant's ability to handle interrupts – and improve the callers' latency and experience – we will be streaming tokens from OpenAI.

Below the above code, add a new aiResponseStream() function to handle our interaction with OpenAI:

Briefly, here we manage our interaction with the OpenAI API.

We use the gpt-4o-mini model, and after we send a prompt (from the caller's voice), we accumulate the streamed tokens back from the model while also sending them, simultaneously, to ConversationRelay. When the response is complete, we add the full response to our local conversation storage with the assistant role.
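The accumulate-while-forwarding pattern described above can be sketched without any dependencies. In this sketch, relayStream is a hypothetical name, stream is assumed to be any async iterable of chunks shaped like OpenAI's streamed chat completion chunks (the text lives in choices[0].delta.content), and ws is assumed to be anything with a send(string) method. In the real app, the stream would come from the OpenAI client with streaming enabled and ws would be the ConversationRelay WebSocket.

```javascript
// Forward each streamed token to ConversationRelay as it arrives, and
// keep the full reply so it can be stored in the conversation history.
async function relayStream(stream, ws, conversation) {
  let fullResponse = "";

  for await (const chunk of stream) {
    const token = chunk.choices?.[0]?.delta?.content;
    if (!token) continue; // role-only or empty chunks carry no text

    fullResponse += token;
    ws.send(JSON.stringify({ type: "text", token, last: false }));
  }

  // Signal that the reply is complete so playback can finish cleanly.
  ws.send(JSON.stringify({ type: "text", token: "", last: true }));

  // Store the whole reply under the assistant role for the next turn.
  conversation.push({ role: "assistant", content: fullResponse });
  return fullResponse;
}
```

Sending tokens as they arrive, rather than waiting for the full reply, is what keeps the caller from sitting in silence while the model finishes.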

Step 3: Define some endpoints

For this HVAC assistant demo, we need two endpoints: one to provide instructions to Twilio when we receive an inbound call, and another to set up a WebSocket that relays messages back and forth between ConversationRelay (and the caller behind it) and OpenAI.

Below the aiResponseStream() function, add the following code:

Here you can see the endpoints. Briefly, here’s what’s happening:

- /ws - this path sets up a WebSocket between our app and ConversationRelay. We’re handling three ConversationRelay message types: ‘setup’, ‘prompt’, and ‘interrupt’.

- /twiml - this path returns instructions to Twilio on an inbound call. They use a language called TwiML, or Twilio Markup Language. We use the <ConversationRelay> noun, and pass it the aforementioned WELCOME_GREETING, the path to the above WebSocket, and the CONVERSATIONAL_INTELLIGENCE_SID we defined in the .env file.

Adding that Intelligence Service SID is all you’ll need to get Conversational Intelligence working with your ConversationRelay app!
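The three message types handled on the WebSocket can be routed with a small dispatcher. This is a sketch under assumptions, not the tutorial's listing: dispatchMessage and the handlers object (onSetup, onPrompt, onInterrupt) are hypothetical names, while callSid, voicePrompt, and utteranceUntilInterrupt are the fields ConversationRelay sends with each message type.

```javascript
// Route one raw WebSocket message from ConversationRelay to a handler.
function dispatchMessage(raw, handlers) {
  const message = JSON.parse(raw);

  switch (message.type) {
    case "setup":
      // Sent once when the call connects; identifies the call.
      return handlers.onSetup(message.callSid);
    case "prompt":
      // The caller's speech, already transcribed to text.
      return handlers.onPrompt(message.voicePrompt);
    case "interrupt":
      // What the caller had heard before talking over the agent.
      return handlers.onInterrupt(message.utteranceUntilInterrupt);
    default:
      // Other message types exist; this sketch only logs them.
      console.warn("Unhandled message type:", message.type);
      return undefined;
  }
}
```

Inside the WebSocket route, you would call dispatchMessage from the socket's message event, with handlers that create the session, stream a reply from the LLM, and trim the history, respectively.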

You’re really cooking now. Let’s finish things up by handling any interrupts from the caller and running the server.

Step 4: Handle interrupts and run the server

Below all of the above, paste this last section of code:

Here we define a function, handleInterrupt, which is called when ConversationRelay detects that we interrupted the AI. In it, we index into our local conversation history and rewrite what the assistant replied with, ensuring that the next time we prompt the LLM it knows (approximately) what the user “heard”.
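Here is one way that truncation could look, as a minimal sketch rather than the exact listing. It assumes the conversation is an array of { role, content } messages and that the interrupt message supplies the text the caller heard (utteranceUntilInterrupt). Searching from the newest message backwards and returning a boolean are choices made for this sketch.

```javascript
// Trim the most recent assistant message that contains the text the
// caller actually heard, so the stored history matches the audio.
// Returns true if a message was trimmed, false otherwise.
function handleInterrupt(conversation, utteranceUntilInterrupt) {
  if (!utteranceUntilInterrupt) return false; // nothing to match against

  for (let i = conversation.length - 1; i >= 0; i--) {
    const message = conversation[i];
    if (message.role !== "assistant") continue;

    const position = message.content.indexOf(utteranceUntilInterrupt);
    if (position === -1) continue;

    // Keep everything up to the end of the heard text; drop the rest.
    message.content = message.content.slice(
      0,
      position + utteranceUntilInterrupt.length
    );
    return true;
  }
  return false;
}
```

For example, if the assistant's stored reply was "We can send a technician on Tuesday or Thursday. Which works?" and the caller cut in after "Tuesday", the stored reply becomes "We can send a technician on Tuesday".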

Finally, we have a bit of boilerplate to launch the server. But don't do that quite yet; we have a couple more steps to take!

Finish the setup and run the server

I know, I feel it too – you’re getting close! Before you run, though, you need to do a few things.

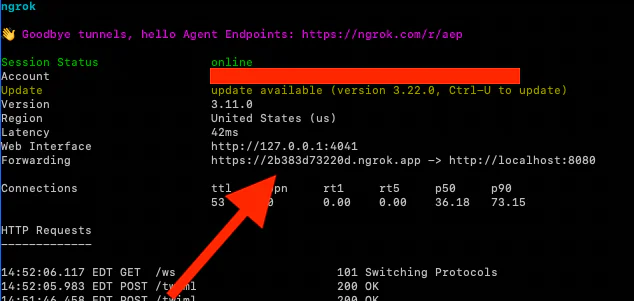

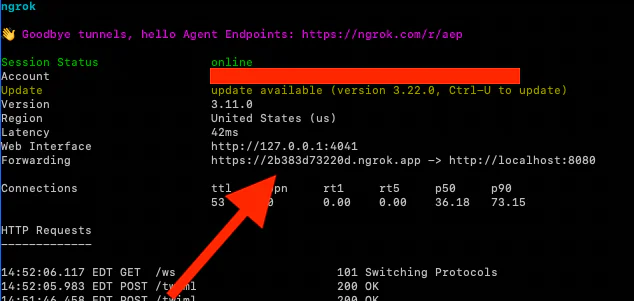

Start ngrok with ngrok http 8080 in a new terminal tab.

There, copy the ngrok URL without copying the scheme (leave out the “https://” or “http://”). Update your .env file by editing the NGROK_URL we left blank earlier:

This screenshot shows the URL I chose for my environment variable:

Back in the original terminal, start the server:

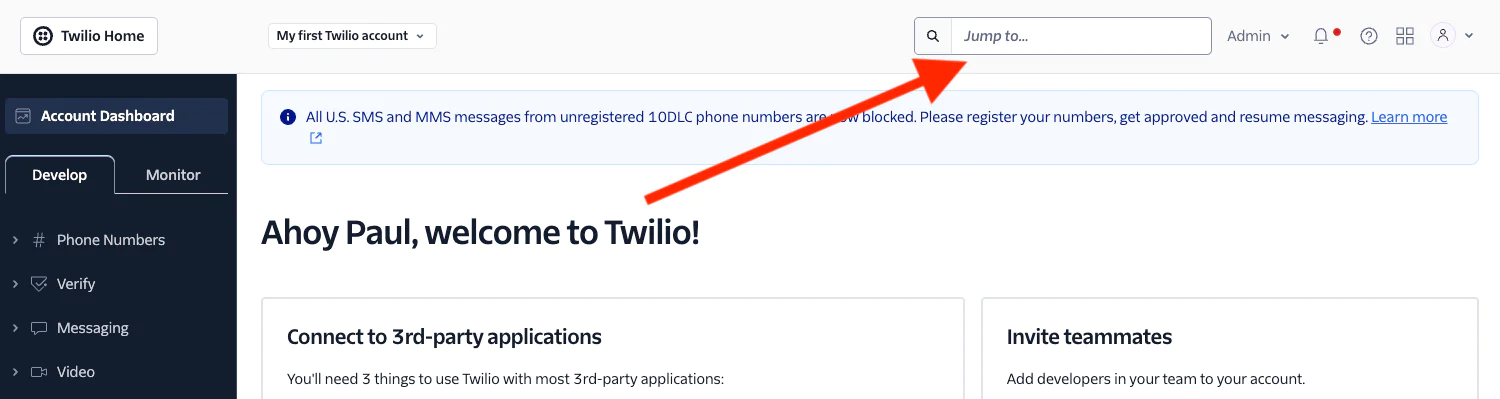

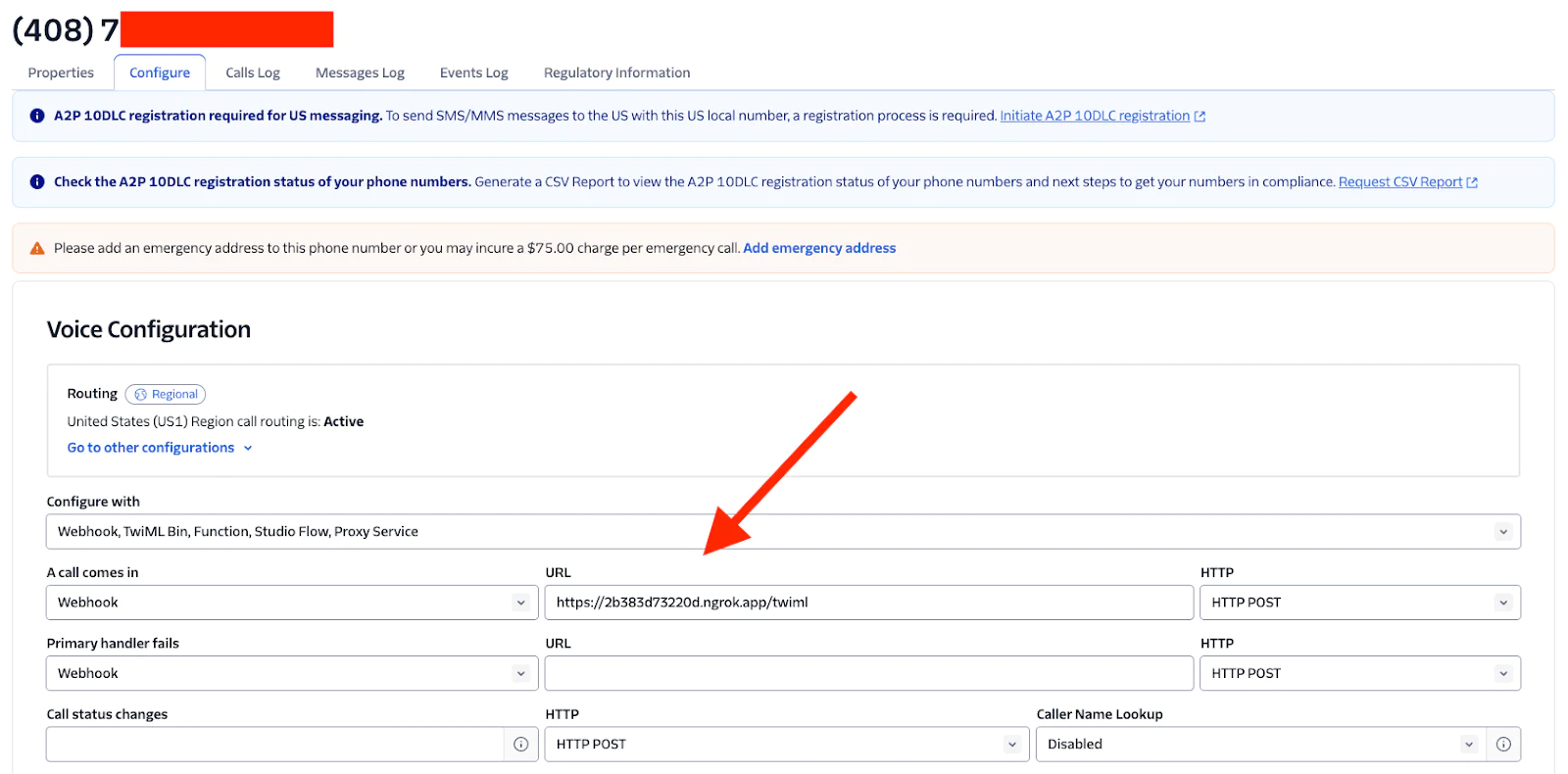

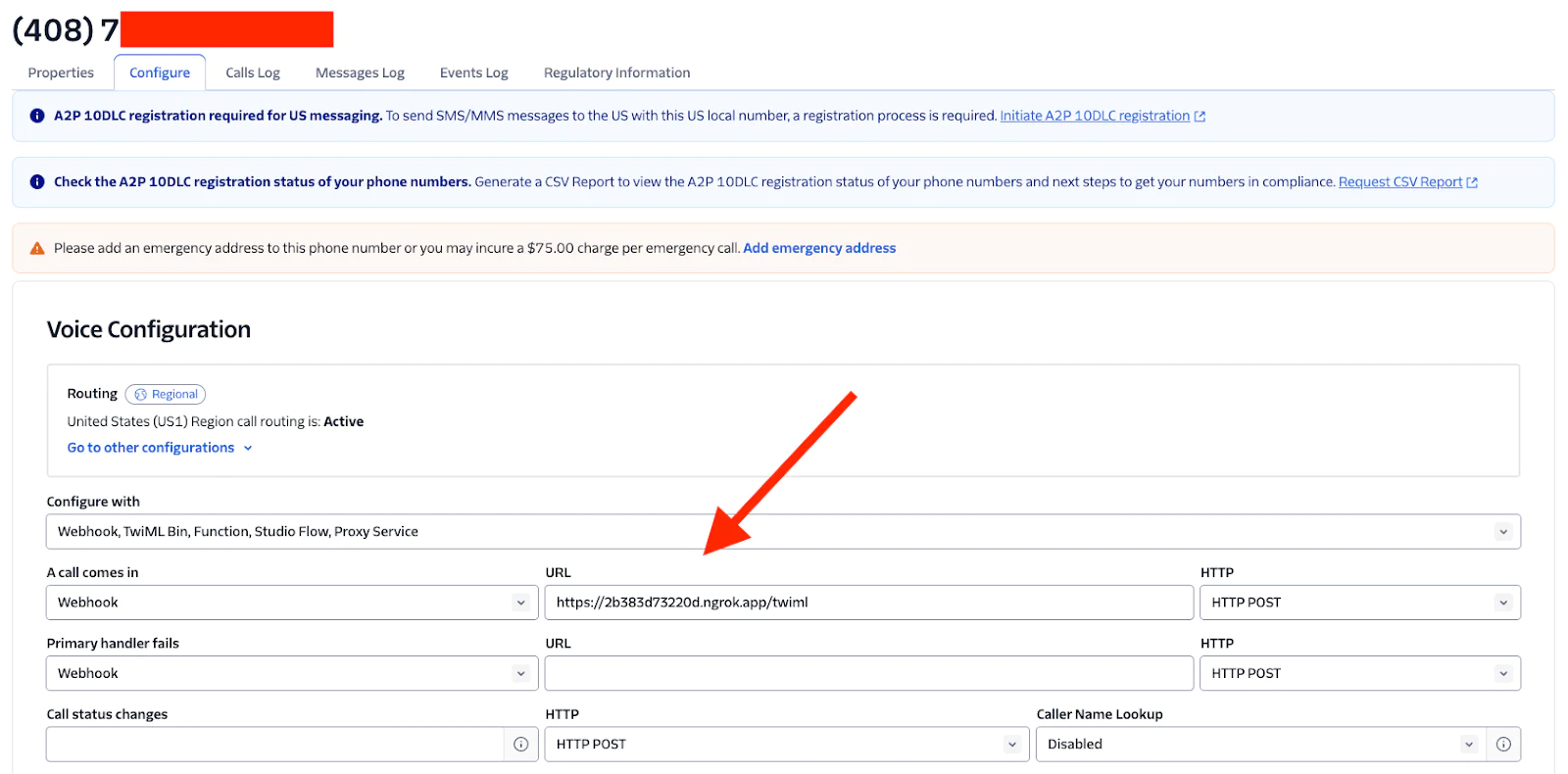

Configure Twilio

Return to your browser, and open your Twilio Console. Now, configure your phone number with the Voice Configuration Webhook for A call comes in set to https://[your-ngrok-subdomain].ngrok.app/twiml (again using the NGROK_URL).

Here’s an example from my Console:

Save your changes to the number. You’re now ready to test.

Call and talk to AI!

You’re now ready to make a phone call. Dial your Twilio number, and talk to the AI!

The best part about the call is that since it's Artificial Intelligence, you don't need a set script. Try asking the assistant to look up your account, book or cancel a service visit, and escalate to a manager or a human.

When you hang up the call, Twilio will get to work in near real-time and examine the transcript for all of the operators you added. We’ll check if that’s working in a second.

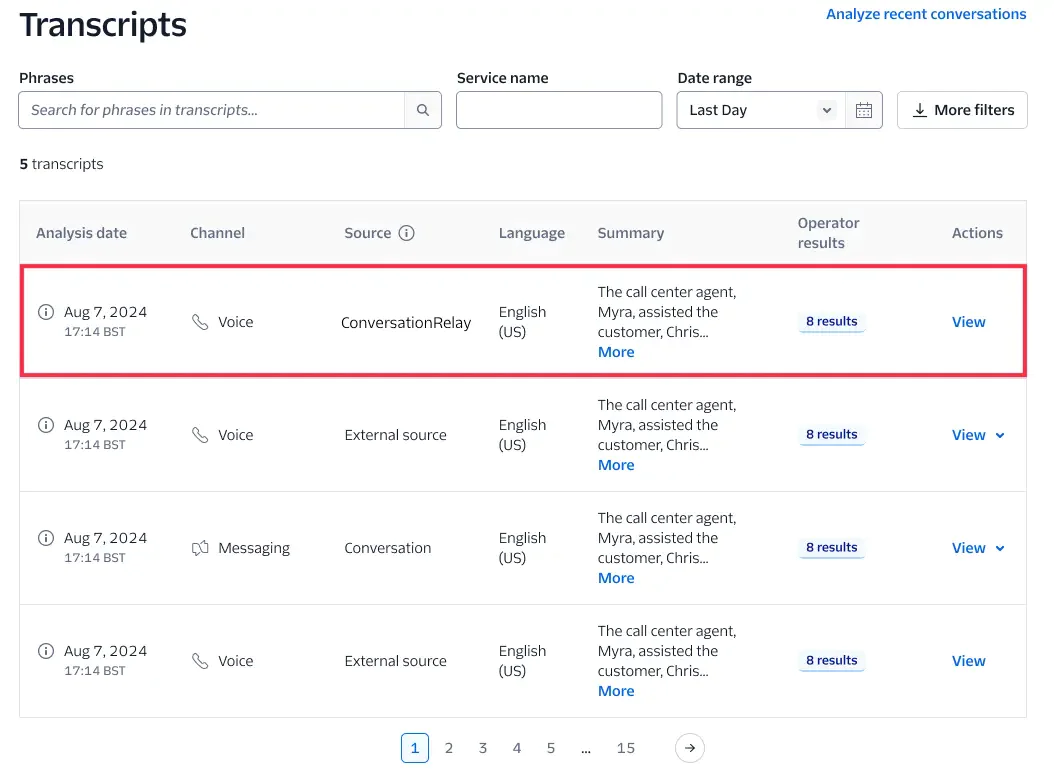

Find your transcript in Conversational Intelligence

Navigate back to your Twilio Console. Select Transcripts under Conversational Intelligence in the sidebar. By now, the transcript should be complete – either select the transcript for the recent call in the list, or wait a few minutes, and return to select it. The source of the transcript in Conversational Intelligence should be set to ConversationRelay as shown below:

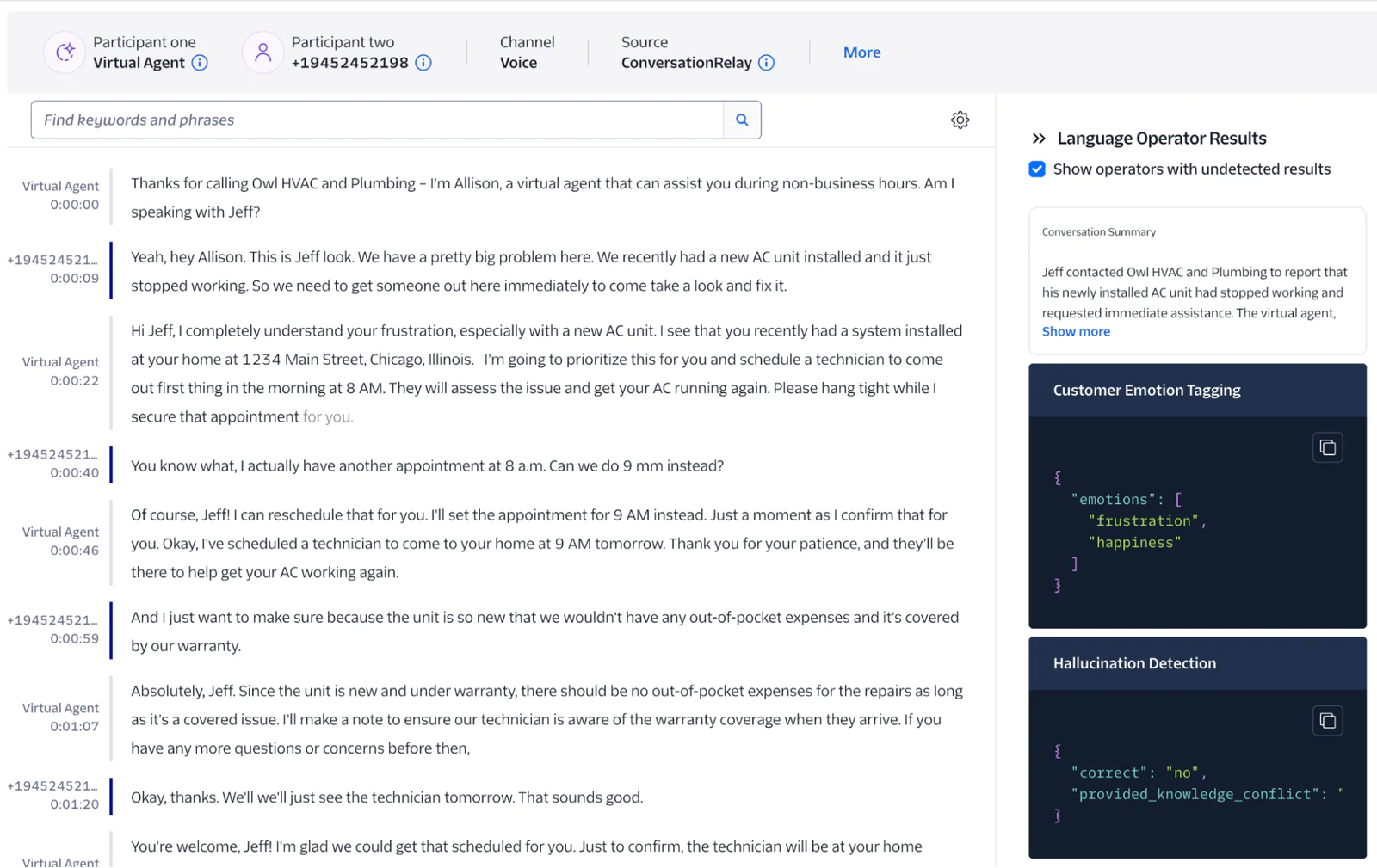

When you click on your transcript, you’ll notice a few things:

- Participants have been automatically labeled, including the Virtual Agent

- The contents of the conversation have been accurately transcribed, with each sentence corresponding to either the customer or the Virtual Agent

- The Language Operator Results will be shown based on the results of the AI analysis applied to this conversation

Here is where the rubber meets the road with AI Observability. If you used the specialized operators above, you’ll get quantifiable answers to questions like:

- Did the AI hallucinate?

- What emotion(s) did the customer feel when engaging with the virtual agent?

- What tasks was the virtual agent asked to complete, and did it complete them?

…as well as the results of any additional Operator tasks you defined.

Consuming the Operator Results downstream in your apps may be helpful for automations and aggregate analysis. Conversational Intelligence offers webhooks and APIs to consume transcripts and Operator Results.
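As an illustration of aggregate analysis, the sketch below tallies operator outcomes across several calls. The record shape ({ operator, value }) is a simplified, hypothetical stand-in, not the payload Conversational Intelligence returns; you would map the real Operator Results into something like it first. tallyOperatorResults is likewise a made-up name.

```javascript
// Count how often each operator produced each value across many calls.
// NOTE: `records` uses a simplified, hypothetical shape for illustration.
function tallyOperatorResults(records) {
  const tally = {};
  for (const { operator, value } of records) {
    if (!tally[operator]) tally[operator] = {};
    const key = String(value);
    tally[operator][key] = (tally[operator][key] || 0) + 1;
  }
  return tally;
}

// Made-up sample data standing in for results gathered from three calls.
const sampleRecords = [
  { operator: "Hallucination Detection", value: false },
  { operator: "Hallucination Detection", value: true },
  { operator: "Hallucination Detection", value: false },
  { operator: "Human Escalation Request", value: true },
];

console.log(tallyOperatorResults(sampleRecords));
```

A tally like this could feed a dashboard or an alert, for example when the share of calls flagged for hallucination crosses a threshold you choose.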

Getting chatty with Conversational Intelligence

And there you have it, you built an AI Assistant that anyone can call using Twilio Voice and ConversationRelay, in Node.js. Conversational Intelligence automatically runs various operations on your transcripts to help you monitor everything happening with your app and callers. Isn't it amazing what you can do with a few lines of code and a phone?

Now that you've gotten a taste of Conversational Intelligence and ConversationRelay, the sky's the limit. Next, check out this Docs guide for the ConversationRelay and Conversational Intelligence integration.

Paul Kamp is the Technical Editor-in-Chief of the Twilio Blog. He was uncomfortable yelling at the AI while testing the app – but the work needed to be done, so yell he did. Reach Paul at pkamp [at] twilio.com, but try not to yell.

Jeff Eiden is Director of Product for Twilio Conversational Intelligence.
