Breaking the Monolith: How We Used the Strangler Fig Pattern to Transform Segment’s Notification Architecture

Notifications infrastructure often represents a critical trust boundary between your platform and your users. When authentication codes fail to arrive or security alerts disappear into the void, the impact extends far beyond technical metrics – it undermines the foundational trust in the system's data integrity and reliability.

This was the challenge we faced with Twilio Segment’s alert and notification services: a monolithic architecture had evolved from a simple alerting system into a complex, tightly-coupled application responsible for millions of daily notifications. What started as an elegant solution had become our most significant reliability risk, where any component failure could cascade into system-wide outages, affecting many product features simultaneously.

Our solution wasn't just to break apart the monolith, but to reimagine alerting as composable, orchestrated workflows in which each function becomes an independent task that can be combined, reused, and managed through a unified orchestration platform. In this post, we’ll show how we broke the monolith apart piece by piece and reworked our notifications architecture with the Strangler Fig pattern, evolving our data model and migrating seamlessly along the way.

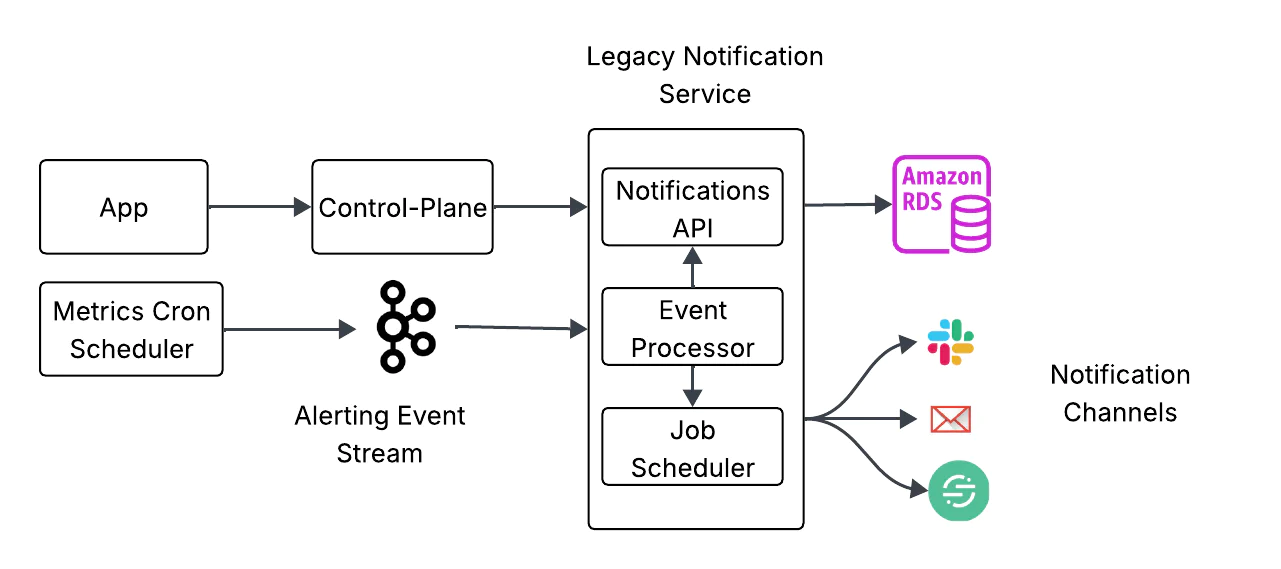

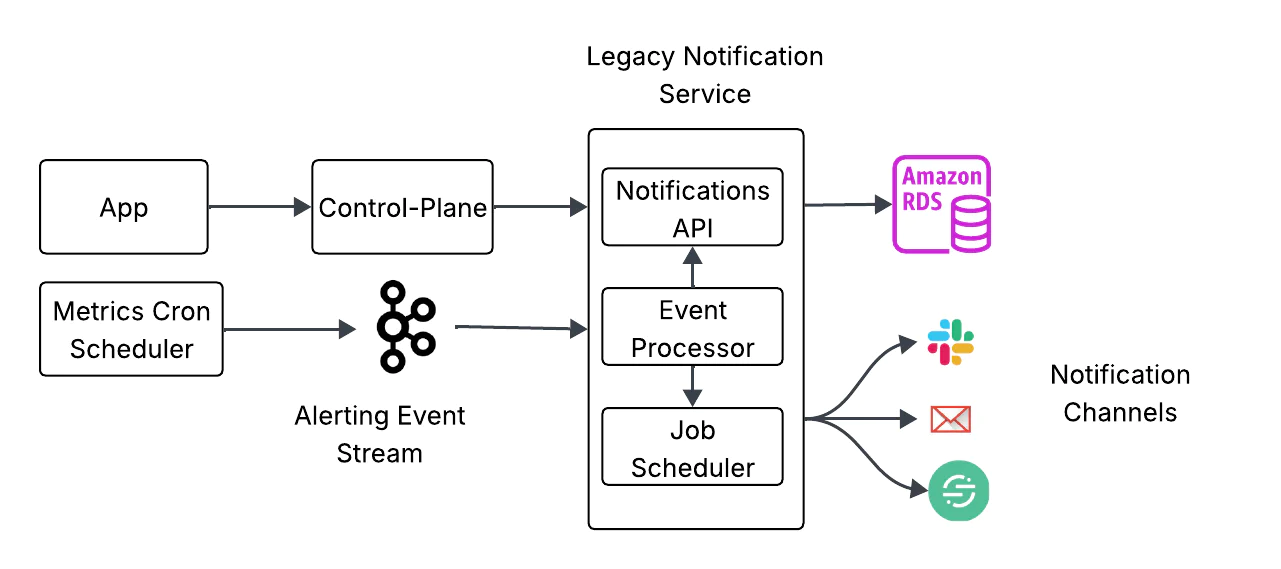

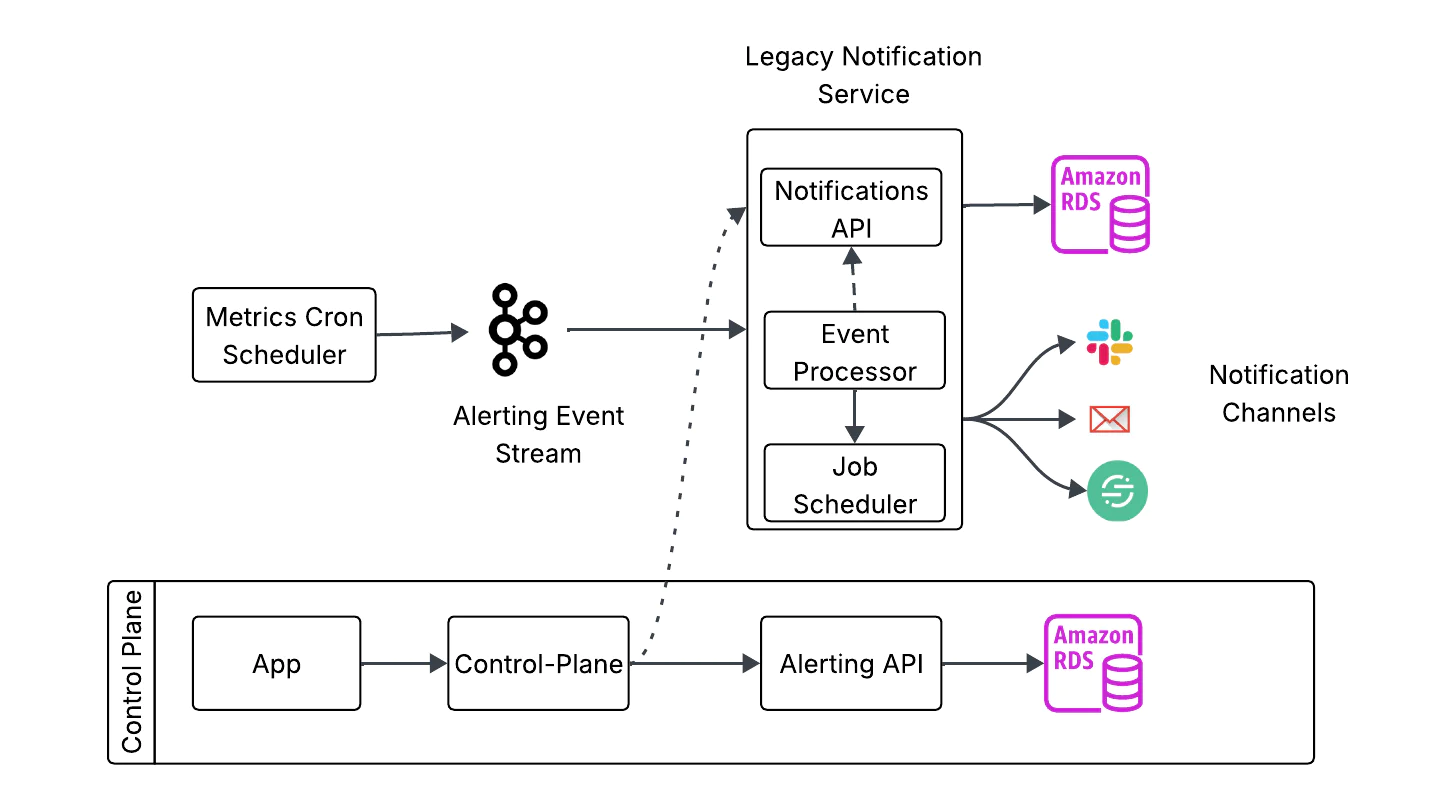

Anatomy of a brittle architecture

Our legacy notification service combined three different responsibilities within a single application:

- API Operations: Manages user preferences and notification configurations

- Event Processing: Processes platform events from Kafka in real time and enriches them for notifications before scheduling delivery

- Job Scheduling: Time-based notification delivery and recurring alert management

While this consolidation initially provided development velocity, it introduced critical architectural interdependencies. These three components shared the same process space, database connections, and failure modes. This meant that an issue in event processing would immediately impact API availability for all users.

Our previously tightly coupled design meant that what should have been isolated slowdowns could sometimes cascade into broader inefficiencies. For example, delays in authentication code delivery intermittently created friction in verification workflows, while infrastructure bottlenecks at times slowed notification processing and impacted the timeliness of alerts. These ripple effects also surfaced in the notifications APIs, where features like in-app alerts experienced temporary degradation. While the specific scenarios differed, the pattern was consistent: strong coupling between components increased the blast radius of issues that would otherwise have remained contained.

Enter the Strangler Fig: API extraction and path to orchestration

Rather than attempting a risky "big bang" rewrite, we chose the Strangler Fig pattern—a migration strategy that gradually replaces legacy systems by building new functionality alongside existing services, slowly redirecting traffic until the original system can be safely retired.

Named after a vine that grows around its host tree and eventually replaces it entirely, this pattern let us incrementally improve system reliability and maintain operational stability while continuing to build product features.

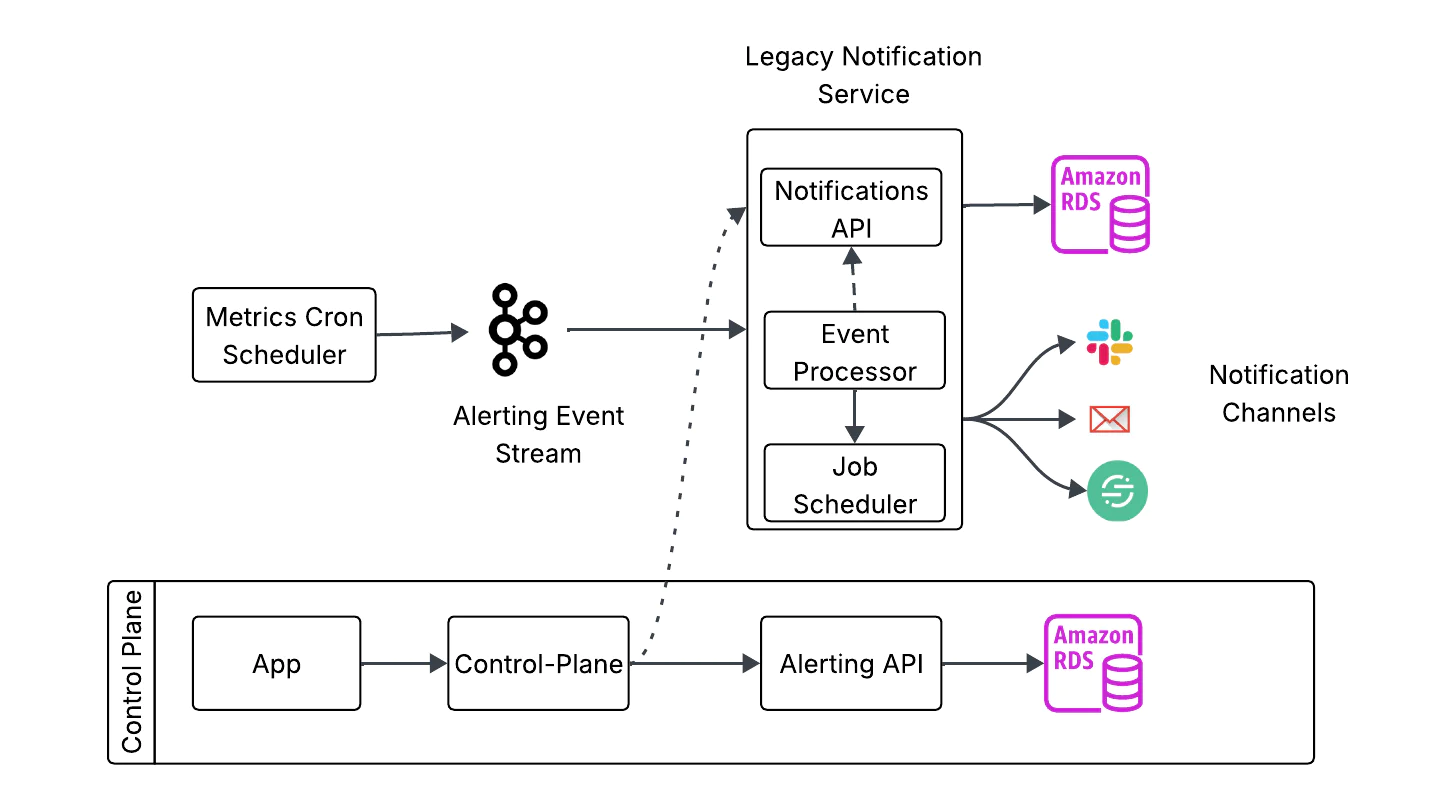

API extraction

We began with the API layer, as it represented our most direct customer impact vector. Our goal was to extract all CRUD (Create, Read, Update, Delete) operations into a standalone service while preserving existing notification delivery mechanisms.

Technical implementation

We built the new Alerting API Service in Golang, and included several key improvements over the old system:

Clean Data Model: We redesigned the database schema with proper normalization and clear entity relationships by moving to a globally unique identifier-based approach. This eliminated the legacy "find or create" patterns that became difficult to reason about, as our entity constraints became more flexible.

Traffic Routing Strategy: Instead of an immediate cutover, we used feature flag-based routing to move reads and writes separately. This allowed for a gradual migration with real-time monitoring and instant rollback capabilities.
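To make the idea of routing reads and writes separately concrete, here is a minimal sketch in Go. It is not our production router: the `RolloutFlags` type, the percentage-based bucketing, and the workspace ID key are all assumptions made for illustration. It only shows how independent read and write flags allow a gradual shift with a simple rollback path.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// Backend identifies which service handles a request.
type Backend string

const (
	Legacy Backend = "legacy-notification-service"
	New    Backend = "alerting-api-service"
)

// RolloutFlags holds hypothetical rollout percentages (0-100).
// Reads and writes are flagged separately so each can migrate on its own.
type RolloutFlags struct {
	ReadPercent  uint32
	WritePercent uint32
}

// bucket maps a workspace ID to a stable value in [0, 100) so the same
// workspace always lands on the same side of a flag.
func bucket(workspaceID string) uint32 {
	h := fnv.New32a()
	h.Write([]byte(workspaceID))
	return h.Sum32() % 100
}

// Route picks a backend for one request. Setting a percentage back to 0
// is the rollback path: all traffic of that kind returns to the legacy service.
func (f RolloutFlags) Route(workspaceID string, isWrite bool) Backend {
	pct := f.ReadPercent
	if isWrite {
		pct = f.WritePercent
	}
	if bucket(workspaceID) < pct {
		return New
	}
	return Legacy
}

func main() {
	// Reads fully migrated, writes not yet started.
	flags := RolloutFlags{ReadPercent: 100, WritePercent: 0}
	fmt.Println(flags.Route("ws_123", false)) // alerting-api-service
	fmt.Println(flags.Route("ws_123", true))  // legacy-notification-service
}
```

Hashing the workspace ID, rather than picking randomly per request, keeps a given workspace on one backend for the duration of a rollout step, which makes monitoring comparisons easier to interpret.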

Dual-Write Pattern: During the transition period, both the legacy notification service and the new alerting service maintained synchronized data through carefully orchestrated dual-write operations, ensuring read consistency while enabling independent validation of the new system.
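A dual-write can be sketched as follows. This is an illustrative outline and not the code we shipped: the `Store` interface, the in-memory `MemStore`, and the choice to treat the first store as the source of truth and record secondary failures for later reconciliation are all assumptions.

```go
package main

import (
	"errors"
	"fmt"
)

// Alert is a simplified, hypothetical notification configuration record.
type Alert struct {
	ID   string
	Name string
}

// Store is the minimal write interface both systems are assumed to expose.
type Store interface {
	Save(a Alert) error
}

// MemStore is an in-memory stand-in for a database-backed store.
type MemStore struct {
	Rows map[string]Alert
	Fail bool // set to true to simulate an outage
}

func (m *MemStore) Save(a Alert) error {
	if m.Fail {
		return errors.New("store unavailable")
	}
	m.Rows[a.ID] = a
	return nil
}

// DualWriter writes to the primary (source of truth) first, then mirrors
// the write to the secondary. A secondary failure is recorded for later
// reconciliation instead of failing the caller's request.
type DualWriter struct {
	Primary   Store
	Secondary Store
	Drift     []string // IDs that still need to be re-synced
}

func (d *DualWriter) Save(a Alert) error {
	if err := d.Primary.Save(a); err != nil {
		return fmt.Errorf("primary write failed: %w", err)
	}
	if err := d.Secondary.Save(a); err != nil {
		d.Drift = append(d.Drift, a.ID)
	}
	return nil
}

func main() {
	legacy := &MemStore{Rows: map[string]Alert{}}
	alerting := &MemStore{Rows: map[string]Alert{}, Fail: true}
	w := &DualWriter{Primary: legacy, Secondary: alerting}

	err := w.Save(Alert{ID: "a1", Name: "source volume drop"})
	fmt.Println(err, len(legacy.Rows), w.Drift) // <nil> 1 [a1]
}
```

Tracking drift explicitly is one way to validate the new system independently: the list of unsynced IDs can drive a backfill or a consistency report before reads are moved over.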

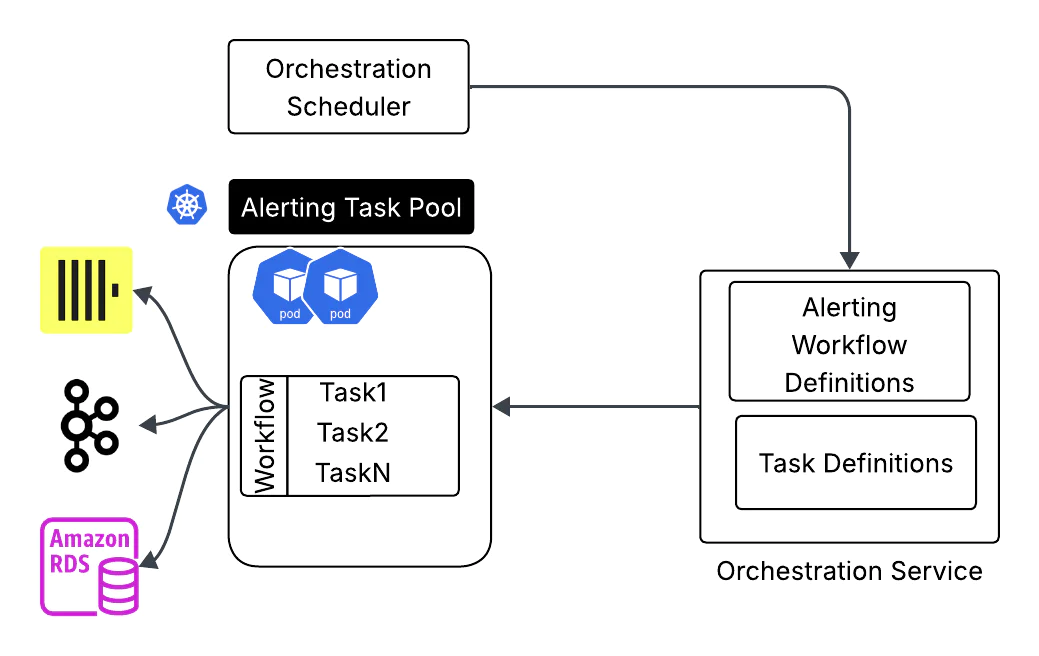

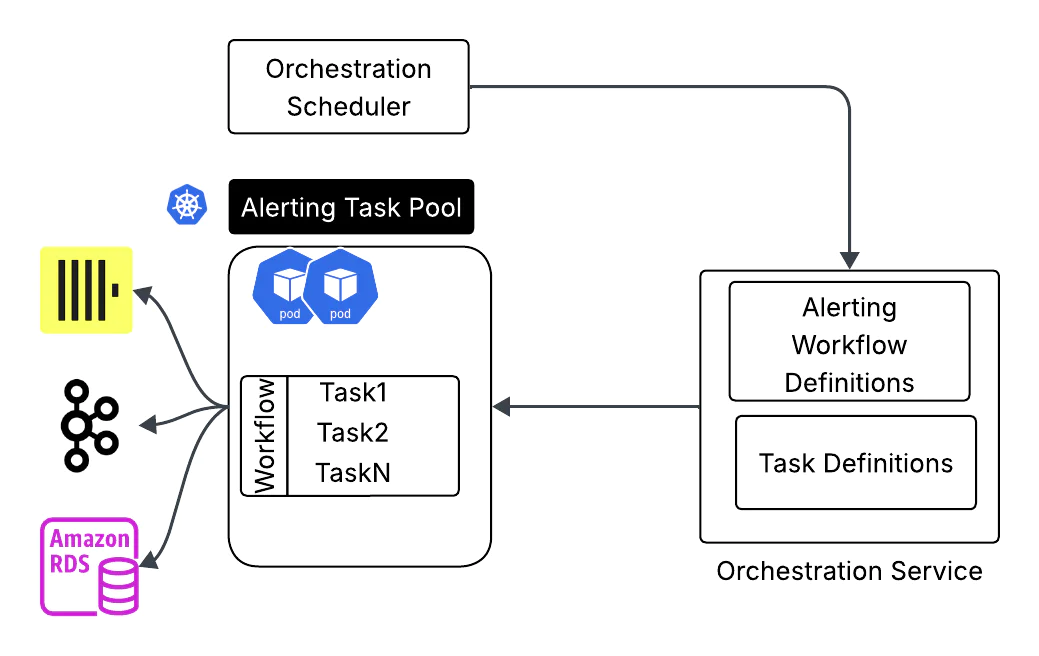

Orchestration transformation: Beyond traditional microservices

For the event producer and processing piece, we decided to move from a cron job scheduler to a modern orchestration platform, Orkes. This is where the magic happened: Orkes helped us transform our existing monolithic event-producing procedures into task-based workflows.

The workflow orchestration approach brought the following benefits to development and operations:

- Lego-Block Modularity: Our engineers rebuilt our notification capabilities as discrete, reusable tasks with standardized interfaces. New alert types are assembled from existing components rather than built from scratch.

- Parallel Development: Multiple teams can work on different notification tasks simultaneously without coordination overhead. Tasks are developed, tested, and deployed independently through the unified task pool.

- Simplified Testing: Each task has clear inputs and outputs, making unit testing straightforward. Integration testing happens at the workflow level.

- Streamlined Alert Management: Pre-built tasks for common functions (subscription lookup, event dispatches) eliminate the need for duplicate development for new alert types.

- Workflow Transparency: A visual view of workflow execution provides real-time visibility into alert processing. Teams can see which tasks are running, which have failed, and where workflows are experiencing delays.

- Reduced Operational Overhead: Instead of managing multiple alert-specific services with different failure modes, operations teams manage one service with consistent operational characteristics.

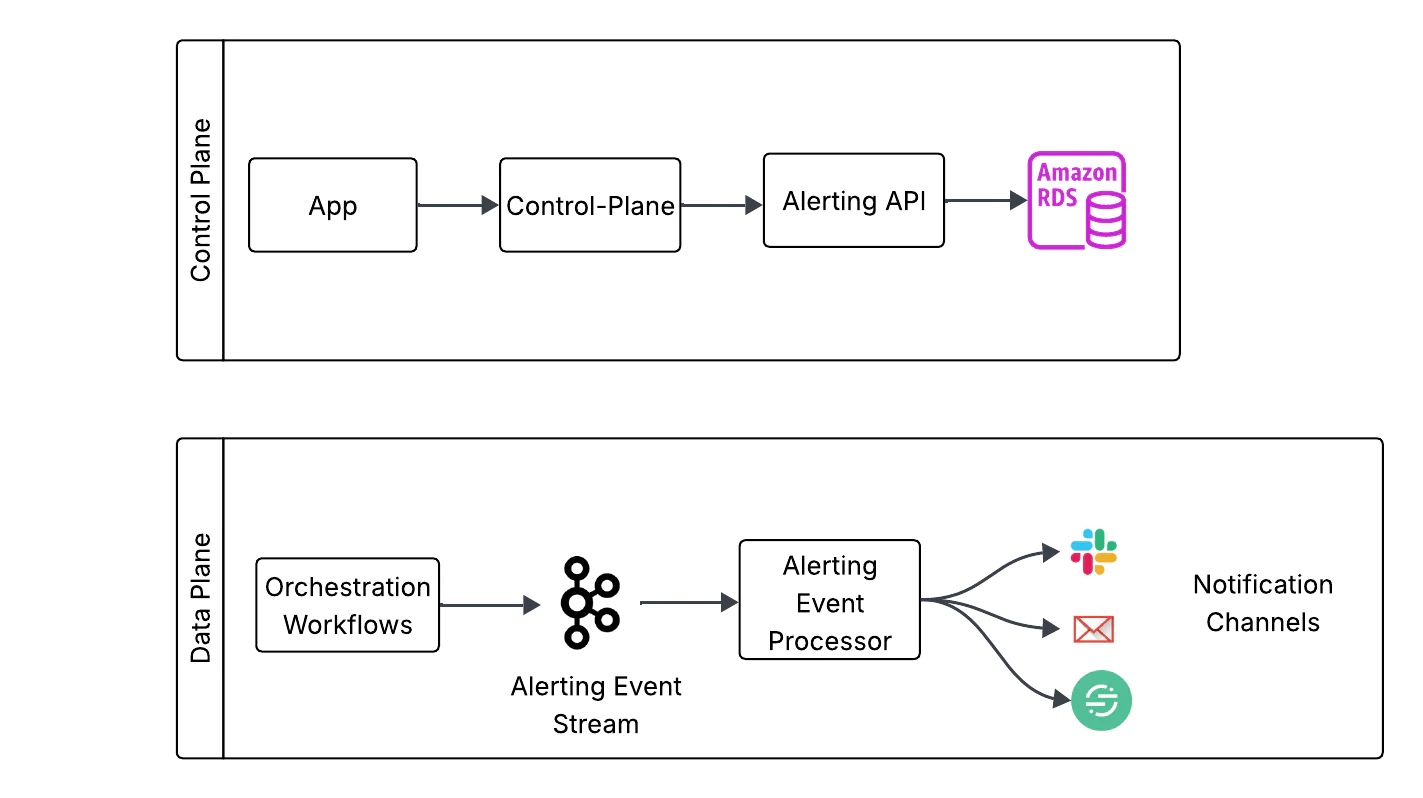

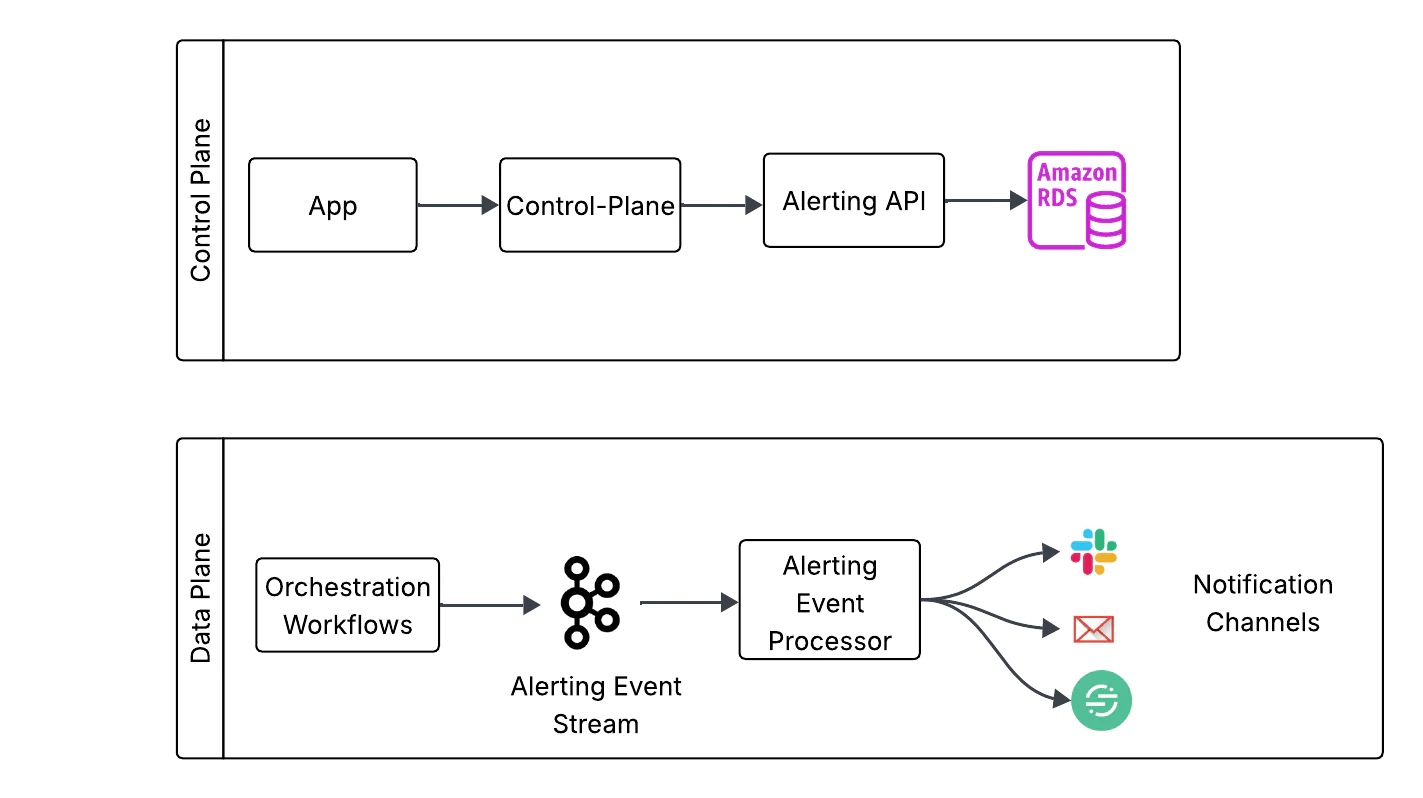

Clear separation of control and data planes

This orchestration-first approach also gave us something we were missing before: a clean separation between the control plane and the data plane. The control plane now owns the what—defining alert rules, thresholds, and configurations—while the data plane owns the how—executing workflows and delivering notifications at scale.

The benefits are clear:

- Resilience: The data plane continues processing events even if the control plane experiences hiccups, thanks to cached state and loose coupling.

- Scalability: Each plane scales independently, so configuration workloads and high-volume event delivery never compete for resources.

- Simplicity: By drawing a sharp boundary between intent (control) and execution (data), teams can evolve one without disrupting the other.

This separation, made possible by orchestration, not only modernized how we process events but also laid the foundation for a more resilient and future-ready notifications platform.
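As a rough illustration of the resilience point, the sketch below shows a data-plane component that keeps serving the last known good rule when the control plane is unreachable. The `Rule`, `ControlPlane`, and `CachedRules` names are hypothetical, and the cache is intentionally simplistic: it has no expiry and is not safe for concurrent use.

```go
package main

import (
	"errors"
	"fmt"
)

// Rule is a hypothetical alert rule owned by the control plane.
type Rule struct {
	AlertID   string
	Threshold float64
}

// ControlPlane is the assumed read interface the data plane depends on.
type ControlPlane interface {
	GetRule(alertID string) (Rule, error)
}

// CachedRules wraps a ControlPlane and remembers the last good rule per alert.
type CachedRules struct {
	Upstream ControlPlane
	cache    map[string]Rule
}

func NewCachedRules(up ControlPlane) *CachedRules {
	return &CachedRules{Upstream: up, cache: map[string]Rule{}}
}

// Get prefers fresh configuration but falls back to the cached copy
// when the control plane returns an error.
func (c *CachedRules) Get(alertID string) (Rule, error) {
	r, err := c.Upstream.GetRule(alertID)
	if err == nil {
		c.cache[alertID] = r
		return r, nil
	}
	if cached, ok := c.cache[alertID]; ok {
		return cached, nil
	}
	return Rule{}, fmt.Errorf("no cached rule for %q: %w", alertID, err)
}

// flakyPlane simulates a control plane that can go down.
type flakyPlane struct {
	down bool
}

func (f *flakyPlane) GetRule(id string) (Rule, error) {
	if f.down {
		return Rule{}, errors.New("control plane unavailable")
	}
	return Rule{AlertID: id, Threshold: 0.2}, nil
}

func main() {
	cp := &flakyPlane{}
	rules := NewCachedRules(cp)

	rules.Get("a1") // warm the cache while the control plane is healthy
	cp.down = true  // simulate a control plane hiccup

	r, err := rules.Get("a1")
	fmt.Println(r.Threshold, err) // 0.2 <nil>
}
```

A production version would need a staleness bound and locking, but the shape is the same: the data plane depends on a narrow read interface and degrades to cached state rather than failing.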

Lessons for successfully implementing the Strangler Fig

Going through this migration gave us a few hard-earned insights we think are useful for any team considering a similar journey:

- Start with customer impact: Prioritize components that touch users most directly. Even though event processing posed the biggest technical hurdles, beginning with the API layer delivered immediate customer value and built organizational confidence in the migration path.

- Favor orchestration over microservices: Instead of managing dozens of standalone services, orchestration platforms let you represent complexity as visual, manageable workflows. This task-based approach preserves modularity without the operational overhead of a pure microservices model.

- Design for reusability: Standardize task interfaces so they can be reused across workflows. That upfront investment pays off as new notification requirements arise, enabling faster delivery with less duplication of effort.

Conclusion

Today, our new alerting and notifications service processes events at scale with predictable performance and clear failure boundaries. It brings together the modularity benefits of microservices with the operational simplicity of a unified platform. What was once a brittle, monolithic system is now a flexible, resilient architecture that supports rapid innovation while maintaining exceptional reliability.

Implementing the Strangler Fig pattern taught us that architectural transformation doesn’t have to be a binary choice between monoliths and microservices. Orchestration platforms enable a third path: task-based composition with unified service management. This approach enables teams to isolate failures, accelerate development, and simplify operations—all simultaneously.

For teams facing similar monolithic challenges, our journey shows that the future of distributed systems isn’t about creating more services—it’s about better composition. If you’re exploring ways to modernize complex systems, consider how orchestration can transform operational complexity into visual, composable workflows that are easier to build, evolve, and trust.

A heartfelt thanks to Amanda Ma, Joel Bandi, Peter Tuhtan, Eric Hyde, and the many engineers across orchestration and partner teams whose support was instrumental throughout this migration.

Modern alerting and notification systems don’t just handle events; they enable teams to move faster, stay reliable, and innovate confidently. To learn more about alerting and observability at Segment, check out the Alerting Hub, Custom Alerts, and Data Observability at Segment.

Rahul Ramakrishna loves tinkering with orchestration and data observability, always looking for ways to make complex systems simpler and more reliable. When he’s not breaking monoliths, he’s usually geeking out over coffee machines and roasting curves.

Connie Chen builds backend systems to make data more useful, and enjoys applying nature metaphors to software. She unravels complex systems by day and keeps knitting projects from unraveling at night.

Lauren Namba has a passion for building delightful experiences for customers and making user-facing applications easy and fun to use. In her spare time, she enjoys running with her pandemic puppy, Sebastian.
