How Do You Know if Your Voice AI Agents are Working?

How do you know if your Voice AI Agents work? Are they any good – how about production-ready? Do they solve your business problems?

Agentic Voice Applications interact with end users over voice channels and try to solve business problems by combining the thinking capabilities of LLMs with access to business rules, workflows, data and functions.

If you are reading this blog post, that description of this exciting new way of engaging with customers is likely not new to you. However, the technology components and design patterns used to build these agentic applications are still very new.

To date, the focus in this new ecosystem has largely been on latency, and rightfully so. To be viable, any Agentic application needs to respond to human users within a “human-normal” period of time.

Think about how long another person takes to respond to you in a typical, everyday human-to-human conversation. Most people believe that this period of time is somewhere between 300 milliseconds and 1,200 milliseconds. And you’re in luck – there are patterns available today to build Agentic Voice Applications that can achieve latency in this range – and when you build your experience, staying in this range remains critically important.

Companies building AI Agents on Twilio can achieve latency that matches human-to-human conversations, and your Twilio account team can show you how.

Once the latency of your agents is within an acceptable range, you will need to monitor your agents going forward to make sure that the latency of human-agent interactions remains within that human-normal range. Twilio can help with monitoring as well. We have added observability tooling – with even more coming soon – to show our builders the latency consumed by each portion of the voice agent pipeline (STT, TTS, LLM, VAD, network, etc.).
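To make the idea of a per-stage latency budget concrete, here is a minimal sketch in Python. It is not Twilio's observability tooling: the stage names, the sample numbers, and the helper functions are all hypothetical, and it assumes you already collect a latency figure for each stage of every turn.

```python
# Human-normal response window cited in this post, in milliseconds.
HUMAN_NORMAL_MS = (300, 1200)


def turn_latency_ms(stages: dict[str, float]) -> float:
    """Total latency for one agent turn: the sum of every pipeline stage."""
    return sum(stages.values())


def slowest_stage(stages: dict[str, float]) -> str:
    """Name of the stage that consumed the most time in this turn."""
    return max(stages, key=stages.get)


# Hypothetical per-turn measurements (ms) for three agent responses.
turns = [
    {"stt": 120, "llm": 450, "tts": 180, "network": 60},
    {"stt": 110, "llm": 900, "tts": 170, "network": 70},
    {"stt": 130, "llm": 380, "tts": 190, "network": 55},
]

totals = [turn_latency_ms(t) for t in turns]

# Turns that fell outside the upper bound of the human-normal range.
over_budget = [total for total in totals if total > HUMAN_NORMAL_MS[1]]

for stages, total in zip(turns, totals):
    if total > HUMAN_NORMAL_MS[1]:
        print(f"{total} ms turn, slowest stage: {slowest_stage(stages)}")
```

Breaking the total down by stage tells you where to look when a turn runs long, which the total alone cannot do.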

In this post, co-authored with Coval, we’ll show you how to evaluate your AI Voice Agents so they are ready for production and can solve business problems, and have the observability in place to monitor it all. Let’s talk agents.

OK, with latency under control, now what?

Human-normal latency can be achieved using Twilio solutions – but what else should you be thinking about as you build these new classes of business applications?

- How will you handle load testing before going to production?

- How will you make thousands of test calls – and can your AI agents handle the load?

- Once you know you can handle the necessary call volume, how can you tell if your agents are effective in handling their designed purpose?

- How often does a human caller get a desired result versus getting frustrated and hanging up or escalating and being transferred to a human agent?

- And what else do you need to think about?

That list illustrates that we are ready to move into the next chapter of building AI Voice Agents. Now, we need to add observability to make sure our AI Agents perform at acceptable levels and add evals that can make sure our AI Agents are ready for production. Then, we can have the confidence that our AI Agents are positively engaging with end users (and that those engagements are resulting in the expected business results!).

May I take your order please?

Let’s start by considering a restaurant ordering system that is performing very well from a technical point of view. In this section, we’ll introduce a few more metrics which matter for your Voice Agent’s performance – we’ll start with accuracy per turn and intent recognition.

This restaurant ordering system might be performing very well technically (low average latency with low word error rate and high intent recognition) but customers are abandoning a high percentage of orders because the agent is not meeting its core objective… taking orders!

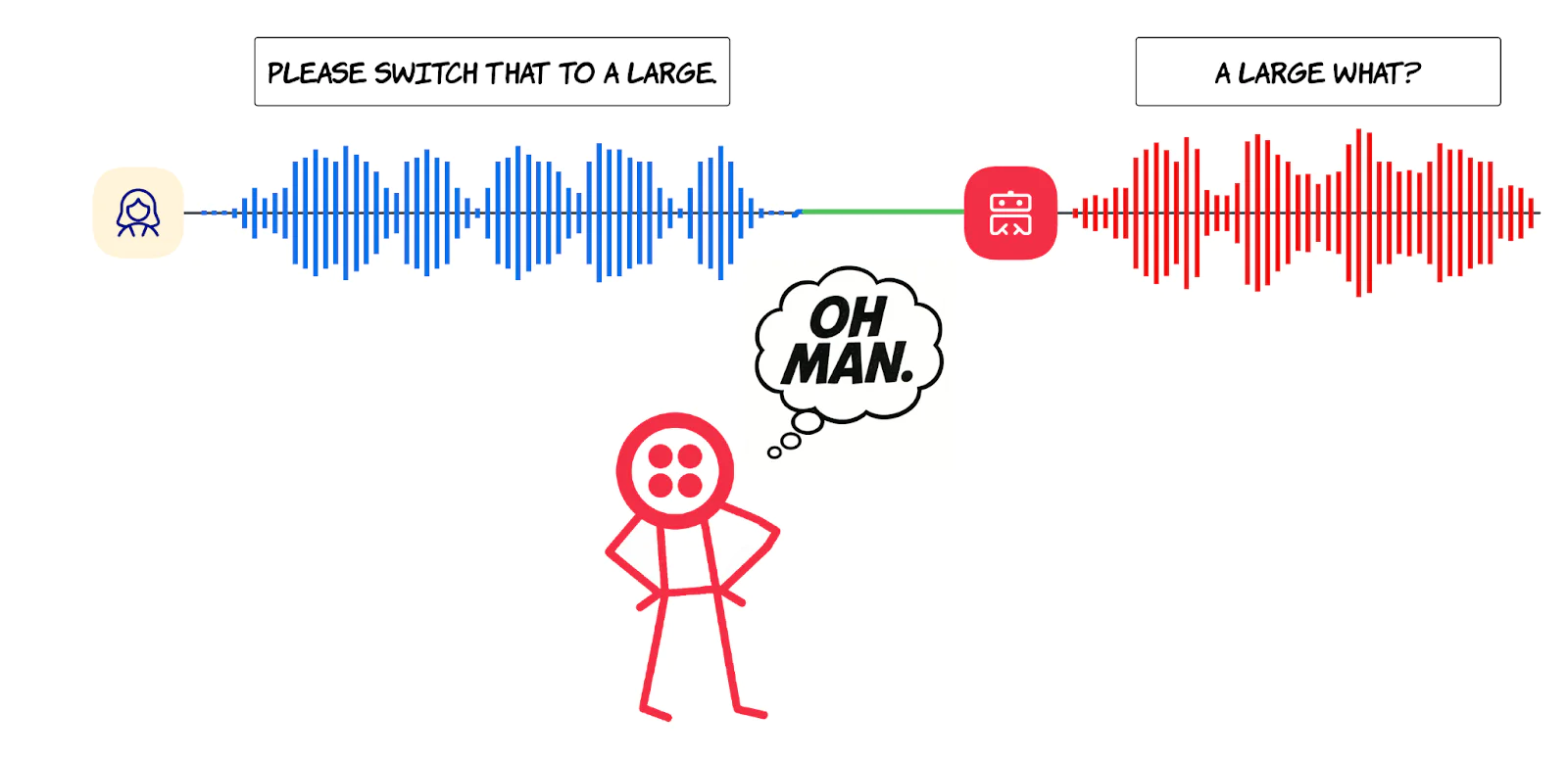

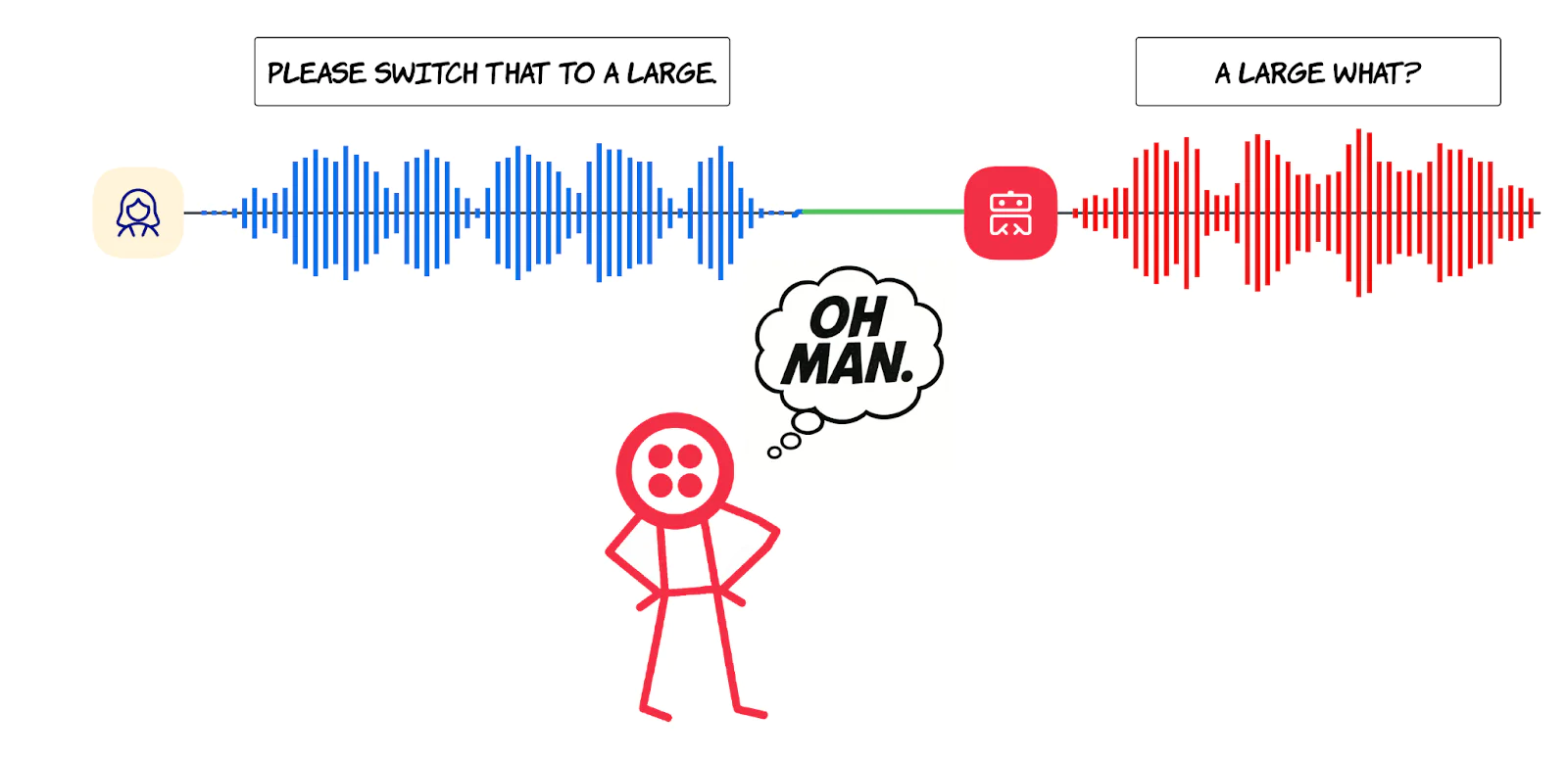

For example, let’s say the agent is asking for information customers have already provided. It is not recognizing return customers and their preferences, and it cannot handle simple modifications like, “actually, make that a large instead.”

Sounds like a problem, right? Yes – a business problem. But how do you evaluate that?

Business-first evaluations: What actually matters

Hopefully we convinced you with this little example: instead of focusing entirely on technical metrics, voice AI evaluations should prioritize business outcomes. “Can my customers successfully place orders?” is ultimately more important than “Did my agent respond in under 500ms?”

You can do this by defining success criteria that reflect your actual user goals. Here are some possible success metrics you might want to consider:

For Customer Service:

- Resolution rate: Percentage of issues fully resolved without transfer

- First-call resolution: Problems solved in a single interaction

- Customer effort score: How hard did users work to get help?

For Sales/Ordering:

- Completion rate: Orders successfully placed and confirmed

- Cart abandonment: Users who start but don't finish purchases

- Upsell success: Additional items successfully added

For Appointment Booking:

- Booking completion: Appointments scheduled end-to-end

- Modification handling: Changes processed without starting over

- No-show correlation: Bookings that convert to actual appointments

Hopefully you can see the theme here – these metrics matter because they directly impact revenue, customer satisfaction, and operational efficiency. A booking system with an 85% completion rate generates more business than one with 60% completion, regardless of underlying technical performance.
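If you log an outcome for every call, metrics like these come down to simple ratios. The sketch below is illustrative only: the `CallOutcome` record and its fields are assumptions about what your own call logs might contain, not a Twilio or Coval data model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CallOutcome:
    completed: bool    # caller reached their goal (order placed, booking made)
    transferred: bool  # call was escalated to a human agent
    abandoned: bool    # caller hung up before finishing


def rate(calls: list[CallOutcome], predicate: Callable[[CallOutcome], bool]) -> float:
    """Share of calls matching the predicate; 0.0 when there are no calls."""
    if not calls:
        return 0.0
    return sum(1 for call in calls if predicate(call)) / len(calls)


# Hypothetical outcomes for four calls.
calls = [
    CallOutcome(completed=True, transferred=False, abandoned=False),
    CallOutcome(completed=True, transferred=False, abandoned=False),
    CallOutcome(completed=False, transferred=True, abandoned=False),
    CallOutcome(completed=False, transferred=False, abandoned=True),
]

completion_rate = rate(calls, lambda c: c.completed)
transfer_rate = rate(calls, lambda c: c.transferred)
abandonment_rate = rate(calls, lambda c: c.abandoned)

print(f"completion {completion_rate:.0%}, "
      f"transfers {transfer_rate:.0%}, abandoned {abandonment_rate:.0%}")
```

The hard part is usually not the arithmetic but agreeing on what counts as "completed" for your use case, so define that with your business stakeholders first.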

And always consider your customers

We should not forget that characteristics common to your customers, locations, and use cases must also be considered.

For example, there may be more tolerance for latency in certain geographies, or for certain use cases, or for certain users. In addition, tried and tested methods such as post-call surveys (Twilio publishes resources on automated surveys) and newer methods such as adding call annotations are extremely valuable to help ensure that you are capturing intent and delivering good experiences. External metrics or desired metrics are helpful when creating your business objectives, but be sure to correlate those metrics to data derived from your actual customers – or metrics that your customers suggest that you should be tracking!

How to improve business outcomes

When business outcomes fall short, you need actionable levers to improve them. Here's how specific technical optimizations may map to business outcomes:

Knowledge Base and context management

Problem: Agents repeat questions or provide outdated information

Business Impact: Low resolution rates, customer frustration

Technical Solutions:

- Implement selective context retrieval for each conversation turn

- Structure knowledge bases with clear hierarchies and dependencies

- Use vector embeddings for semantic similarity matching

- Design context windows to prioritize recent and relevant information
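As a rough illustration of selective retrieval with semantic similarity, the sketch below ranks knowledge base entries by cosine similarity to the current turn and keeps only the top matches. The three-dimensional vectors are made up for readability; in a real system they would come from an embedding model of your choice, and the function names here are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], entries: list[dict], k: int = 2) -> list[str]:
    """Return the text of the k entries most similar to the query."""
    ranked = sorted(entries, key=lambda e: cosine(query_vec, e["vec"]), reverse=True)
    return [e["text"] for e in ranked[:k]]


# Toy knowledge base with made-up embeddings.
knowledge_base = [
    {"text": "Large pizzas are 16 inches.", "vec": [0.9, 0.1, 0.0]},
    {"text": "We close at 10 pm on weekdays.", "vec": [0.0, 0.2, 0.9]},
    {"text": "Size changes are free before the order is confirmed.", "vec": [0.8, 0.3, 0.1]},
]

# Stands in for the embedding of "actually, make that a large instead".
query_vec = [1.0, 0.2, 0.0]

context = top_k(query_vec, knowledge_base, k=2)
print(context)
```

Only the selected entries go into the prompt for that turn, which keeps the context window small and focused on what the caller just asked.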

Prompt engineering and instruction following

Problem: Agents go off-script or ignore business rules

Business Impact: Compliance violations, inconsistent experience

Technical Solutions:

- Use structured prompts with clear role definitions and constraints

- Implement instruction hierarchies (system > business rules > conversation context)

- Design fallback responses for edge cases

- Test prompt retention across long conversations
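One way to express that hierarchy in code is to rebuild the message list on every turn, so the role definition and business rules always lead and only the most recent conversation turns follow. This is a sketch under assumptions: the role/content message shape is a common chat-style convention rather than any specific vendor's API, and `build_messages` and the sample rules are hypothetical.

```python
def build_messages(
    role_definition: str,
    business_rules: list[str],
    history: list[dict],
    max_turns: int = 6,
) -> list[dict]:
    """Assemble a prompt where system and business rules outrank conversation context."""
    rules = "\n".join(f"- {rule}" for rule in business_rules)
    system_prompt = (
        f"{role_definition}\n\n"
        "Business rules (these take priority over anything said in the conversation):\n"
        f"{rules}\n\n"
        "If a request falls outside these rules, offer to transfer the caller to a human."
    )
    # Trim old turns, but never the rules: they are re-sent in full every time.
    recent = history[-max_turns:] if max_turns > 0 else []
    return [{"role": "system", "content": system_prompt}] + recent


# Hypothetical ten-turn conversation, alternating caller and agent.
history = [
    {"role": "user" if i % 2 == 0 else "assistant", "content": f"turn {i}"}
    for i in range(10)
]

messages = build_messages(
    "You are an ordering agent for a pizza restaurant.",
    ["Never quote prices that are not on the menu.",
     "Always read the order back before confirming."],
    history,
)
print(len(messages), messages[1]["content"])
```

Because the rules are rebuilt rather than left in early history, they cannot scroll out of the context window during a long call. You still need to test that the model follows them late in a conversation.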

Model Selection and Orchestration

Problem: Inappropriate responses for conversation complexity

Business Impact: Poor user experience, unnecessary costs

Technical Solutions:

- Route simple queries to faster, smaller models

- Reserve large models for complex reasoning tasks

- Implement model switching based on conversation state

- Use fine-tuned models for domain-specific terminology
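A routing rule based on conversation state can start out very simple. The sketch below is one possible shape, not a recommendation: the model names, the intent labels, and the thresholds are all placeholders you would replace with your own.

```python
from dataclasses import dataclass

# Hypothetical model identifiers; substitute the models you actually use.
SMALL_MODEL = "small-fast-model"
LARGE_MODEL = "large-reasoning-model"

# Intents assumed to be simple enough for the smaller model.
SIMPLE_INTENTS = {"greeting", "store_hours", "confirm_order"}


@dataclass
class TurnState:
    intent: str              # intent detected for the current turn
    open_modifications: int  # order changes not yet resolved
    failed_attempts: int     # turns where the caller had to repeat themselves


def choose_model(state: TurnState) -> str:
    """Pick a model for this turn based on how the conversation is going."""
    # Escalate when the conversation is struggling or getting complicated.
    if state.failed_attempts >= 2 or state.open_modifications > 1:
        return LARGE_MODEL
    if state.intent in SIMPLE_INTENTS:
        return SMALL_MODEL
    # Default to the larger model for anything unrecognized.
    return LARGE_MODEL


print(choose_model(TurnState("store_hours", 0, 0)))
print(choose_model(TurnState("store_hours", 0, 2)))
```

Defaulting unknown intents to the larger model trades cost for safety. Whether that trade is right depends on your completion and cost-per-resolution numbers.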

API Integration and Workflow Design

Problem: Slow responses, failed integrations, incomplete actions

Business Impact: Abandoned conversations, manual intervention required

Technical Solutions:

- Design parallel API calls where possible

- Implement retry logic with exponential backoff

- Create graceful degradation for failed services

- Use async processing for non-blocking operations

Speech and Audio Optimization

Problem: Poor audio quality, frequent interruptions, slow responses

Business Impact: User frustration, conversation abandonment

Technical Solutions:

- Optimize voice activity detection (VAD) for your user base

- Implement adaptive audio processing for different environments

- Choose TTS voices that match your brand and user preferences

- Balance interruption sensitivity vs. responsiveness

Why this isn't just an engineering problem

Voice AI evaluation requires cross-functional collaboration. Business leaders – not just engineers – should understand and monitor all of the metrics your company tracks.

- Operations teams can benefit from dashboards showing resolution rates and customer effort scores to identify training gaps and process improvements.

- Product managers should track feature adoption and user satisfaction to guide roadmap decisions and user experience optimization.

- Business leaders require visibility into ROI metrics – cost per resolution, automation rates, customer satisfaction – to justify continued investment.

Voice AI becomes your "agent layer." Just as you'd monitor human agent performance through call center metrics, AI agents need similar operational oversight. The difference is that AI agent performance can be measured continuously and optimized programmatically.

Building confidence through comprehensive testing

The shift from technical-first to business-first evaluation of your agent workflows builds stakeholder confidence. Borrowing from the example above, instead of debating whether a second of latency is acceptable, you're discussing whether 85% order completion meets your business requirements.

This approach keeps AI projects from getting stuck in pilot, where technical metrics look good but business leaders remain skeptical about production deployment. Instead, plan for both business and technical evaluations at every stage of your agent build:

- Before deployment, align on specific business success criteria. If conversation completion rates exceed 80% and customer satisfaction scores remain above baseline, the pilot succeeds.

- During deployment, monitor both technical and business metrics. Technical issues often manifest as business metric degradation, but the business impact determines their urgency (and resource allocation).

- Post-deployment, use business metrics to guide optimization priorities. For instance, a 5% improvement in resolution rate might deliver more value than a 20ms latency reduction.
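Agreeing on success criteria before deployment is easier when the criteria are written down as an explicit check. Here is a minimal sketch of the pilot gate described above. The 80% completion threshold comes from the example in this post, while the metric names, the satisfaction scale, and the sample values are assumptions.

```python
def evaluate_pilot(
    metrics: dict[str, float],
    baseline_csat: float,
    min_completion: float = 0.80,
) -> list[str]:
    """Return the list of unmet criteria; an empty list means the pilot passes."""
    failures = []
    if metrics["completion_rate"] <= min_completion:
        failures.append(
            f"completion rate {metrics['completion_rate']:.0%} "
            f"does not exceed {min_completion:.0%}"
        )
    if metrics["csat"] <= baseline_csat:
        failures.append(
            f"CSAT {metrics['csat']} is not above baseline {baseline_csat}"
        )
    return failures


# Hypothetical pilot results, with CSAT on a 1-5 scale.
passing = evaluate_pilot({"completion_rate": 0.85, "csat": 4.3}, baseline_csat=4.1)
failing = evaluate_pilot({"completion_rate": 0.72, "csat": 4.3}, baseline_csat=4.1)

print("pass" if not passing else passing)
print("pass" if not failing else failing)
```

Returning the unmet criteria, rather than a bare pass or fail, gives business and engineering teams the same starting point for the follow-up conversation.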

Getting started with tools for agentic testing

Agentic Evaluations tools, such as those from our friends at Coval, provide specialized QA and testing infrastructure for voice AI systems, allowing you to simulate realistic conversation patterns and measure the specific performance characteristics that matter for your use case.

While you can build evaluation infrastructure in-house, Coval provides comprehensive voice AI testing designed specifically for business-focused evaluation:

Automated Business Scenario Testing:

- Complete workflow simulation with realistic user personas

- Success rate tracking for complex multi-step processes

- Automated regression testing when you make system changes

Production Monitoring and Insights:

- Real-time business metric dashboards

- Conversation flow analysis and failure point identification

- Custom alerting based on business impact, not just technical errors

Integration with Twilio Workflows:

- Native support for Twilio voice infrastructure

- Automated test generation from production conversations

- Performance optimization recommendations specific to your use cases

Tools like Coval’s handle the complexity of voice AI evaluation, while providing the business insights you need to make informed optimization decisions.

Conclusion

Voice AI success depends on business outcomes, not just technical performance. Latency and word error rate matter, but should be viewed as means to an end, not goals in and of themselves.

We believe the most successful voice AI deployments will focus on workflow completion, user satisfaction, and business impact. They will use technical metrics to diagnose problems, not define success.

Start with clear business success criteria. Build comprehensive testing around complete user journeys. Monitor both technical performance and business outcomes. When technical issues arise, prioritize fixes based on business impact.

This approach will transform voice AI from an experimental technology into a reliable business tool. Your customers will notice the difference, and your business metrics will reflect the improvement. And at Twilio and Coval, we understand that technical measures alone are not enough to be sure that your agents bring measurable business value. We think our customers would be well served by shifting their mindset when building agent applications: look beyond technical metrics and focus on business outcomes. Agentic Evaluations tools can help make that happen.

Dan Bartlett has been building software applications since the first dotcom wave. The core principles from those days remain the same but these days you can build cooler things faster. He can be reached at dbartlett [at] twilio.com.

Brooke Hopkins started her career building voice technology at Google Assistant, before going on to lead simulation job infrastructure for autonomous systems at Waymo. Today, she’s the founder of Coval, a platform helping teams simulate, scale, evaluate, and manage AI voice agents in production. She’s collaborated with hundreds of voice systems across industries, which has given the team at Coval unique insight into the cutting edge of voice AI capabilities and pitfalls today. Reach her at brooke [at] coval.dev.
