Generating Yugioh Fan Fiction with OpenAI's GPT-3 and Twilio SMS


This post is part of Twilio’s archive and may contain outdated information. We’re always building something new, so be sure to check out our latest posts for the most up-to-date insights.

Fans of all types of media often have fun coming up with alternate stories that take place in the universe of their favorite pieces of fiction. Sometimes, particularly talented writers or artists working on fan fiction even end up being selected to work professionally on the licensed material.

OpenAI's new GPT-3 (Generative Pre-trained Transformer 3) model was trained on a massive corpus of text, which makes it incredibly powerful. It can generate full dialogue between characters from surprisingly little prompt text.

Let's walk through how to create a text-message powered bot to generate fan fiction in Python using Twilio Programmable Messaging and OpenAI's API for GPT-3. During quarantine, I've spent a lot of time listening to the soundtrack for Yugioh! The Eternal Duelist Soul because it is great coding music, so let's use the ridiculous universe of the Yugioh anime as an example.

Before moving on, you'll need the following:

- Python 3.6 or newer. If your operating system does not provide a Python interpreter, you can go to python.org to download an installer.

- A virtual environment enabled before installing any Python libraries.

- A Twilio account and a Twilio phone number, which you can buy here.

- An OpenAI API key. Request beta access here.

- ngrok to give us a publicly accessible URL to our code.

Introduction to GPT-3

GPT-3 (Generative Pre-trained Transformer 3) is a highly advanced language model trained on a very large corpus of text. In spite of its internal complexity, it is surprisingly simple to operate: you feed it some text, and the model generates some more, following a similar style and structure.

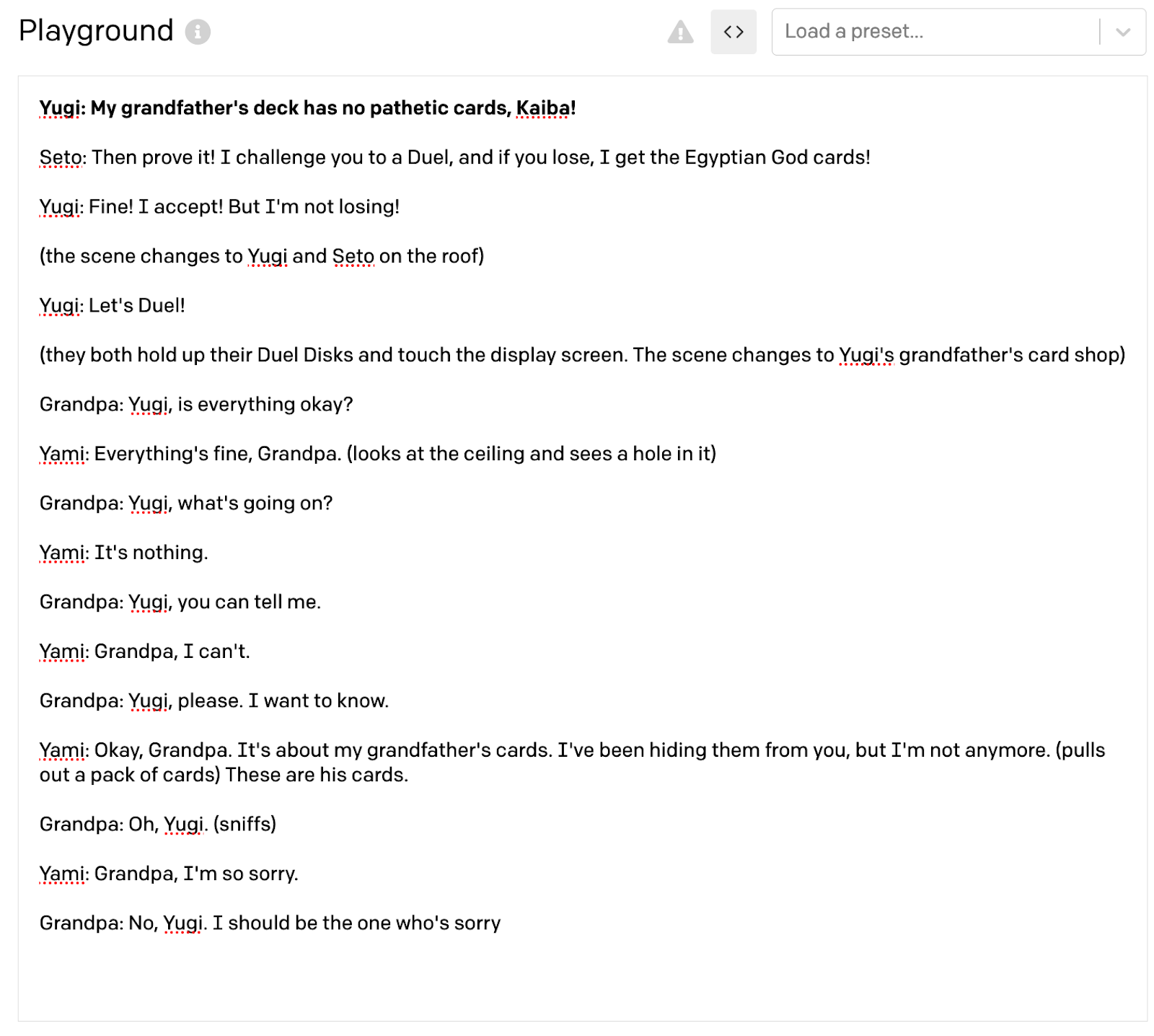

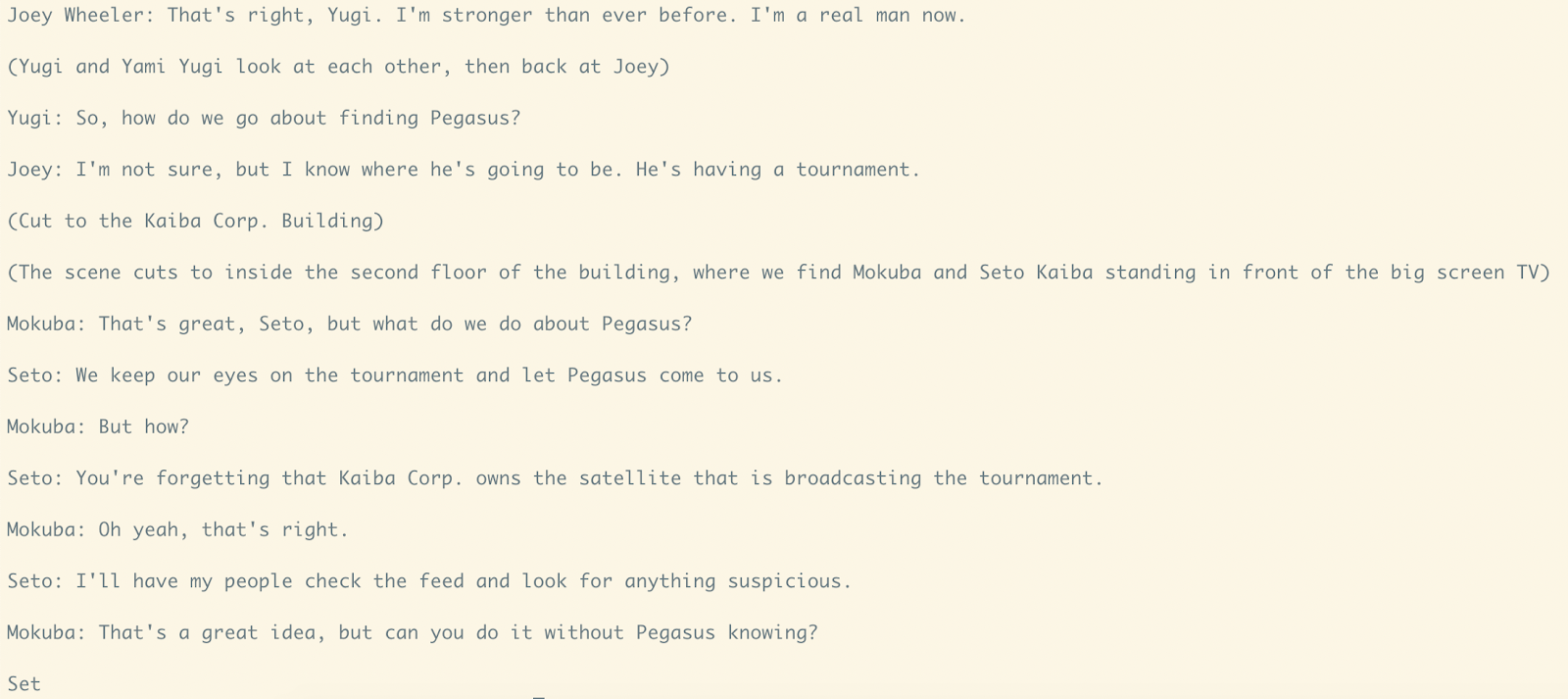

We can see how versatile it is by giving it a tiny amount of input text. Just from a character's name followed by a colon, it's able to determine that this text is structured as character dialogue, figure out which series the character is from, and generate somewhat coherent scenes with other characters from that series. Here's an example from Yugioh, where the input text is this iconic (and widely memed) line from the first episode: "Yugi: My grandfather's deck has no pathetic cards, Kaiba!"

As mentioned above, this project requires an API key from OpenAI. At the time I’m writing this, the only way to obtain one is by being accepted into their private beta program. You can apply on their site.

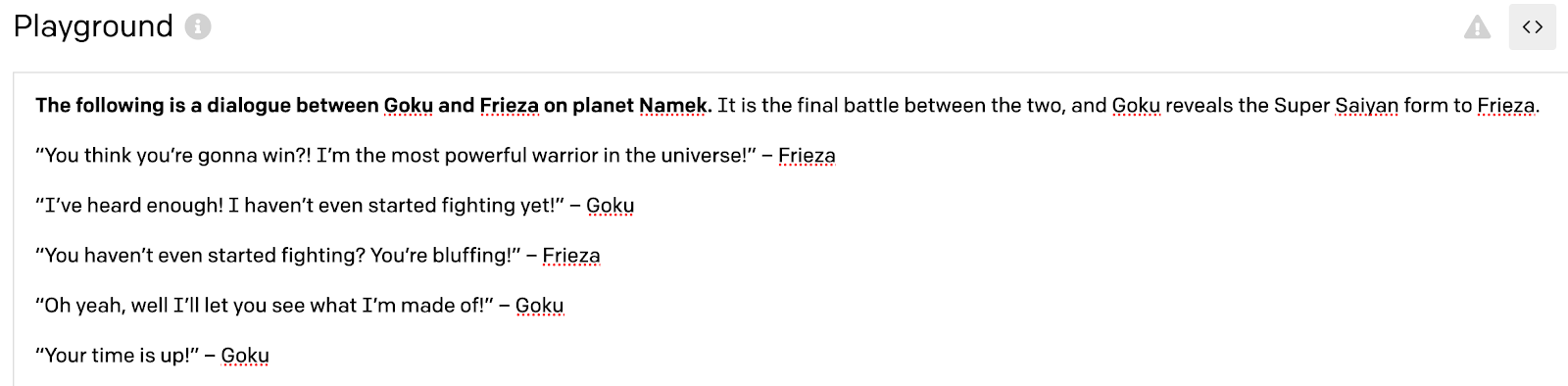

Once you have an OpenAI account, you can use the Playground to play around with GPT-3 by typing in text and having it generate more text. The Playground also has a cool feature that allows you to grab some Python code you can run, using OpenAI's Python library, for whatever you used the Playground for.

Working with GPT-3 in Python using the OpenAI helper library

To test this out yourself, you'll have to install the OpenAI Python module. You can do this with the following command, using pip:

Now create an environment variable for your OpenAI API key, which you can find when you try to export code from the Playground:

We will access this in our Python code. To try this out, open a Python shell and run the following code, most of which I took directly from the Playground:
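As a minimal sketch of that call, assuming the legacy (pre-1.0) openai package and its Completion.create interface: the helper name generate_dialogue, the complete hook, and the temperature and max_tokens values are illustrative assumptions rather than the exact code exported from the Playground.

```python
import os


def generate_dialogue(prompt, complete=None, max_tokens=256):
    """Return `prompt` followed by the text GPT-3 generates as its continuation.

    `complete` is a hypothetical hook that lets you pass in a stand-in for the
    API call; when omitted, the legacy openai library is used.
    """
    if complete is None:
        import openai  # imported lazily so the helper can be tried offline

        # The key comes from the environment variable created above.
        openai.api_key = os.environ.get("OPENAI_API_KEY")
        complete = openai.Completion.create

    response = complete(
        engine="davinci",       # the GPT-3 engine this post uses
        prompt=prompt,
        temperature=0.7,        # assumed value; higher means more random output
        max_tokens=max_tokens,  # assumed value; upper bound on generated tokens
    )
    return prompt + response["choices"][0]["text"]
```

With a valid key set, calling generate_dialogue("Yugi: My grandfather's deck has no pathetic cards, Kaiba!") in the shell should return the opening line followed by a generated scene.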

You should see something like this printed to your terminal:

Now let's change the code to begin the dialogue with a randomly selected character from the series, so it isn't always Yugi:
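One way this could look, as a rough sketch: pick a name with random.choice and use "Name:" as the prompt. The cast list and the function name random_prompt are illustrative assumptions, so swap in whichever characters you like.

```python
import random

# Illustrative cast list (an assumption, not taken from the original code).
CHARACTERS = ["Yugi", "Kaiba", "Joey", "Tea", "Tristan", "Bakura", "Pegasus"]


def random_prompt(rng=random):
    """Build a prompt such as "Kaiba:" so the scene opens with a random character."""
    return f"{rng.choice(CHARACTERS)}:"
```

The result of random_prompt() can be passed as the prompt in the completion request in place of a fixed opening line.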

Run this code a few times to see what happens!

Configuring a Twilio phone number

Before being able to respond to messages, you’ll need a Twilio phone number. You can buy a phone number here.

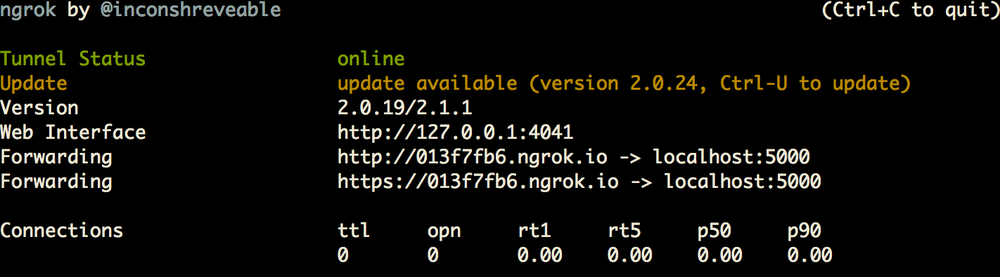

We're going to create a web application using the Flask framework that will need to be visible from the Internet in order for Twilio to send requests to it. We will use ngrok for this, which you’ll need to install if you don’t have it. In your terminal, run ngrok http 5000 to open a tunnel to port 5000, the default port for Flask:

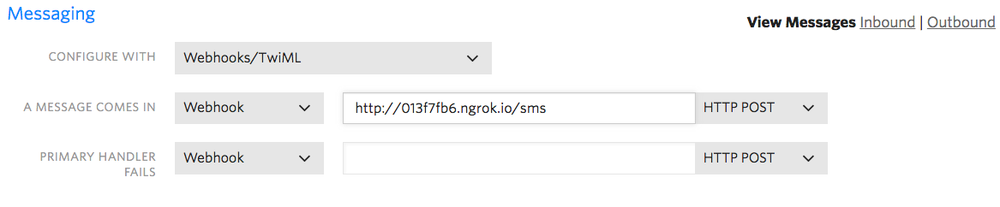

This provides us with a publicly accessible URL to the Flask app. Configure your phone number as seen in this image so that when a text message is received, Twilio will send a POST request to the /sms route on the app we are going to build, which will sit behind your ngrok URL:

With this taken care of, we can move on to actually building the Flask app to respond to text messages with some Yugioh fanfic.

Responding to text messages in Python with Flask

So you have a Twilio number and are able to have the OpenAI API generate character dialogue. It's time to allow users to text a phone number to get a scene of dialogue from the Yugioh universe. Before moving on, open a new terminal tab or window and install the Twilio and Flask Python libraries with the following command:

Now create a file called app.py and add the following code to it for our Flask application:
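A minimal sketch of such an app, under a few assumptions: the factory name create_app and its generate argument are hypothetical, the text-generation step is passed in as a function rather than written inline, and the imports sit inside the factory only so the file can be loaded without Flask or the Twilio helper library installed.

```python
def create_app(generate):
    """Build the Flask app. `generate` (hypothetical hook) maps a prompt to a story."""
    # Imported inside the factory so this file can be loaded for testing
    # even where Flask and the Twilio helper library are not installed.
    from flask import Flask
    from twilio.twiml.messaging_response import MessagingResponse

    app = Flask(__name__)

    @app.route("/sms", methods=["POST"])
    def sms():
        # Twilio POSTs here for every incoming text. The message body is
        # ignored in this sketch and a fixed opening prompt is used instead.
        story = generate("Yugi:")
        resp = MessagingResponse()
        resp.message(story)  # TwiML <Message> that Twilio sends back as an SMS
        return str(resp)

    return app
```

Calling create_app(your_generation_function).run() would serve the app on Flask's default port, 5000.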

This Flask app contains one route, /sms, which will receive a POST request from Twilio whenever a text message is sent to our phone number, as configured before with the ngrok URL.

A few changes were made to the OpenAI API code. First, we are having it generate only 128 tokens. We are using the davinci engine, which is the most advanced model, and it can take a long time to generate text, so keeping the number of tokens small makes sure the HTTP response to Twilio doesn't time out. We're also omitting everything after the final newline character, because generation sometimes stops mid-sentence, for instance in the middle of a character's name.
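The trimming step can be sketched roughly as follows. The names MAX_TOKENS and trim_to_last_newline are illustrative, and keeping the text unchanged when it contains no newline at all is an assumption about the desired behavior.

```python
MAX_TOKENS = 128  # the token cap described above, passed as max_tokens


def trim_to_last_newline(text):
    """Drop everything after the final newline so the scene ends on a whole line."""
    cut = text.rfind("\n")
    # With no newline there is only a single line, so keep it as it is.
    return text if cut == -1 else text[:cut]
```

For example, "Yugi: Hi\nKaiba: Hmph\nJo" becomes "Yugi: Hi\nKaiba: Hmph", cutting the half-finished name on the last line.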

Save your code, and run it with the following command:

Because we have ngrok running on port 5000, the default port for Flask, you can now text your Twilio number and receive some hilarious, or questionably sensible, Yugioh scenarios!

Tweaking it for better results

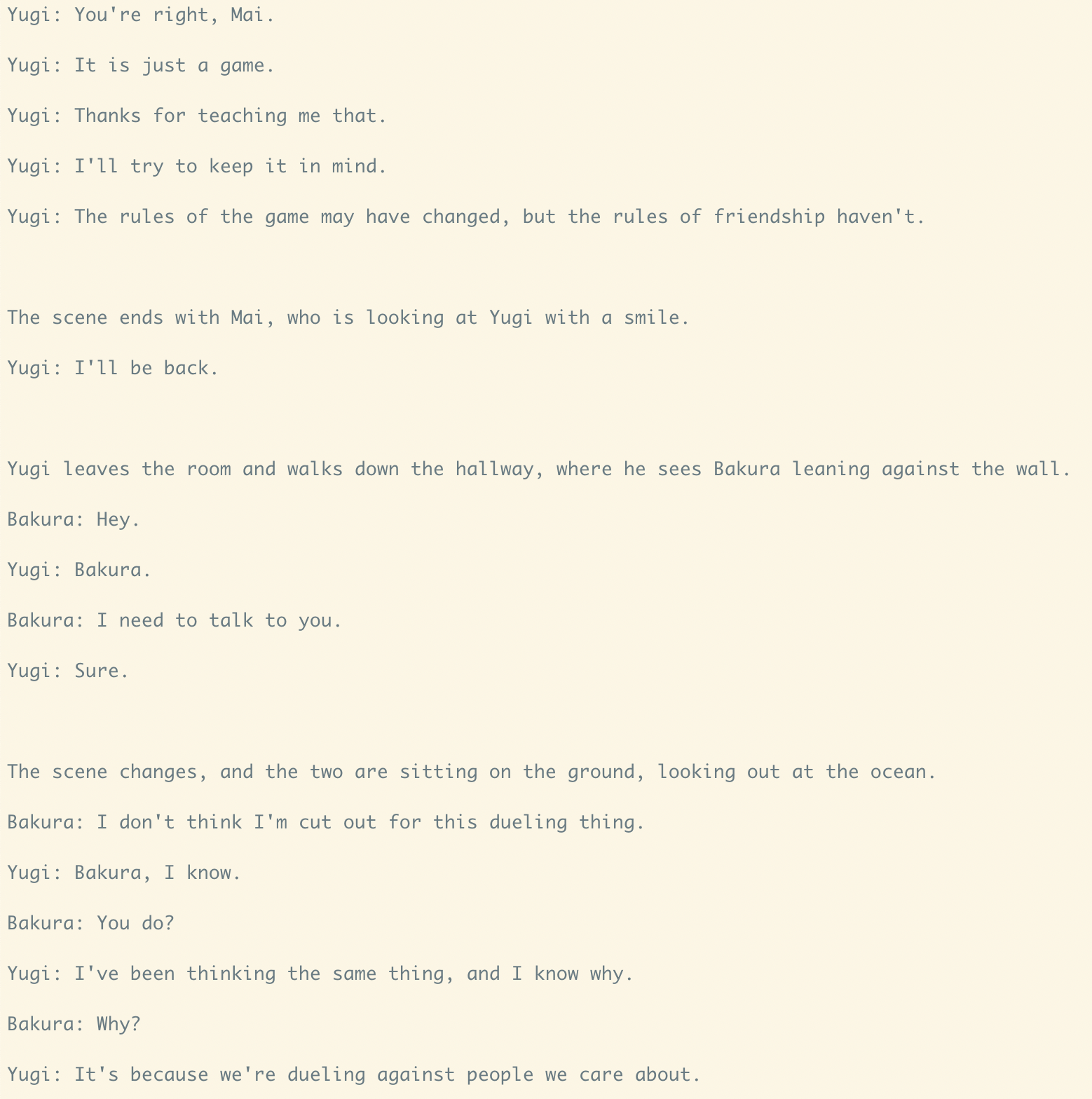

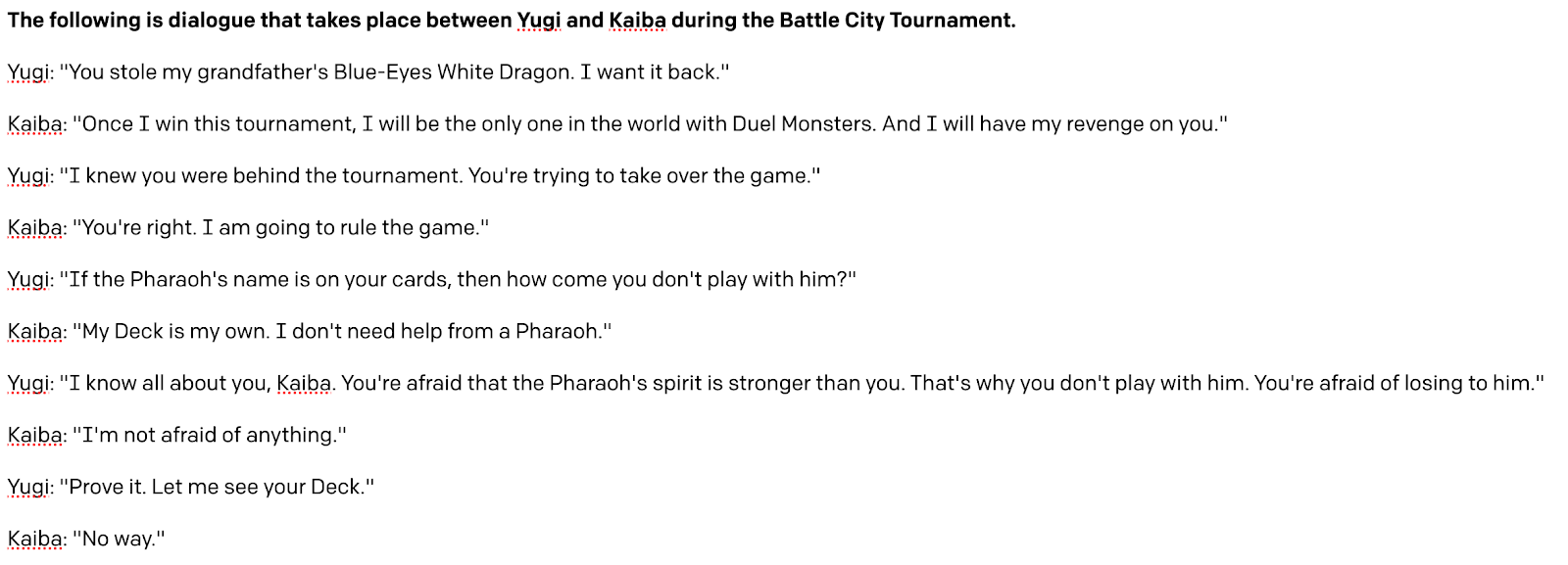

We now have a Yugioh fanfic text bot! However, this is just the beginning. You can improve this in many ways, by experimenting with all of the different parameters in the API request, or even the prompts that you use to generate the text. For example, maybe you can try just entering a sentence that describes a scene, and seeing what type of dialogue the AI generates:

In this example, it even generated some more descriptive text after my initial description, and the dialogue is in a different format than before.

Now you can create different types of fanfic bots for any series, movies, or books you want! Here's an example using DragonBall Z characters, which I wrote a similar tutorial about:

On the technical side, you can also experiment with generating more tokens. To make up for the added generation time, use Redis Queue to send the text message asynchronously once generation is finished, rather than replying with TwiML in the HTTP response to Twilio and risking a timeout.
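A rough sketch of that idea, assuming the rq and twilio libraries: the route enqueues the slow work and returns empty TwiML at once, and a background worker later sends the story through Twilio's REST API. The function names, the generate argument, and the TWILIO_NUMBER environment variable are hypothetical.

```python
import os


def send_sms(to_number, body):
    """Send an outbound SMS through Twilio's REST API."""
    from twilio.rest import Client  # lazy import so the sketch loads without twilio

    client = Client(os.environ["TWILIO_ACCOUNT_SID"], os.environ["TWILIO_AUTH_TOKEN"])
    # TWILIO_NUMBER is a hypothetical variable holding your Twilio phone number.
    client.messages.create(to=to_number, from_=os.environ["TWILIO_NUMBER"], body=body)


def generate_and_send(to_number, prompt, generate, send=send_sms):
    """Background job: generate the scene, then text it to the user."""
    send(to_number, generate(prompt))


def handle_incoming(queue, from_number, prompt, generate):
    """Called from the /sms route: enqueue the slow work and return immediately.

    `generate` should be an importable module-level function so that the
    queue can serialize a reference to it for the worker.
    """
    queue.enqueue(generate_and_send, from_number, prompt, generate)
    return "<Response></Response>"  # empty TwiML; the story arrives later via REST
```

Here queue would be an rq Queue created with Queue(connection=Redis()), with an rq worker process running alongside the Flask app to pick up the jobs.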

If you want to do more with Twilio and GPT-3, check out this tutorial on how to build a text message based chatbot.

Feel free to reach out if you have any questions or comments or just want to show off any stories you generate.

- Email: sagnew@twilio.com

- Twitter: @Sagnewshreds

- Github: Sagnew

- Twitch (streaming live code): Sagnewshreds
