Visualize Twilio Usage Data with Python, Pandas and Jupyter Notebooks

Time to read:

Some mysteries in life can only be answered by data. For example, if you have questions about your Twilio usage, there’s a few ways to dig into the data. There’s prebuilt summaries and graphs of your activity in the Twilio console, and if you’re handy with a spreadsheet, you can export the data. There’s also third-party providers with out-of-the-box analytics and visualizations.

If nothing answers your particular question, this tutorial shows you how to make custom reports using the Twilio REST API to access your data and drive your own insights.

Tutorial requirements

- Python version 3

- A free or paid Twilio account with a history of activity

Set up the Python virtual environment

Make a new project directory, and change into the directory from the command line.

Create a virtual environment called venv. If you’re on Unix or Mac operating systems, enter these commands in a terminal to create and activate the virtual environment.

If you’re on a Windows machine, enter these commands in a command prompt window.

In the activated virtual environment, install these dependencies.

The Python packages we’re using are:

- jupyter - a web app to make and share documents containing live code

- requests - to send and authorize HTTP requests

- python-dotenv - to manage environment variables

- pandas - for data analysis and handling

- matplotlib - to create graphs and charts

Run a Jupyter notebook

Jupyter is an interactive shell originally developed for the Python programming language. In a Jupyter notebook, you can write and run code in your browser while inspecting the output at each step.

Start the Jupyter notebook server from the virtual environment.

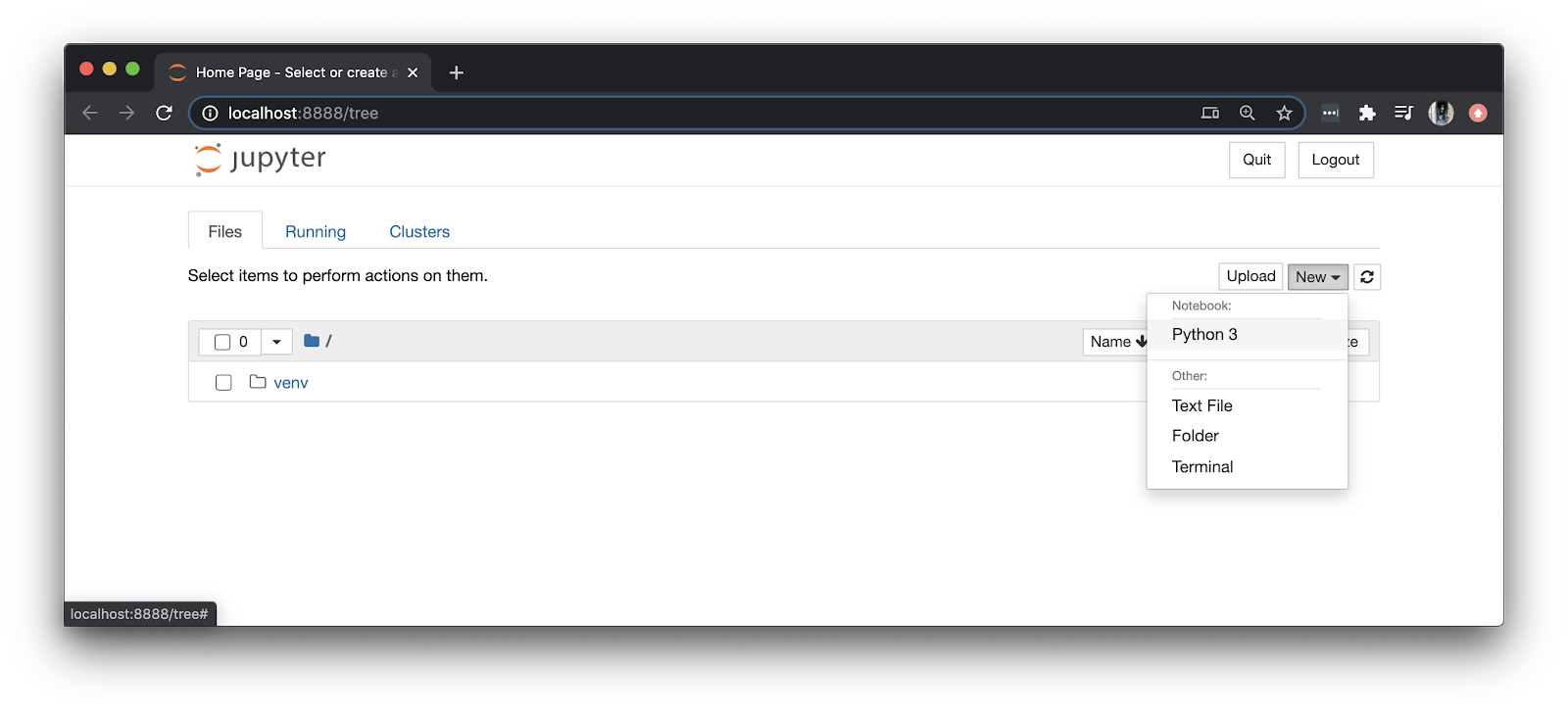

Once Jupyter is running at port 8888, go to your browser at http://localhost:8888/tree. This is the Jupyter web dashboard running on your local machine. You should see your current project directory, containing just one folder called venv.

Let’s add a notebook to the dashboard. Click the "New" dropdown to create a Python 3 notebook.

In the new tab, rename the Untitled notebook to twilio-usage at the top of the page.

Store Twilio credentials as environment variables

In order to access your Twilio data using the Twilio REST API, you’ll need to authorize your requests with your credentials.

Back in the terminal, you can see Jupyter running. Open a new command prompt on a separate tab, and activate the virtual environment again. Create a file called .env (notice the dot in front of this filename).

In the .env file, save your credentials from the Twilio Console formatted like this:

Write and run code in Jupyter

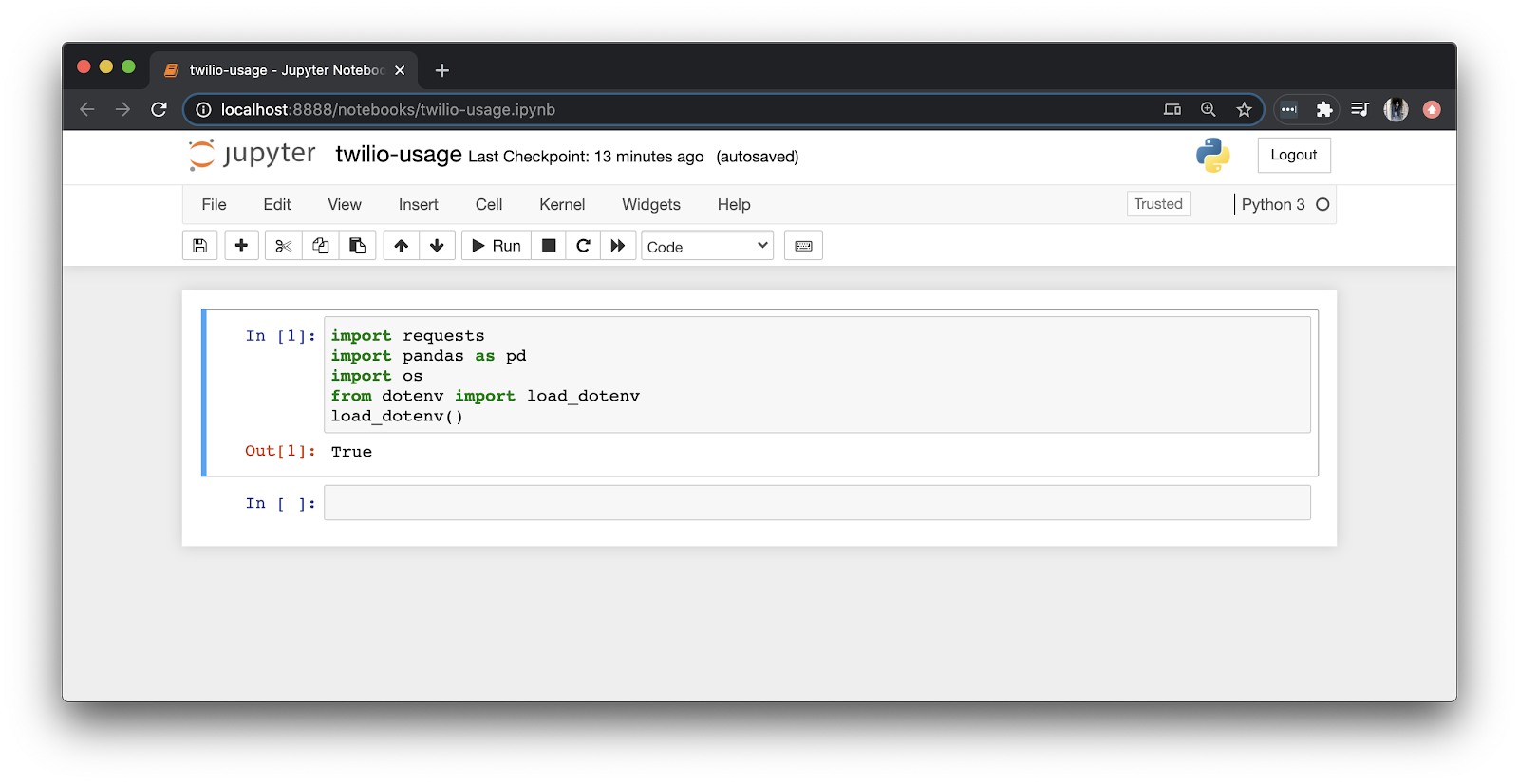

It’s time for our first line of code in our brand new notebook! Since you launched the notebook from within the virtual environment venv, you can access the dependencies installed in that environment by using an import statement in the notebook.

Copy the following code into the first code cell of the twilio-usage notebook and hit the “Run” button or press Shift+Enter.

If the code executes properly, the resulting output beneath the code is True. This code imports the Python dependencies and loads the environment variables stored in .env so you can access the Twilio REST API with your credentials.

Copy the following code into the next cell of the notebook, and hit “Run”.

This makes a GET request to the Twilio REST API to retrieve all of your SMS messages up to the paging maximum, which is 50 by default. The data is saved to a variable called messages that we can use while running subsequent cells. The resulting output is a log statement with a count of your SMS messages and a JSON object of those messages. If new messages are sent, remember to re-run this code cell to get the latest data.

Now that you know how the response from Twilio is formatted, you can transform the data using the primary pandas data structure called a DataFrame to handle the data like you would in a SQL table. Run the following code in the next cell of the notebook to see the first five rows of SMS messages.

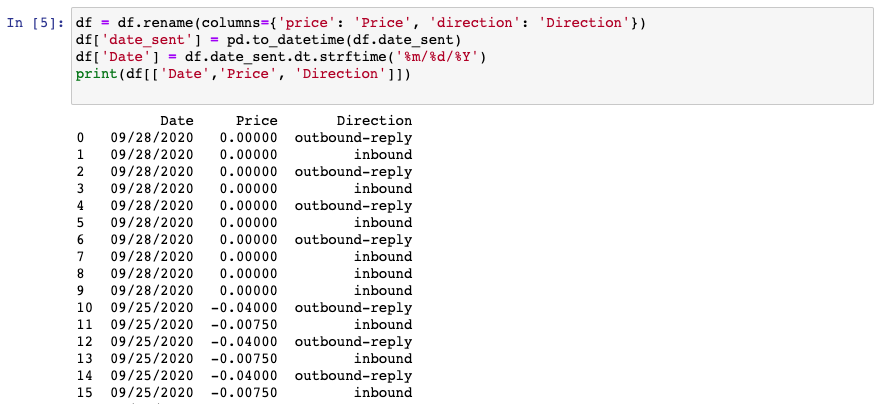

Tidy up the data in the next code cell. Display only the date, price, and direction of your messages, formatting the output. Hit “Run”.

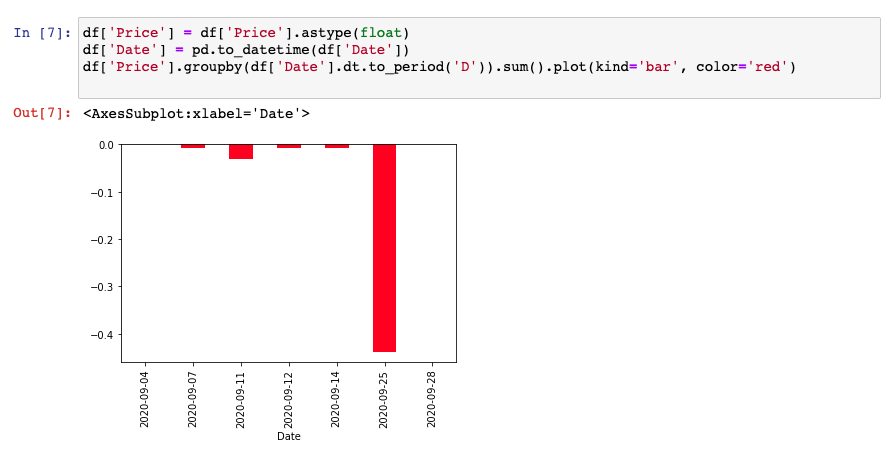

Show this data in a bar graph displaying total price over time in the next code cell. Hit “Run”.

Continue updating your view of the data by modifying or adding new code blocks. You could also focus on a specific period of time, filter by other criteria, or display a different type of graph.

Share the notebook

Once you have the data the way you like it, you can share the findings with other people. Here’s some considerations for sharing a Jupyter notebook.

- Add documentation: Jupyter cells can run code or render Markdown-formatted text. Interspersing explanatory text with code shows readers how to interact with the notebook.

- Share a virtual environment: We used our virtual environment locally by launching Jupyter from within the activated environment. If you want to capture those dependencies, you can use ipykernel to replicate and share a Python kernel that contains the virtual environment.

- Save to version control securely: Before pushing to Git, make sure you’re not leaking sensitive information. Make sure

.envis included in the.gitignoreof your project directory, so that the file is ignored from being checked in to version control. Another good practice is to remove the output saved by default with the underlying.ipynbnotebook file. You can do this by opening the Jupyter command palette (CMD/CTRL + Shift + P) and selectingclear all cells output.

Once a notebook is saved to version control, other people can view and run the notebook on their own.

There’s a few ways to render the notebook as a static HTML web page from a hosted version-control system, like GitHub, so that you can share the public URL with others to view. Here’s an example on GitHub and on Jupyter’s nbviewer.

To run the notebook interactively, people can set up a notebook server locally. There’s also cloud options that don’t require any software installation. For example, you can Execute on Binder to run the notebook interactively on nbviewer.

Conclusion

Now that you know the basics of working with the Twilio REST API in Jupyter, try using data from other Twilio APIs. You can also explore other popular libraries like numpy for advanced math calculations.

Interacting with data by tweaking the inputs can provide more insights than reading static graphs.

Even though there’s lots of out-of-the-box options for visualizing your Twilio usage data, you can also build and share your own custom reports.

Let me know what you come up with!

Joyce likes coding, cats, and second lunches. Find her on Twitter and LinkedIn.

Related Posts

Related Resources

Twilio Docs

From APIs to SDKs to sample apps

API reference documentation, SDKs, helper libraries, quickstarts, and tutorials for your language and platform.

Resource Center

The latest ebooks, industry reports, and webinars

Learn from customer engagement experts to improve your own communication.

Ahoy

Twilio's developer community hub

Best practices, code samples, and inspiration to build communications and digital engagement experiences.