Detecting iOS 26 Call Screening and Leaving Voicemail: How Twilio AMD and Real-Time Transcriptions Make It Possible


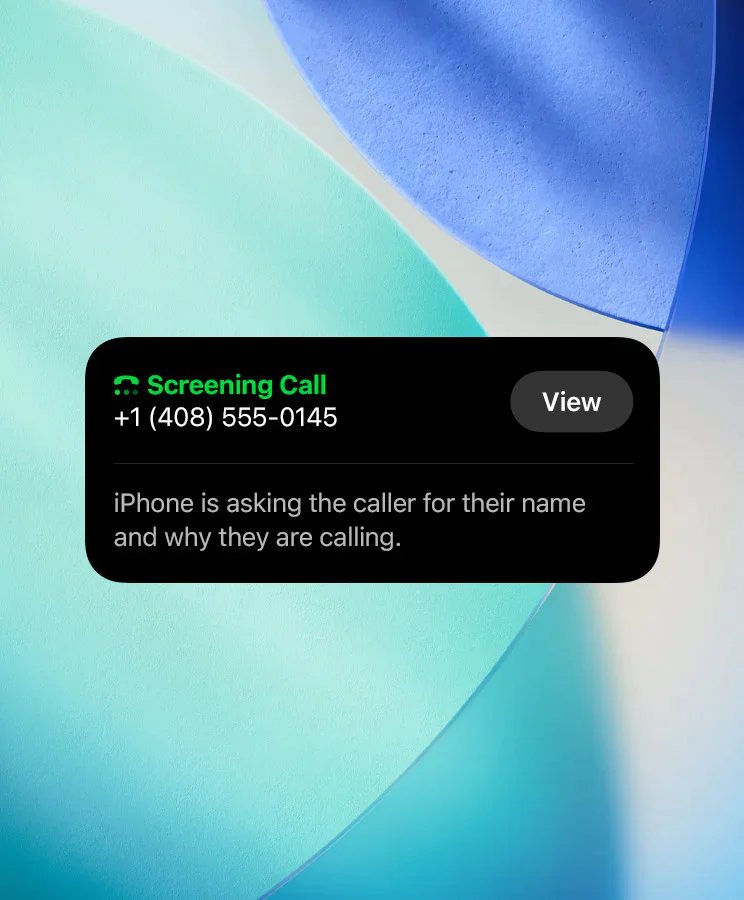

If you’ve been making outbound voice calls since Apple’s iOS 26 release, you’ve likely run into a new flow: call screening. Now, when you dial an iPhone with this feature enabled, you may not instantly reach your recipient; first, an automated voice asks you (or your app!) to “record your name and reason for calling”. If the person doesn’t pick up, you’re sent to voicemail. For brands trying to reach customers with high fidelity, this adds uncertainty:

- Did a human answer or was it screening?

- When should we leave a voicemail so our message isn't lost?

- How do we avoid repeating ourselves or missing our chance?

You need a precise, automated way for your app to detect when it is speaking with the iOS call screen and either leave a message or reply with who is calling.

That’s where combining Twilio’s Answering Machine Detection and Real-Time Transcriptions shines. With a bit of code, you can now offer a human-grade experience, even in this complex new landscape – and today, we’ll share with you what that code might look like. Let’s dive in.

How does iOS 26 Call Screening work?

Let’s break it down: when a user has Screen Unknown Callers enabled in iOS 26, your outbound call (their inbound call) triggers the following flow:

- Screening Preamble: iOS 26 picks up the call and says something like: “Hi, if you record your name and reason for calling, I'll see if this person is available.”

- Your App’s Turn: You need to respond with your name and reason for calling, so the recipient can review it and decide whether to answer.

- Fork in the Road:

  - If answered by the person: the conversation begins.

  - If rejected or ignored: the call goes to voicemail or ends.

- If screening is disabled: the standard call/voicemail process applies.

If you miss your window to reply, or if your solution can’t tell iOS screening apart from a real human or a generic answering machine, your call flow breaks. For example, you might deliver a voicemail to a live person or never identify yourself to the screener.

Prerequisites

For you to build and test this solution, you’ll need a Twilio account and a few things in place:

- A Twilio account. If you don’t have one yet, sign up for a free account.

- A Twilio phone number with Voice capabilities

  - You can learn how to search for and buy a Twilio number in the Twilio docs.

- Access to Twilio Functions, our serverless environment for microservices.

- Accept the Predictive and Generative AI/ML Features Addendum in Voice Settings to use Real-Time Transcriptions.

- (Optional) The Twilio CLI installed to test the outbound flow.

Building an iOS call screening flow

In the next steps, we’ll plan for iOS call screening, share a demo of the solution we’re building, and then walk through the code. After that, we’ll show you how to run and test the solution.

Let’s start with a plan!

The four scenarios: What your code needs to handle

Your function needs to detect and adapt to four scenarios automatically:

Scenario 1: iOS Screening, No Answer, Goes to Voicemail

- Detect the iOS call screening preamble

- Play your identification message

- AMD detects the end of the voicemail greeting

- Leave the voicemail message

Scenario 2: iOS Screening, Person Answers

- Detect the iOS call screening preamble

- Play your identification message

- The person picks up (“Hello?”)

- AMD detects a human

- Hand off to your conversation logic without repeating any prompts

Scenario 3: No Screening, Person Answers

- A human answers immediately (“Hello?”)

- AMD detects a human

- Your function passes through to your logic for a natural conversation

- No screening response or voicemail message is played

Scenario 4: No Screening, Goes to Voicemail

- The voicemail greeting is detected directly

- AMD detects a machine

- Your function doesn’t detect the screening preamble

- Leave the voicemail message
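The mapping above can be made concrete with a minimal sketch. This is not the repository's code. It turns the two signals above into a scenario number. It assumes you already know whether the screening preamble was heard and what Async AMD reported in its `AnsweredBy` parameter. The helper name `classifyScenario` is hypothetical.

```javascript
// Hypothetical helper (not from the repository): maps the two live signals,
// "was the iOS 26 screening preamble heard?" and Async AMD's AnsweredBy value,
// to one of the four scenarios.

// AnsweredBy values that mean a machine answered.
const MACHINE_VALUES = new Set([
  "machine_start",
  "machine_end_beep",
  "machine_end_silence",
  "machine_end_other",
]);

function classifyScenario({ screeningDetected, answeredBy }) {
  if (!answeredBy) return null; // AMD hasn't reported yet

  const isHuman = answeredBy === "human";
  const isMachine = MACHINE_VALUES.has(answeredBy);
  if (!isHuman && !isMachine) return null; // "unknown", "fax": leave undecided

  if (screeningDetected) {
    return isHuman ? 2 : 1; // 2: person answers after screening; 1: screening, then voicemail
  }
  return isHuman ? 3 : 4; // 3: person answers directly; 4: straight to voicemail
}

// Example: screening preamble heard, then AMD reached the voicemail beep.
console.log(classifyScenario({ screeningDetected: true, answeredBy: "machine_end_beep" })); // → 1
```

For `unknown` or `fax`, the helper returns `null` rather than guessing, so the decision stays open. How to handle those values is up to your own call flow.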

If you can identify the scenario early, you don’t need complex branching code or dial logic; your function can recognize the situation and adapt using live call data.

Here’s a demo of the logic in action:

The code: Your drop-in Twilio Function

Ready to try it? Next, we’ll walk you through the logic of the script.

Here’s the complete code of the function on GitHub. You can clone it using:

You can find the code in the iosCallScreeningTranscriptions directory.

In these next few sections, we’ll shine a spotlight on some of the parts of the code and explain what’s happening.

Key code snippets explained

Call State Management

Twilio Functions are stateless by design, so we keep lightweight per-call state in in-memory Maps that track each call’s lifecycle and events. This lets us coordinate the detection logic across the multiple webhooks a single call produces.

When a new call starts, we initialize its state with initializeCallState(). As the call progresses and new webhook events arrive, we read and update these Maps.
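Here is a minimal sketch of this pattern, assuming state is keyed by Call SID. The field names and the `updateCallState` helper are hypothetical. The repository's `initializeCallState()` may track different data.

```javascript
// Module-scope Maps persist between invocations only while the Function
// instance stays warm.
const callStates = new Map();  // CallSid -> detection flags (hypothetical fields)
const transcripts = new Map(); // CallSid -> accumulated transcript text

// Hypothetical version of initializeCallState(): creates state on first use
// and returns the existing record on every later call.
function initializeCallState(callSid) {
  if (!callStates.has(callSid)) {
    callStates.set(callSid, {
      startedAt: Date.now(),
      screeningDetected: false,
      identificationPlayed: false,
      answeredBy: null, // filled in by the Async AMD callback
      voicemailLeft: false,
      transcriptionStopped: false,
    });
    transcripts.set(callSid, "");
  }
  return callStates.get(callSid);
}

// Merge a partial update into a call's state, creating it if needed.
function updateCallState(callSid, patch) {
  const state = initializeCallState(callSid);
  Object.assign(state, patch);
  return state;
}
```

In this sketch, `initializeCallState()` is idempotent, so every webhook handler can call it first without caring which event arrived first.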

It’s helpful to know why this simple pattern “just works” in Twilio Functions:

- Multiple webhooks for the same call typically arrive at the same Function instance.

- Function instances stay “warm” for several minutes between invocations.

Neither behavior is guaranteed. For production and horizontal scaling, move this state to Redis (or a similar shared store).
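One way to prepare for that move is sketched below under our own assumptions. It hides the Maps behind a small async get/set/delete interface with an expiry, so stale entries don't pile up in a long-warm instance. `InMemoryCallStore` is a hypothetical name. A Redis-backed class could implement the same three methods, for example using `SET` with an expiry.

```javascript
// Hypothetical async store: detection logic only calls get/set/delete, so this
// in-memory version could later be swapped for a Redis-backed one.
class InMemoryCallStore {
  constructor(ttlMs = 10 * 60 * 1000, now = () => Date.now()) {
    this.ttlMs = ttlMs;      // how long a call's state is kept
    this.now = now;          // injectable clock (useful in tests)
    this.entries = new Map(); // CallSid -> { value, expiresAt }
  }

  async get(callSid) {
    const entry = this.entries.get(callSid);
    if (!entry) return undefined;
    if (entry.expiresAt <= this.now()) {
      this.entries.delete(callSid); // drop expired state
      return undefined;
    }
    return entry.value;
  }

  async set(callSid, value) {
    this.entries.set(callSid, { value, expiresAt: this.now() + this.ttlMs });
  }

  async delete(callSid) {
    this.entries.delete(callSid);
  }
}
```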

Pattern-Matching Helpers

These helper functions recognize speech patterns in real-time transcriptions, distinguishing iOS 26 preambles, intermediate prompts, standard voicemail greetings, and live human conversation. They are the foundation of the adaptive call flow.
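A minimal sketch of such helpers follows. The phrase lists are illustrative assumptions, not the repository's. The sketch covers only preambles, voicemail greetings, and short human greetings; intermediate prompts are left out because their wording isn't given here. Tune any list like this against real transcripts from your own calls.

```javascript
// Normalize transcript text so matching ignores case, punctuation,
// curly apostrophes and extra whitespace.
function normalize(text) {
  return String(text)
    .toLowerCase()
    .replace(/[’‘]/g, "'")
    .replace(/[^a-z0-9' ]+/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Illustrative phrase lists (assumptions).
const IOS26_PREAMBLE = [
  "record your name and reason for calling",
  "see if this person is available",
];
const VOICEMAIL_GREETING = [
  "leave a message",
  "leave your message",
  "after the tone",
  "after the beep",
  "is not available",
  "voicemail",
];

function containsAny(text, phrases) {
  const t = normalize(text);
  return phrases.some((p) => t.includes(normalize(p)));
}

const isIos26Preamble = (text) => containsAny(text, IOS26_PREAMBLE);

// Checked after the preamble so screener speech is never mistaken for voicemail.
const isVoicemailGreeting = (text) =>
  !isIos26Preamble(text) && containsAny(text, VOICEMAIL_GREETING);

// A short utterance (such as "Hello?") that is neither a screener prompt nor a
// voicemail greeting is treated as a live person.
function isHumanGreeting(text) {
  const t = normalize(text);
  if (!t || isIos26Preamble(t) || isVoicemailGreeting(t)) return false;
  return t.split(" ").length <= 4;
}
```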

Scenario Decision Logic

This function accumulates transcribed speech, checks state, and orchestrates the detection and response for all four scenarios.
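A simplified sketch of that orchestration follows. It assumes the pattern helpers are passed in as a `matchers` object. It also assumes the Async AMD callback has already written its `AnsweredBy` value into `state.answeredBy`. `evaluateCall` and its action names are hypothetical. When the AMD callback arrives, you can call it with an empty string, so the same logic reacts to either webhook.

```javascript
// Hypothetical sketch of the scenario decision step (not the repository's code).
// `state` is the per-call record; `matchers` supplies isIos26Preamble,
// isHumanGreeting and isVoicemailGreeting so the sketch is self-contained.
// Returns "play_identification", "pass_through", "leave_voicemail" or "wait".
function evaluateCall(state, newText, matchers) {
  if (state.resolved) return "wait"; // never act twice on the same call

  // Accumulate speech so phrases split across callbacks still match.
  state.transcript = `${state.transcript || ""} ${newText}`.trim();

  // Scenarios 1 and 2: the screener spoke first, so identify ourselves once.
  if (!state.screeningDetected && matchers.isIos26Preamble(state.transcript)) {
    state.screeningDetected = true;
    state.transcript = ""; // later matches only see speech after the preamble
    return "play_identification";
  }

  // Scenarios 2 and 3: AMD or the latest utterance points to a live person.
  if (state.answeredBy === "human" || matchers.isHumanGreeting(newText)) {
    state.resolved = true;
    return "pass_through";
  }

  // Scenarios 1 and 4: note a voicemail greeting, but only leave the
  // message once AMD reports that the greeting has ended.
  if (matchers.isVoicemailGreeting(state.transcript)) {
    state.voicemailGreetingHeard = true;
  }
  if (String(state.answeredBy || "").startsWith("machine_end")) {
    state.resolved = true;
    return "leave_voicemail";
  }
  return "wait";
}
```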

Live call control: Adaptive call actions

Depending on what was detected, the system uses Twilio’s REST API to:

- Play an identification message to the iOS 26 screener

- Leave a voicemail (after the beep, with transcription paused)

- Stop transcription and “pass through” to the human
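The three actions can be expressed as TwiML that you push to the live call with the REST API. In the `twilio` Node.js helper library, that call is `client.calls(callSid).update({ twiml })`. The builders below are a sketch with several assumptions: the 30-second `<Pause>` that keeps the line open while the recipient decides, the transcription name, and the redirect URL. Unlike the flow described above, the voicemail builder doesn't pause transcription.

```javascript
// Escape text before embedding it in TwiML.
function xmlEscape(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Answer the iOS 26 screener, then keep the call open while the recipient
// decides. The 30-second default is an assumption.
function identificationTwiml(message, waitSeconds = 30) {
  return `<Response><Say>${xmlEscape(message)}</Say>` +
    `<Pause length="${waitSeconds}"/></Response>`;
}

// Leave the voicemail (send once AMD reports the end of the greeting), then hang up.
function voicemailTwiml(message) {
  return `<Response><Say>${xmlEscape(message)}</Say><Hangup/></Response>`;
}

// Stop the named real-time transcription and hand the call to your own
// conversation logic. `nextUrl` is a hypothetical endpoint you provide.
function passThroughTwiml(transcriptionName, nextUrl) {
  return `<Response><Stop><Transcription name="${xmlEscape(transcriptionName)}"/></Stop>` +
    `<Redirect>${xmlEscape(nextUrl)}</Redirect></Response>`;
}

// Replace the live call's TwiML via the REST API.
function applyTwiml(client, callSid, twiml) {
  return client.calls(callSid).update({ twiml });
}
```

Passing the client in, rather than creating it inside `applyTwiml`, makes it easy to substitute a fake client in tests. In a Twilio Function you would typically pass `context.getTwilioClient()`.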

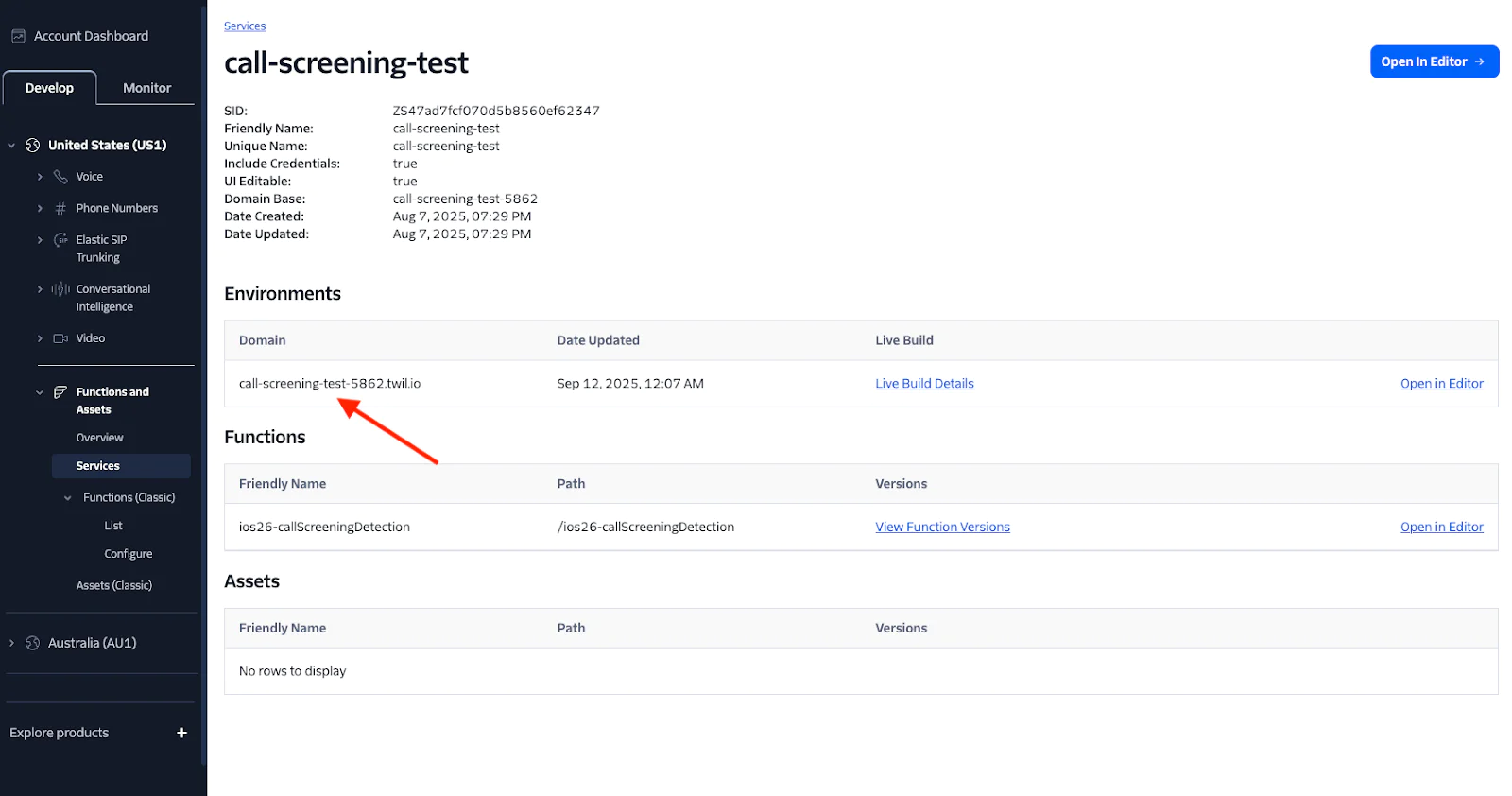

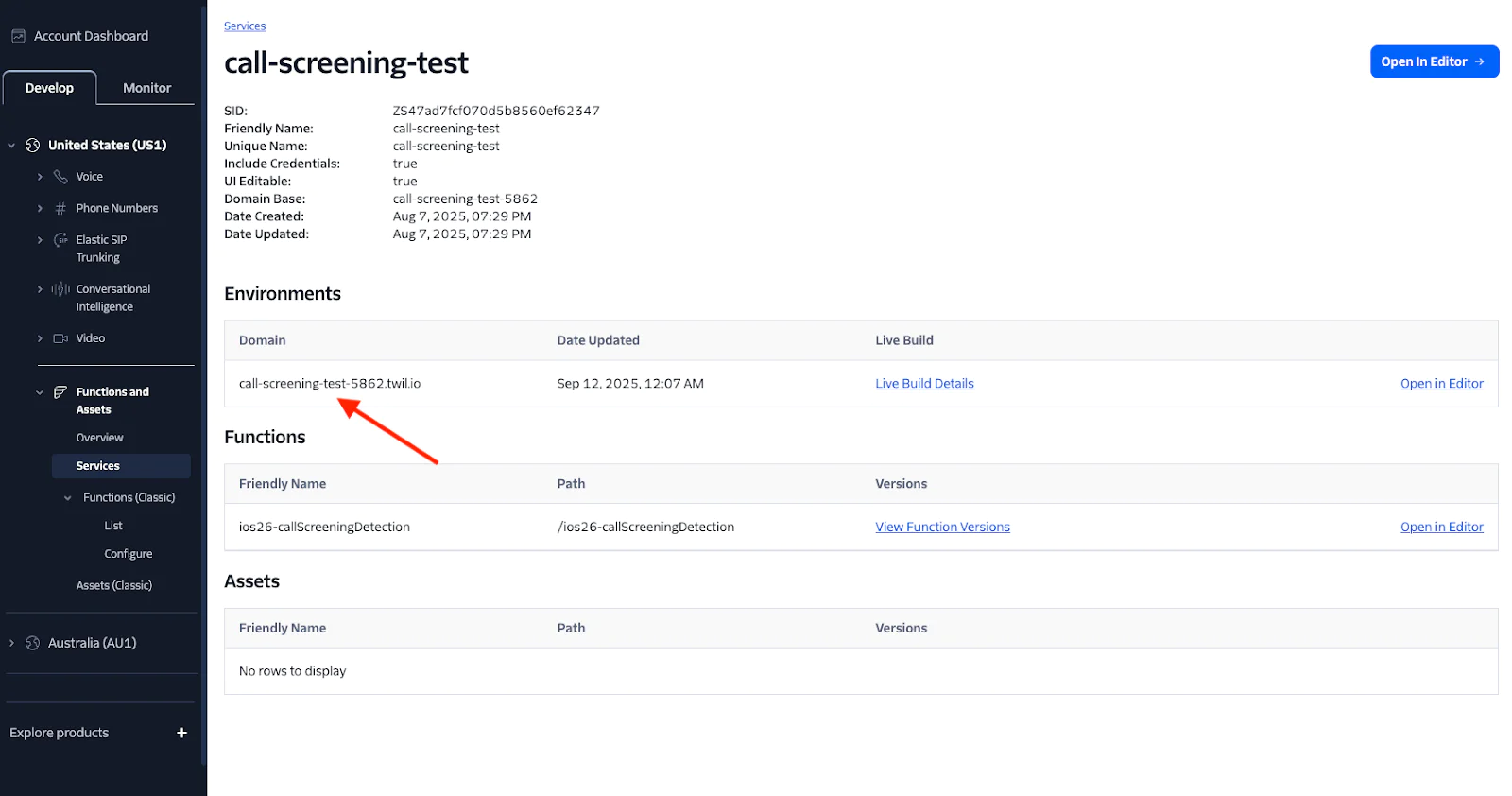

How to deploy and invoke it

- Create a new Function in one of your Services in Twilio Functions and Assets with the name ios26-callScreeningDetection

- Copy the function code into the new file

- Set an environment variable named DOMAIN_NAME with the value of the function's domain in the format <your_domain>.twil.io

- (Optional) Set these environment variables if you want to personalize the messages:

  - SCREENING_RESPONSE: the text played to the screener (for example, "This is Twilio calling...")

  - VOICEMAIL_MESSAGE: the body of the voicemail

- (Optional) Set the IOS26_PRIMARY_PHRASE environment variable if you want to change the canonical phrase that identifies the iOS 26 screening preamble

- Save and Deploy your function

- Invoke the function by placing an outbound call (see the Twilio CLI section below)

- Watch your logs for end-to-end detection, branching, and voicemails.

Note the Function’s domain name: you’ll need it to invoke the Function when making a call.
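Inside a Twilio Function, environment variables are exposed on the `context` argument. Here's a minimal sketch of reading the variables above with fallbacks. The default wording is a hypothetical placeholder, not the repository's. The callback URL is built from `DOMAIN_NAME` plus the Function's path, which assumes you kept the name `ios26-callScreeningDetection`.

```javascript
// Hypothetical placeholder defaults; replace them with your own wording.
const DEFAULTS = {
  SCREENING_RESPONSE: "This is Example Co. calling about your appointment.",
  VOICEMAIL_MESSAGE: "Sorry we missed you. Please call Example Co. back at your convenience.",
  IOS26_PRIMARY_PHRASE: "record your name and reason for calling",
};

// Read configuration from a Twilio Function's `context`, falling back to
// defaults when a variable is missing or blank.
function loadConfig(context) {
  const pick = (key) => (context[key] && String(context[key]).trim()) || DEFAULTS[key];
  if (!context.DOMAIN_NAME) {
    throw new Error("DOMAIN_NAME is not set (expected <your_domain>.twil.io)");
  }
  return {
    baseUrl: `https://${context.DOMAIN_NAME}/ios26-callScreeningDetection`,
    screeningResponse: pick("SCREENING_RESPONSE"),
    voicemailMessage: pick("VOICEMAIL_MESSAGE"),
    ios26PrimaryPhrase: pick("IOS26_PRIMARY_PHRASE").toLowerCase(),
  };
}
```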

Test the Function with the Twilio CLI

Here’s how to invoke it with the Twilio CLI:

With that CLI call, the function automatically:

- Kicks off transcription on the callee's inbound audio

- Continuously accumulates transcript text to run phrase detection

- Monitors Async AMD in parallel

- Knows exactly when to inject your ID message, when to stop transcription, when to leave voicemail, and when to connect to a real human
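Several kinds of webhooks arrive for the same call, so the Function has to tell them apart. The sketch below assumes the Async AMD callback and the real-time transcription callback both target the same Function. It branches on `AnsweredBy` and `TranscriptionEvent`, and reads the text from the JSON-encoded `TranscriptionData` field. These parameter names reflect our reading of Twilio's callback documentation, so verify them against the current reference. `classifyWebhook` is a hypothetical name.

```javascript
// Hypothetical router: classify an incoming Twilio Function `event` by the
// parameters it carries.
function classifyWebhook(event) {
  // Async AMD status callback.
  if (event.AnsweredBy) {
    return { type: "amd", callSid: event.CallSid, answeredBy: event.AnsweredBy };
  }
  // Real-time transcription result.
  if (event.TranscriptionEvent === "transcription-content") {
    let text = "";
    try {
      // TranscriptionData arrives as a JSON string with a `transcript` field.
      text = JSON.parse(event.TranscriptionData || "{}").transcript || "";
    } catch (err) {
      text = ""; // ignore malformed payloads
    }
    return {
      type: "transcript",
      callSid: event.CallSid,
      text,
      isFinal: String(event.Final) === "true",
    };
  }
  // Other transcription lifecycle events (started, stopped, and so on).
  if (event.TranscriptionEvent) {
    return { type: "transcription-lifecycle", callSid: event.CallSid, event: event.TranscriptionEvent };
  }
  // Anything else: treat as the initial call webhook.
  return { type: "initial", callSid: event.CallSid };
}
```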

Example: Logs that help you debug

Make sure Live Logs are enabled on your Function, and you’ll see exactly what happened in the Twilio Function logs. For example:

Conclusion

You’ve just learned how to reliably detect and respond to iOS 26 call screening using Twilio AMD and Real-Time Transcriptions, with no guesswork or manual handling. With this function, your outbound voice calls can reach real people more efficiently, and all four scenarios are handled automatically.


Rosina Garcia Bru is a Product Manager at Twilio Programmable Voice. Passionate about creating seamless communication experiences. She can be reached at rosgarcia [at] twilio.com

Robert McCulley is a Product Manager at Twilio focused on Trusted Voice communications. Passionate about protecting the simple human connection behind every phone call by working to restore trust in the PSTN and driving telecom fraud to zero. He can be reached at rmcculley [at] twilio.com
