Build a Smart QA ChatOps Assistant with Playwright, Twilio WhatsApp, LLMs, and MCP

Smart QA ChatOps isn't your traditional test automation where you write rigid test scripts. This is intelligent, dynamic testing where you simply tell the system what to test in natural language via WhatsApp, and the AI figures out how to test it.

You can say:

- Test login on https://example.com with user@test.com

- Check if the search works on https://example.com

- Try adding a product to cart on https://example.com

- Test the checkout flow on https://example.com

In this tutorial, you’ll build an application that triggers a web test via WhatsApp; the MCP-powered backend immediately runs Playwright tests, captures artifacts (screenshots, video, traces, logs), and returns a concise, plain-language report back to WhatsApp typically within minutes. By doing so, it breaks down technical barriers so that anyone (product, support, non-tech teammates) can invoke QA.

Traditional test automation requires developers to write and maintain test scripts for every scenario. When the UI changes, tests break. When requirements change, scripts need updates. This AI-powered approach eliminates rigid test scripts by:

- Interpreting natural language: Describe what you want to test in plain English

- Dynamic element discovery: AI identifies buttons, forms, and links intelligently without hard-coded selectors

- Self-healing capabilities: AI adapts to UI changes automatically

- Democratized testing: Non-technical team members can trigger tests

- Instant feedback loop: Results delivered directly to WhatsApp

What You'll Learn

- Integrate Twilio WhatsApp API with .NET for bidirectional messaging

- Use OpenAI to parse natural language and generate test plans

- Execute dynamic browser automation with Playwright

- Store and serve test artifacts (screenshots, traces) locally

- Orchestrate complex workflows between AI, webhooks, and browser automation

- Build production-ready ChatOps applications

Prerequisites

- .NET 9 SDK installed (verify: dotnet --version and expect 9.x).

- An OpenAI API key (or equivalent LLM API).

- A Twilio account and phone number (trial accounts require verified recipient numbers).

- ngrok (or any tunnel) for exposing local HTTP to the public internet.

- Node.js (for Playwright browser drivers)

- Basic understanding of C#, ASP.NET Core, and REST APIs

Project Setup and Structure

Start by cloning the complete working project from GitHub.

All code is already functional in the repository.

Understand the Solution Structure

Open the cloned project in your IDE. You'll see a clean multi-project architecture:

Why this structure?

- QAChatOps.Api: Web API layer handling webhooks and HTTP concerns. This serves as your entry point

- QAChatOps.Core: Business logic and domain models. Pure C# with no external dependencies

- QAChatOps.Infrastructure: External integrations (Twilio, OpenAI, Playwright). Isolates third-party dependencies

This separation ensures testability, maintainability, and follows Clean Architecture principles. The Core project remains framework-agnostic, making it reusable across different application types.

Project References

Now establish proper project references:

The reference hierarchy matters: Infrastructure can depend on Core, but Core never depends on Infrastructure. This creates a unidirectional dependency flow that prevents circular references and maintains clean architecture boundaries.

Install Dependencies

Install all required NuGet packages for each project:

About these packages:

- Microsoft.Playwright: A cross-browser automation library that lets you control Chromium, Firefox, and WebKit.

- Twilio: The official Twilio SDK, which simplifies WhatsApp messaging API calls and handles authentication.

- Microsoft.Extensions.Http: Provides IHttpClientFactory for the OpenAI API calls, which brings resilience and performance benefits.

- Microsoft.Extensions.Configuration.Abstractions: Enables dependency injection for configuration, which is critical for the Infrastructure layer.

The Playwright install command downloads Chromium, Firefox, and WebKit to your system. These are browser builds matched to the installed Playwright version, and they enable automation features like screenshot capture, network interception, and trace recording. This step is crucial. Without it, Playwright tests will fail with "browser not found" errors.

Cross-Platform: Browser Binary Installation via npm

If you're on macOS or Windows and encounter browser installation errors (like "Executable doesn't exist"), you can use Playwright's npm initializer as an alternative. This works cross-platform and downloads the correct browser binaries for your OS.

Building the Core Domain Models

Take a look at the code in the Core project to understand the basic structure.

The Core project defines your domain without any infrastructure concerns. These models represent the "language" of your application and will be used across all layers.

TestRequest Model

This model represents a parsed testing request. It's the bridge between a user's natural language and executable tests.

The TestType enum helps the AI categorize requests. While the AI generates dynamic test plans, knowing the type helps with selector strategies. For example, login tests are known to look for email inputs and submit buttons, while cart tests look for "Add to Cart" buttons.

The Parameters dictionary stores dynamic values extracted from user messages. If someone says "Test login with user@test.com and password123", the AI populates this with {"email": "user@test.com", "password": "password123"}. Head to the next model.
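As a rough illustration of the description above, a TestRequest could look like the sketch below. Only the TestType enum and the Parameters dictionary are named in this tutorial; the enum members and the other property names (OriginalMessage, Url, Type) are assumptions made for the example, and the repository's version may differ.

```csharp
using System.Collections.Generic;

// Hypothetical categories; the repository's enum may define different members.
public enum TestType { Unknown, Login, Search, Cart, Checkout, Navigation }

public class TestRequest
{
    // The raw WhatsApp text, kept so a fallback request can still carry it (assumed name).
    public string OriginalMessage { get; set; } = string.Empty;

    // Target URL extracted by the AI; null when the message contained none (assumed name).
    public string? Url { get; set; }

    // Category hint that helps choose selector strategies.
    public TestType Type { get; set; } = TestType.Unknown;

    // Dynamic values from the message, e.g. "email" -> "user@test.com".
    public Dictionary<string, string> Parameters { get; set; } = new();
}
```

Keeping Parameters as a plain string dictionary means new kinds of values can be passed through without changing the model.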

TestPlan Model

The AI generates this from a TestRequest. It's a structured representation of what steps to execute.

- Action types: navigate, click, type, verify, wait, screenshot, scroll. Each action is atomic and self-contained.

- Target: Can be a CSS selector or natural language text (like "Login button"). The orchestrator tries intelligent matching strategies.

- IsOptional flag: Some steps are nice-to-have but not critical. For example, waiting for a popup might be optional: if it doesn't appear, the test continues. This prevents brittle tests that fail on minor UI variations.

The Selectors dictionary stores reusable selector strategies. The AI might define {"loginButton": "button[type='submit'], button:has-text('Login'), [aria-label*='login']"} which provides multiple fallback options. This is key for resilient testing. Go to the final model.
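The shape described above might be sketched as follows. Action, Target, IsOptional, and Selectors come from the text; the TestStep class name, the Value property, and the Steps list are assumptions for illustration only.

```csharp
using System.Collections.Generic;

// One atomic step of a plan (class name is an assumption).
public class TestStep
{
    // One of: navigate, click, type, verify, wait, screenshot, scroll.
    public string Action { get; set; } = "navigate";

    // A CSS selector or natural-language text such as "Login button".
    public string Target { get; set; } = string.Empty;

    // Text to type or URL to open; may contain placeholders like {email} (assumed property).
    public string? Value { get; set; }

    // When true, a failure of this step does not fail the whole test.
    public bool IsOptional { get; set; }
}

public class TestPlan
{
    public List<TestStep> Steps { get; set; } = new();

    // Reusable selector strategies keyed by a logical name, e.g. "loginButton".
    public Dictionary<string, string> Selectors { get; set; } = new();
}
```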

TestExecutionResult Model

This captures everything about a test run: success or failure, timing, artifacts, and AI analysis.

JobId is used to correlate artifacts. All screenshots for a test run will have this ID in their filename, making debugging easier.

ExecutedSteps creates an audit trail. You can see exactly what happened, when it happened, and whether it succeeded. This is invaluable for debugging flaky tests. Artifact paths (screenshots, traces, videos) are stored as local file paths. Later, the WhatsApp service will convert these to public URLs. This separation of concerns means the Core layer doesn't need to know about web hosting or URL generation.
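A minimal sketch of such a result model is shown below. JobId and ExecutedSteps are named in the text; every other member, the ExecutedStep class, and the eight-character ID format are assumptions made for this example.

```csharp
using System;
using System.Collections.Generic;

// One entry of the audit trail (class and property names are assumptions).
public class ExecutedStep
{
    public string Description { get; set; } = string.Empty;
    public bool Success { get; set; }
    public DateTime ExecutedAtUtc { get; set; }
    public string? Error { get; set; }
}

public class TestExecutionResult
{
    // Short correlation ID reused in artifact filenames (format is an assumption).
    public string JobId { get; set; } = Guid.NewGuid().ToString("N")[..8];

    public bool Success { get; set; }
    public TimeSpan Duration { get; set; }

    // What happened, when, and whether it worked.
    public List<ExecutedStep> ExecutedSteps { get; set; } = new();

    // Local file paths only; converting them to public URLs happens elsewhere.
    public List<string> ScreenshotPaths { get; set; } = new();
    public string? VideoPath { get; set; }
    public string? TracePath { get; set; }

    // Plain-language summary produced by the AI after the run.
    public string? AiAnalysis { get; set; }
}
```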

Service Interfaces

Before implementing infrastructure, you define service contracts in the Core project. This inverts dependencies: the Infrastructure project implements interfaces that are defined in Core.

Why three methods?

- ParseIntentAsync: Converts natural language into a structured TestRequest.

- GenerateTestPlanAsync: Creates executable steps from the request.

- AnalyzeResultsAsync: Interprets test results and provides insights.

This interface makes the AI provider swappable. Today it's OpenAI, tomorrow it could be Anthropic Claude or a local LLM. The rest of your application doesn't care.
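To make the swappability concrete, here is a sketch of what the contract could look like, together with a canned implementation of the kind you might use in unit tests. The three method names come from the text; the interface name IAITestGenerator, the exact signatures, and the CannedTestGenerator class are assumptions, and the model classes are empty placeholders so the sketch compiles on its own.

```csharp
using System.Threading.Tasks;

// Empty placeholders standing in for the Core models.
public class TestRequest { }
public class TestPlan { }
public class TestExecutionResult { }

// Contract defined in Core (interface name and signatures are assumptions).
public interface IAITestGenerator
{
    Task<TestRequest> ParseIntentAsync(string message);
    Task<TestPlan> GenerateTestPlanAsync(TestRequest request);
    Task<string> AnalyzeResultsAsync(TestExecutionResult result);
}

// Hypothetical stand-in that never calls an LLM, handy for tests.
public class CannedTestGenerator : IAITestGenerator
{
    public Task<TestRequest> ParseIntentAsync(string message) =>
        Task.FromResult(new TestRequest());

    public Task<TestPlan> GenerateTestPlanAsync(TestRequest request) =>
        Task.FromResult(new TestPlan());

    public Task<string> AnalyzeResultsAsync(TestExecutionResult result) =>
        Task.FromResult("canned analysis");
}
```

Because callers depend only on the interface, replacing the OpenAI-backed class with another provider is a change to the dependency injection registration rather than to the calling code.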

Implement the AI Test Generator

Now you'll implement the brain of the system, the component that converts natural language into executable test plans using OpenAI's GPT-4. Feel free to use any LLM of your choice.

Direct your attention to the following code block:

Constructor injection: you inject HttpClient (supplied through IHttpClientFactory, for resilience), IConfiguration (for settings), and ILogger (for observability). This is standard .NET dependency injection where every dependency is explicit and testable.

You use HttpClient directly. While OpenAI has official SDKs, using HttpClient directly gives you complete control over requests and responses and simplifies error handling. The OpenAI Chat Completions API is straightforward enough that an SDK adds little value.

Parsing Natural Language Intent

Continuing to explain the code in this generator, take a look at this segment:

- Why temperature 0.3? Temperature controls randomness. At 0.3, responses are mostly deterministic. You want consistent structure, not creative variations.

- Prompt engineering matters: Notice how you explicitly state "Respond with ONLY a JSON object" and provide the exact structure. This constrains GPT-4's output and reduces parsing errors. The prompt also handles edge cases (missing URL, no parameters).

- Markdown cleanup: GPT-4 sometimes wraps JSON in backticks as a code block. You strip these before parsing. This is a common pattern when working with LLMs that output code.

- Error handling: If parsing fails completely, you return a basic TestRequest with the original message. This prevents the entire flow from crashing on malformed AI responses.
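The markdown cleanup and the parse-or-fall-back behavior can be sketched with System.Text.Json alone. This is an illustrative version, not the repository's code: the LlmJson class, its method names, and the "url" property name are assumptions.

```csharp
using System;
using System.Text.Json;

public static class LlmJson
{
    // Three backticks, built at runtime to keep this listing free of literal fences.
    private static readonly string Fence = new string('`', 3);

    // Removes a leading fence line (with or without a language tag) and a trailing fence.
    public static string StripCodeFences(string raw)
    {
        var text = raw.Trim();
        if (text.StartsWith(Fence, StringComparison.Ordinal))
        {
            var firstNewline = text.IndexOf('\n');
            text = firstNewline >= 0 ? text[(firstNewline + 1)..] : text[3..];
        }
        if (text.EndsWith(Fence, StringComparison.Ordinal))
        {
            text = text[..^3];
        }
        return text.Trim();
    }

    // Returns the "url" string property, or null when the reply is not usable JSON.
    public static string? TryGetUrl(string raw)
    {
        try
        {
            using var doc = JsonDocument.Parse(StripCodeFences(raw));
            var root = doc.RootElement;
            if (root.ValueKind == JsonValueKind.Object
                && root.TryGetProperty("url", out var url)
                && url.ValueKind == JsonValueKind.String)
            {
                return url.GetString();
            }
            return null;
        }
        catch (JsonException)
        {
            // Malformed reply: the caller can fall back to a basic request.
            return null;
        }
    }
}
```

Returning null instead of throwing lets the caller build the fallback TestRequest from the original message, which matches the error-handling approach described above.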

Generating Test Plans

- Higher temperature (0.5): You want some creativity in this portion. GPT-4 should generate varied selector strategies and think about edge cases. This is intentional: test plans benefit from diverse approaches.

- System prompt: "Generate robust, intelligent test plans with fallback strategies" directs GPT-4's reasoning. It knows to provide multiple selectors per element, which makes tests dynamic and resilient to UI changes.

- Max tokens set to 2000: Test plans are larger than intent parsing. Complex flows might have 10-15 steps with detailed selectors.

- Selector format: The prompt instructs GPT-4 to provide comma-separated alternatives: "button[type='submit'], button:has-text('Login'), [aria-label*='login']". The orchestrator will try each until one succeeds.
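For reference, the request body for a Chat Completions call with these settings could be assembled as in the sketch below. The JSON field names (model, messages, temperature, max_tokens) are the API's own; the ChatRequestBuilder class and its parameter names are assumptions for illustration.

```csharp
using System.Text.Json;

public static class ChatRequestBuilder
{
    // Serializes a two-message chat request (system + user) to JSON.
    public static string Build(string model, string systemPrompt, string userPrompt,
                               double temperature, int maxTokens)
    {
        var payload = new
        {
            model,
            messages = new[]
            {
                new { role = "system", content = systemPrompt },
                new { role = "user", content = userPrompt }
            },
            temperature,
            max_tokens = maxTokens
        };
        return JsonSerializer.Serialize(payload);
    }
}
```

A call such as ChatRequestBuilder.Build("gpt-4", systemPrompt, userPrompt, 0.5, 2000) produces the body that you would then POST to the Chat Completions endpoint with your API key in the Authorization header.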

Analyzing Test Results

A few key points in this block:

- Temperature 0.4: Balanced between determinism and natural language. You want consistent structure but human-readable text.

- Max chars constraint: "Keep it concise (max 1200 chars)" ensures the analysis fits well in WhatsApp messages. Without this, GPT-4 might generate multi-paragraph essays.

- Emojis for clarity: WhatsApp is a casual medium. Emojis (✅, ❌, ⚡) make reports scannable and engaging.

Why do you not use structured JSON here? Unlike intent parsing and test plans, analysis is meant for human consumption. Natural language is perfect for explaining test results to non-technical stakeholders. This transforms raw test execution data into something a little more end-user-friendly. Instead of "Step 3 failed," users get "Login button not found. The site might be using a modal dialog. Try waiting longer or check if there's a cookie consent popup." Next, proceed to the test orchestrator.

Implement the Test Orchestrator

The orchestrator executes AI-generated test plans using Playwright. This is where plans become reality: browsers launch, elements are clicked, and screenshots are captured.

Draw your attention to the following code:

This code creates the necessary directories. The orchestrator is responsible for artifacts, so it should ensure storage exists before attempting writes. This prevents cryptic "directory not found" errors during test execution. Configurable path: Using IConfiguration makes the artifacts path environment-specific. In development it's wwwroot/artifacts; in production it could be a cloud storage mount point.

Test Execution Error Handling and Individual Step Execution

Playwright's configuration supports robust testing through SlowMo delays (100ms), which reduce timing-related flakiness, and automatic video recording. With tracing, it captures screenshots, DOM snapshots, and network requests for complete test replay. The orchestrator distinguishes critical from optional steps: it stops on essential failures but continues past non-critical ones like cookie banners. NetworkIdle waits hold until the page has had no network connections for at least 500ms before proceeding, while comprehensive error handling catches all exceptions so that a result is always returned instead of an exception propagating to the caller.

Finally blocks guarantee browser cleanup, preventing process leaks, and screenshots at each step create a visual debugging timeline accessible through Playwright's trace viewer.
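The critical-versus-optional handling described above can be sketched independently of Playwright by passing the step executor in as a delegate. All names here (PlanStep, StepOutcome, StepRunner) are hypothetical and chosen for the example only.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public record PlanStep(string Description, bool IsOptional);
public record StepOutcome(string Description, bool Success, string? Error);

public static class StepRunner
{
    // Runs steps in order; stops at the first failed required step, never throws.
    public static async Task<(bool Success, List<StepOutcome> Outcomes)> RunAsync(
        IEnumerable<PlanStep> steps, Func<PlanStep, Task> execute)
    {
        var outcomes = new List<StepOutcome>();
        var success = true;

        foreach (var step in steps)
        {
            try
            {
                await execute(step);
                outcomes.Add(new StepOutcome(step.Description, true, null));
            }
            catch (Exception ex)
            {
                // Record the failure so the audit trail stays complete.
                outcomes.Add(new StepOutcome(step.Description, false, ex.Message));
                if (!step.IsOptional)
                {
                    success = false;
                    break; // a required step failed, so later steps are skipped
                }
            }
        }

        return (success, outcomes);
    }
}
```

In the real orchestrator the delegate would perform the Playwright action for the step, and the surrounding method would close the browser in a finally block.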

Intelligent Element Clicking

This is the magic of resilient testing: The AI provides multiple selectors separated by commas, and you try each until one works. If button[type='submit'] fails (maybe the site changed), you try button:has-text('Login'), then [aria-label*='login']. Text-based selectors are checked first, since these are most resilient to UI changes. A button with the text "Login" is likely to remain even if CSS classes change. You use a short timeout (5s) because you don't want to wait forever per selector. If an element isn't found in 5 seconds, the code tries the next approach. Why catch and continue? Each selector might fail for different reasons (element not found, element not clickable, wrong element type). You don't care why it failed. You just try the next option.
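The fallback loop can be sketched as below. The click itself is passed in as a delegate so the example stays free of browser dependencies; SelectorFallback and its method names are assumptions. Note that the naive comma split would break selectors that contain commas themselves (for example inside :is(...)), so treat it as a simplification.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public static class SelectorFallback
{
    // Splits "a, b, c" into candidates and moves text-based selectors to the front.
    // OrderBy is a stable sort, so the original order is kept within each group.
    public static List<string> Candidates(string target) =>
        target.Split(',', StringSplitOptions.RemoveEmptyEntries | StringSplitOptions.TrimEntries)
              .OrderBy(s => IsTextBased(s) ? 0 : 1)
              .ToList();

    private static bool IsTextBased(string selector) =>
        selector.Contains(":has-text(", StringComparison.Ordinal)
        || selector.StartsWith("text=", StringComparison.Ordinal);

    // Tries each candidate in turn; returns the selector that worked, or null if none did.
    public static async Task<string?> ClickFirstAsync(string target, Func<string, Task> click)
    {
        foreach (var selector in Candidates(target))
        {
            try
            {
                await click(selector);
                return selector;
            }
            catch (Exception)
            {
                // The reason does not matter here; move on to the next candidate.
            }
        }
        return null;
    }
}
```

With Playwright for .NET, the delegate could be something like s => page.Locator(s).First.ClickAsync(new LocatorClickOptions { Timeout = 5000 }), which applies the 5-second limit per selector.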

Placeholder replacement: The AI generates test plans with placeholders like {email} and {password}. This method replaces them with actual values from the TestRequest parameters or sensible defaults. Why this pattern? It decouples test plan generation from actual test data. The same test plan can be reused with different credentials simply by changing the parameters. FillAsync vs TypeAsync: FillAsync clears the field first, then fills it. TypeAsync simulates individual keystrokes. For forms, filling is faster and more reliable.
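A placeholder substitution of this kind can be written with a single regular expression. The sketch below is one possible version; the Placeholders class, the defaults parameter, and the choice to leave unknown placeholders untouched are assumptions.

```csharp
using System.Collections.Generic;
using System.Text.RegularExpressions;

public static class Placeholders
{
    // Matches tokens such as {email} or {password}.
    private static readonly Regex Token = new(@"\{(\w+)\}", RegexOptions.Compiled);

    // Replaces each token with a request parameter, then a default, else leaves it as-is.
    public static string Replace(
        string template,
        IReadOnlyDictionary<string, string> parameters,
        IReadOnlyDictionary<string, string>? defaults = null)
    {
        return Token.Replace(template, match =>
        {
            var key = match.Groups[1].Value;
            if (parameters.TryGetValue(key, out var value)) return value;
            if (defaults != null && defaults.TryGetValue(key, out var fallback)) return fallback;
            return match.Value; // unknown placeholder: keep it visible for debugging
        });
    }
}
```

For example, Placeholders.Replace("{email}", parameters) with parameters containing "email" mapped to "user@test.com" yields "user@test.com", and the same plan text can be reused with another dictionary.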

Element Verification

WaitForSelectorState.Visible: Elements must be both present in the DOM and visible on screen. This prevents false positives where an element exists but is hidden with display: none. Verification is crucial. This is how you confirm tests actually succeeded. After clicking login, you verify the dashboard element appears. Without verification, you'd never know if actions had the intended effect.

Screenshot Capture

FullPage = true captures the entire scrollable page, not just the viewport. This is better for documentation and debugging. The filename format {jobId}_{step}_{timestamp}.png makes screenshots self-documenting and easy to find. The jobId groups related screenshots together.
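The filename convention can be captured in a small helper like the one below. The {jobId}_{step}_{timestamp}.png pattern comes from the text; the ArtifactNames class, the step-name sanitizing, the timestamp format, and the screenshots subfolder are assumptions.

```csharp
using System;
using System.Globalization;
using System.IO;
using System.Text.RegularExpressions;

public static class ArtifactNames
{
    // Builds <root>/screenshots/{jobId}_{step}_{timestamp}.png with a filesystem-safe step name.
    public static string ScreenshotPath(string artifactsRoot, string jobId, string step, DateTime utcNow)
    {
        // Lowercase the step and collapse anything that is not a-z or 0-9 into a hyphen.
        var safeStep = Regex.Replace(step.Trim().ToLowerInvariant(), "[^a-z0-9]+", "-").Trim('-');
        var timestamp = utcNow.ToString("yyyyMMddHHmmssfff", CultureInfo.InvariantCulture);
        return Path.Combine(artifactsRoot, "screenshots", $"{jobId}_{safeStep}_{timestamp}.png");
    }
}
```

The resulting path can be handed to Playwright, for instance via page.ScreenshotAsync(new PageScreenshotOptions { Path = path, FullPage = true }).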

Videos are saved automatically when RecordVideoDir is configured. You just need to get the path after test completion.

Next, move to the communication channel.

Implement the WhatsApp Service

Now you integrate Twilio's WhatsApp API to send test results back to users.

TwilioClient.Init is a global initialization. It configures the SDK with your credentials. You only need to call this once, typically in the constructor. PublicBaseUrl is critical. WhatsApp media messages require publicly accessible URLs. Local file paths won't work. You'll convert paths like wwwroot/artifacts/screenshots/abc123.png to https://your-domain.com/artifacts/screenshots/abc123.png.
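The path-to-URL conversion could be done along these lines. This is a sketch under the assumption that everything below the web root is served at the site root; ArtifactUrls and its parameter names are hypothetical, and no URL encoding is applied because the generated filenames contain only safe characters.

```csharp
using System;

public static class ArtifactUrls
{
    // Maps ".../wwwroot/artifacts/x.png" to "{publicBaseUrl}/artifacts/x.png".
    public static string ToPublicUrl(string localPath, string publicBaseUrl, string webRoot = "wwwroot")
    {
        // Normalize Windows separators so the same logic works on every OS.
        var normalized = localPath.Replace('\\', '/');
        var prefix = webRoot.Trim('/') + "/";

        // Keep only the part after the web root; fall back to the whole path if it is absent.
        var index = normalized.IndexOf(prefix, StringComparison.OrdinalIgnoreCase);
        var relative = index >= 0
            ? normalized[(index + prefix.Length)..]
            : normalized.TrimStart('/');

        return $"{publicBaseUrl.TrimEnd('/')}/{relative}";
    }
}
```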

Sending Text Messages

This is the final task to be handled here:

- Phone number format: Both from and to must be in WhatsApp format: whatsapp:+1234567890. The webhook provides this format automatically.

- Message SID: Twilio returns a unique identifier for each message. Log this for tracking and debugging delivery issues.

- Error handling: You log and rethrow. The calling code should handle failures gracefully.

Build the Webhook Controller

The webhook is the heart of the system. It receives WhatsApp messages, orchestrates all services, and sends responses.

Constructor injection: All dependencies are injected, which makes the controller testable and follows SOLID principles. There are three core dependencies: AI for intelligence, Orchestrator for execution, and WhatsApp for communication. This controller coordinates them.

Receiving WhatsApp Messages

- [FromForm]: Twilio sends webhook data as form-urlencoded, not JSON. This attribute tells ASP.NET to bind from form data.

- Fire and forget: _ = Task.Run(...) starts background processing without awaiting. The webhook returns immediately (HTTP 200 OK) within milliseconds.

- Why not await? Tests take 30-60 seconds. If you awaited, Twilio would time out the webhook. By returning immediately, you acknowledge receipt while processing continues in the background.

- The discard operator _: Tells the compiler you intentionally ignore the Task. Without this, you'd get warnings about unawaited async calls.
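One detail worth showing is that a fire-and-forget task should catch its own exceptions, since nobody awaits it. The helper below is a hypothetical sketch of that idea (BackgroundWork and its parameters are not from the repository).

```csharp
using System;
using System.Threading.Tasks;

public static class BackgroundWork
{
    // Starts work on the thread pool and routes any exception to onError instead of losing it.
    public static Task Start(Func<Task> work, Action<Exception> onError)
    {
        return Task.Run(async () =>
        {
            try
            {
                await work();
            }
            catch (Exception ex)
            {
                onError(ex);
            }
        });
    }
}
```

In a controller action this could be used as _ = BackgroundWork.Start(() => ProcessAsync(from, body), ex => logger.LogError(ex, "Test run failed")); followed by return Ok();, where ProcessAsync and logger stand in for your own method and logger. Work started this way is not tracked by the host, so it can be lost if the application shuts down mid-run.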

Processing Test Requests

Users receive 3-4 messages during execution:

- Initial acknowledgment ("Got it!")

- Test plan confirmation

- Final results with screenshots

This keeps users engaged and informed. Without it, they'd wait 60 seconds wondering if their message was received.

URL validation: If the AI couldn't extract a URL, we fail fast with helpful guidance. No point executing a test without a target.

The 5-step pipeline:

- Parse natural language → TestRequest

- Generate dynamic plan → TestPlan

- Execute with browser → TestExecutionResult

- Analyze with AI → Enhanced result

- Format and send → WhatsApp message

The idea is to catch all exceptions, and inform the user gracefully. No stack traces in WhatsApp, just user-friendly error messages.

Sending Test Results

Observe the following code.

WhatsApp formatting: Bold text with asterisks (*bold*), emojis for visual hierarchy. This makes reports scannable on mobile devices. Step limit (8) prevents message overflow. If there are, for example, 15 steps, we show the first 8 and note there are more. This keeps messages readable.

The analysis from GPT-4 is embedded in the report. This gives context beyond pass/fail, explains why something failed and how to fix it. Conditional media: If screenshots exist, send them. If not (maybe the test failed immediately), send text only and link to the media for access. This handles edge cases gracefully.
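The formatting rules above (bold headings, status emojis, and the eight-step cap) might be implemented roughly as follows. The wording of the message, the WhatsAppReport class, and the ReportStep record are assumptions for illustration.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text;

public record ReportStep(string Description, bool Success);

public static class WhatsAppReport
{
    // Maximum number of steps listed before the report is truncated.
    public const int MaxSteps = 8;

    public static string Build(bool passed, IReadOnlyList<ReportStep> steps, string analysis, string reportUrl)
    {
        var sb = new StringBuilder();
        sb.AppendLine(passed ? "✅ *Test Passed*" : "❌ *Test Failed*");
        sb.AppendLine();
        sb.AppendLine("*Steps:*");

        // List at most MaxSteps steps, each with a status emoji.
        foreach (var step in steps.Take(MaxSteps))
        {
            sb.AppendLine($"{(step.Success ? "✅" : "❌")} {step.Description}");
        }
        if (steps.Count > MaxSteps)
        {
            sb.AppendLine($"... and {steps.Count - MaxSteps} more steps");
        }

        sb.AppendLine();
        sb.AppendLine("*Analysis:*");
        sb.AppendLine(analysis);
        sb.AppendLine();
        sb.Append($"Full report: {reportUrl}");
        return sb.ToString();
    }
}
```

With 15 executed steps, the message lists the first 8 and ends the list with "... and 7 more steps", while the link gives access to the complete run.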

Help Message

Users typing "help" or "start" get comprehensive examples. This reduces friction and demonstrates capabilities immediately. Each example is carefully chosen to show different test types. Users can copy, modify, and send.

Serve Test Artifacts with the ArtifactsController

While sending screenshots and videos directly through WhatsApp seems convenient, it's impractical in production due to WhatsApp's 3-media-per-message limit, 16MB file size restrictions, and cost. Large media files may timeout during Twilio's webhook processing, and there's no way to provide video playback controls or organize multiple artifacts effectively.

The HTML Report Solution

To solve these shortcomings, you will provide a single clickable link (included in the WhatsApp analysis report) to a comprehensive HTML report. CI systems such as GitHub Actions, CircleCI, and Jenkins follow the same pattern of linking to a hosted report. The ArtifactsController serves three endpoints:

- /api/artifacts/report/{jobId}: Professional HTML report with screenshot grid, embedded video player, and trace download links

- /api/artifacts/video/{fileName}: Video streaming with range requests for playback controls

- /api/artifacts/trace/{fileName}: Downloadable Playwright trace files for debugging

This approach delivers unlimited screenshots, full video playback controls, persistent storage, instant WhatsApp delivery, lower costs, and enables easy sharing across teams. Reports remain accessible indefinitely and can be archived for compliance.

The complete controller with responsive HTML templating and video streaming is available in the GitHub repository. This webhook → process → notify with link pattern is scalable, reliable, and provides superior UX compared to inline media delivery. Next, proceed to configure the application to wrap up the development.

Configure the Application

Program.cs is where everything is wired together and the application settings are configured.

Program.cs Setup

This is the content of your QAChatOps.Api/Program.cs.

Application Configuration

Review the file QAChatOps.Api/appsettings.json.

ArtifactsPath: Relative path from the application root. Using wwwroot/artifacts ensures files are web-accessible.

This file contains placeholder values that you will need to replace.

PublicBaseUrl: This will be your ngrok URL during development (you'll set this up next). In production, it's your actual domain. This is critical: without it, WhatsApp can't access screenshots.

Twilio configuration:

- AccountSid: Found in Twilio Console dashboard

- AuthToken: Found in Twilio Console (keep this secret!)

- WhatsAppNumber: Your Twilio WhatsApp number in the format whatsapp:+14155238886

OpenAI configuration:

- ApiKey: Get from OpenAI Platform

- Model: Use gpt-4 as a starting point. Depending on your budget, a more powerful model may give better results.

Running the Application with ngrok

Since Twilio needs to send webhooks to your application, you need to expose localhost to the internet. ngrok is perfect for this.

Step 1: Start Your Application

Fire the command to start the application.

Step 2: Start ngrok

Open a new terminal window (keep your app running) and start ngrok:

Note that you may need to adjust the port number to the port on which your application is running.

Copy the HTTPS URL provided (e.g., https://abc123.ngrok-free.app). ngrok creates a secure tunnel from a public URL to your localhost. Twilio can now reach your webhook at https://abc123.ngrok-free.app/api/webhook/whatsapp. Navigate to http://127.0.0.1:4040 to see all webhook requests in real time. This is invaluable for debugging because you can see exactly what Twilio is sending.

Step 3: Update Configuration

Update your appsettings.Development.json with your ngrok URL:

Restart your application for the change to take effect. In your app terminal, press Ctrl+C, then:

Step 4: Configure Twilio Webhook

- Go back to Twilio Console → Messaging → Try it out → Send a WhatsApp message

- Scroll to Sandbox Configuration. You may need to first join the sandbox using the Join code provided.

- In the When a message comes in field, enter your ngrok URL followed by the webhook path, for example: https://abc123.ngrok-free.app/api/webhook/whatsapp

- Method: HTTP POST

- Click Save

Send "join code" to whatsapp:+14155238886 (use the join code and number shown in your Twilio Console) to connect your WhatsApp to the sandbox.

Testing the webhook: Send any message to your Twilio WhatsApp number. Check your application logs. You should see:

If you see this, your webhook is working!

Testing Your QA ChatOps Assistant

Now for the exciting part! It's time to test the complete system.

Test 1: Simple Navigation

Send this WhatsApp message:

Check if search works on https://amazon.com for MacBook

The screenshot below shows what happens.

You can also look at the logs to see what's happening. To access the media files, click or copy the link provided in the WhatsApp message and paste in your browser. Make sure your app is still up and running.

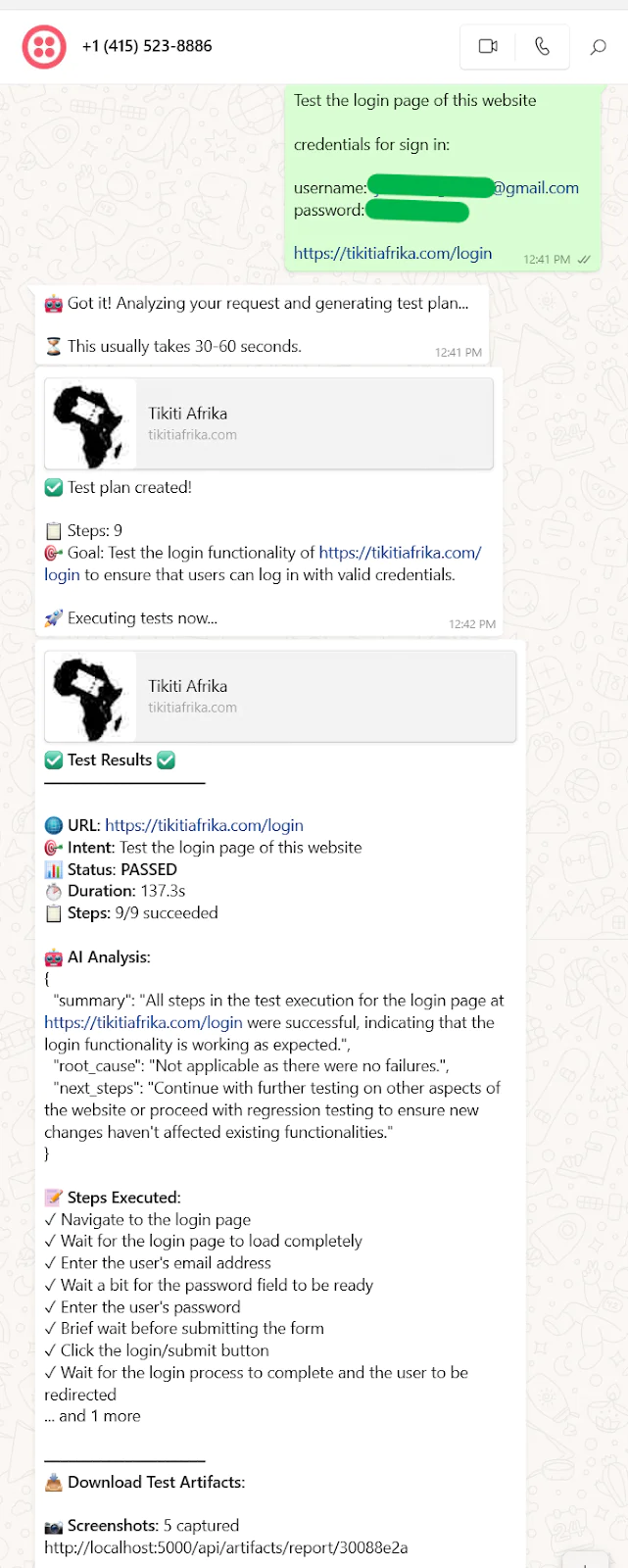

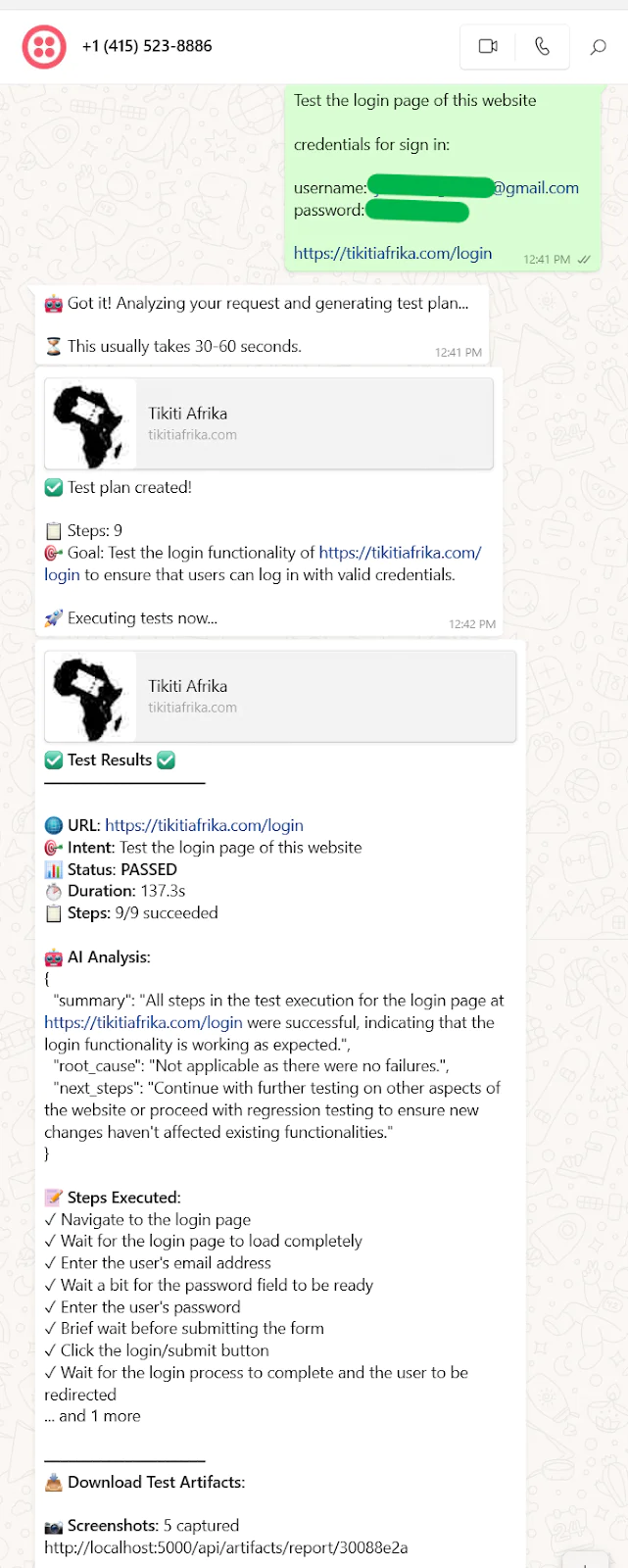

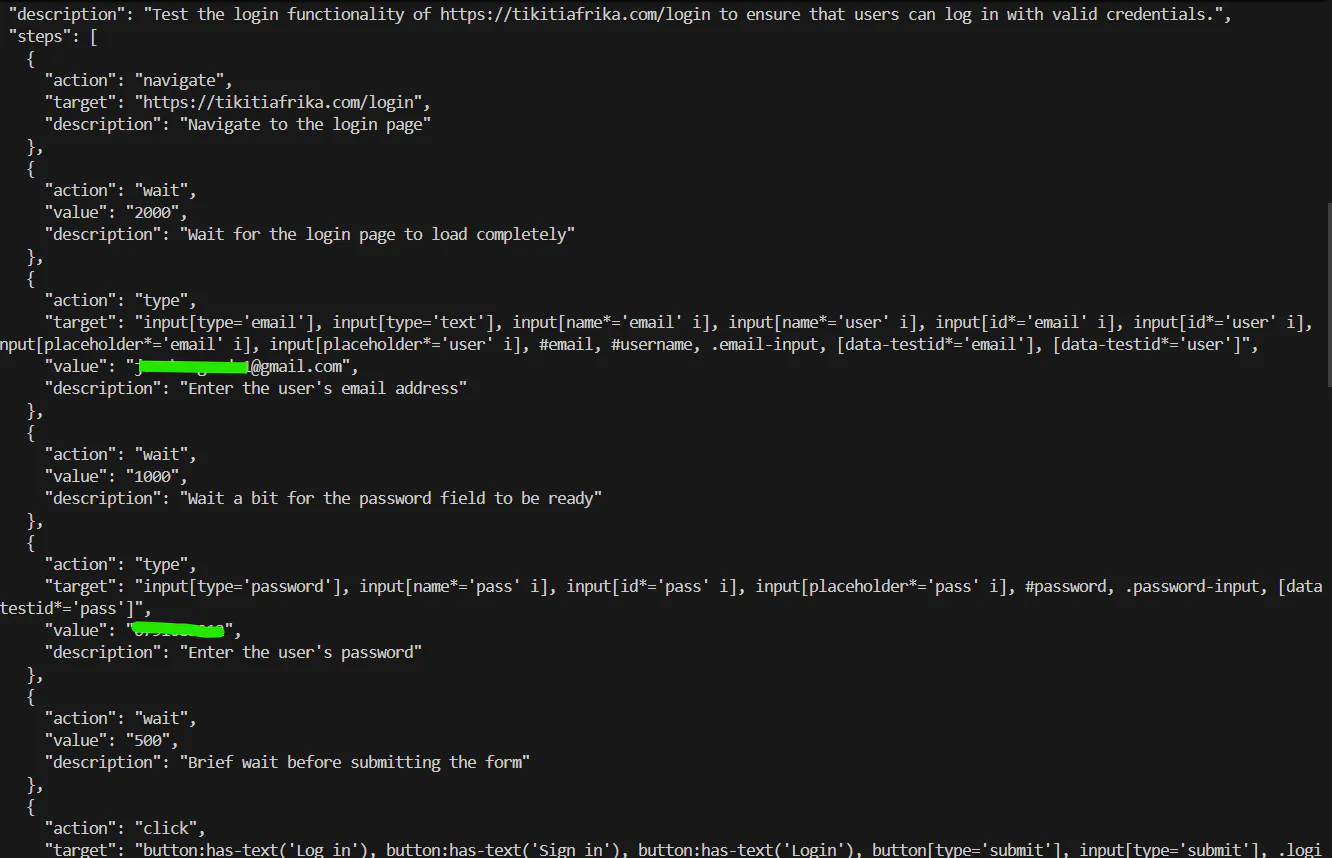

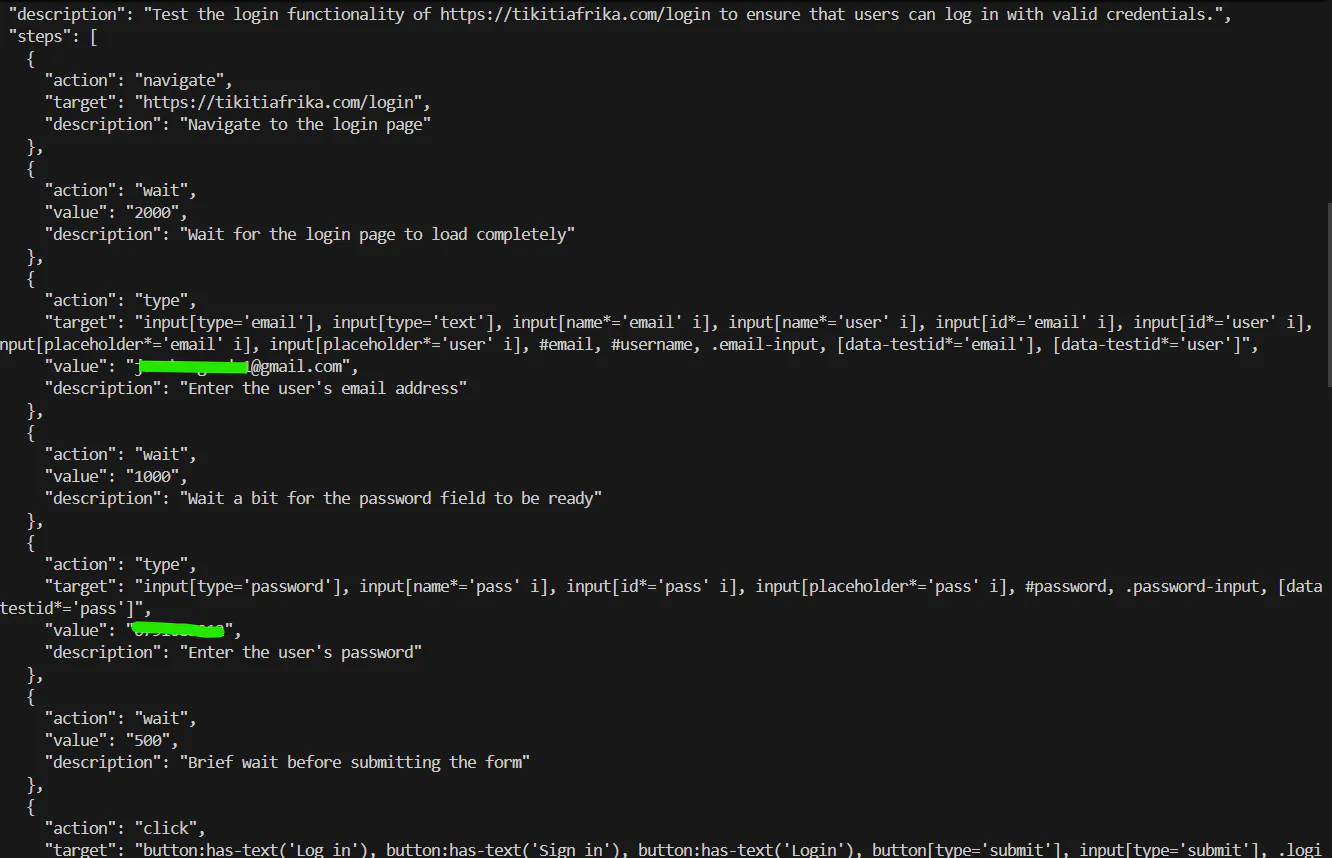

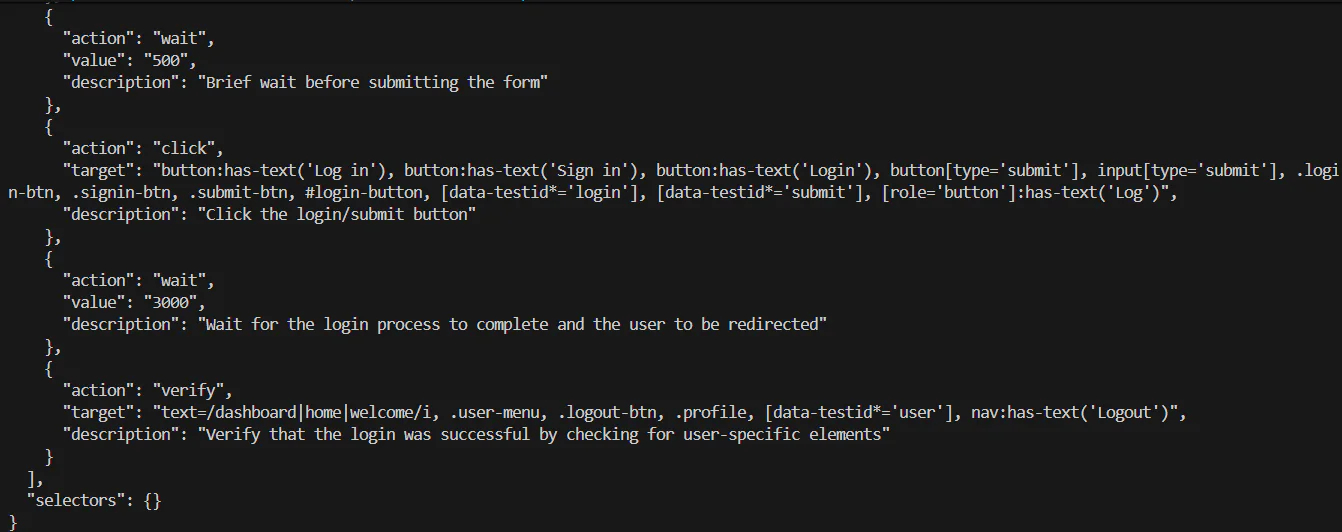

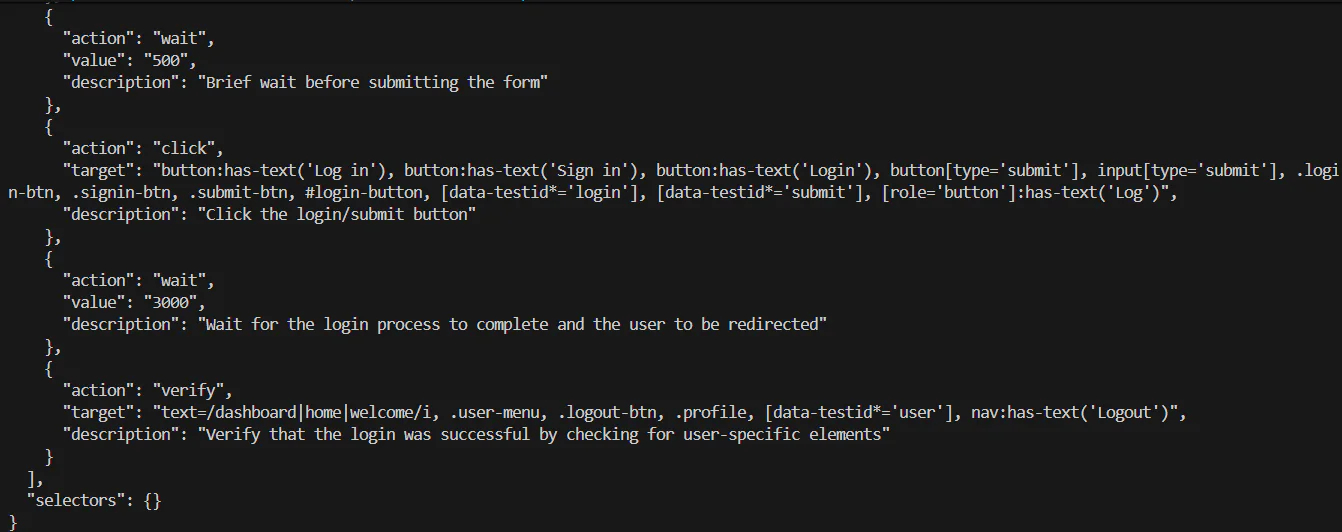

Test 2: Login Flow

Send this message:

Test the login page of this website. Create an account and use those details for performing the test.

Credentials for performing test:

username: YourUserName

password: YourPassword

https://tikitiafrika.com/login

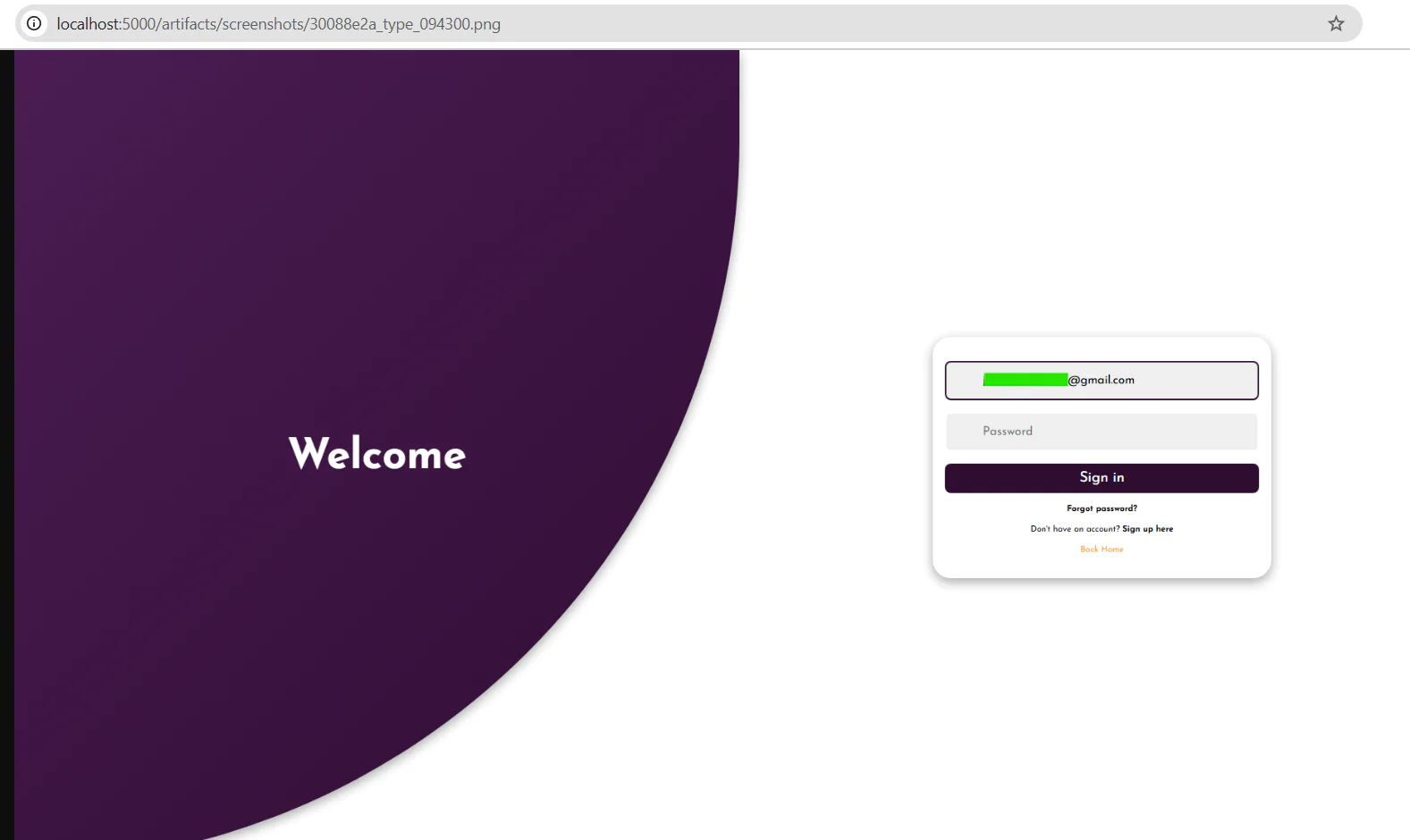

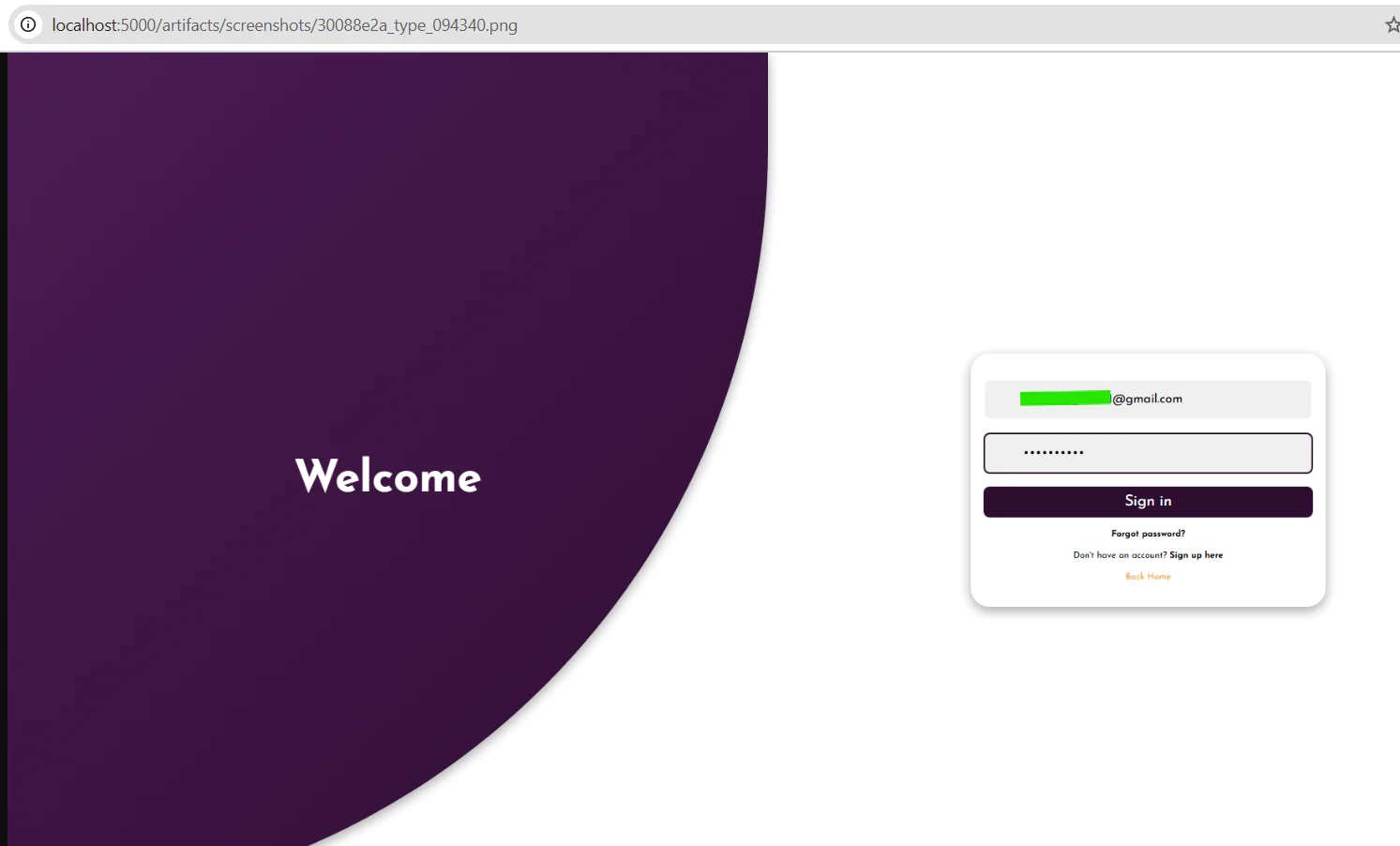

Check out the screenshots below to see the results of your application.

See the logs in your terminal to understand every step happening in your login workflow test.

The screenshots illustrate each step of the login workflow.

To access the full report with the media and traces, click or copy the link provided in the WhatsApp message and paste in your browser. Make sure your app is still up and running. Here is the report as shown in the screenshot below.

Feel free to perform other types of tests by just chatting through WhatsApp.

Troubleshooting Common Issues

Issue 1: "OpenAI API key not configured"

Symptoms: Application crashes on startup with this error.

Solution: Ensure appsettings.json has your OpenAI API key:

Testing: After adding the key, restart your app. You should see the startup log without errors.

Issue 2: WhatsApp messages not received

Symptoms: Sending messages to Twilio number, but webhook never fires.

Diagnostics:

- Check ngrok is running by opening http://127.0.0.1:4040

- Check Twilio webhook URL matches your ngrok URL exactly

- Ensure your ngrok URL is HTTPS, not HTTP

- Verify you joined the WhatsApp sandbox (send the join code)

Solution:

Issue 3: "Failed to generate test plan"

Symptoms: Bot responds but says it couldn't generate a plan.

Causes:

- OpenAI API quota exceeded

- Network connectivity to OpenAI

- Invalid API key

Diagnostics: Check application logs for OpenAI API errors:

Look for HTTP error codes in logs (401 = invalid key, 429 = rate limit).

Issue 4: Tests timeout frequently

Symptoms: Many tests fail with timeout errors after 30 seconds.

Causes:

- Slow internet connection

- Target website is slow

- Selectors not found

Solution: Increase timeouts in TestOrchestrator.cs:

Better solution: Ask AI to generate more resilient selectors by modifying the system prompt in AITestGenerator.cs.

Next Steps and Enhancements

Now that you have a working QA ChatOps assistant, consider these improvements:

- Scheduled recurring tests - Use Hangfire for hourly/daily smoke tests

- Slack/Teams integration - Send results to team channels, not just WhatsApp

- API testing capabilities - Extend beyond UI to test REST/GraphQL endpoints

- Blob storage integration - Replace local file storage with Azure Blob or S3

- Add test history database - Track all test runs, success rates, and trends

Your intelligent testing system is now operational and ready to revolutionize how your team performs quality assurance. Simply send a WhatsApp message describing what you want to test, and watch as AI interprets your intent, generates dynamic test plans, executes them with Playwright, and delivers comprehensive results with screenshots. Your ChatOps QA assistant is ready to transform your quality assurance workflow!

Jacob Snipes is a seasoned AI Engineer who transforms complex communication challenges into seamless, intelligent solutions. With a strong foundation in full-stack development and a keen eye for innovation, he crafts applications that enhance user experiences and streamline operations. Jacob's work bridges the gap between cutting-edge technology and real-world applications, delivering impactful results across various industries.
