A Guide to Core Latency in AI Voice Agents (Cascaded Edition)


Latency is the defining constraint in AI voice agent design. Humans have a deeply ingrained aversion to pauses in conversation, and even small delays can make an interaction feel unnatural. Meeting those expectations at scale requires careful engineering and attention to detail.

At Twilio, we’ve spent the past several years helping teams design and deploy voice AI systems. And we’ve learned that latency can mean many different things depending on context: spikes from tool calls, added computation from complex reasoning, or the inherent processing time built into the core pipeline.

This article is a guide to core latency in AI voice agents: the baseline delays that occur in every turn of a conversation. We’ll walk through the components of the cascaded voice agent architecture that contribute to core latency, show where delays accumulate, and provide initial milestone latency targets to get you started. Along the way, we’ll provide tips and highlight the tradeoffs that matter most for builders.

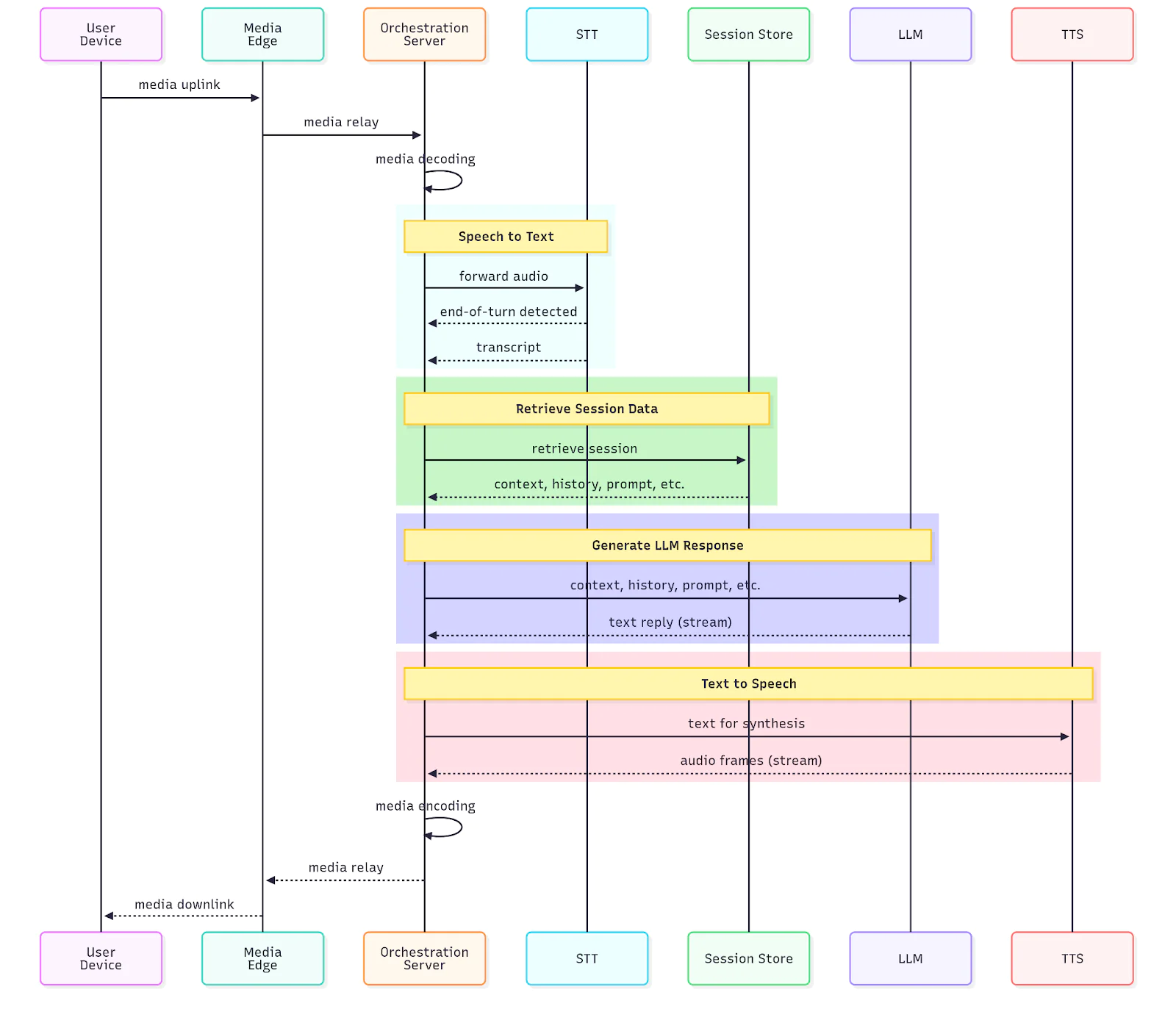

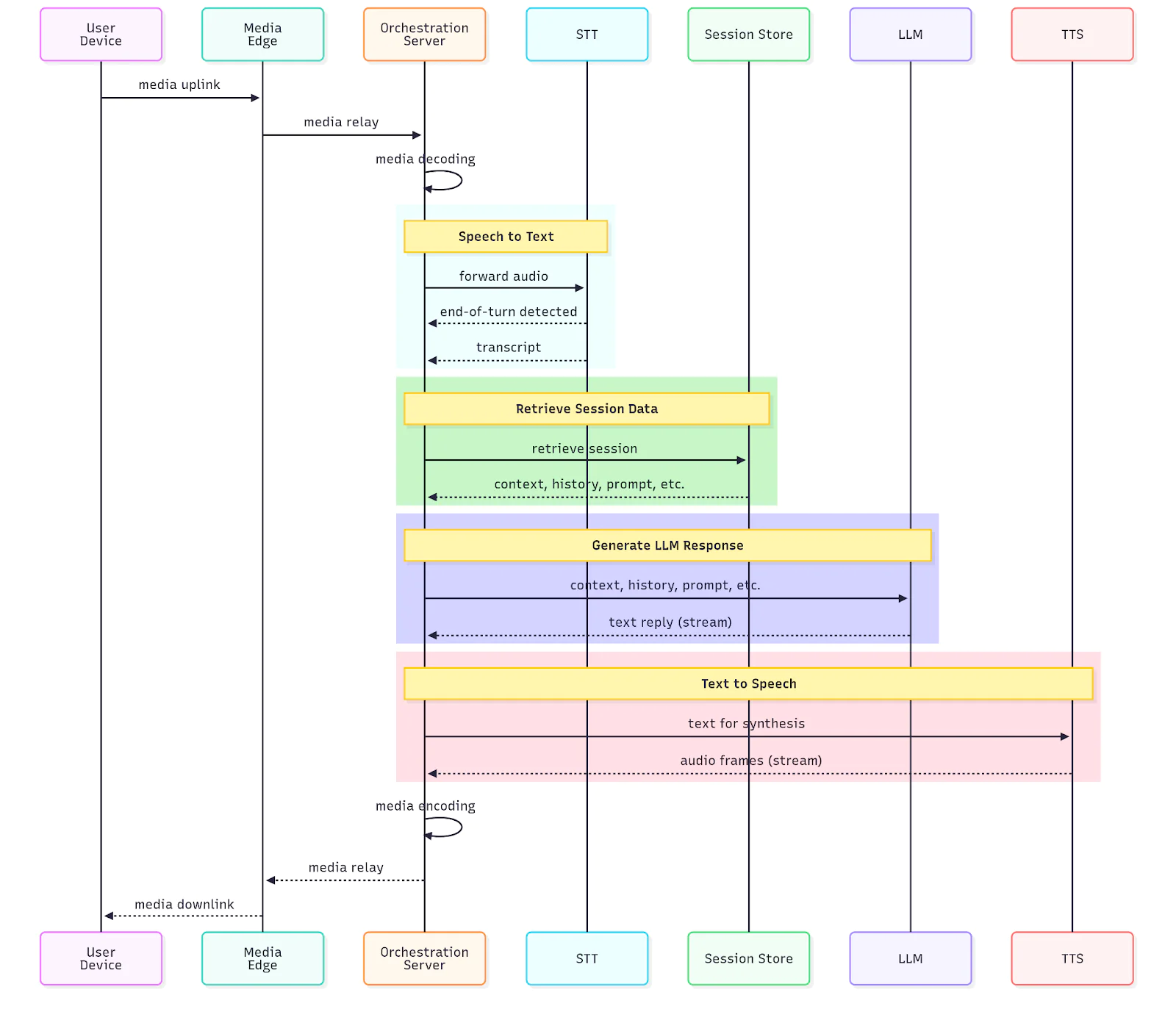

Core components of a Cascaded Voice Agent Architecture

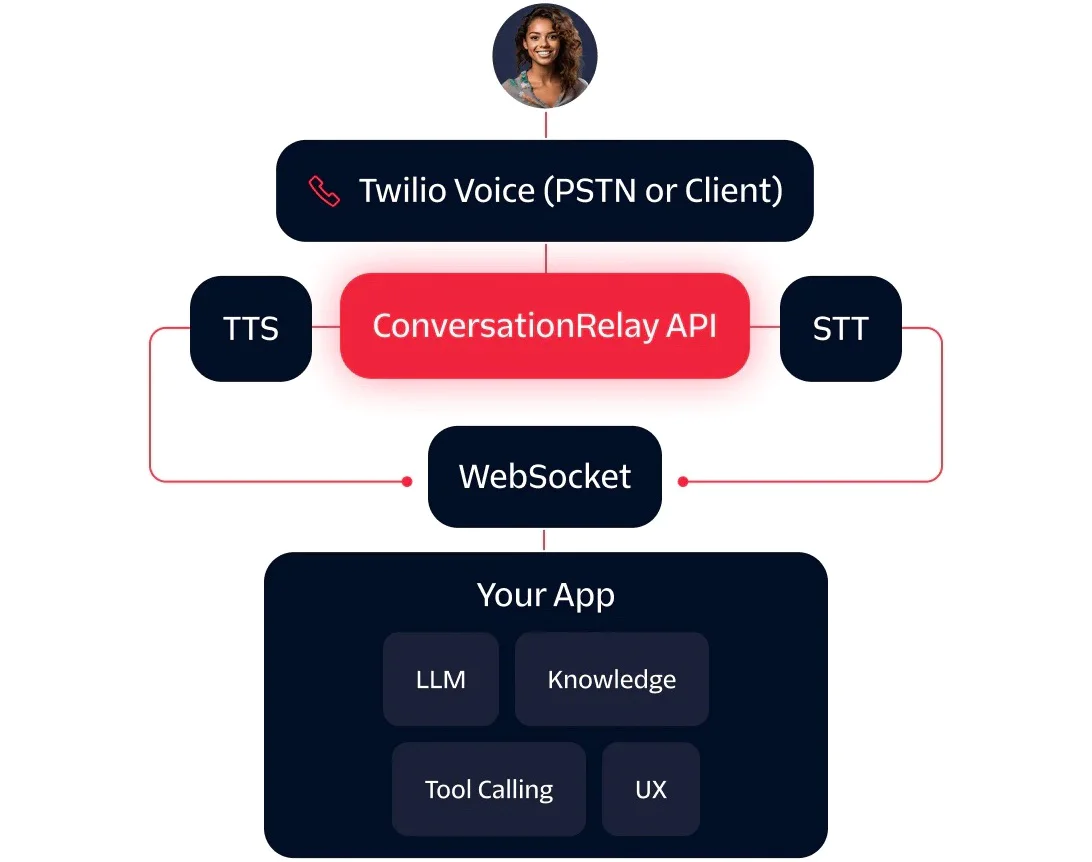

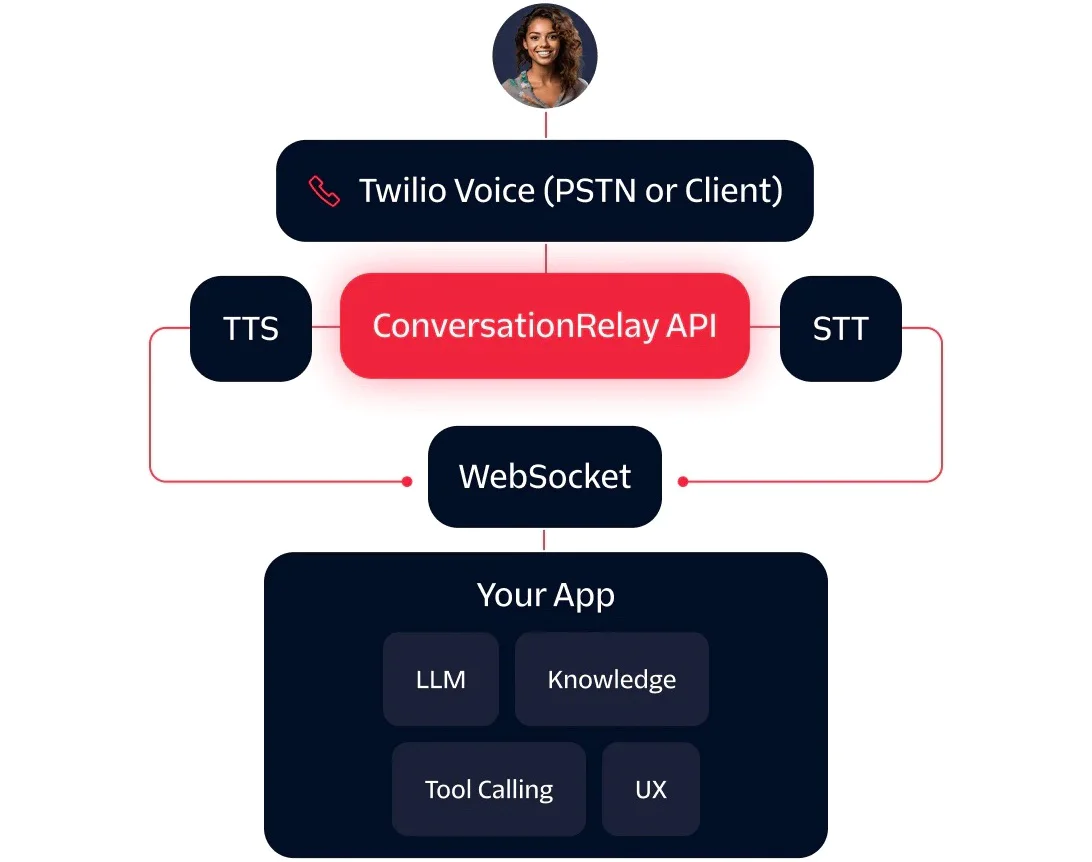

Voice agents can be built in different ways, but the most common approach today is a cascaded architecture where several specialized models are chained together in a pipeline. At Twilio, we refer to this pattern as a Cascaded Voice Agent Architecture.

At its core, the user’s speech is transcribed, the transcript is processed by an LLM, then the resulting tokens are synthesized into audio.

Speech-to-Text (STT) => Large Language Model (LLM) => Text-to-Speech (TTS)

Of course, there’s much more to it than that. The real strength of this architecture is that it is highly modular, which gives builders many ways to optimize and extend a voice agent. But that same flexibility also means latency can be defined and experienced in different ways. And if we tried to cover everything in this post, it would be a textbook.

So to keep the discussion focused and practical, we’ll narrow in on the essentials:

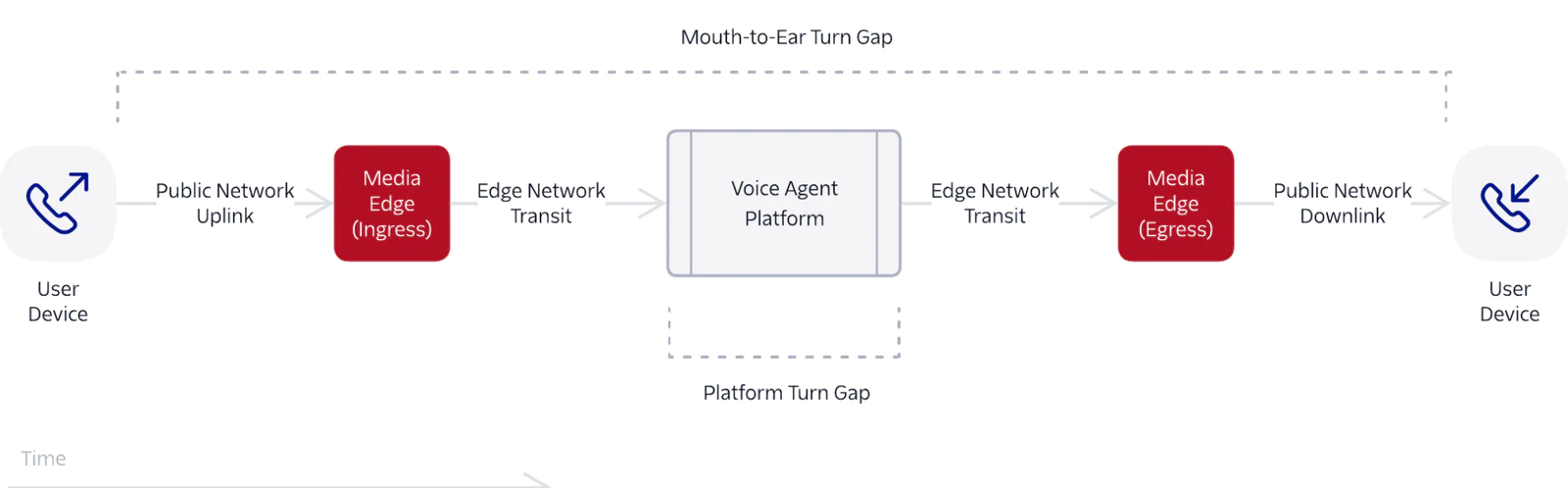

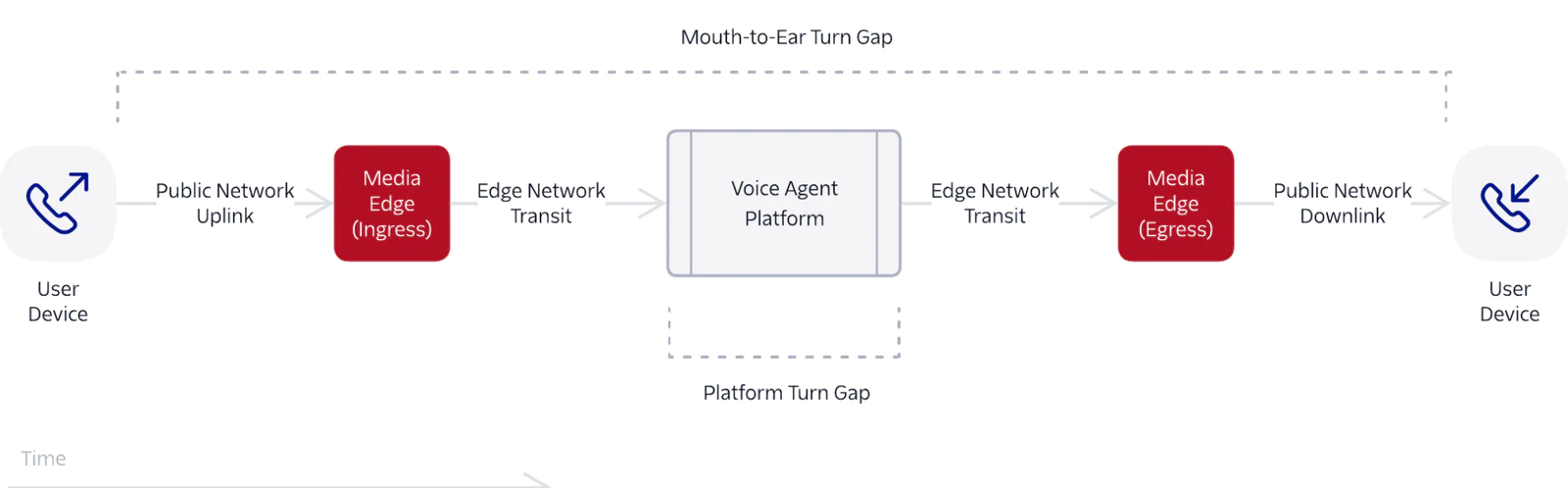

- Latency is defined as the mouth-to-ear turn gap, i.e., the time from when a user stops speaking to when the voice agent’s reply reaches their ear.

- We only consider core latency drivers, i.e., the factors that contribute to latency in every single interaction, not the occasional spikes that come from tool calls or complex reasoning.

- We exclude orchestration-layer optimizations such as generative interstitial fillers, parallel reasoning patterns such as talker-reasoner, graduated turn boundaries, and other optimizations or sources of lag. Our discussion assumes a straightforward cascaded pipeline where we can isolate the core sources of latency.

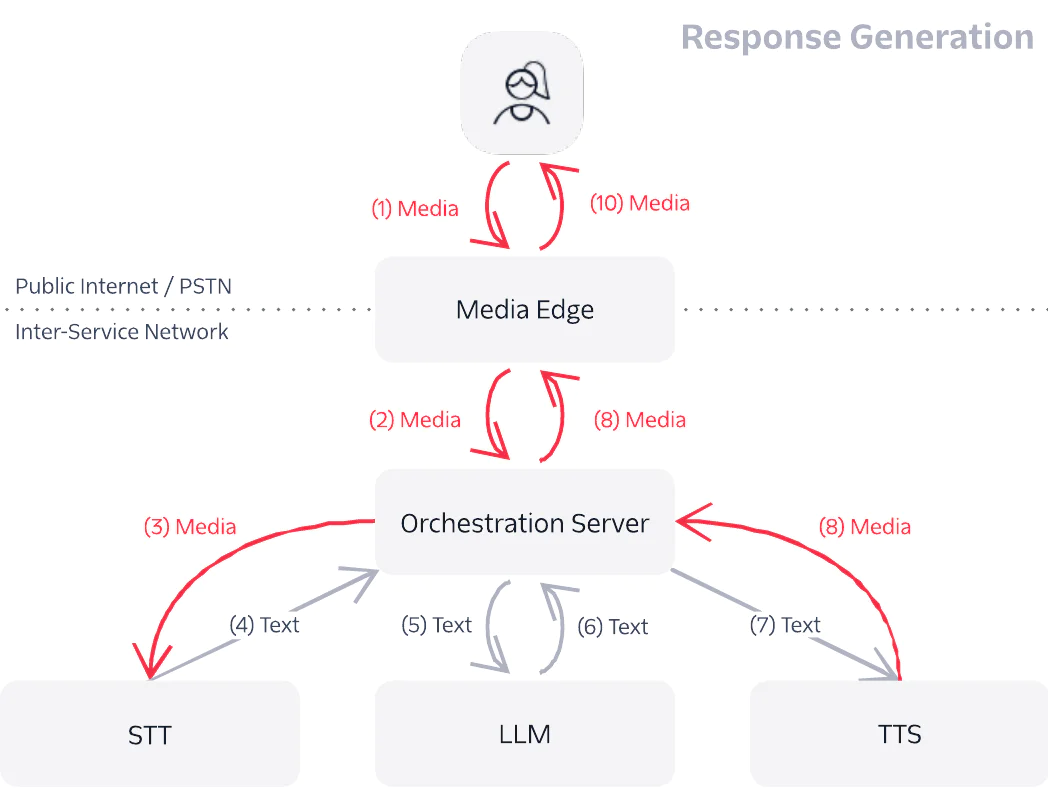

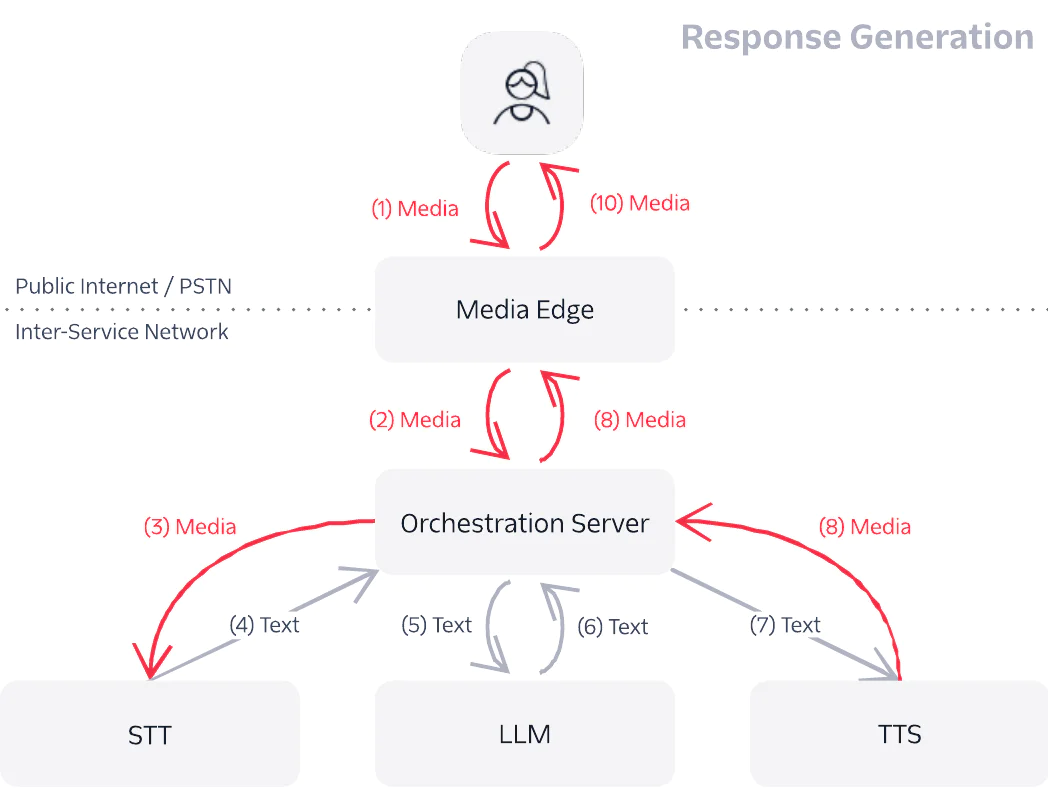

With that in mind, here’s a high-level look at the steps involved in generating a response that contribute to latency.

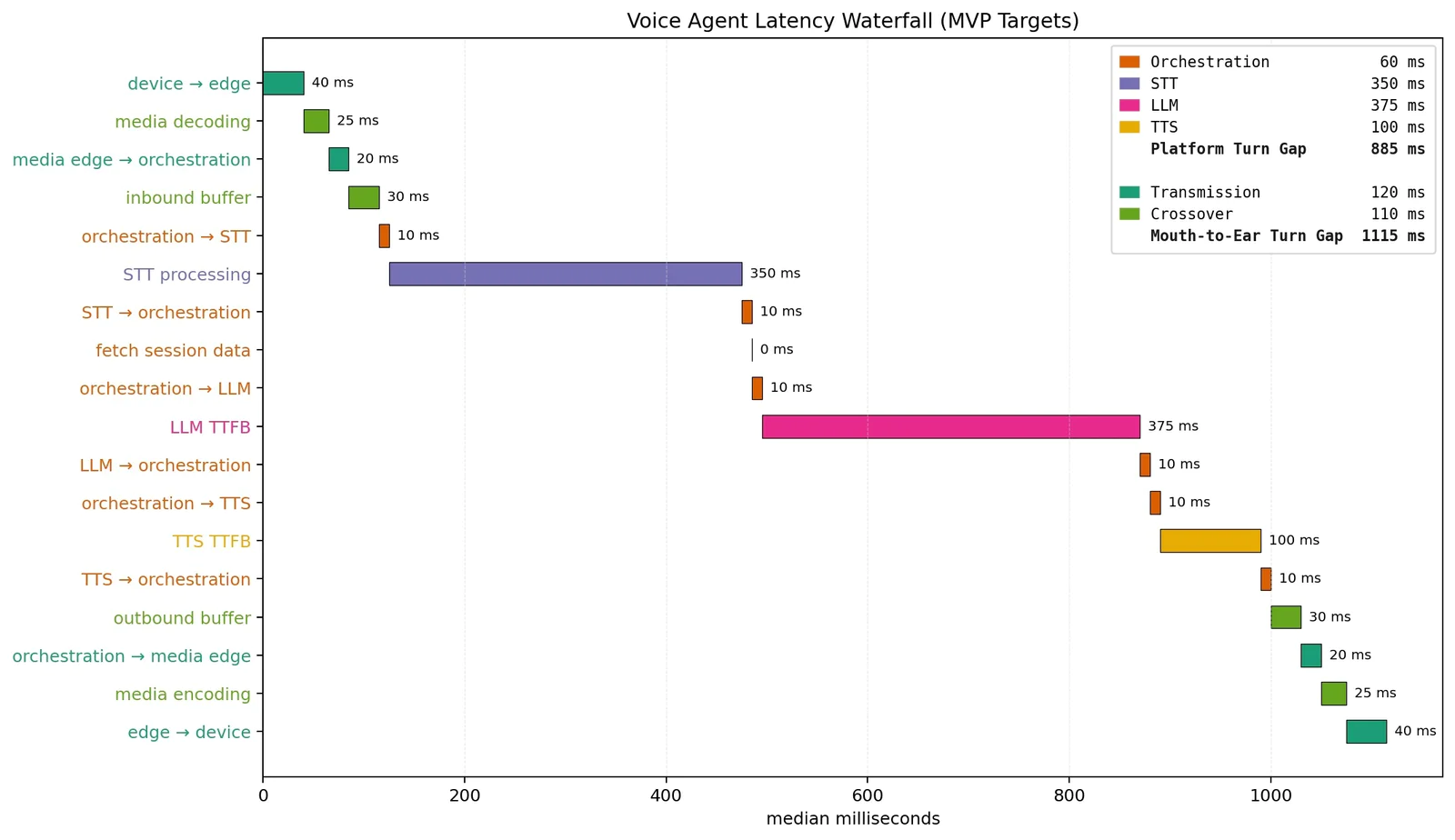

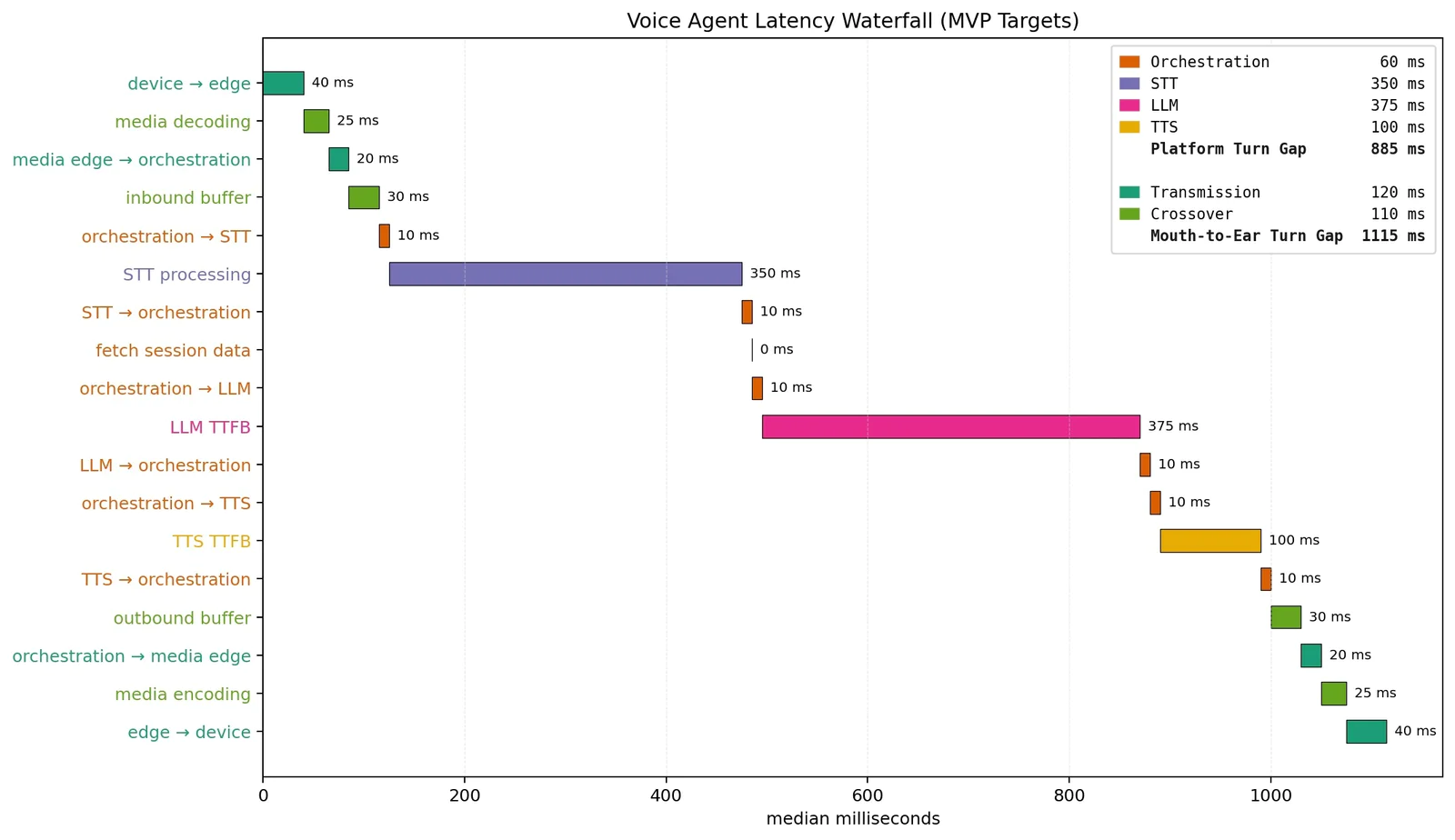

Understanding latency accumulation and initial targets

Voice agent latency in a Cascaded Voice Agent Architecture doesn’t come from a single source. It’s the result of several steps stacked together, each one adding its own bit of delay. The purpose of this section is to give you intuition for where that latency comes from, and a rough sense of the order of magnitude you should expect when putting together your first milestones.

We’ll go deeper into benchmarking and performance optimizations in future posts, but for now, here’s how latency can build up across the pipeline with a straightforward implementation (note that an implementation with Twilio will not necessarily have all of these sources of latency).

Audio travels from the user’s device to the media edge (40 ms) with buffering (30 ms) and decoding (25 ms), then through orchestration to STT (350 ms), LLM (375 ms), and TTS (100 ms), each separated by brief service hops (~10 ms). The result is re-encoded, buffered, and returned to the user (≈1.1 s total mouth-to-ear latency).

It’s important to also be precise about how latency is defined. In many cases we’ve seen from other vendors, benchmarks only measure a platform’s internal processing, leaving out the variability of network transmission. To keep things clear, we’ll provide two definitions:

Mouth-to-Ear Turn Gap reflects the latency exactly as the user perceives it: it begins when the user stops speaking and ends when the agent’s reply reaches their ear. It encompasses all latency, including the transmission of audio across the voice network to the agent platform.

Platform Turn Gap only reflects the latency within the voice agent platform. It excludes latency introduced by the audio traveling across the internet or public switched telephone network (PSTN) between the user’s device and the platform.

Target latencies for voice AI agents at launch

As of November 2025, these are our median end-to-end benchmark ranges for the initial launch of a voice agent:

| End-to-End Latency | Target (ms) | Upper Limit (ms) |
| --- | --- | --- |
| Mouth-to-Ear Turn Gap | 1,115 | 1,400 |
| Platform Turn Gap | 885 | 1,100 |

And here are our benchmark ranges for each major component:

| Component Latency | Target (ms) | Upper Limit (ms) |
| --- | --- | --- |
| Speech-to-Text | 350 | 500 |
| LLM TTFT | 375 | 750 |
| Text-to-Speech TTFB | 100 | 250 |
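To see how the component targets relate to the end-to-end targets, the short sketch below subtracts one from the other. It uses only the numbers in the two tables. The function name and the labels for the leftover time are illustrative, not part of any Twilio tooling.

```python
# Targets quoted in the tables above, in milliseconds.
COMPONENT_TARGETS_MS = {"stt": 350, "llm_ttft": 375, "tts_ttfb": 100}
PLATFORM_TURN_GAP_TARGET_MS = 885
MOUTH_TO_EAR_TARGET_MS = 1115


def latency_budget(components, platform_target, mouth_to_ear_target):
    """Split the end-to-end targets into model time and everything else."""
    model_ms = sum(components.values())
    return {
        "model_ms": model_ms,
        # Time left inside the platform for service hops and orchestration.
        "platform_overhead_ms": platform_target - model_ms,
        # Time left outside the platform for the two voice network legs.
        "voice_network_ms": mouth_to_ear_target - platform_target,
    }


budget = latency_budget(
    COMPONENT_TARGETS_MS, PLATFORM_TURN_GAP_TARGET_MS, MOUTH_TO_EAR_TARGET_MS
)
for name, value in budget.items():
    print(f"{name}: {value} ms")
```

Read this way, the three models account for most of the platform target, and only a small remainder is available for every hop and handoff between them.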

Orchestration layer latency drivers

A cascaded voice agent is defined by the orchestration layer, a runtime platform that coordinates the communication between several specialized models and services. By contrast, a speech-to-speech model processes the entire exchange in a single latent space with no intermediate representations.

Orchestration latency is primarily driven by network transmission time and overhead during network crossover, such as jitter buffers and codec transcoding. These overheads express themselves in two distinct but interrelated domains:

- Voice Network Latency: The delays introduced as audio travels from the user’s device, across the public internet and/or PSTN, through edge infrastructure, and into the agent platform. It reflects both controllable factors and uncontrollable ones (last-mile congestion, variable packet loss, noisy neighbors), making it one of the most variable contributors to end-to-end latency.

- Inter-Service Latency: The delays introduced within the platform itself as audio and data pass between services like STT, LLM, and TTS. Each inter-service hop adds transmission and processing overhead, compounding across the pipeline.

Minimizing inter-service latency

To generate a single response, a cascaded agent requires at least ten network traversals: two voice legs over the public network (internet or PSTN) and eight inter-service handoffs. The key challenge is that a seemingly benign inefficiency can become problematic when it is repeated across some or all of these steps.

Cluster your services and deploy them regionally

Where you host your services is one of the first considerations. When your services are spread across regions (or routed inefficiently), each hop between STT, LLM, and TTS adds a few extra milliseconds, and that overhead accumulates across the pipeline.
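A quick back-of-the-envelope calculation shows why small per-hop penalties deserve attention. The sketch below multiplies a per-hop penalty by the eight inter-service handoffs counted earlier. The penalty values are hypothetical and chosen only for illustration.

```python
INTER_SERVICE_HANDOFFS = 8  # per response, as counted in this article


def added_latency_ms(extra_ms_per_hop, hops=INTER_SERVICE_HANDOFFS):
    """Total extra delay per turn when every handoff pays the same penalty."""
    return extra_ms_per_hop * hops


for extra in (2, 5, 15):  # hypothetical per-hop penalties in ms
    print(f"{extra} ms per hop -> {added_latency_ms(extra)} ms per turn")
```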

The general principles are simple: keep your services close together and deploy them close to the customer. You can achieve this through the usual means such as monolithic infrastructures, colocation, or regionalized APIs. A fourth option is managed voice orchestration, a service that colocates STT, TTS, and orchestration near the media edge so you don’t have to.

💡 Prioritize your media services first

Audio is heavier than text. It consumes more bandwidth, is more sensitive to intermittent service degradation, and is more strenuous in nearly every way. Focus your initial effort and budget on keeping your orchestration server, STT, and TTS models close together. Delays from the LLM or tool endpoints still matter, but they’re easier to mask with interstitial fillers and are generally less volatile since they only deal with text.

💡 Colocate at the media edge location for maximum performance

Media always enters through the edge location nearest the customer. What technically matters is the distance from that ingress point to your services, not the distance to the end-user. For best results, we suggest our customers colocate at a Twilio Edge Location or use Twilio Interconnect to connect privately into the edge.

💡 Be cautious when co-hosting STT and TTS on the same machine

STT and TTS workloads are generally out of phase, which makes it tempting to run them on the same machine. However, when interruptions or false VAD triggers cause turn collisions, the workloads align in phase and produce constructive interference that drives tail workload spikes.

If any component degrades in a way that triggers more collisions than normal, many sessions will be at tail workload levels. Once GPU memory and compute cross a threshold, task-switching overhead and contention pile on. That adds latency across all services on the inference stack, triggering additional situations that cause constructive interference. Eventually, the system feeds back into a death spiral. Colocation is typically a better alternative to co-hosting.

💡 Take the time to plan your regional strategy

You do not control where your customers are. That makes it essential to plan ahead by choosing inference providers who offer regionalized APIs or support on-premise colocation, and pairing them with a voice network that has global media edge locations.

This may sound obvious, but many providers still do not host certain models worldwide, and some solutions, such as TTS, depend on broad caching networks that are not always truly regionalized. Do your homework up front, since replacing providers after colocation and integration is expensive and disruptive.

Avoid unnecessary crossover costs

A certain amount of processing overhead is unavoidable. Our warning here: it is easy to introduce additional overhead by mistake, particularly when working with audio. Here are a few tips on how to avoid these situations:

💡 Pay attention to audio formatting to avoid hidden conversions

All audio models – including STT, TTS, and other audio ML components – are trained on raw PCM audio with a specific format. Provider APIs often accept a variety of inputs and silently convert them to the format the model expects, which introduces latency and can sometimes degrade quality.

Our recommendation isn’t necessarily to handle all conversion yourself, although that is often the cleanest solution, but rather to understand how audio conversion occurs throughout the pipeline so you can avoid unnecessary overhead.

Pay particular attention to these aspects of your audio format:

- Codec: All audio models operate on uncompressed PCM.

- Sampling rate: STT models typically expect 16 kHz; TTS models often use 24 kHz or 48 kHz. Any mismatch triggers resampling.

- Channel layout: Most models are trained on mono input. Other layouts may be downmixed automatically.

- Frame alignment: STT models process audio in small frames for real-time processing, while TTS models typically buffer and return larger audio segments. Ensure your buffers are aligned with each service to avoid over- or under-buffering.
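One way to make hidden conversions visible is to write down the format at each boundary and compare it with what the next service expects. The sketch below does that for codec, sampling rate, and channel layout. The `AudioFormat` type, the `hidden_conversions` helper, and the example formats are hypothetical; check your provider's documentation for the formats it actually expects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AudioFormat:
    codec: str            # e.g. "pcm_s16le" or "mulaw"
    sample_rate_hz: int
    channels: int


def hidden_conversions(source, expected):
    """List the conversions needed to turn `source` into `expected`."""
    steps = []
    if source.codec != expected.codec:
        steps.append(f"transcode {source.codec} -> {expected.codec}")
    if source.sample_rate_hz != expected.sample_rate_hz:
        steps.append(
            f"resample {source.sample_rate_hz} Hz -> {expected.sample_rate_hz} Hz"
        )
    if source.channels != expected.channels:
        steps.append(f"remix {source.channels} ch -> {expected.channels} ch")
    return steps


# Hypothetical example: 8 kHz mu-law telephony audio sent to an STT model
# that is assumed to expect 16 kHz mono PCM.
telephony = AudioFormat("mulaw", 8000, 1)
stt_input = AudioFormat("pcm_s16le", 16000, 1)
print(hidden_conversions(telephony, stt_input))
```

An empty list means the audio can be passed through untouched. Anything else is work that someone, either you or the provider, performs on every frame.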

💡 Use persistent REST HTTP connections

Confirm that your HTTP clients and SDKs are reusing persistent (keep-alive) connections to avoid unnecessary handshakes. This is particularly important for serverless architectures, where additional steps may be needed to keep connections alive.
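The difference is easy to observe locally. The sketch below uses only Python's standard library: a throwaway local server stands in for a provider endpoint and counts how many TCP connections it accepts when a client reuses one connection versus opening a new one per request. The `Handler` class and `connections_used` helper are illustrative names, and a real provider call would also pay a TLS handshake on each new connection.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class Handler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # lets the server keep the connection open
    connections_accepted = 0

    def setup(self):
        super().setup()
        Handler.connections_accepted += 1  # runs once per TCP connection

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):  # silence per-request logging
        pass


def connections_used(reuse, requests=3):
    """Send `requests` GETs and return how many TCP connections the server saw."""
    Handler.connections_accepted = 0
    server = ThreadingHTTPServer(("127.0.0.1", 0), Handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    conn = http.client.HTTPConnection(host, port)
    for _ in range(requests):
        if not reuse:
            conn.close()  # force a fresh handshake for every request
            conn = http.client.HTTPConnection(host, port)
        conn.request("GET", "/")
        conn.getresponse().read()  # drain the body so the socket can be reused
    conn.close()
    server.shutdown()
    server.server_close()
    return Handler.connections_accepted


print("keep-alive:", connections_used(reuse=True), "connection(s)")
print("no reuse:  ", connections_used(reuse=False), "connection(s)")
```

Most HTTP libraries expose the same idea through a long-lived client or session object. Create it once and share it, rather than constructing a new client for every request.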

Minimizing voice network latency

Optimizing a voice network is a huge topic, and Twilio’s blog and documentation have already covered it extensively. What we’ll do here is focus on a few high-level considerations that matter most for latency-sensitive voice agents.

💡 Minimize the distance between the user and the media edge location

Every voice connection begins with network transmission across a public network. Audio from the user’s device travels over the PSTN or open internet to a media edge location. From there, it is relayed across a private edge network to the agent platform.

The public leg is the slowest and least reliable part of this path. Your goal is to minimize the distance between the user and the media edge so calls spend as little time as possible on the public network. You can read more about this topic here: Operate Globally with Twilio Regions and Edge Locations.

If your voice provider doesn’t operate a global edge network, your server effectively acts as the media edge, forcing audio to traverse the public internet all the way to your platform.

Twilio maintains a global voice network with media edge locations distributed across major regions, ensuring users connect through the closest ingress point. For even greater control, Twilio Interconnect lets you establish private connections directly with your data center, further improving performance and reliability.

💡 Eliminate extra network traversals with BYOC

Each additional network boundary can introduce another encode, another decode, and another jitter buffer. These costs don’t come from Twilio voice legs – Twilio’s media edge and private backbone are designed to avoid unnecessary transcoding and buffering.

The real risk comes from crossing between multiple external networks (for example: third-party CPaaS platforms, SIP intermediaries, or WebRTC bridges). Every time traffic leaves one private domain and re-enters another, there is often a hidden media conversion, a buffer realignment, or an extra relay hop. These crossover costs accumulate quickly, adding latency and increasing carrier spend while also degrading audio quality.

Bring Your Own Carrier (BYOC) removes those unnecessary traversals. By using Twilio Elastic SIP Trunking as the direct connectivity backbone, your contact center platform connects without detouring through external CPaaS hops or additional public-network touchpoints, while still retaining Twilio’s low-level call control.

The result is fewer inter-network crossings, lower latency, and materially lower cost, especially at scale.

You can find solution blueprints for major contact center providers here: Elastic SIP Trunking – Solution Blueprints.

Using a managed voice orchestration service

For most builders, the hardest part of achieving low-latency performance is everything around audio. There are many specialized models, each with their own quirks and dependencies. The workloads are heavy, sensitive to degradation, and leave little margin for error. On top of that, voice integrations require deep industry knowledge of telephony networks.

Most AI teams don’t want to manage that type of complexity, nor should they have to.

A managed voice orchestration service handles the entire real-time audio and AI processing pipeline for you. It streams, transcribes, detects turns, and synthesizes speech while keeping every step synchronized and optimized for latency. It turns the complexity of audio into a clean text interface, letting you focus on the agent’s reasoning instead of media plumbing.

Twilio ConversationRelay

ConversationRelay is Twilio’s managed voice orchestration service for AI voice agents. It manages the entire real-time media and AI pipeline so your team doesn’t have to.

- Built for live conversation: <0.5 second median latency, <0.725 second at the 95th percentile.¹

- Audio pipeline orchestration: Manages real-time speech recognition and synthesis to keep audio tightly synchronized.

- Excellent voice models: Integrates leading STT and TTS providers including Amazon, Deepgram, ElevenLabs, Google, and more to come.

- Performance optimization: Low-latency infrastructure, streamlined handoffs, and tuned network paths.

- Global deployment: Operates across Twilio’s global media edge network for consistently low latency worldwide.

- Cross-protocol compatibility: Works across WebRTC, SIP, and the PSTN, so your voice agent can connect anywhere.

- Built on Twilio Programmable Voice: Provides programmable call control and access to Twilio’s enterprise integration ecosystem, including CCaaS, PBXs, and SBCs.

Speech to Text latency

The speech-to-text component includes two processes that are roughly performed in parallel: transcription and end-of-turn detection.

End-of-turn detection is usually the long pole in the tent because systems are tuned to avoid false positives. When the system misidentifies a natural pause as the end of speech, the AI agent starts speaking and interrupts the user, which feels far worse than a little extra latency. As a result, end-of-turn mechanisms are typically configured with built-in padding.

💡 Aggressive endpoint thresholds can backfire

Pushing end-of-turn detection too aggressively can cause the agent to interrupt users. When in doubt, err on the side of caution and wait a little longer.

Types of End-of-Turn Detection

End-of-turn detection is a rich field of active innovation and there are many strategies to determine when a user has finished speaking. Most of these approaches use some combination of these signals:

- Silence Thresholds: The simplest approach, based on detecting when audio energy drops below a set level for a fixed duration.

- Voice Activity Detection (VAD): Distinguishes between speech and non-speech segments to produce more accurate turn boundaries than simple silence timers.

- Prosodic Features: Cadence, inflection, and other auditory cues are used to determine when the user is finishing speaking.

- Semantic Meaning: The transcript is analyzed for linguistic cues that indicate the user has finished their thought.

For our purposes, we’ll squash this very rich field into two overly simplistic buckets:

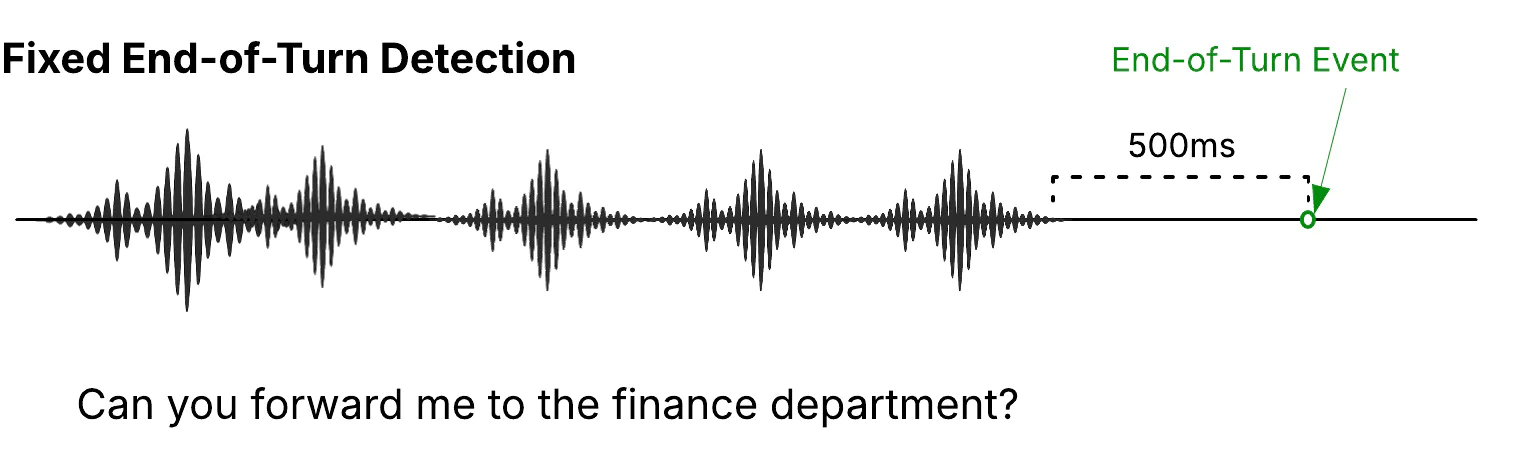

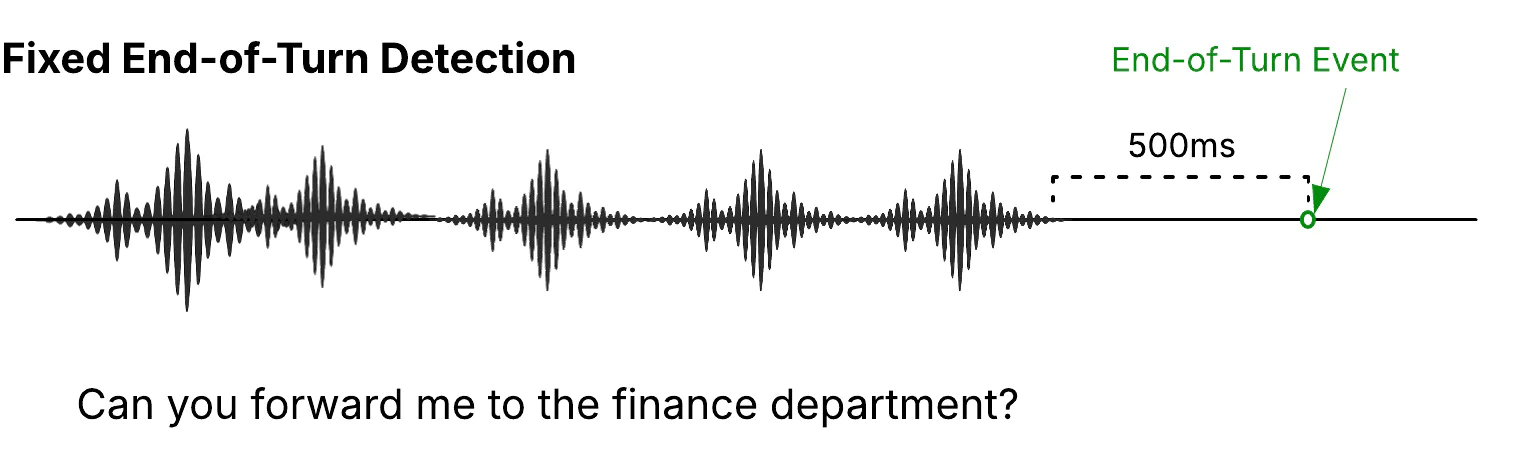

- Fixed End-of-Turn Detection uses silence timers or lightweight VAD.

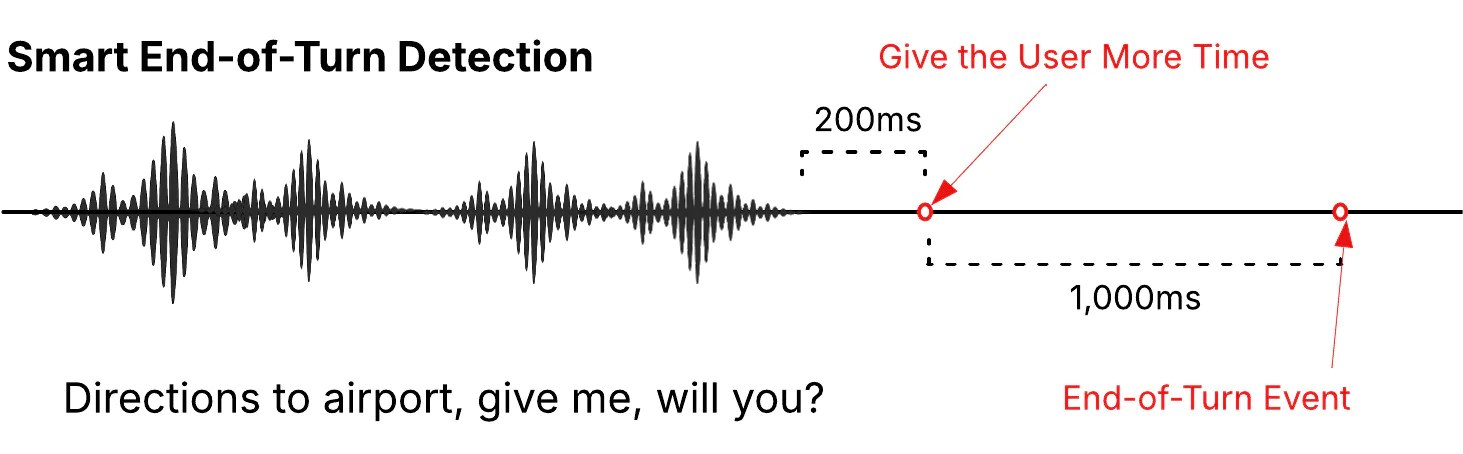

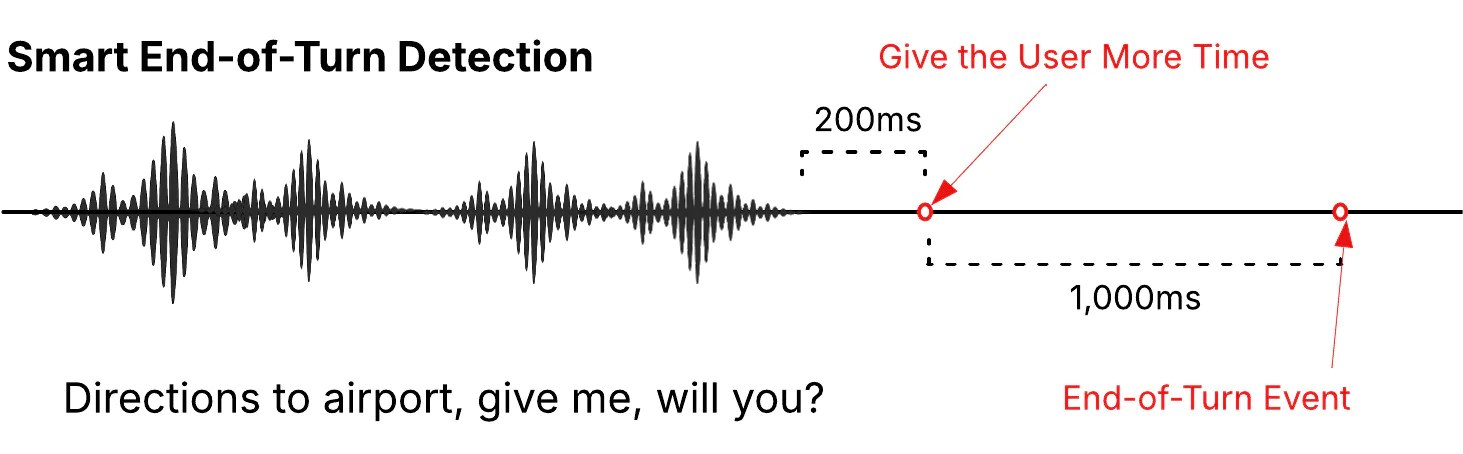

- Smart End-of-Turn Detection applies trained models to advanced VAD, acoustic features, and semantics to infer natural turn boundaries.

In practice, nearly all end-of-turn systems also include a timeout based on raw silence, ensuring a fallback even when higher-order models fail to trigger.
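The fallback can be pictured as a simple decision rule. In the sketch below, a model's end-of-turn score is trusted once a short silence has elapsed, and a longer raw-silence timeout ends the turn regardless of what the model says. The function name, the thresholds, and the idea of a single probability score are all assumptions made for illustration. Real systems vary widely.

```python
def turn_has_ended(
    silence_ms,
    end_of_turn_probability,
    *,
    smart_silence_ms=200.0,       # hypothetical tight threshold
    probability_threshold=0.8,    # hypothetical model confidence cutoff
    fallback_timeout_ms=1500.0,   # hypothetical raw-silence timeout
):
    """Combine a model's end-of-turn score with a raw-silence fallback."""
    if silence_ms >= fallback_timeout_ms:
        # Fallback: enough silence ends the turn even if the model disagrees.
        return True
    return (
        silence_ms >= smart_silence_ms
        and end_of_turn_probability >= probability_threshold
    )


print(turn_has_ended(250, 0.9))   # confident model, short silence
print(turn_has_ended(250, 0.2))   # model thinks the user is mid-thought
print(turn_has_ended(1600, 0.2))  # model never triggers, timeout does
```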

Fixed vs Smart End-of-Turn Detection

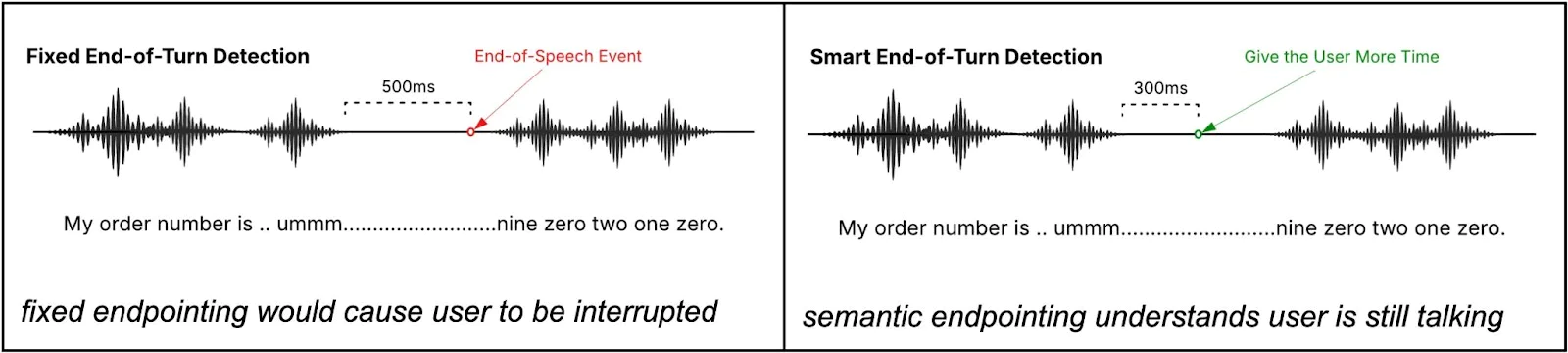

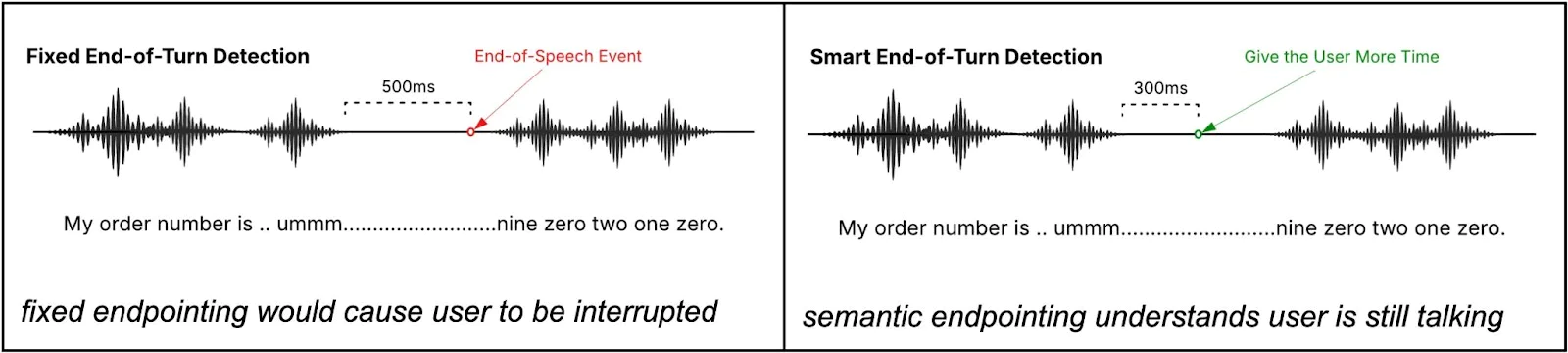

Fixed End-of-Turn Detection is the traditional approach to determine when a user has finished their speaking turn. The system assumes a user is finished speaking after some period of silence. Most defaults are set around 500 ms, and reducing the threshold is risky because natural pauses can be erroneously flagged as the end of a turn.
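A minimal sketch of a fixed silence timer follows. It measures the energy of each audio frame and fires once enough consecutive quiet frames follow speech. The 500 ms threshold comes from the paragraph above. The frame length, the energy floor, and the synthetic audio are assumptions for illustration only.

```python
import math

FRAME_MS = 20                # assumed frame length (160 samples at 8 kHz)
SILENCE_THRESHOLD_MS = 500   # the common default mentioned above
ENERGY_FLOOR = 500.0         # hypothetical RMS level treated as silence


def rms(frame):
    """Root-mean-square energy of one frame of PCM samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def end_of_turn_frame(frames):
    """Return the index of the frame where the silence timer fires, or None."""
    silent_ms = 0
    heard_speech = False
    for i, frame in enumerate(frames):
        if rms(frame) >= ENERGY_FLOOR:
            heard_speech = True
            silent_ms = 0  # any speech resets the timer
        elif heard_speech:
            silent_ms += FRAME_MS
            if silent_ms >= SILENCE_THRESHOLD_MS:
                return i
    return None


speech = [[2000, -2000] * 80] * 10   # 200 ms of loud frames
pause = [[0] * 160] * 15             # 300 ms pause, shorter than the threshold
silence = [[0] * 160] * 30           # 600 ms of trailing silence
print(end_of_turn_frame(speech + pause + speech + silence))
```

The 300 ms pause is ignored because speech resumes before the timer expires. Lowering `SILENCE_THRESHOLD_MS` below 300 would end the turn during that pause, which is exactly the false positive described above.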

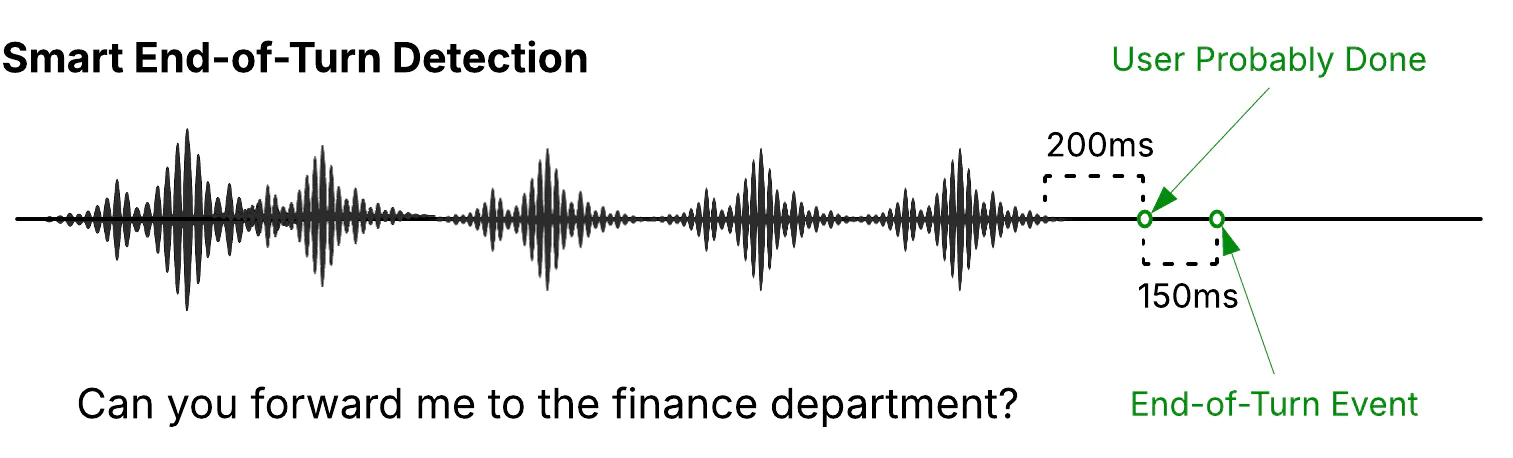

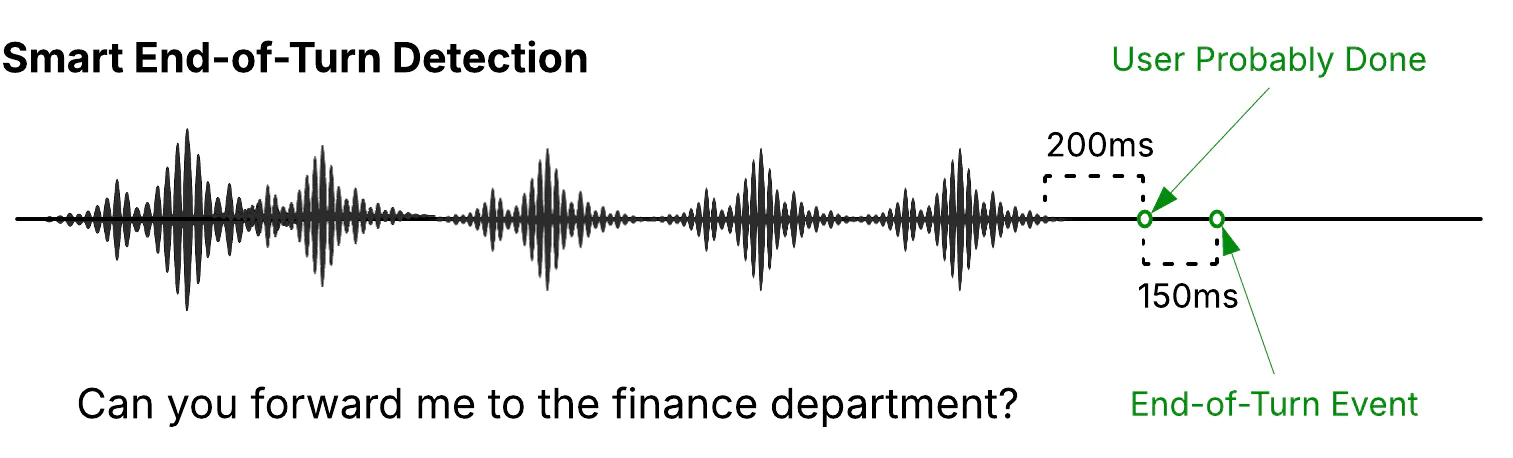

Smart End-of-Turn Detection takes advantage of trained models that look beyond raw silence. By combining these richer signals, smart endpointing can more accurately decide whether a pause is just a hesitation or a genuine end of turn. Because it is smarter, it can usually support tighter timing thresholds than fixed methods, cutting latency while still avoiding false positives.

To understand the advantage of smart endpointing, let’s imagine a scenario where a user pauses for a moment while they try to remember their order number. With fixed endpointing, the user could be interrupted by the agent because their natural pause is longer than the silence threshold. Smart endpointing understands the user is likely pausing based on the context and correctly waits before triggering the agent to start speaking again.

The risk with smart endpoint detection is introducing additional latency when the model believes the user is still speaking but they are actually finished. This can occur due to inaccurate transcripts, call quality degradation, accents, or individuals with divergent speaking patterns.

For instance, users with divergent speech patterns (such as irregular pacing or non-standard grammar) can confuse the model into thinking the user is still speaking when they are finished, which introduces an unnecessary delay.

💡 Smart endpointing can reduce median latency, but watch for tail latency spikes

Smart endpointing reduces median latency, but can also cause abrupt jumps in tail latency. The endpointing model will intentionally delay the agent’s response if it believes the user is still speaking. When this prediction is wrong, the user has already finished, but the agent continues waiting until a silence timeout triggers.

A common sign of suboptimal smart endpointing is a discrete jump in the tail of your latency distribution that is roughly aligned with your endpointing silence timeout.
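One way to look for this signature is to measure, for each turn, the delay between the end of the user's speech and the end-of-turn event, then check how many samples cluster around the silence timeout. The sketch below does this with Python's standard `statistics` module. The sample data, the 1,500 ms timeout, and the window width are made up for illustration.

```python
import statistics


def tail_report(endpoint_delays_ms, silence_timeout_ms, window_ms=100):
    """Summarize p50/p95 and the share of turns that waited for the timeout."""
    cuts = statistics.quantiles(endpoint_delays_ms, n=100, method="inclusive")
    near_timeout = [
        d for d in endpoint_delays_ms
        if abs(d - silence_timeout_ms) <= window_ms
    ]
    return {
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "share_near_timeout": len(near_timeout) / len(endpoint_delays_ms),
    }


# Hypothetical endpointing delays: most turns end quickly, two hit the timeout.
delays = [280, 300, 310, 290, 305, 295, 300, 310, 285, 300,
          1500, 300, 290, 310, 1510, 295, 305, 300, 290, 300]
print(tail_report(delays, silence_timeout_ms=1500))
```

A healthy median combined with a p95 that sits almost exactly on the timeout suggests that the fallback, not the model, is ending a meaningful share of turns.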

Tighter End-of-Turn Detection with graceful aborts

The UX penalty for prematurely flagging the end of a speaking turn can be mitigated. STT happens first, but the system still needs to generate a completion, wait for the first token, send it through TTS, and forward the resulting media to the edge. With a tightly coupled pipeline and a graceful abort process, you buy several hundred milliseconds to catch and correct mistakes.

You can configure endpointing more aggressively if you can gracefully abort the downstream steps. That means you’ll need to:

- Clear outgoing audio buffer immediately

- Abort the LLM stream

- Cancel any open tool requests

- Clean up conversation history
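The four steps above can be sketched with `asyncio` task cancellation. Everything in this example is a hypothetical stand-in: the `Turn` class, the fake LLM stream, and the list used as an audio buffer. A production system would also need to flush audio already sent toward the media edge.

```python
import asyncio


class Turn:
    """Hypothetical per-turn state for a cascaded agent."""

    def __init__(self):
        self.audio_buffer = []   # synthesized audio waiting to be sent
        self.history = []        # conversation history
        self.llm_task = None     # streaming completion task
        self.tool_tasks = []     # in-flight tool requests

    async def abort(self):
        self.audio_buffer.clear()                    # 1. clear outgoing audio
        tasks = [t for t in [self.llm_task, *self.tool_tasks] if t]
        for task in tasks:                           # 2 and 3. stop LLM and tools
            task.cancel()
        await asyncio.gather(*tasks, return_exceptions=True)
        if self.history and self.history[-1]["role"] == "assistant":
            self.history.pop()                       # 4. drop the partial reply


async def fake_llm_stream(turn):
    """Stand-in for a streaming completion feeding TTS."""
    turn.history.append({"role": "assistant", "content": ""})
    for token in ["Sure, ", "your ", "order ", "is ", "..."]:
        await asyncio.sleep(0.05)
        turn.history[-1]["content"] += token
        turn.audio_buffer.append(token)  # stand-in for synthesized audio


async def demo():
    turn = Turn()
    turn.history.append({"role": "user", "content": "My order number is"})
    turn.llm_task = asyncio.create_task(fake_llm_stream(turn))
    await asyncio.sleep(0.12)  # the user keeps talking: the end of turn was premature
    await turn.abort()
    return turn


turn = asyncio.run(demo())
print(turn.audio_buffer, turn.history)
```

The buffer is cleared before the tasks are cancelled so that no stale audio is sent while cancellation propagates.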

💡 Colocate STT and TTS for reliable graceful aborts

Gracefully aborting the response pipeline is complex because STT, TTS, and the audio buffer must operate in lockstep. Latency between these services creates an unavoidable race condition that will trigger a disorienting stutter effect some percentage of the time. The tighter your STT, TTS and audio buffer are, the lower that percentage is.

Managed voice orchestration services, such as ConversationRelay, are designed with this type of problem in mind, giving you a tight integration layer without the burden of stitching it together yourself.

LLMs for Voice Agents

Low latency is a hard requirement for LLMs that power voice agents. In a chat interface, people can wait a few seconds while the model thinks; in fact, latency gives users the assurance that the LLM is working. In a voice conversation, even a short pause can feel unnatural and break the flow.

Selecting a model

Voice agent architectures are fundamentally distinct from text-based chat architectures. Real-time dialogue is typically driven by a lightweight conversational model optimized for latency, emotional intelligence, and speaking style. Deeper or more computationally intensive reasoning is usually handled asynchronously by background systems.

The conversational LLM should be optimized for two things:

- Speed. The core latency introduced by an LLM is controlled by the time-to-first-token (TTFT). Speech synthesis can begin as soon as the first tokens are received – hence, getting to the first token is what matters.

- Instruction Following. Models that follow directions well can be coached to generate prose that translates well to speech and focuses on emotional intelligence. They are also more easily controlled by background models with higher reasoning. For instance, OpenAI’s GPT-4.1 showed noticeable improvements over GPT-4o in this respect (GPT 4.1 model card).

What to look for in a provider and API

Choosing a model is only half the story. The provider and API you pick will shape how well that model performs. The right setup won’t shave hundreds of milliseconds off latency, but the wrong one can add noticeable delays or break the system entirely.

Provider considerations:

- Regional availability. Voice agents need to be deployed close to the edge, which should be dictated by where your users are located. Having the option to process in-region is critical if you want to avoid extra network hops.

- Shared vs. dedicated infrastructure. Some providers offer dedicated capacity, which can help address long-tail latency during peak hours. In practice, isolated hardware makes the biggest difference in audio stacks (STT, TTS), where tail latency can’t be hidden the way it can in LLMs.

API considerations:

- Streaming is non-negotiable. If the LLM API doesn’t support streaming, it’s an immediate disqualifier. No exceptions.

- Beware of managed conversation history. Many APIs offer “helpful” layers that manage conversation history or tool calls. These were mostly designed for chat, where latency isn’t a primary concern. In a voice context, they often introduce delays and rigidities that don’t fit. If you go down this route, benchmark early before committing.

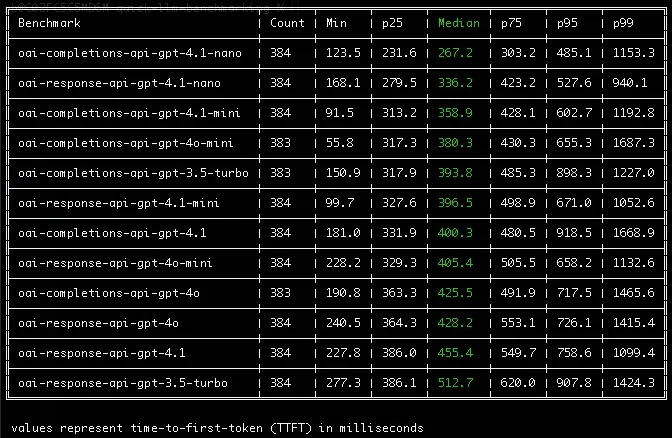

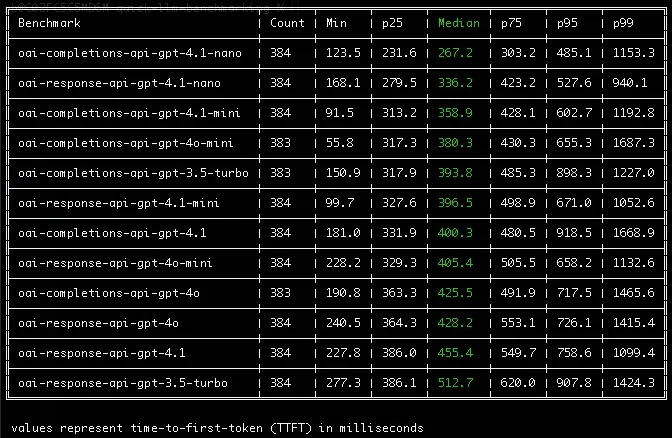

Benchmark early to validate your LLM selection

There are many public sources for LLM benchmarking, such as the Artificial Analysis Leaderboard. While those can be useful for broad comparisons, it is essential to benchmark the LLM stack you plan to use in production. Test the exact model, running on the inference provider you intend to use, through the specific API you will call.

And benchmark early, before design decisions start to harden. Many teams design their agent around a model or reasoning pipeline before validating its latency profile, only to discover late in the process that it can’t meet conversational timing requirements.

The primary metric you’re looking for is time-to-first-token (TTFT): the time it takes to generate the first word of a response. As soon as the first token arrives, the orchestration layer can begin streaming output to TTS, so the LLM’s contribution to total latency is determined entirely by this number.
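As a rough illustration of what a TTFT measurement involves, independent of any particular provider SDK, the sketch below times how long a streaming call takes to yield its first token. `fake_llm_stream` is a hypothetical stand-in for a real streaming request, and `measure_ttft` is an illustrative helper rather than part of any library.

```python
import time


def measure_ttft(start_stream):
    """Return (seconds until the first token, the first token).

    `start_stream` is a callable that issues the request and returns an
    iterator of tokens, so the request itself falls inside the timed region.
    """
    start = time.perf_counter()
    stream = start_stream()
    first = next(iter(stream))
    return time.perf_counter() - start, first


def fake_llm_stream(first_token_delay_s=0.05):
    """Hypothetical stand-in for a provider's streaming response."""
    time.sleep(first_token_delay_s)  # simulated network, queueing, and prefill
    yield "Hello"
    for token in [",", " how", " can", " I", " help", "?"]:
        time.sleep(0.01)
        yield token


ttft_s, token = measure_ttft(fake_llm_stream)
print(f"TTFT: {ttft_s * 1000:.0f} ms, first token: {token!r}")
```

A single measurement says little. Repeat it many times, at different times of day, and look at the distribution rather than one number.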

You can ignore latency spikes from tool requests at this stage. That is a derivative of baseline latency and can be covered up with techniques like interstitial fillers. To validate your LLM selection, you simply need to measure the TTFT for a straightforward response.

For your convenience, we’ve published a lightweight benchmarking repository (quick-llm-benchmarking) to help you run quick, early tests and confirm that an LLM stack performs within the right latency range.

With our library, you write a function that calls your LLM service, like so:

The resulting benchmarks will include the LLM model and all of the overhead introduced by the provider and the API. The ping latency to the provider’s endpoint is extracted to eliminate the noise of your local network.

Here is an example output from a quick benchmark run of several OpenAI models, hosted by OpenAI and using the Chat Completions and Responses APIs.

Benchmark performed on September 17th, 2025 from 6:30pm to 8:30pm Pacific Daylight Time

The art and science of Text-to-Speech latency

Latency is critical to voice agents because silence functions as a paralinguistic signal. People attribute meaning to the sound of silence, and the turn-taking rhythm shapes how words are interpreted.

Text-to-speech controls nearly all of the other paralinguistic elements, such as prosody, enunciation and non-lexical sounds. Those characteristics affect the user experience in the same way latency does, perhaps more profoundly in some cases. It’s easy to look at latency as a number – which is what we’re doing here! – but keep in mind, a faster system can be subjectively worse and feel slower if the voice is less expressive.

Types of voice models

From the 1990s to the early 2010s, concatenative and parametric synthesis set the standard for TTS. Those methods have since been surpassed by neural and, more recently, generative voice models.

Neural Voices are intonated, but they are static. The same input always yields the same output. They work in a two-stage process. First, the text to be spoken is passed through a neural network to produce a mel-spectrogram, a 2D map showing how frequency and amplitude change over time. Then, a second neural network, the vocoder, converts that spectrogram into a raw audio waveform.

In general, neural voices are known for:

- Low and predictable latency.

- Deterministic speech: the same input always yields the same output.

- Limited configurability, with only coarse controls like pitch and rate.

Some examples of neural voices include: Amazon Polly, Google Neural2.

Generative Voices are expressive and highly dynamic, theoretically capable of changing every aspect of the voice to serve the situational context. They use large generative architectures like transformers, diffusion, etc.

In general, generative voices are known for:

- Higher inherent processing time, but perceived latency is often lower.

- Non-deterministic speech: the same input can be spoken differently each time.

- Capable of non-lexical sounds, including breaths, hesitations, and fillers.

- Customizable voices, offering control over accent, style, and even speaker cloning.

Some examples of generative voices include: Amazon Generative, ElevenLabs.

Practical guidelines for Text-to-Speech latency

💡 Colocate TTS with the orchestration server and media edge

The closer your TTS service runs to the media edge, the less network overhead you pay. And since your media edge should be controlled by where your customers are, a TTS provider who has the capability to deploy their service globally allows your voice agent to be performant globally.

💡 Perceived latency is what ultimately matters

Expressive voices feel faster than static voices. They are also better at conveying meaning and delivering an emotionally engaging experience that maintains the user’s attention span. Latency only matters in service of those outcomes. So don’t chase the lowest number. Pick the model that feels right, even if it costs a few extra milliseconds.

💡 Ensure your system supports many voices

Your system will likely need to support more than one voice. If you serve multiple languages or dialects, then you’ll need multiple voices – at least until generative voices fulfill all their promises. Changing a TTS provider can be complex. It requires careful evaluation, colocation, and (often) commercial commitments. You could end up locked into a suboptimal experience if your TTS component doesn’t provide variety.

💡 Reevaluate voice selection toward the end of implementation

Voices often sound different when used in different contexts. A voice may meet your expectations during a proof of concept, but after you implement your use case, tools, interstitial fillers, and other features, the experience might be better served by a different voice. You’ll be surprised how many issues go away by simply changing a voice.

Conclusion

This post was a plain-English tour of core voice agent latency: what it is, where it comes from, and why it shapes every design choice. We kept the scope tight to the fundamentals and practical starting points. We have more voice agent topics on the way, so keep an eye on the blog.

In the meantime, reach out to your account team. Twilio account executives and solution architects help product and engineering teams with these challenges every day. Whether you need a high performance global voice network, ConversationRelay for managed voice agent orchestration with low latency out of the box, or another primitive for AI voice agents, we can help.

That is it for now. We can’t wait to hear what you build.

Phil Bredeson is a Solutions Architect at Twilio, where he helps customers design high-performance, low-latency communications systems. He leads Twilio’s AI Majors, a subgroup of the solutions organization, and specializes in real-time voice AI agents. He can be reached at pbredeson (at) twilio (dot) com.

¹ Based on internal benchmarks with ConversationRelay (p50 491 ms, p95 713 ms) using different models. Results may vary.
