How Positive was Your Year with TensorFlow.js and Twilio

As 2019 (and the decade) comes to an end, it's interesting to reflect on the time spent. What do our text messages say about how positive or negative our time was? This post uses TensorFlow.js to analyze the sentiment of your Twilio text messages for the year.

Prerequisites

- A Twilio account - sign up for a free one here

- A Twilio phone number with SMS capabilities - configure one here

- Node.js installed - download it here

How does TensorFlow.js help with sentiment analysis?

TensorFlow makes it easier to perform machine learning (you can read 10 things you need to know before getting started with it here). For this post, we will use one of its pre-trained models and training data. Let's go over some high-level definitions:

- Convolutional Neural Network (CNN): a neural network often used to classify images and video that takes input and returns output of a fixed size. Exhibits translational invariance, that is, a cat is a cat regardless of where in an image it is.

- Recurrent Neural Network (RNN): a neural network best-suited for text and speech analysis that can work with sequential input and output of arbitrary sizes.

- Long Short-Term Memory networks (LSTM): a special type of RNN often used in practice due to its ability to learn to both remember and forget important details.

TensorFlow.js provides a pre-trained model trained on a set of 25,000 movie reviews from IMDB, given either a positive or negative sentiment label, and two model architectures to use: CNN or LSTM. This post will be using the CNN.

What do your Twilio texts say about you?

To see what the messages sent to or from your Twilio account say about you, you could view previous messages in your SMS logs, but let's do it with code.

Setting up

Create a new directory to work in, called sentiment, and open your terminal in that directory. Create a new Node.js project there (for example, by running npm init --yes), then install the dependencies we will use: TensorFlow.js, node-fetch (to fetch metadata from the TensorFlow.js sentiment convolutional neural network), and Twilio. On npm these are typically the @tensorflow/tfjs, node-fetch, and twilio packages.

Make a file called sentiment.js and require the Node.js modules at the top. A JavaScript function setup() will loop through text messages sent from a personal phone number to our Twilio client (make sure to get your Account SID and Auth Token from the Twilio console). We set the dates so that we retrieve all messages sent in 2019, but you can play around with them to reflect a time period of your choosing. setup() then returns an array of text messages.
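As a rough sketch of what setup() could look like, the function below takes the Twilio client as a parameter so it can be tried without credentials. The phone-number parameters and the exact date boundaries are assumptions for illustration; the from, to, dateSentAfter, and dateSentBefore filters are the ones the Twilio Node helper library accepts when listing messages.

```javascript
// Sketch of setup(): collect the bodies of messages sent during 2019.
// `client` is a Twilio REST client, for example:
//   const client = require('twilio')(accountSid, authToken);
// `fromNumber` and `toNumber` are placeholder phone numbers in E.164 format.
async function setup(client, fromNumber, toNumber) {
  const messages = await client.messages.list({
    from: fromNumber,
    to: toNumber,
    dateSentAfter: new Date(Date.UTC(2019, 0, 1)),
    dateSentBefore: new Date(Date.UTC(2019, 11, 31, 23, 59, 59)),
  });
  // Keep only the text of each message.
  return messages.map((message) => message.body);
}
```

Passing the client in (rather than creating it inside the function) is a design choice made here so the function is easy to test; the original post may well construct the client at the top of the file instead.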

If you have a lot of duplicate messages, you could analyze the sentiment of each unique message by returning Array.from(new Set(messages.map(m => m.body)));.

Prepare, clean, and vectorize data

Next, we want to fetch some metadata, which provides both the shape and type of the model but can generally be viewed as a training configuration that does some heavy lifting for us. This is where we'll use node-fetch to get the metadata hosted at a remote URL, so we can prepare our input the way the pre-trained model expects.
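Here is a minimal sketch of that metadata fetch. The URL is an assumption: it is where the TensorFlow.js sentiment example hosts the CNN metadata at the time of writing. The fetch function is passed in as a parameter (a choice made for this sketch) so any fetch-compatible implementation, such as node-fetch, can be used.

```javascript
// Assumed location of the hosted metadata for the pre-trained sentiment CNN.
const METADATA_URL =
  'https://storage.googleapis.com/tfjs-models/tfjs/sentiment_cnn_v1/metadata.json';

// `fetchFn` is any fetch-compatible function, for example:
//   const fetch = require('node-fetch');
async function getMetaData(fetchFn, url = METADATA_URL) {
  const response = await fetchFn(url);
  if (!response.ok) {
    throw new Error(`Metadata request failed with status ${response.status}`);
  }
  // The parsed JSON is the metadata object used by the later steps.
  return response.json();
}
```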

Soon we will convert words to sequences of word indices based on the metadata, but first we need a way to make those sequences equal in length. Turning strings of words into integers like this is called vectorizing. Sequences longer than the size of the last dimension of the returned tensor (metadata.max_len) are truncated, and sequences shorter than it are padded at the start of the sequence. This function is credited to the TensorFlow.js sentiment example.
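The padding and truncation described above can be sketched as follows. The name padSequences and the pad value of 0 follow the TensorFlow.js sentiment example, but this version is rewritten for illustration (it returns copies instead of modifying its input), so treat it as an approximation of the credited function.

```javascript
const PAD_INDEX = 0; // index used to fill short sequences in this sketch

// Make every sequence exactly `maxLen` long.
// 'pre' means the start of the sequence is padded or truncated; 'post' means the end.
function padSequences(sequences, maxLen, padding = 'pre', truncating = 'pre', value = PAD_INDEX) {
  return sequences.map((sequence) => {
    let result = sequence.slice();
    if (result.length > maxLen) {
      result = truncating === 'pre'
        ? result.slice(result.length - maxLen) // keep the last maxLen items
        : result.slice(0, maxLen);             // keep the first maxLen items
    }
    if (result.length < maxLen) {
      const pad = new Array(maxLen - result.length).fill(value);
      result = padding === 'pre' ? pad.concat(result) : result.concat(pad);
    }
    return result;
  });
}

// padSequences([[1, 2, 3]], 5) returns [[0, 0, 1, 2, 3]]
```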

We need to load our model before we can predict the sentiment of a text message. This is done in a function similar to the one that loaded our metadata:

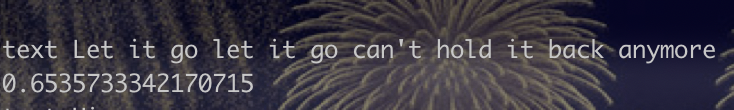
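In case the image is hard to read, the loading step might look roughly like the sketch below. The model URL is an assumption (it is where the TensorFlow.js sentiment example hosts its CNN at the time of writing), and passing the TensorFlow.js module in as a parameter is a choice made only for this sketch.

```javascript
// Assumed location of the hosted pre-trained sentiment CNN.
const MODEL_URL =
  'https://storage.googleapis.com/tfjs-models/tfjs/sentiment_cnn_v1/model.json';

// `tf` is the TensorFlow.js module, for example:
//   const tf = require('@tensorflow/tfjs');
async function loadModel(tf, url = MODEL_URL) {
  // loadLayersModel() resolves to a model whose predict() we can call later.
  return tf.loadLayersModel(url);
}
```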

Then the function that predicts how positive a text message is accepts three parameters: one text message, the model loaded from a remote URL, and the metadata. In predict, the input text is first tokenized and trimmed with regular expressions to convert it to lower-case and remove punctuation.

Next, those trimmed words are converted to a sequence of word indices based on the metadata. Let's say a word is in the testing input but not in the training data or recognition vocabulary. This is called out-of-vocabulary, or OOV. With this conversion, even if a word is OOV, like a misspelling or an emoji, it can still be embedded as a vector, or array of numbers, which is what the machine learning model needs as input.
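The tokenizing and indexing steps can be sketched like this. The field names word_index, index_from, and vocabulary_size are assumed to match the metadata used by the TensorFlow.js sentiment example, and the OOV index of 2 and the exact punctuation stripped are likewise assumptions for illustration.

```javascript
const OOV_INDEX = 2; // assumed index that stands in for out-of-vocabulary words

// Convert raw text into an array of word indices.
function textToSequence(text, metadata) {
  const words = text
    .trim()
    .toLowerCase()
    .replace(/[.,!?]/g, '') // strip basic punctuation
    .split(/\s+/)
    .filter(Boolean);       // drop empty strings (for example, from empty input)

  return words.map((word) => {
    const known = Object.prototype.hasOwnProperty.call(metadata.word_index, word);
    if (!known) return OOV_INDEX;
    const index = metadata.word_index[word] + metadata.index_from;
    // Words ranked beyond the vocabulary size are also treated as OOV.
    return index > metadata.vocabulary_size ? OOV_INDEX : index;
  });
}

// With word_index { great: 5, movie: 7 } and index_from 3,
// textToSequence('Great movie, wow!', metadata) returns [8, 10, 2].
```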

Finally, the model predicts how positive the text is. We create a TensorFlow.js tensor from our sequence of word indices. Once the output data has been retrieved (downloaded from the GPU to the CPU with the synchronous dataSync() function), we need to explicitly manage memory and free the tensor's memory with dispose() before returning a decimal showing how positive the model thinks the text is.
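To make the memory handling concrete, here is a sketch of just this last step. The helper name scoreSequence and its parameters are hypothetical; it assumes the sequence has already been padded to maxLen and that the model outputs a single score per input.

```javascript
// `tf` is the TensorFlow.js module and `model` is the loaded layers model.
// `paddedSequence` must contain exactly `maxLen` word indices.
function scoreSequence(tf, model, paddedSequence, maxLen) {
  // Shape [1, maxLen]: a batch containing one sequence.
  const input = tf.tensor2d(paddedSequence, [1, maxLen]);
  const output = model.predict(input);

  // dataSync() blocks until the values are available as a typed array.
  const score = output.dataSync()[0];

  // Tensors are not garbage collected automatically, so free them explicitly.
  input.dispose();
  output.dispose();
  return score;
}
```

Disposing the input tensor as well as the output is a choice made in this sketch; the text above only requires that tensor memory is released before the score is returned.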

Here's the complete code for predict:

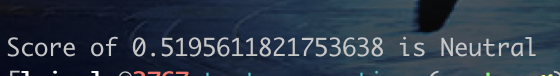

We could sure use a helper function that compares each positivity score and determines whether that makes the text message positive, negative, or neutral.
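One possible version of that helper is below. The cut-off values 0.66 and 0.4 are assumptions chosen for illustration, so adjust them if your results feel too generous or too harsh.

```javascript
// Map a positivity score between 0 and 1 to a label.
// The default thresholds are illustrative assumptions, not values from the model.
function getSentiment(score, positiveAbove = 0.66, neutralAbove = 0.4) {
  if (score > positiveAbove) return 'positive';
  if (score > neutralAbove) return 'neutral';
  return 'negative';
}

// getSentiment(0.9) returns 'positive'
```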

This helper function will be called in run(), which calls most of our functions. In run(), we first load our pre-trained model from a remote URL with the TensorFlow.js-specific function loadLayersModel(), which accepts a model.json file as its argument. (The equivalent in Keras, a high-level open source neural networks Python library that can run on top of TensorFlow and other machine learning tools, is load_model().) If you have an HDF5 file (which is how models are saved in Keras), you can convert it to a model.json using the TensorFlow.js pip package.

For each text, the model makes a prediction and adds it to a running sum of decimals before finally calling getSentiment() on the average of the predictions for each text message.
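The running sum and average can be sketched on their own, independent of the model. The helper name averagePositivity is hypothetical, and scoreText stands in for whatever function returns a positivity score for one message.

```javascript
// Average the positivity scores of all messages.
// `scoreText` is any function that maps one text to a number between 0 and 1.
function averagePositivity(texts, scoreText) {
  if (texts.length === 0) {
    throw new Error('No messages to analyze');
  }
  let sum = 0;
  for (const text of texts) {
    sum += scoreText(text);
  }
  return sum / texts.length;
}
```

In run(), the result of this average is what gets passed to getSentiment(). Throwing on an empty list is a choice made here to avoid dividing by zero when no messages match the date range.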

Don't forget to call run()!

Test your app

On the command line, run node sentiment.js. You should see whether your texts for the year are positive, negative, or neutral.

Was your year positive? What about your decade?

What's Next?

In this post, you saw how to retrieve old text messages from the Twilio API, clean input with regular expressions, and perform sentiment analysis on texts with TensorFlow in JavaScript. You can also change the dates you retrieve text messages from or change the phone number (maybe your Twilio number sent more positive messages than your personal one sent to a Twilio number!).

For other projects, you can apply sentiment analysis to other forms of input like text files of stories (the first Harry Potter story is a text file on GitHub here, you're welcome!), real-time chat (maybe with Twilio), email, social media posts like tweets, GitHub commit messages, and more!

If you have any questions or are working with TensorFlow and communications, I'd love to chat with you!

- Twitter: @lizziepika

- GitHub: elizabethsiegle

- Email: lsiegle@twilio.com
