How to Build a Smart Inventory Chatbot on WhatsApp with LangChain, LangGraph, OpenAI, and Flask


In an earlier post, we saw how to build an inventory chatbot that used keyword matching to find products. We didn’t integrate AI into it or make it smart enough to understand what the user wants.

In this post, you’re going to learn how to build a smart solution so that the chatbot / AI agent can understand the context and take actions accordingly.

This WhatsApp-based AI agent should be able to retrieve product details, tell whether a product is in or out of stock, list the available products, and place an order for the desired product.

In this tutorial, you will build this service using Flask, Twilio's WhatsApp messaging API, Pyngrok, LangChain, LangGraph, and SQLAlchemy.

You'll use Twilio's API to access the WhatsApp messaging product so that clients can send messages via WhatsApp and start the chatbot. The chatbot is built on a Flask backend. The backend is exposed through Pyngrok, a Python wrapper for ngrok that puts the Flask localhost on the internet. The chatbot fetches data from the PostgreSQL database and updates inventory data in the database using the SQLAlchemy ORM.

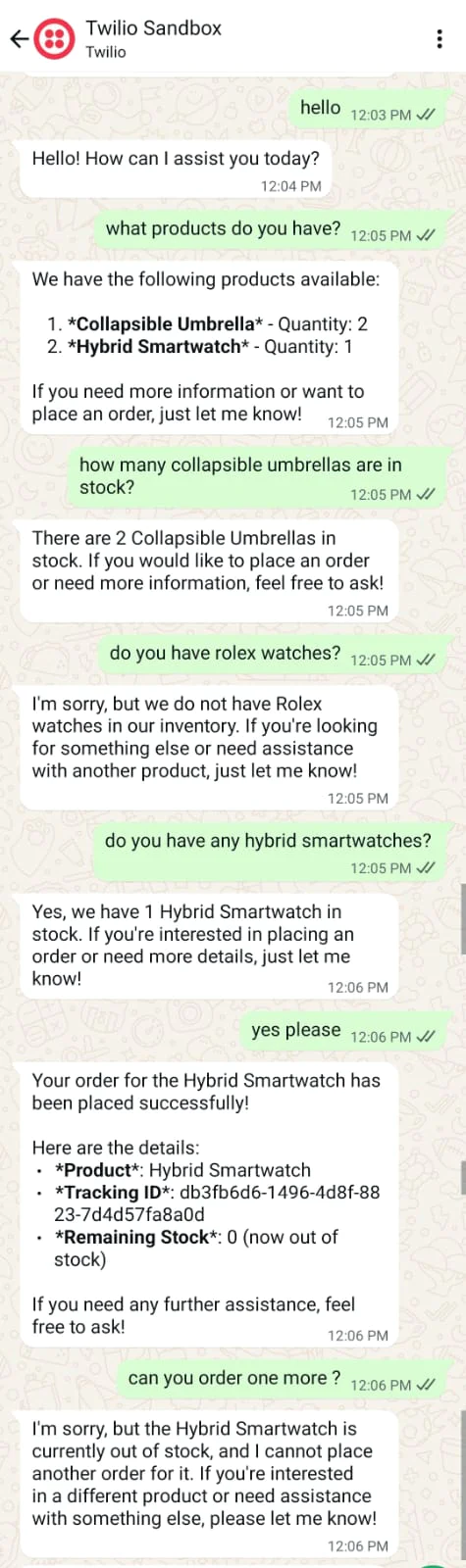

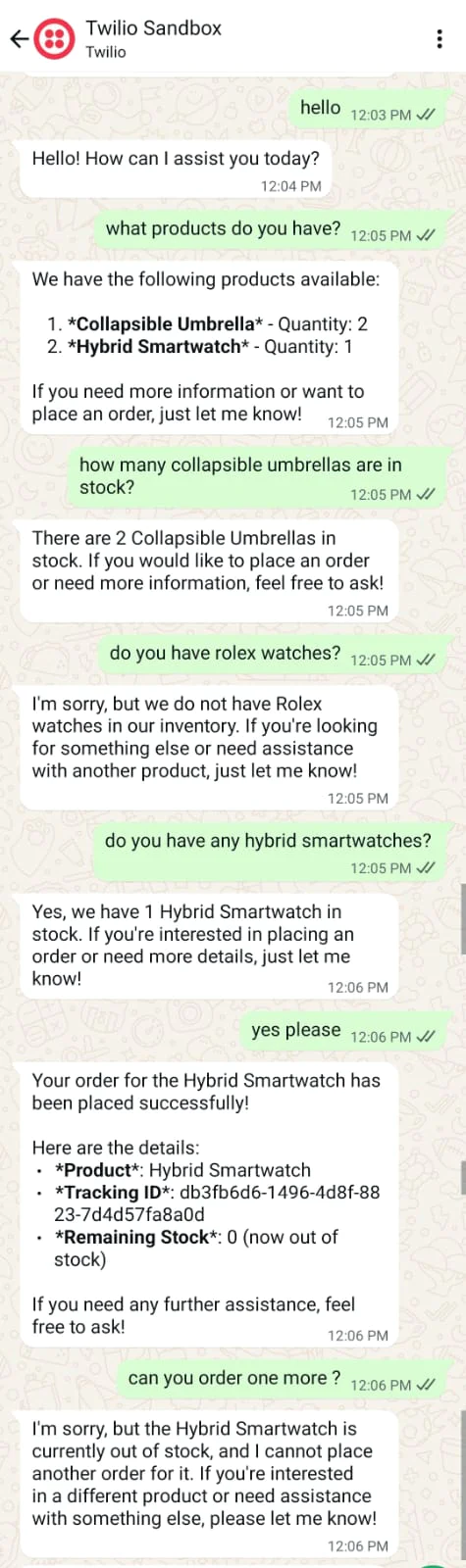

At the end of this tutorial, you'll be able to create a chatbot like so:

The app is straightforward. You have a product in your inventory. When the client orders that product, the chatbot either places the order for them or replies that the product is not available. The chatbot knows which products you have in the inventory and which you don’t.

Prerequisites

To follow along with this tutorial, you need to have the following:

- A recent Python 3 release installed (current LangChain and LangGraph releases require at least Python 3.9)

- PostgreSQL installed

- A Twilio account set up. If you're new, create a free account here.

- A smartphone with WhatsApp installed

- A basic knowledge of Flask

- A basic knowledge of what ORM is. If you have no idea what ORM is, consult this wiki page!

- A basic knowledge of AI agents

- A basic knowledge of LangChain

Creating your Smart Inventory

Start with setting up your database. Say, you have two products in your inventory:

- 2 collapsible umbrellas

- 1 hybrid smartwatch

To be able to create these two records in the database, you should define a schema and then create a table based on that schema.

Initiating the Python Project and the PostgreSQL Database

Download and set up PostgreSQL if you haven’t already. Create a new database; for this project, we'll call it mydb.

Create a new directory called smart_inventory_chatbot and initiate a virtual environment inside:

For now, you only need to install two libraries, SQLAlchemy and psycopg2-binary:

The psycopg2-binary library is a binary version of psycopg2, which is a Python driver for the PostgreSQL database.

Setting up the SQLAlchemy Model

For this project, you will use the SQLAlchemy ORM to access a PostgreSQL database engine and interact with it. Create a new file called models.py. Model the data and create the schema in the models.py file:

To use the environment variables, create a .env file and fill it like so, replacing the placeholder texts with your database credentials:

As you can see, the url object defines the database URL with the following options:

- The drivername as the database engine

- The username and password of your database

- The host, which in this example is localhost if you’re running it locally

- The database, which is the name of the database you need to access; in this tutorial let’s name it mydb

- The port, which you can set to 5432, the default port for PostgreSQL

You can choose your own specifications depending on your case.

You then define the engine instance which is the starting point of your application. That instance is passed into the sessionmaker function to create a Session class which is instantiated to create a session object.

The session object manages the persistence layer and does the ORM operations.

The Product class inherits from the Base class, which maps Python classes to database tables. The Product class defines the name of its table and the table's columns as attributes.

Similarly, if you want to define another table, you can create a class that inherits from the base class and declare your attributes.

The Base.metadata.create_all() function creates all the tables defined in the mapped classes.
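The pieces described above can be sketched in one file. This is a minimal sketch rather than the original listing: the environment variable names (DB_USER, DB_PASSWORD, DB_HOST, DB_NAME, DB_PORT), their fallback values, the column names (id, name, amount), and the init_db helper are all assumptions made for illustration. In the real project, python-dotenv's load_dotenv() would load the .env values before they are read.

```python
import os

from sqlalchemy import Column, Integer, String, create_engine
from sqlalchemy.engine import URL
from sqlalchemy.orm import declarative_base, sessionmaker

# Build the database URL from environment variables (names are assumptions).
url = URL.create(
    drivername="postgresql",
    username=os.getenv("DB_USER", "newuser"),
    password=os.getenv("DB_PASSWORD", "change-me"),
    host=os.getenv("DB_HOST", "localhost"),
    database=os.getenv("DB_NAME", "mydb"),
    port=int(os.getenv("DB_PORT", "5432")),
)

Base = declarative_base()


class Product(Base):
    """One row per product; the column names are assumed for this sketch."""

    __tablename__ = "products"

    id = Column(Integer, primary_key=True)
    name = Column(String, unique=True, nullable=False)
    amount = Column(Integer, nullable=False, default=0)


def init_db(db_url=url):
    """Create the engine, create all mapped tables, and return a Session class."""
    engine = create_engine(db_url)
    Base.metadata.create_all(engine)
    return sessionmaker(bind=engine)
```

Calling init_db() with no arguments targets PostgreSQL and needs psycopg2-binary installed. Passing a different URL, such as "sqlite:///:memory:", is a convenient way to try the model without a running server.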

Inserting Data in SQLAlchemy

Now, insert records into the products table. To do that, create a file called insert_db.py and add the following code to the file:

The umbrella and smartwatch objects are products in the database. There are two umbrellas in the inventory and one smartwatch.

In the code above, you add them to the session with the session.add_all() method and then commit them to the PostgreSQL database with session.commit().
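For reference, the add_all() and commit() pattern looks roughly like this. The sketch is self-contained, so it redefines a Product model with assumed column names and uses an in-memory SQLite database as a stand-in for PostgreSQL; in the project you would import the session and the model from models.py instead.

```python
from sqlalchemy import Column, Integer, String, create_engine
from sqlalchemy.orm import declarative_base, sessionmaker

Base = declarative_base()


class Product(Base):
    __tablename__ = "products"

    id = Column(Integer, primary_key=True)
    name = Column(String, nullable=False)
    amount = Column(Integer, nullable=False)


# In-memory SQLite stands in for the PostgreSQL engine (assumption for the sketch).
engine = create_engine("sqlite:///:memory:")
Base.metadata.create_all(engine)
session = sessionmaker(bind=engine)()

umbrella = Product(name="collapsible umbrella", amount=2)
smartwatch = Product(name="hybrid smartwatch", amount=1)

# Stage both objects, then write them to the database in one transaction.
session.add_all([umbrella, smartwatch])
session.commit()

rows = [(p.name, p.amount) for p in session.query(Product).order_by(Product.id)]
print(rows)
```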

To run this script, make sure you have the Python virtual environment activated, then run the script with this command:

To check if everything worked correctly, you can access your PostgreSQL database through a SQL client or a PostgreSQL client like psql.

Alternatively, you can of course use any database administration tool like pgAdmin, or a multi-platform database tool like DBeaver.

Here is how you can use psql to explore the data in your database.

Running psql -U newuser -d mydb lets you use the psql client to connect to the database named mydb as the user newuser. You can replace newuser with your own username.

Take a look at the psql documentation to learn more about the commands you can run.

Once you are logged in and connected to your database, you can run the following query to list the products in your inventory:

Below is what the psql log statements might look like if you're running them on a Linux machine:

As you can see, both records are now created. Once you are finished, you can enter \q and then press the Enter key to quit psql.

Creating the Chatbot

The database is now set up and the tiny inventory is already there. Now you need to track the orders for this simple data. Let's configure the Twilio WhatsApp API first.

Configuring the Twilio Sandbox for WhatsApp

Assuming you've already set up a new Twilio account, go to the Twilio Console and choose the Messaging tab on the left panel. Under Try it out, choose Send a WhatsApp message. You'll be redirected to the Sandbox tab by default, and you’ll see a phone number "+14155238886" with a code to join next to it on the left side of the page. You’ll also see a QR code to scan with your phone on the right side of that page.

To enable the Twilio testing environment, send a WhatsApp message with this code's text to the displayed phone number. You can click on the hyperlink to direct you to the WhatsApp chat if you are using the web version. Otherwise, you can scan the QR code with your phone.

Now, the Twilio sandbox is set up, and it's configured so that you can try out your application after setting up the backend.

Setting up the Flask Backend

To set up Flask, navigate to the project directory and create a new file called main.py. Inside that file, add this very minimal Flask backend:
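A minimal sketch of such a backend, assuming an index route that returns a small JSON status payload (the exact payload text is an assumption):

```python
from flask import Flask, jsonify

app = Flask(__name__)


@app.route("/")
def index():
    # A simple health check so you can confirm the server is reachable.
    return jsonify({"msg": "up & running"})
```

One way to serve this on port 8000 is app.run(port=8000) at the bottom of main.py; on recent Flask releases, running flask --app main run --port 8000 from the project directory should work as well.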

To run this backend, you need to install flask:

To run the app:

Open your browser to localhost:8000. The result you should see is the success JSON response:

To connect Twilio with your backend, you need to host your app on a public server. An easy way to do that is to use Ngrok.

If you’re new to Ngrok, you can consult this blog post and create a new account.

To use it, open a new terminal window, activate the same virtual environment you created earlier, and then install pyngrok, which is a Python wrapper for Ngrok:

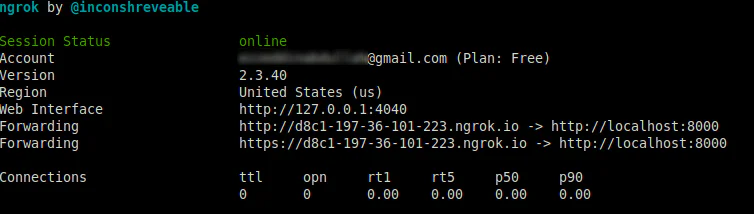

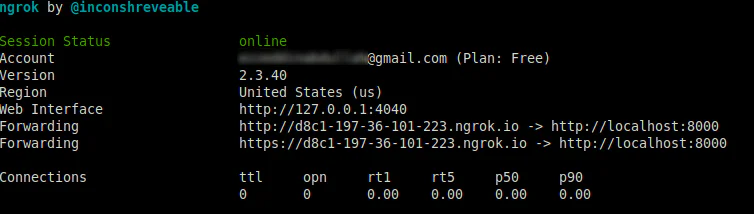

Leave the Flask app running on port 8000, and run this ngrok command:

This will create a tunnel between your localhost port 8000 and a public domain created on the ngrok.io site. When a client visits your Ngrok forwarding URL, the Ngrok service will automatically forward that request to your backend.

Go to the URL (preferably, with the https prefix) as shown below:

After you click on the forwarding URL, Ngrok will redirect you to your Flask app’s index endpoint.

Configuring the Twilio Webhook

To be able to receive a reply when you message the Twilio WhatsApp sandbox number, you need to configure a webhook known to Twilio.

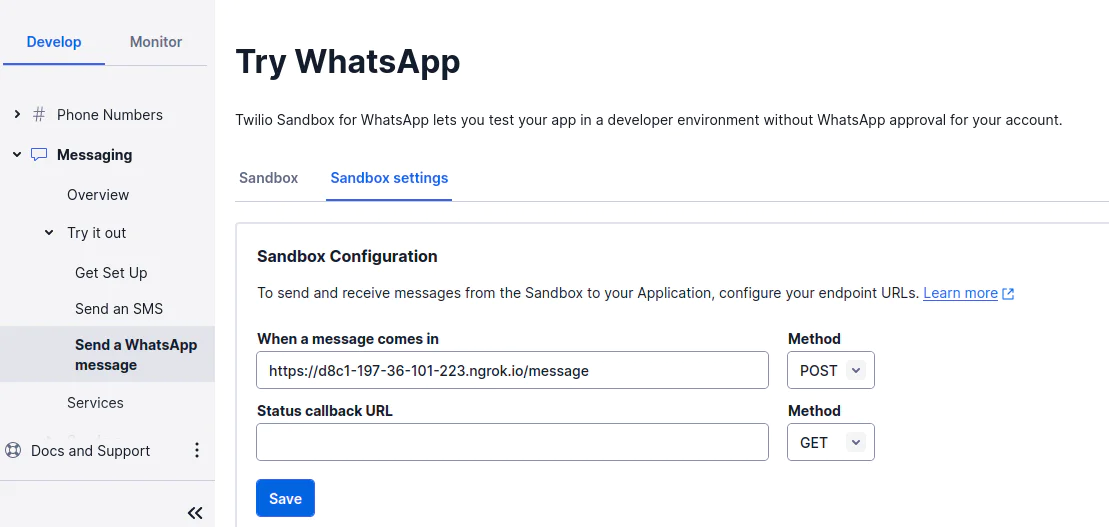

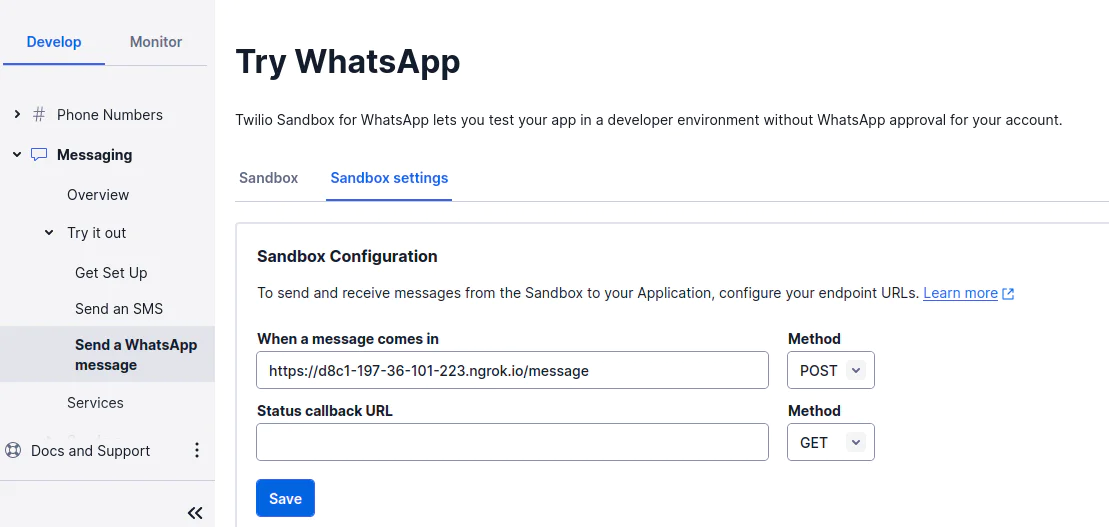

To do that, head over to the Twilio Console and choose the Messaging tab on the left panel. Under the Try it out tab, click on Send a WhatsApp message. Next to the Sandbox tab, choose the Sandbox settings tab.

Copy the ngrok.io forwarding URL and append /message. Paste it into the box next to WHEN A MESSAGE COMES IN:

The full URL will be something like: https://d8c1-197-36-101-223.ngrok.io/message.

Note: The /message is the endpoint we will set up in the Flask application. This endpoint will have the chatbot logic.

Once you finish, click the Save button.

Authenticating your Twilio Account

Earlier, you created the index route to just test that your backend is working with Ngrok. From this point onwards, you won’t need it anymore.

Before setting up the /message endpoint, authenticate your Twilio account to be able to use the Twilio client. Open the main.py file and add the following highlighted lines of code:

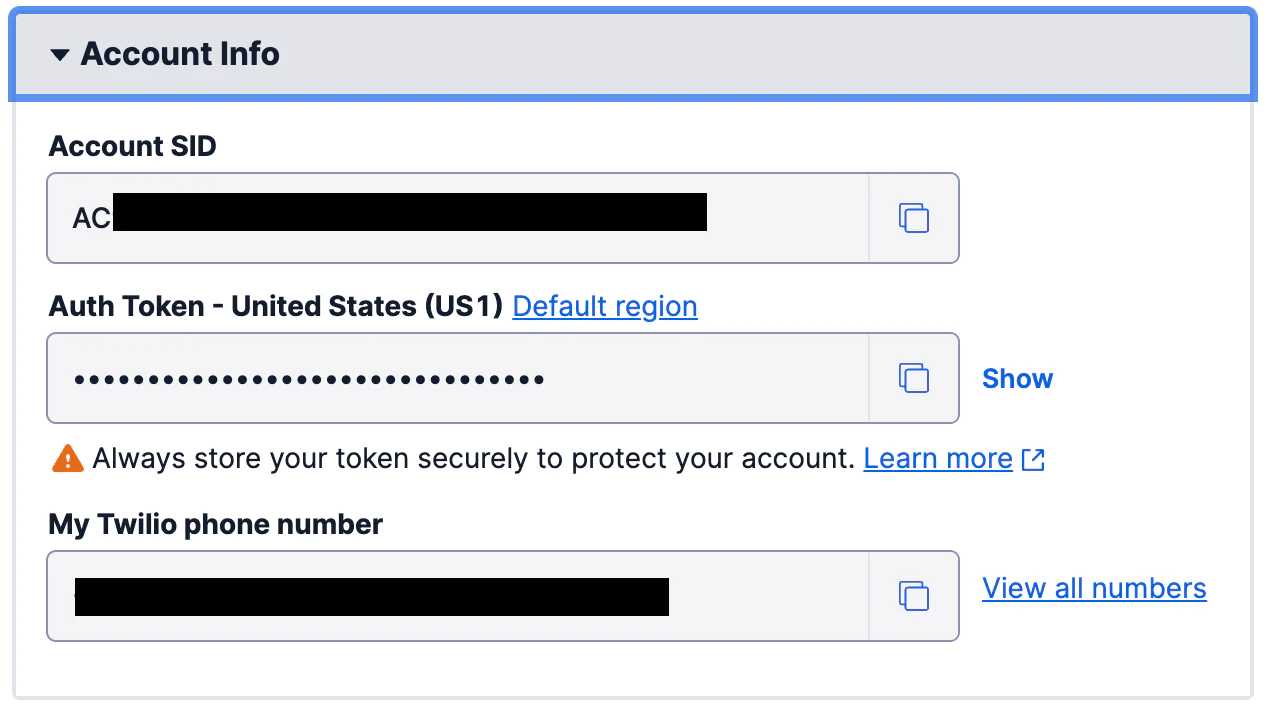

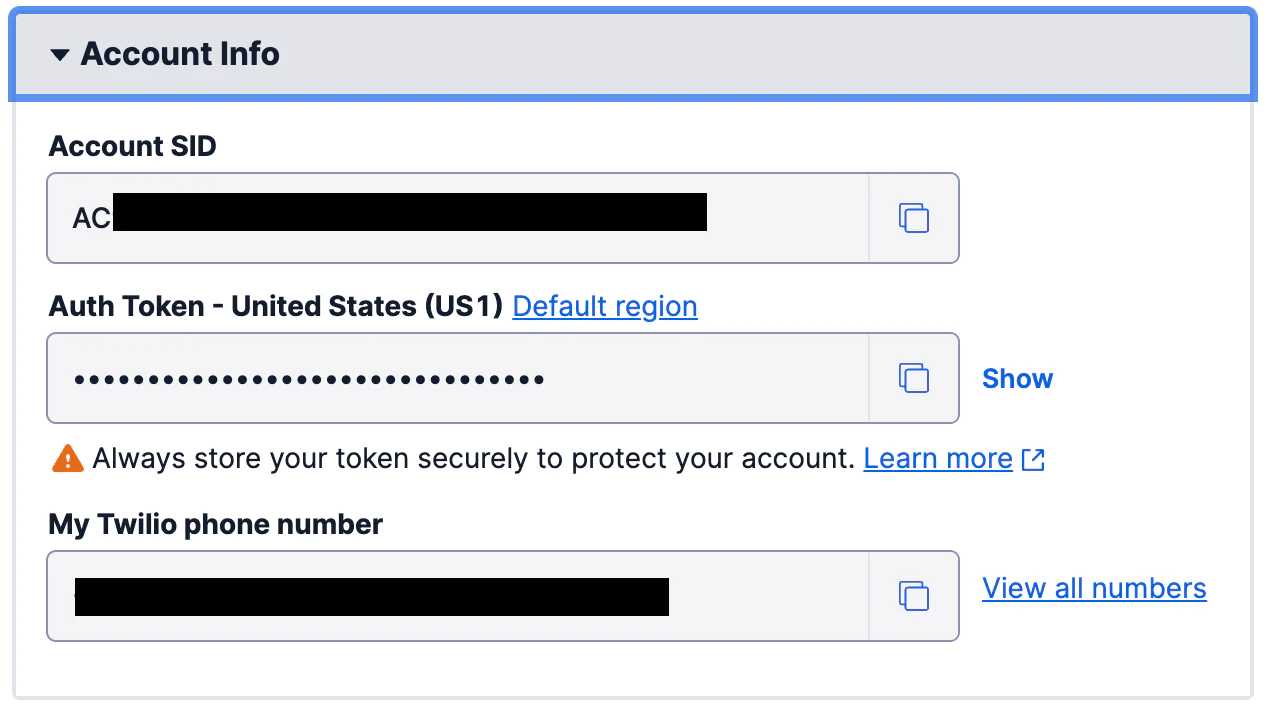

In your Twilio console, find your Account SID and Auth Token. In the next step, you will set these as environment variables.

You will use dotenv to read and set the environment variables and twilio to create the Twilio client. Install these two packages in your virtual environment with the following:

To use the environment variables, add the Twilio credentials to the .env file like so, replacing the placeholder text with your values from the Console:

Now, the app is ready to use Twilio capabilities to create the chatbot through WhatsApp.

Adding the Chatbot Logic

Preparing the Tools

Create a new file called tools.py and put the following imports:

Then define four tools that the AI agent should know. Here is the first tool to list all available products in the inventory:

And here is the second one to be more specific and get details about a certain product:

And here is the third one to place an actual order and update the database accordingly:

And here is the last one to check if a certain product is in stock or out of stock:

Next, you’re going to use these tools in the agent.

Preparing the Agent

After defining the tools, we need to use them and make the LLM decide which tool to use:

Import the OpenAI API key:

after defining the environment variable for it in the .env file:

Define the state of LangGraph:

and get an instance of the LLM with the following function:

Create the system prompt with the following function:

Define the first node in the graph:

Create the second node (the tools executor):

Create a function to be used in the conditional edge:

Create the graph:

And finally, instantiate it:

This is the instance you’ll import into the main.py script.

Putting Things Together

Now you can use the inventory agent instance in the main Flask script. Here are the updated imports at the top of the file:

Now, in main.py, below the index() endpoint, add the following logic:

Don’t forget to define your phone numbers in the .env file:

And finally, you can create the reply() view method:

The send_message() function takes the text message that you want to send from the Twilio WhatsApp sandbox account to your phone number. Change the placeholder text in the to option value to your phone number in E.164 format (country code followed by your phone number).
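A sketch of how such a helper might call the Twilio client. The signature shown here, the whatsapp_address helper, and the environment variable names TWILIO_ACCOUNT_SID and TWILIO_AUTH_TOKEN are assumptions; the client is passed in as a parameter so the function can be exercised without real credentials.

```python
import os


def whatsapp_address(number: str) -> str:
    """Twilio expects WhatsApp numbers in the form 'whatsapp:+<E.164 number>'."""
    return number if number.startswith("whatsapp:") else f"whatsapp:{number}"


def make_client():
    """Create the Twilio client from environment variables (names are assumptions)."""
    from twilio.rest import Client  # imported lazily so the sketch loads without twilio

    return Client(os.environ["TWILIO_ACCOUNT_SID"], os.environ["TWILIO_AUTH_TOKEN"])


def send_message(client, to_number: str, body_text: str, from_number: str) -> str:
    """Send a WhatsApp message through Twilio and return the message SID."""
    message = client.messages.create(
        from_=whatsapp_address(from_number),
        body=body_text,
        to=whatsapp_address(to_number),
    )
    return message.sid
```

In main.py you would call make_client() once at startup and reuse the client for every outgoing message, with from_number set to the sandbox number shown in your Console.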

The /message endpoint receives POST requests from the Twilio webhook, handles the incoming WhatsApp messages, and processes them through a LangGraph-based inventory agent.

Here's a breakdown of how it works:

- Request handling:

- The method is a Flask route handler for POST requests to the "/message" endpoint

- It extracts the message body and the sender's phone number from the Twilio webhook request

- Session management:

- Uses the user's phone number as a unique session identifier

- Considers the body as the message without any processing

- Agent invocation:

- Creates an initial state with the user's message wrapped in a HumanMessage object

- Invokes the LangGraph agent with this state and configures it to use the session ID as the thread ID, which the MemorySaver uses

- This allows the agent to maintain conversation history for each unique user

- Response extraction:

- Searches through the agent's response messages in reverse order

- Finds the last AIMessage with content, which represents the agent's final response

- Response delivery:

- Sends the agent's response back to the user via WhatsApp using the send_message function

- Returns a JSON response indicating success

- Error handling:

- Catches any exceptions that occur during processing

- Sends a generic error message to the user

- Logs the actual error for debugging

- Returns a JSON response indicating an error occurred

This method effectively creates a bridge between the WhatsApp messaging platform and the LangGraph-powered inventory agent, maintaining separate conversation sessions for each user based on their phone number.

Testing the Final App

Earlier, you defined two products in the inventory:

- 2 collapsible umbrellas

- 1 hybrid smartwatch

When you first send a greeting to the app, it replies with a friendly welcome: “Hello! How can I assist you today?”

If you ask, “What products do you have?”, the chatbot lists the available inventory. Asking, “How many collapsible umbrellas are in stock?”, gets the correct reply of 2 umbrellas. On the other hand, if you try something outside the catalog, like “Do you have Rolex watches?”, the chatbot apologizes and explains that the item isn’t available.

For valid items, the chatbot goes a step further. If you ask about hybrid smartwatches, it replies that there is 1 in stock and asks whether you’d like to order it. Confirming with “Yes please” places the order and removes the item from inventory. If you then try to order another one, the chatbot apologizes and informs you there are none left.

This flow shows how the chatbot manages conversations: it can greet users, share product availability, confirm orders, and gracefully handle requests for out-of-stock or unknown items. You can continue experimenting with your own questions to explore just how flexible the system is.

Finally, if you’re in a production environment you’ve got to take care of the following:

- setting up a Twilio phone number instead of using the default Twilio number used for testing

- using the live credentials of Twilio instead of the ones used for testing

- hosting on a VPS instead of tunneling with Ngrok

- setting debug to False in the Flask main app

Ezz is a data platform engineer with expertise in building AI-powered chatbots. He has helped clients across a range of industries, including nutrition, to develop customized software solutions. Check out his website for more.
