Perform a Warm Transfer to a Human Agent from the OpenAI Realtime API using Twilio Programmable SIP and TypeScript

We’re so excited for our friends at OpenAI, who just released their Realtime API to general availability. Their multilingual and multimodal gpt-realtime model unlocks the ability to directly analyze a caller’s pitch and tone to better understand their sentiment and intent.

With their GA, OpenAI has also released a new SIP connector to connect to the gpt-realtime model via SIP. My colleague Paul has a tutorial on how you can get started with the OpenAI Realtime SIP Connector using Twilio’s Elastic SIP Trunking. In this tutorial, I will show how to use Twilio Programmable SIP to dynamically manage the call, unlocking the ability to perform a warm transfer to a human agent.

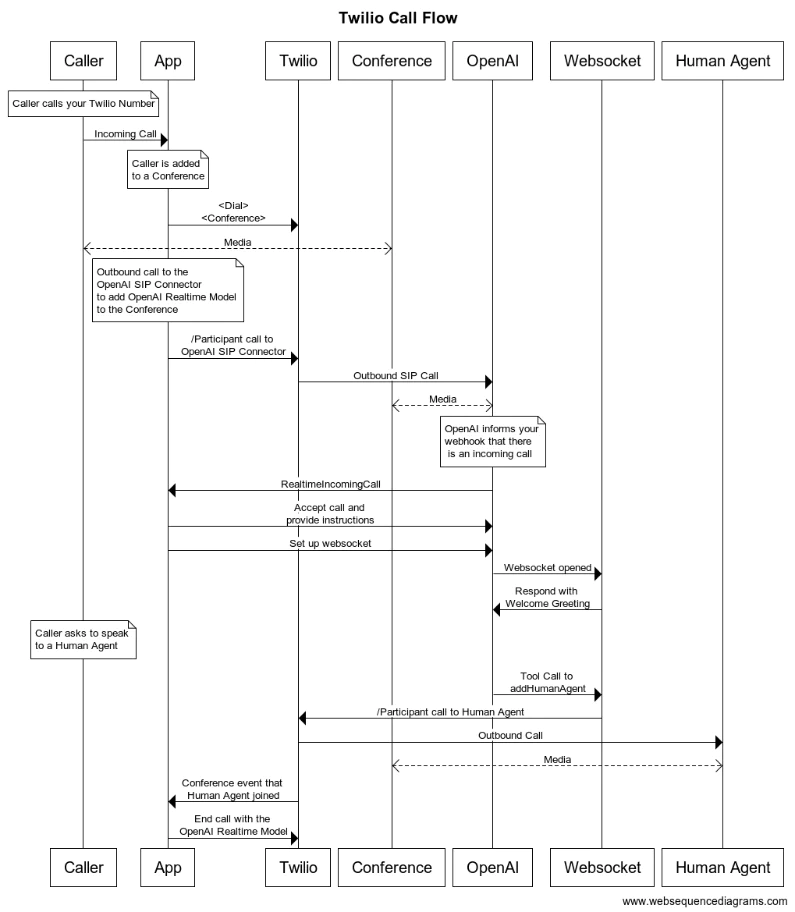

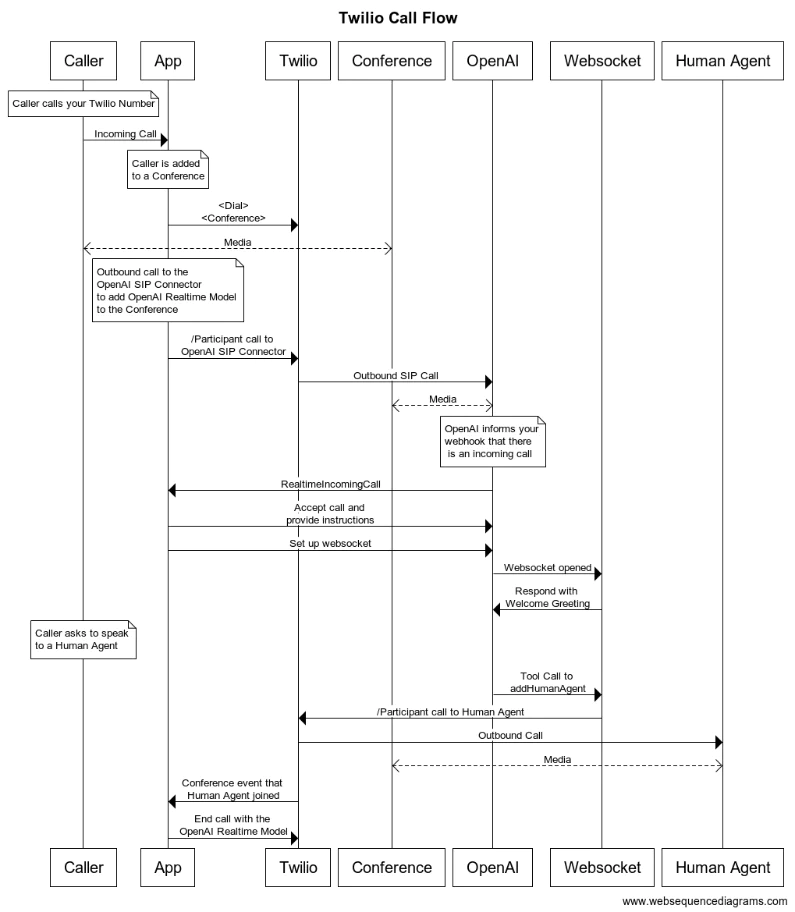

Architecture for SIP human escalation with the OpenAI Realtime API and Twilio

In this tutorial, you’ll be building the following architecture in order to perform a warm transfer from the virtual agent – powered by the OpenAI gpt-realtime model – to a human agent.

Prerequisites

Before you can build this demo with OpenAI’s Realtime API, you need to check off a few prerequisites first.

- An upgraded Twilio account with a Primary Business Profile

- A Twilio number with Voice capabilities

- An OpenAI API key on a premium plan or with available credits

- Access to the OpenAI Realtime API

- Node.js and TypeScript (I used Node.js version 22.15.0)

- A tunneling solution like ngrok

Get started with OpenAI and Ngrok

In order to connect to the OpenAI gpt-realtime model via Twilio Programmable SIP, you’ll first create a webhook with OpenAI to direct SIP calls to. When OpenAI receives an incoming call to your OpenAI project, it will send a realtime.call.incoming event to the webhook. You’ll reply with the instructions for the session: in our example, the prompt instructions, voice, and available tools. You’ll also set up a WebSocket connection so you can exchange messages with the session, including receiving tool execution requests from the model.

If you have already completed my teammate Paul’s tutorial on how to Connect the OpenAI Realtime SIP Connector with Twilio Elastic SIP Trunking, you can reuse the webhook you’ve set up within OpenAI’s console and will just have to make some adjustments to your app. If you’re starting fresh, no sweat: we’ll step through it here.

Follow Paul’s Set up a static domain with Ngrok and the Get started with SIP and OpenAI Realtime steps, but come back before you set up the Elastic SIP Trunk.

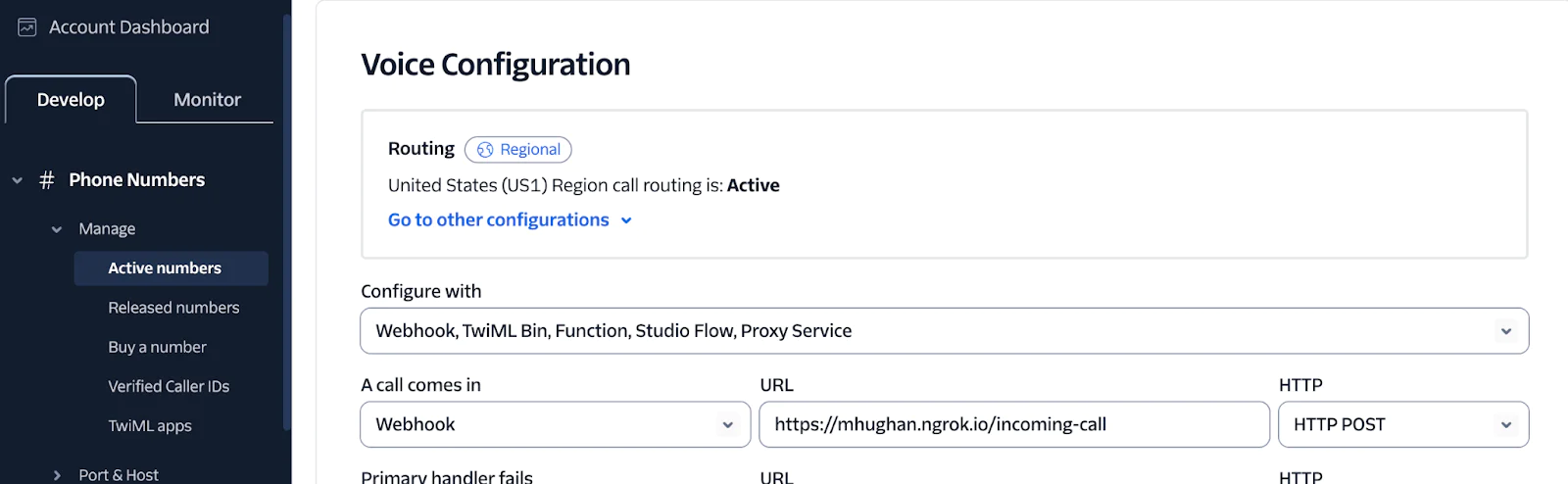

Configure your Twilio Number

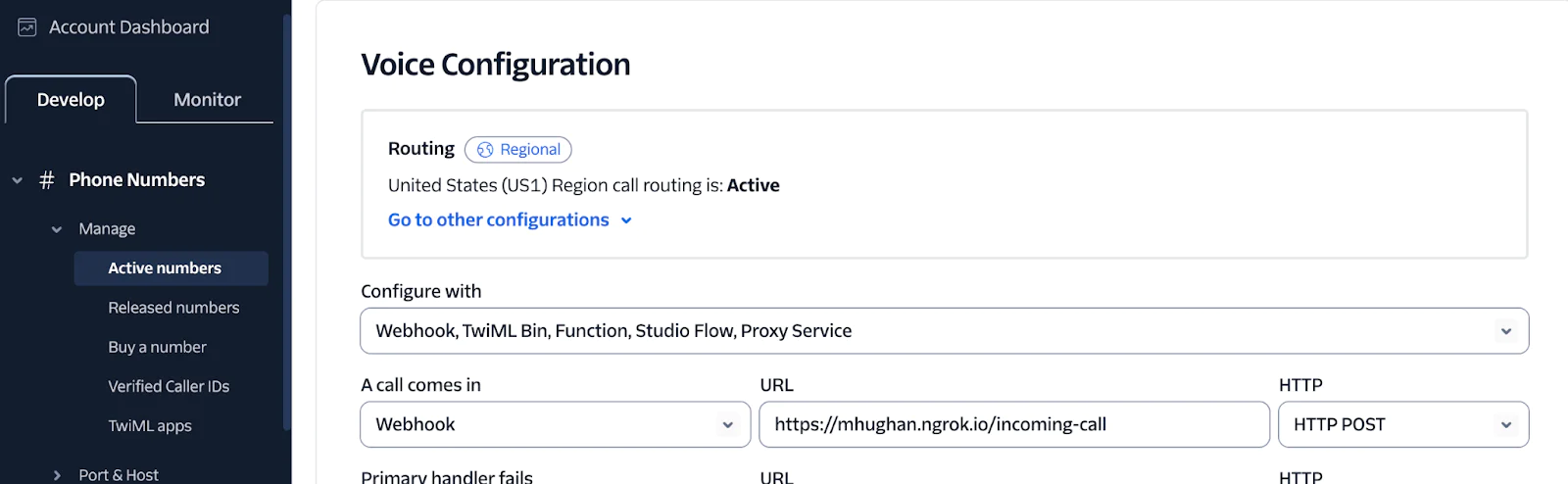

First, configure your Twilio number to fetch instructions for incoming calls from the /incoming-call webhook, which you will set up later on. Build this URL with the domain you created in the Set up a static domain with Ngrok step.

You can add this URL either via the Console or the IncomingPhoneNumber API resource.

From the Console,

- Access the Active Numbers page in Console.

- Click the desired phone number to modify.

- Scroll to the Voice Configuration section.

- Under A call comes in, add the URL “https://{DOMAIN}/incoming-call”, using the domain you created previously.
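You can also script this step instead of clicking through the Console. The sketch below shows the IncomingPhoneNumber route and makes a few assumptions:

- It assumes the official twilio Node helper library, where client.incomingPhoneNumbers(sid).update({ voiceUrl, voiceMethod }) updates a number.
- NumberUpdater is a hypothetical minimal interface standing in for that client, so the logic can run without network access.
- The phone number SID in the usage note is a placeholder.

```typescript
// Hypothetical minimal slice of the twilio-node client used here.
// The real client exposes client.incomingPhoneNumbers(sid).update(params).
interface NumberUpdater {
  incomingPhoneNumbers(sid: string): {
    update(params: { voiceUrl: string; voiceMethod: string }): Promise<unknown>;
  };
}

// Build the voice webhook URL from the ngrok domain, tolerating a stray
// scheme or trailing slash in the input.
function incomingCallUrl(domain: string): string {
  const host = domain.replace(/^https?:\/\//, "").replace(/\/+$/, "");
  return `https://${host}/incoming-call`;
}

// Point the number's "A call comes in" webhook at the app.
async function pointNumberAtApp(
  client: NumberUpdater,
  phoneNumberSid: string,
  domain: string,
): Promise<string> {
  const voiceUrl = incomingCallUrl(domain);
  await client.incomingPhoneNumbers(phoneNumberSid).update({ voiceUrl, voiceMethod: "POST" });
  return voiceUrl;
}
```

With the real library you would pass the client created by twilio(accountSid, authToken) and your number's SID (something like "PNxxxxxxxx"). You only need to run this once at setup time.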

Build the App

This section handles adding an incoming call to a Twilio Conference while the caller waits to speak to the virtual agent.

Conferences add a host of orchestration capabilities such as putting users on hold and muting, but most importantly for this use case, they let you keep the user engaged with the virtual agent until a human agent has accepted the call to join the conference. This workflow also ensures the caller is never disconnected – no dropped calls in our demo!

Set up the code base

Open up a terminal and run the following commands:

This initializes the Node.js/TypeScript project and installs the production and development dependencies. It also creates:

- a src directory and a dist build directory
- an .env file to hold all your keys
- a tsconfig.json file that tells the TypeScript compiler how to compile your code

Next, let’s set up some environment variables.

Set up Environment Variables

Open up the .env file in your favorite text editor or IDE. Then, follow these steps to configure your app:

- Enter your OpenAI project API Key

- Enter the OpenAI Webhook secret (which you copied earlier when setting up the webhook)

- Paste your OpenAI project ID. You can find this in the URL when viewing your OpenAI project in the OpenAI platform, or by going to Settings > Project > General on the left hand side.

- Set a PORT so your server knows where to listen.

- Also add the domain you created in the previous step, without the scheme (the ‘https://’), for example mhughan.ngrok.io.

- Next, add your Twilio credentials in TWILIO_ACCOUNT_SID and TWILIO_AUTH_TOKEN. You can find these in your Twilio Console.

- Finally, enter the number for the human agent you want to transfer the call to, in E.164 format.

If you don’t have an additional phone to place the call and accept a second call, you can always use a second Twilio number with a voice URL that executes TwiML to simulate greeting the user for testing. (More on that later.)
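Before the server starts, it can validate these values in one place. The sketch below checks each variable from the list above and fails fast if something is missing.

Only PORT, TWILIO_ACCOUNT_SID, and TWILIO_AUTH_TOKEN are named in this tutorial. The other variable names (OPENAI_API_KEY, OPENAI_WEBHOOK_SECRET, OPENAI_PROJECT_ID, DOMAIN, HUMAN_AGENT_NUMBER) are hypothetical, so rename them to match your .env file.

```typescript
// Names other than PORT, TWILIO_ACCOUNT_SID, and TWILIO_AUTH_TOKEN are
// hypothetical; align them with your own .env file.
interface AppConfig {
  openAiApiKey: string;
  openAiWebhookSecret: string;
  openAiProjectId: string;
  port: number;
  domain: string; // host only, e.g. "mhughan.ngrok.io"
  twilioAccountSid: string;
  twilioAuthToken: string;
  humanAgentNumber: string; // E.164, e.g. "+15555550100"
}

// E.164: a leading "+", then up to 15 digits, the first of which is non-zero.
const E164 = /^\+[1-9]\d{1,14}$/;

function loadConfig(env: Record<string, string | undefined>): AppConfig {
  const get = (name: string): string => {
    const value = env[name];
    if (!value) throw new Error(`Missing environment variable ${name}`);
    return value;
  };

  const domain = get("DOMAIN");
  if (/^https?:\/\//.test(domain)) throw new Error("DOMAIN must not include the scheme");

  const humanAgentNumber = get("HUMAN_AGENT_NUMBER");
  if (!E164.test(humanAgentNumber)) throw new Error("HUMAN_AGENT_NUMBER must be in E.164 format");

  const port = Number(get("PORT"));
  if (!Number.isInteger(port) || port <= 0) throw new Error("PORT must be a positive integer");

  return {
    openAiApiKey: get("OPENAI_API_KEY"),
    openAiWebhookSecret: get("OPENAI_WEBHOOK_SECRET"),
    openAiProjectId: get("OPENAI_PROJECT_ID"),
    port,
    domain,
    twilioAccountSid: get("TWILIO_ACCOUNT_SID"),
    twilioAuthToken: get("TWILIO_AUTH_TOKEN"),
    humanAgentNumber,
  };
}
```

In the app you would call loadConfig(process.env) after loading the .env file.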

Set up tsconfig.json

Next, enter the below into the tsconfig.json file. This sets various TypeScript compiler options, for example what flavor of JavaScript/TypeScript you are working with, where your TypeScript files live, and where the compiled build files should go:

Next, make sure the below text is included in the package.json file.

I have no unit tests for this tutorial, but you may want to add some to yours (and you certainly will before you take an application to production!).

The dev command uses tsx to run your code in watch mode, with the nice upside of reloading when you make changes. The build command compiles the TypeScript into JavaScript in the dist directory, and start tells node to run the compiled entry point, dist/index.js.

Lay the groundwork for the server

Nice work, now we’re cooking with gas. Next, I’ll help you write a basic Express server that can receive OpenAI webhooks, verify them, and then talk back to the Realtime API. To start, the app pulls in your environment variables and creates one client for OpenAI and one for Twilio. The Express app uses a raw body parser because OpenAI’s signature verification needs the exact request bytes, untouched by JSON parsing.
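To see why the raw body matters, it helps to look at what signature verification computes. The sketch below assumes OpenAI follows the Standard Webhooks scheme:

- The headers are webhook-id, webhook-timestamp, and webhook-signature.
- The secret is base64-encoded, optionally prefixed with "whsec_".
- The signature is an HMAC-SHA256 over "id.timestamp.body".

Check those details against OpenAI's webhook documentation. This is an illustration of the technique, not the SDK's implementation.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Standard Webhooks-style check (assumed to match OpenAI's scheme).
// The HMAC covers the raw body string, so any re-serialization of parsed
// JSON (different spacing or key order) would break verification.
function verifyWebhookSignature(
  rawBody: string,
  headers: Record<string, string | undefined>,
  secret: string,
  toleranceSeconds = 300,
  nowSeconds = Math.floor(Date.now() / 1000),
): boolean {
  const id = headers["webhook-id"];
  const timestamp = headers["webhook-timestamp"];
  const signatureHeader = headers["webhook-signature"];
  if (!id || !timestamp || !signatureHeader) return false;

  // Reject stale or future-dated deliveries to limit replay attacks.
  const ts = Number(timestamp);
  if (!Number.isFinite(ts) || Math.abs(nowSeconds - ts) > toleranceSeconds) return false;

  // The signing key is the base64 payload after the optional "whsec_" prefix.
  const key = Buffer.from(secret.replace(/^whsec_/, ""), "base64");
  const expected = createHmac("sha256", key).update(`${id}.${timestamp}.${rawBody}`).digest();

  // The header may carry several space-separated "v1,<base64>" signatures.
  return signatureHeader.split(" ").some((entry) => {
    const [version, sig] = entry.split(",");
    if (version !== "v1" || !sig) return false;
    const provided = Buffer.from(sig, "base64");
    // timingSafeEqual requires equal lengths, so check that first.
    return provided.length === expected.length && timingSafeEqual(provided, expected);
  });
}
```

With Express, express.raw({ type: "application/json" }) leaves req.body as a Buffer, so you would pass req.body.toString("utf8") as rawBody. The official openai package also offers a webhooks.unwrap() helper that performs this kind of check and parses the event. If your SDK version has it, prefer it over hand-rolled code.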

To your src/index.ts file, add the following.

Next, add a variable to map the unique OpenAI call_id for each call to the Twilio Conference, so you can add and remove participants from the call when you get tool execution requests from OpenAI.

You’ll also add two variables to map the conference name to the caller ID (From number) of the user who called in and the authentication callToken for that incoming call. Saving the callToken lets you reuse that caller’s From number for the outbound call to the human agent. This is nice because if for any reason the call drops (not that it should!), the agent can call back the user.
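These mappings amount to a small in-memory store. The sketch below is one way to shape it. It folds the two conference-keyed maps (From number and callToken) into a single map of objects, and the helper names are hypothetical.

Like any in-memory state, it is lost on restart and is not shared across processes.

```typescript
// What we keep about each inbound caller, keyed by conference name.
interface CallerInfo {
  from: string;      // caller ID (From) of the inbound call
  callToken: string; // Twilio CallToken from the inbound call webhook
}

// OpenAI call_id -> conference name (the inbound CallSid).
const callIdToConference = new Map<string, string>();
// Conference name -> caller details needed to dial the human agent.
const conferenceToCaller = new Map<string, CallerInfo>();

function rememberCaller(conferenceName: string, info: CallerInfo): void {
  conferenceToCaller.set(conferenceName, info);
}

function linkOpenAiCall(openAiCallId: string, conferenceName: string): void {
  callIdToConference.set(openAiCallId, conferenceName);
}

// Resolve everything needed for the transfer from an OpenAI call_id.
function lookupByOpenAiCall(
  openAiCallId: string,
): { conferenceName: string; caller: CallerInfo } | undefined {
  const conferenceName = callIdToConference.get(openAiCallId);
  if (!conferenceName) return undefined;
  const caller = conferenceToCaller.get(conferenceName);
  return caller ? { conferenceName, caller } : undefined;
}

// Drop all state for a conference once it ends, so the maps don't grow forever.
function forgetConference(conferenceName: string): void {
  conferenceToCaller.delete(conferenceName);
  callIdToConference.forEach((name, id) => {
    if (name === conferenceName) callIdToConference.delete(id);
  });
}
```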

Instruct the GPT Realtime model

Here you’ll define how you want the model to greet the user, along with the prompt instructions and the tools available to the model. For this use case, you will define an addHumanAgent function that the model can call when the user asks to speak to a real person.
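For orientation, here is a sketch of what that session configuration might look like.

- The field layout (type, model, instructions, audio.output.voice, tools) follows the GA Realtime session shape as I understand it, so verify it against OpenAI's current API reference.
- Realtime function tools are declared flat, with name and parameters at the top level.
- The instructions text and the voice are placeholders.

```typescript
// Tool the model can call when the caller asks for a person.
// No arguments are needed: the app already knows which call is asking.
const addHumanAgentTool = {
  type: "function",
  name: "addHumanAgent",
  description: "Transfer the caller to a human agent. Call this when the user asks to speak to a real person.",
  parameters: { type: "object", properties: {}, required: [] as string[] },
};

// Placeholder session config sent when accepting the call (shape assumed; see lead-in).
const sessionConfig = {
  type: "realtime",
  model: "gpt-realtime",
  instructions:
    "You are a friendly phone assistant. Greet the caller warmly and help with their questions. " +
    "If they ask to speak to a real person, call the addHumanAgent tool and tell them you are connecting them.",
  audio: { output: { voice: "alloy" } }, // any supported voice
  tools: [addHumanAgentTool],
};
```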

Receive incoming calls to your Twilio Number and orchestrate the conference

Next, create the webhook to receive incoming calls to your Twilio number.

When a user calls in, your app will make a call out to your OpenAI project’s SIP connector to add the virtual agent to the conference, powered by the Realtime API. Your app will then add the caller to that same conference.

You name the conference with the CallSid of the incoming call, so the name is unique even when multiple users call your application simultaneously. You will also pass the conference name as a custom SIP header to OpenAI. When OpenAI sends that header back in the webhook request for the incoming call, you can map the OpenAI call_id to the conference.
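The pieces of this webhook can be sketched as pure helpers. They build:

- the SIP URI for your OpenAI project, with the conference name attached as a custom header;
- the TwiML that drops the caller into the conference;
- the Participant parameters used to dial the virtual agent in.

A few assumptions apply:

- The address format sip:PROJECT_ID@sip.api.openai.com;transport=tls follows OpenAI's SIP guide.
- Twilio passes custom headers appended to a SIP URI as query-style parameters whose names start with "X-".
- The header name X-Conference-Name and the /conference-status route are hypothetical.

```typescript
// Hypothetical custom header name; Twilio custom SIP headers must start with "X-".
const CONFERENCE_HEADER = "X-Conference-Name";

// SIP URI for the OpenAI project, carrying the conference name as a header.
function openAiSipUri(projectId: string, conferenceName: string): string {
  return `sip:${projectId}@sip.api.openai.com;transport=tls?${CONFERENCE_HEADER}=${encodeURIComponent(conferenceName)}`;
}

// Escape text placed inside TwiML elements.
function escapeXml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// TwiML returned from /incoming-call: join the caller to the conference
// named after their CallSid.
function callerTwiml(conferenceName: string): string {
  return `<?xml version="1.0" encoding="UTF-8"?><Response><Dial><Conference>${escapeXml(conferenceName)}</Conference></Dial></Response>`;
}

// Parameters for dialing the virtual agent into the same conference, e.g.
// twilioClient.conferences(conferenceName).participants.create(params).
function virtualAgentParticipant(from: string, projectId: string, conferenceName: string, domain: string) {
  return {
    from,
    to: openAiSipUri(projectId, conferenceName),
    // Notify the app whenever a participant joins (hypothetical route).
    conferenceStatusCallback: `https://${domain}/conference-status`,
    conferenceStatusCallbackEvent: ["join"],
  };
}
```

Dialing OpenAI through the Participants API and returning TwiML for the caller means both legs land in the same conference. Using the CallSid as the name keeps concurrent calls apart without any extra bookkeeping.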

In the previous step, you added a conferenceStatusCallback to the conference so you would be notified in cases where a Participant joins the conference. When that webhook is called, you will check whether the Participant that joined was a “human agent” and if so, end the call with the virtual agent so the user can speak directly with the agent without interruption.
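The check itself is small. This sketch reads a subset of the form fields Twilio posts to conference status callbacks (StatusCallbackEvent, ParticipantLabel, FriendlyName, ConferenceSid). It decides whether the virtual agent should now be removed, and returns undefined otherwise. The function name is hypothetical.

```typescript
// Subset of the fields Twilio posts to a conference status callback.
interface ConferenceStatusEvent {
  StatusCallbackEvent?: string; // e.g. "participant-join"
  ParticipantLabel?: string;    // label set when the participant was created
  FriendlyName?: string;        // conference name (the inbound CallSid here)
  ConferenceSid?: string;
}

const HUMAN_AGENT_LABEL = "human agent";

// Returns the conference to hand off when the human agent has joined.
function conferenceToHandOff(
  event: ConferenceStatusEvent,
): { conferenceName: string; conferenceSid: string } | undefined {
  if (event.StatusCallbackEvent !== "participant-join") return undefined;
  if (event.ParticipantLabel !== HUMAN_AGENT_LABEL) return undefined;
  if (!event.FriendlyName || !event.ConferenceSid) return undefined;
  return { conferenceName: event.FriendlyName, conferenceSid: event.ConferenceSid };
}
```

To actually drop the virtual agent, you need a handle on its leg. One option is the callSid of the Participant you created for the SIP call, which twilio-node can remove with client.conferences(conferenceSid).participants(callSid).remove(). Ending the session from the OpenAI side is another.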

Receive incoming calls to the OpenAI SIP Connector

As we discussed above, when your app sends the SIP call to the OpenAI SIP connector, you will get a webhook request from OpenAI indicating they received an incoming call. Here, you will set up the app to receive that webhook request and respond with the model instructions defined previously.

You grab the call_id from the request and the conference name from the custom SIP header and add that to the mapping for later. You also accept the call from OpenAI and set up a WebSocket so you can receive and send data to the session.
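As a rough sketch, the handler boils down to two steps:

1. Pull the call_id and your custom header out of the event.
2. Build the accept request.

This assumes the realtime.call.incoming payload carries data.call_id and data.sip_headers as a list of { name, value } pairs, and that calls are accepted with a POST to /v1/realtime/calls/{call_id}/accept. Confirm both against OpenAI's SIP guide. The header name is the same hypothetical X-Conference-Name used when dialing.

```typescript
interface SipHeader { name: string; value: string; }

// Assumed shape of the realtime.call.incoming webhook event.
interface IncomingCallEvent {
  type: string;
  data?: { call_id?: string; sip_headers?: SipHeader[] };
}

// Extract the OpenAI call_id and the conference name sent as a custom SIP header.
function parseIncomingCall(event: IncomingCallEvent, headerName = "X-Conference-Name") {
  if (event.type !== "realtime.call.incoming") return undefined;
  const callId = event.data?.call_id;
  // SIP header names are case-insensitive, so compare them that way.
  const header = event.data?.sip_headers?.find(
    (h) => h.name.toLowerCase() === headerName.toLowerCase(),
  );
  if (!callId || !header) return undefined;
  return { callId, conferenceName: header.value };
}

// Build (but don't send) the request that accepts the call with our session config.
function acceptCallRequest(callId: string, apiKey: string, session: object) {
  return {
    url: `https://api.openai.com/v1/realtime/calls/${encodeURIComponent(callId)}/accept`,
    method: "POST",
    headers: { Authorization: `Bearer ${apiKey}`, "Content-Type": "application/json" },
    body: JSON.stringify(session),
  };
}
```

In the handler, you would record the mapping with the parsed callId and conferenceName. You would then send the request with fetch(req.url, { method: req.method, headers: req.headers, body: req.body }) and open the WebSocket once the accept succeeds.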

Manage the WebSocket

The final step (finally!) is to define how to create that WebSocket, handle incoming messages, and execute tool calls. When the user asks to speak to a real person, OpenAI will send a message to the WebSocket with type response.done. Here, you will create a handleFunctionCall function to check if that message was a function_call and further check whether it was a request to execute the addHumanAgent tool.

If the conditions match, you will get the call_id of the overall OpenAI session from the URL (not to be confused with the call_id for this WebSocket message!) and use that call_id to add the human agent.
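The two call_ids come from different places, and the sketch below keeps them apart:

- The session's call_id is in the WebSocket URL, assumed here to be wss://api.openai.com/v1/realtime?call_id=... as in OpenAI's SIP guide.
- The tool call's own call_id is inside a function_call item in the response.done event's response.output array.

The helper names are hypothetical.

```typescript
// Assumed subset of a Realtime server event.
interface RealtimeServerEvent {
  type: string;
  response?: {
    output?: Array<{ type: string; name?: string; call_id?: string; arguments?: string }>;
  };
}

// WebSocket URL for an accepted SIP call (format assumed; see lead-in).
function realtimeSocketUrl(openAiCallId: string): string {
  return `wss://api.openai.com/v1/realtime?call_id=${encodeURIComponent(openAiCallId)}`;
}

// The session-level call_id, recovered from the socket URL.
function sessionCallIdFromUrl(socketUrl: string): string | null {
  return new URL(socketUrl).searchParams.get("call_id");
}

// Returns the tool call's own id if this message requests addHumanAgent.
function findAddHumanAgentCall(raw: string): { toolCallId: string } | undefined {
  let event: RealtimeServerEvent;
  try {
    event = JSON.parse(raw);
  } catch {
    return undefined; // ignore non-JSON frames
  }
  if (event.type !== "response.done") return undefined;
  const item = event.response?.output?.find(
    (o) => o.type === "function_call" && o.name === "addHumanAgent",
  );
  const toolCallId = item ? item.call_id : undefined;
  return toolCallId ? { toolCallId } : undefined;
}
```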

You might recall the variables you created earlier to map the call_id to the conference name and the conference name to the From number and call_token. That was all for this payoff – you can now place an outbound call to the human agent, using the call_token from the inbound call to prove you have the right to use the same From number for this outbound call.

Label this participant as “human agent” so you can properly remove the virtual agent from the conference once you get the status callback that the human agent joined. PHEW!
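Put together, the human agent leg is another Participant request. It reuses the caller's From number and passes the inbound callToken, and the callToken and label fields are Participant parameters in twilio-node.

The second helper is an optional extra not described in this tutorial. It assumes the Realtime conversation.item.create and response.create events, and lets the model acknowledge the tool call so it can tell the caller a person is being connected. Both helper names are hypothetical.

```typescript
// Parameters for dialing the human agent into the conference, e.g.
// twilioClient.conferences(conferenceName).participants.create(params).
function humanAgentParticipant(caller: { from: string; callToken: string }, agentNumber: string) {
  return {
    from: caller.from,           // present the original caller's number to the agent
    to: agentNumber,             // E.164 number of the human agent
    callToken: caller.callToken, // proves the right to reuse that caller ID
    label: "human agent",        // matched later in the conference status callback
  };
}

// Optional (assumed event shapes): report the tool result, then ask for a spoken reply.
function toolResultEvents(toolCallId: string, output: string): Array<Record<string, unknown>> {
  return [
    { type: "conversation.item.create", item: { type: "function_call_output", call_id: toolCallId, output } },
    { type: "response.create" },
  ];
}
```

Each object returned by toolResultEvents would be sent over the WebSocket with ws.send(JSON.stringify(event)).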

Let’s run it!

Sweet, let’s boot back up our ngrok tunnel, then start the server.

In any terminal, execute:

From your project directory, execute:

If all went well, you should see something like this:

Now, give your Twilio number a call. Feel free to chat with the agent for a bit and then make your request to talk to a real person. Following your request, you should find that the human agent is added to the call and the virtual agent is removed. You should now be happily chatting with a real person, not frustrated by extended hold times or archaic IVRs, ideally having already had some of your questions answered by your virtual agent. WIN.

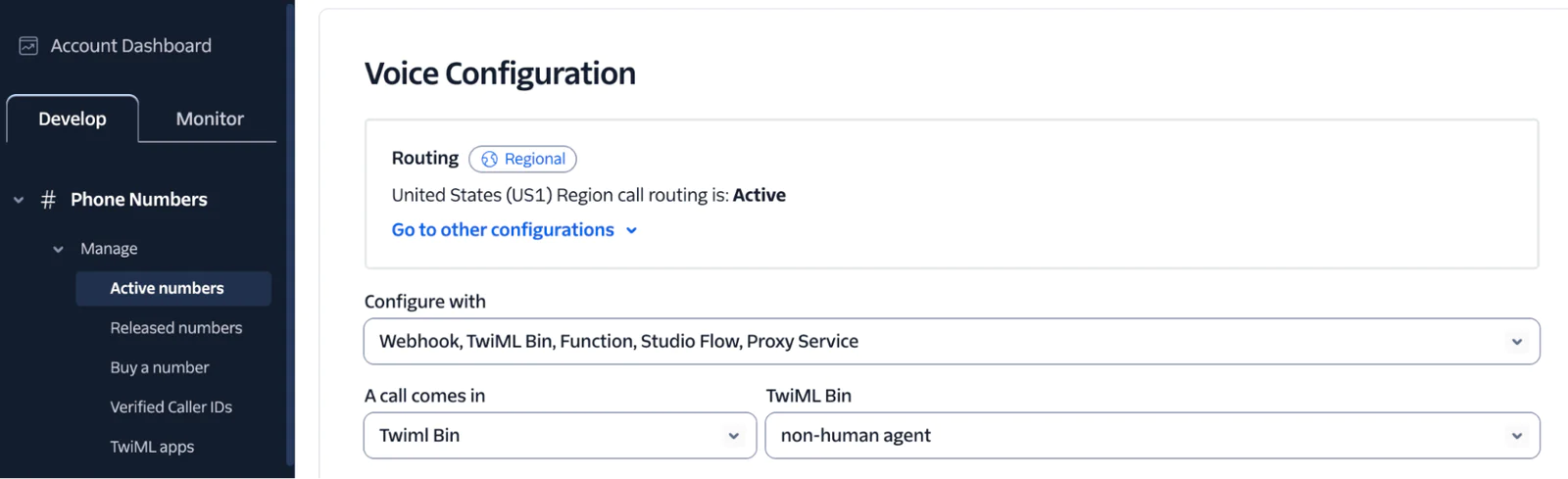

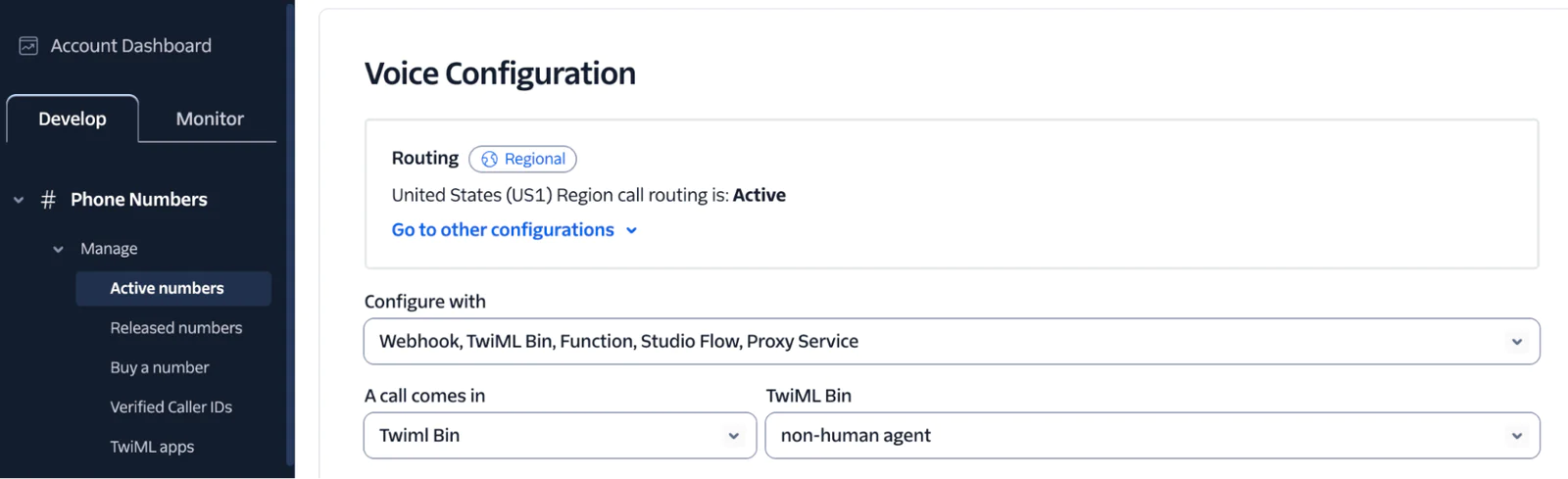

(Optional) Simulate the human agent

If you don’t happen to have two phones to both place and receive calls, you can always purchase a second Twilio number and add a TwiML bin to simulate greeting a user so you can test that the virtual agent is, in fact, removed.

(I realize this does entirely defeat the purpose of warm transferring to a human, but try not to think about that!)

If you take that path, you can just create a TwiML Bin:

1. Navigate to the TwiML Bin section of the Console.

2. Click Create a new TwiML Bin.

3. Give it a friendly name and populate the TwiML with the below code. We’ll add a 5-second pause so you can confirm the virtual agent doesn’t respond to the greeting, which shows it has been removed.

4. Click Create.

5. Update the Voice Configuration for your second number to point to the TwiML Bin.

Conclusion

In this tutorial we walked through how to perform a warm transfer from a virtual agent powered by the OpenAI gpt-realtime model to a human at the user’s request.

By connecting to the OpenAI Realtime API using Twilio Programmable SIP, you can dynamically manage the call, ensuring that your users can be seamlessly moved throughout the conversation.


Margot Hughan is a Product Manager at Twilio and loves working on all things Voice. Her email address is mhughan [at] twilio.com
