Build an AI Voice Assistant with Twilio Voice, the OpenAI Realtime Agents SDK, and Node.js

One of the most powerful ways to interact with an AI agent is to talk to it. With help from Twilio Media Streams, you can connect a Twilio phone number to the OpenAI Realtime API.

The kind folks at OpenAI have made this even easier with the OpenAI Agents SDK for TypeScript. It powers the voice interaction and also makes helpful extensions like tool calling, guardrails, and more possible with a few lines of JavaScript/TypeScript code.

This tutorial will show you how to quickly build an AI agent that can:

- Stream OpenAI Realtime responses over a voice call

- Integrate tool calling for an appointment scheduling bot

- Manage output guardrails like blocking certain words from the agent response

Let's get started!

Prerequisites

To code along with this post, you will need:

- Node.js 22+

- A Twilio account with a Voice-enabled phone number

- An OpenAI API key with Realtime API access

- A public HTTPS/WSS URL (we'll use ngrok for local development)

Project setup

Create a new directory to host the code:

Then initialize a new Node.js project and install the required dependencies:

Fastify is a lightweight web server framework, zod helps with schema validation, and the OpenAI dependencies make interacting with the Realtime API much easier.

Create a .env file and populate it with the following. Make sure to add .env to your .gitignore file if you plan on using source control:
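The contents of the .env file are not reproduced here. Assuming it defines your OpenAI API key under the conventional name OPENAI_API_KEY (an assumption, not confirmed by this post), a fail-fast check at startup might look like the sketch below. The helper name requireEnv is hypothetical.

```javascript
// Read a required setting and fail early with a clear message if it is missing.
// The second argument defaults to process.env so the helper is easy to test.
function requireEnv(name, env = process.env) {
  const value = env[name];
  if (typeof value !== 'string' || value.trim() === '') {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// Hypothetical usage in index.js, after the .env file has been loaded:
//   const apiKey = requireEnv('OPENAI_API_KEY'); // assumed variable name
```

Checking at startup means a missing key shows up as a clear error in your terminal rather than as a failed call later.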

Create a new file named index.js and paste in the following code. This sets up a basic Twilio webhook that uses TwiML, the Twilio Markup Language, to tell the phone number how to respond to an incoming call. After an initial greeting, it opens a WebSocket and connects it to the OpenAI Realtime API. Using Twilio Voice Media Streams, the application then relays audio between the caller and OpenAI.
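As a rough illustration of the TwiML part of that flow, here is a minimal sketch of a function that builds the webhook response: a Say verb for the greeting, followed by Connect and Stream to hand the call audio to a WebSocket. The function names and the /media-stream path are assumptions for illustration, not necessarily what the full index.js uses.

```javascript
// Default greeting, taken from what the caller hears in this tutorial.
const GREETING =
  'Hello, I am a voice assistant powered by Twilio and OpenAI. Ask me anything!';

// Escape characters that are not allowed in XML text or attribute values.
function escapeXml(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// Build the TwiML returned by the webhook: speak a greeting, then stream the
// call audio to a WebSocket on the same host via <Connect><Stream>.
// The "/media-stream" path is an assumed route name.
function buildTwiml(host, greeting = GREETING) {
  const streamUrl = `wss://${host}/media-stream`;
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<Response>',
    `  <Say>${escapeXml(greeting)}</Say>`,
    '  <Connect>',
    `    <Stream url="${escapeXml(streamUrl)}" />`,
    '  </Connect>',
    '</Response>',
  ].join('\n');
}
```

In a Fastify route handler, the result would typically be sent back with a text/xml content type, using the Host header of the incoming request as the host argument.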

Start the server with:

Use ngrok to expose the server publicly so that Twilio can reach it:

Copy the https://<subdomain>.ngrok.io URL to use in the next step.

Connect your Twilio phone number and test

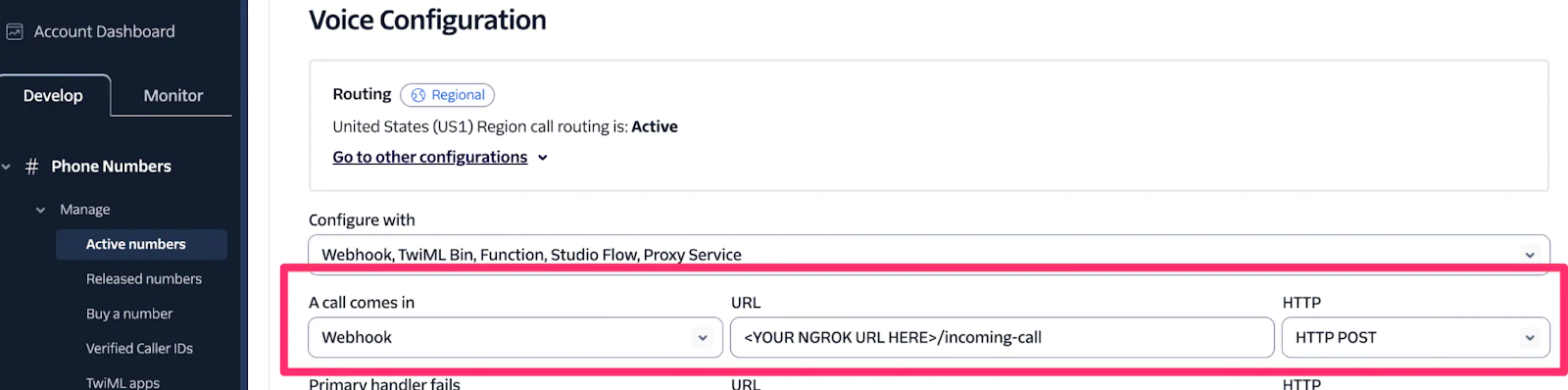

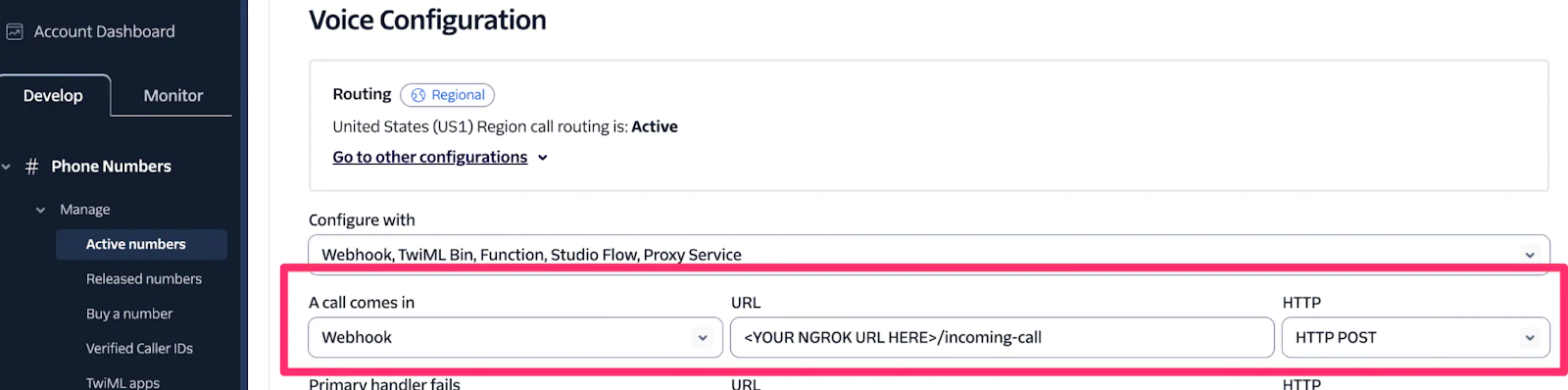

Head over to the Twilio Console to configure your phone number. Select your phone number, then under Voice Configuration > A Call Comes In, add the webhook endpoint:
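The webhook endpoint is your public ngrok URL plus the route your server registers for incoming calls. The sketch below derives it, assuming a route named /incoming-call (a hypothetical path; use whatever your index.js defines).

```javascript
// Build the webhook URL to paste into the Twilio Console from the public
// ngrok URL. The "/incoming-call" default is an assumed route name.
function webhookUrl(publicBaseUrl, path = '/incoming-call') {
  const url = new URL(path, publicBaseUrl);
  // This tutorial assumes a public HTTPS URL, as listed in the prerequisites.
  if (url.protocol !== 'https:') {
    throw new Error('Expected a public HTTPS URL');
  }
  return url.toString();
}
```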

Save the configuration and call your Twilio number. You should hear "Hello, I am a voice assistant powered by Twilio and OpenAI. Ask me anything!" and be able to interact with the agent.

The OpenAI Realtime Agents SDK handles the API session, speech recognition, and audio responses over the Twilio Media Stream.
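You don't need to write any of this yourself, but it can help to see what travels over the Media Stream. Twilio sends JSON text frames over the WebSocket, and frames with a media event carry base64-encoded call audio. The sketch below is purely illustrative; the function name and the shape of the returned objects are assumptions, and the SDK's own handling may differ.

```javascript
// Parse one text frame from a Twilio Media Stream WebSocket.
// "start" announces the stream, "media" carries audio, "stop" ends it.
function parseTwilioMessage(raw) {
  const message = JSON.parse(raw);
  switch (message.event) {
    case 'start':
      return { type: 'start', streamSid: message.start.streamSid };
    case 'media':
      return {
        type: 'media',
        // Decode the base64 payload into raw audio bytes.
        audio: Buffer.from(message.media.payload, 'base64'),
      };
    case 'stop':
      return { type: 'stop' };
    default:
      // Other events (for example "connected" or "mark") are passed through by name.
      return { type: message.event };
  }
}
```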

Extend your agent's capabilities with tool calling

This bot already does a lot, but it's too generic for most real use cases. Tools give your agents more power to take helpful action, and the Agents SDK will automatically decide when to call a tool and pass in the validated inputs.

Next, you'll tailor the application to a veterinary office by adding a new greeting, instructions, and appointment scheduling capabilities. In your index.js, add a tool definition for scheduling appointments. This code uses zod for schema validation, which helps make sure the inputs are structured correctly.

To reflect the new use case, update the welcome greeting to something you might hear when you call a vet's office:

Import zod and add tool to the imports from the realtime SDK:

Then add the tool definition. For this example, the response is hardcoded, but the agent will still ask the caller for their preferred appointment date.
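A minimal sketch of what such a tool could look like is shown below. The function that does the work is kept as plain JavaScript so it can be run on its own, and the SDK wiring is shown in a comment. The tool name, description, and parameter name are assumptions for illustration.

```javascript
// Hardcoded appointment slot, matching the 10am confirmation in this tutorial.
const APPOINTMENT_TIME = '10am';

// The function the tool runs. It is kept separate from the SDK wrapper so it
// can be called and tested without a live session.
function scheduleAppointment({ date }) {
  if (typeof date !== 'string' || date.trim() === '') {
    throw new Error('date is required');
  }
  return `Your appointment is scheduled for ${date.trim()} at ${APPOINTMENT_TIME}.`;
}

// Hypothetical wiring in index.js (name and description are assumptions):
//
//   import { z } from 'zod';
//   import { tool } from '@openai/agents/realtime';
//
//   const scheduleAppointmentTool = tool({
//     name: 'schedule_appointment',
//     description: 'Schedule a veterinary appointment for the caller.',
//     parameters: z.object({ date: z.string() }),
//     execute: scheduleAppointment,
//   });
```

The zod schema describes the inputs the agent must supply, so the function only runs once a date has been collected from the caller.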

Finally, update the agent configuration to add the tool:
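As a sketch, the agent configuration is an object with a name, instructions, and a tools list. The name and instruction text below are illustrative assumptions, and the tool is a plain stand-in so the snippet runs without the SDK installed.

```javascript
// Stand-in for the tool created with the SDK's tool() helper.
// In index.js this would be the real tool object, not a placeholder.
const scheduleAppointmentTool = { name: 'schedule_appointment' };

// Agent configuration: a name, veterinary-office instructions, and the
// list of tools the agent is allowed to call. All strings are examples.
const agentConfig = {
  name: 'Vet Office Assistant',
  instructions:
    'You are a friendly receptionist for a veterinary office. ' +
    'Help callers schedule appointments and confirm the date before booking.',
  tools: [scheduleAppointmentTool],
};

// Hypothetical usage in index.js:
//   const agent = new RealtimeAgent(agentConfig);
```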

Test it out by calling your Twilio phone number and asking "Can I schedule an appointment for Friday?" The agent should confirm the date, call your tool, and let you know the appointment has been scheduled for 10am. Notice that you didn't have to include instructions for how to call the tool; the SDK handles that automatically behind the scenes.

Add output guardrails to your agent

Another neat built-in feature of the Agents SDK is guardrails. An output guardrail trips when the agent's response contains something you've prohibited, whether that's specific words, content, or anything else you wish to define.

Let's add a block list of terms we don't want our agent to talk about, things like "cure" or "discount". Add a guardrails definition in your index.js:

Then pass the guardrails into the RealtimeSession:
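The two steps above, defining the guardrails and passing them to the session, might look like the following sketch. The block list contains "cure" and "discount" from the text, plus "refund" on the assumption that it is also blocked, since the test call below asks for one. The guardrail name and helper function are hypothetical.

```javascript
// Terms the agent should not say. "refund" is an assumed addition.
const BLOCKED_TERMS = ['cure', 'discount', 'refund'];

// Return the blocked terms that appear as whole words in the text.
// Terms are assumed to be plain words with no regex special characters.
function findBlockedTerms(text, terms = BLOCKED_TERMS) {
  const lower = String(text).toLowerCase();
  return terms.filter((term) => new RegExp(`\\b${term}\\b`).test(lower));
}

// Output guardrails: each has a name and an execute function that reports
// whether the tripwire was triggered for the agent's output.
const guardrails = [
  {
    name: 'blocked_terms', // hypothetical name
    async execute({ agentOutput }) {
      const matches = findBlockedTerms(agentOutput);
      return {
        tripwireTriggered: matches.length > 0,
        outputInfo: { matches },
      };
    },
  },
];

// Hypothetical wiring in index.js:
//   const session = new RealtimeSession(agent, {
//     transport,
//     outputGuardrails: guardrails,
//   });
```

Matching on word boundaries avoids tripping on words like "secure", at the cost of missing variants such as plurals. A production block list would likely need something more forgiving.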

Restart the server, then test by calling your Twilio phone number and asking for a refund. The agent will say something like "I'm sorry, but I'm not able to help with that request."

Guardrails are incredibly powerful for building agents safely. This example focuses on keywords to exclude, but you can customize this in many other ways, like using another agent to detect content. Learn more in this example that detects and prevents the agent from doing math.

Next steps for building realtime agents

OpenAI's Agents SDK enables much more, such as handoffs to purpose-built agents, human-in-the-loop interactions, and tracing of agent decisions. Check out the examples to learn more and get inspired.

If you want to build more with Twilio Voice and OpenAI, check out these resources:

Kelley Robinson works on the developer relations team at Twilio and has over 10 years of experience as a software engineer in a variety of API and data engineering roles. Prior to working in software she traded live cattle futures, planned art fairs, established an endowment fund, and designed promotional posters for a regional beer distributor. She has delivered dozens of technical talks to large audiences, including live coding from the NYSE floor. She graduated from the University of Michigan and now lives in Upstate New York with her partner and a pit bull named Fish.

Dominik Kundel works on Developer Experience at OpenAI.
